activemq-artemis-helm

activemq-artemis-helm copied to clipboard

activemq-artemis-helm copied to clipboard

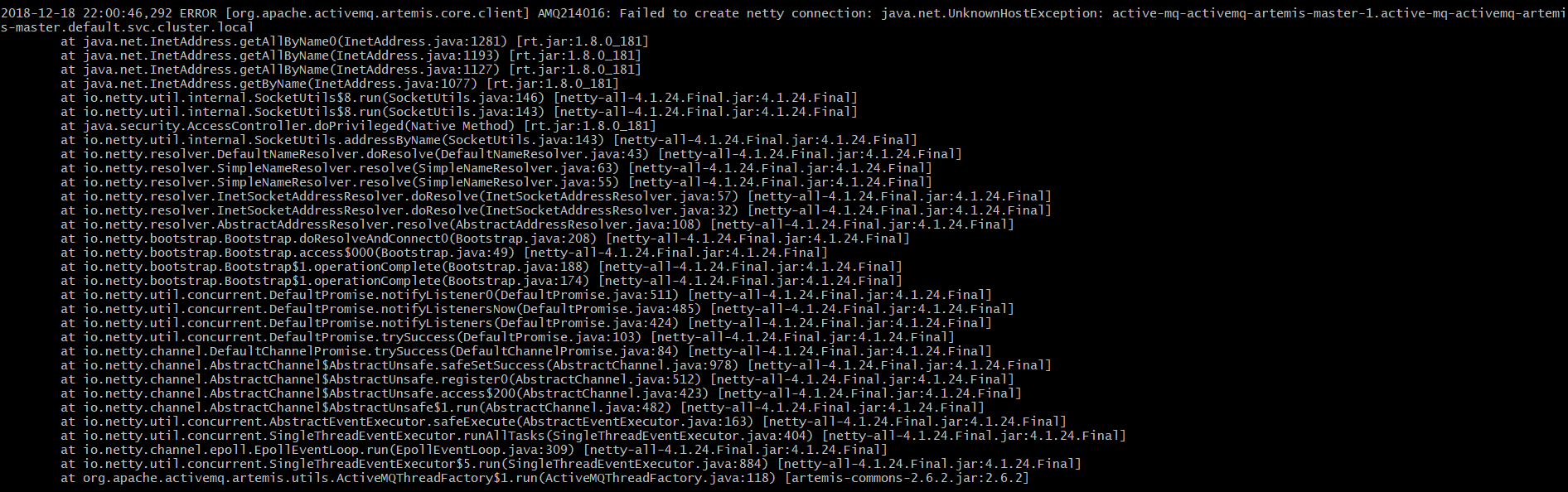

Connection fail

pod/active-mq-activemq-artemis-slave-0 0/1 Running 0 4m pod/active-mq-activemq-artemis-slave-1 0/1 Running 0 4m

Any news regarding this issue ?

On Kubernetes we also have readynesspRoble failed on each slave.

Readiness probe failed: dial tcp 10.X.X.X:61616: connect: connection refused

We are also facing the same issue on kubernetes. Has it worked for anyone so far?

Also having this issue.

@lduparc - For the Readiness Probe failure - this is unfortunately a false-negative on Kubernetes. The charts use the Readiness probe to allow the slaves to be part of the Kubernetes Service group, without assigning them traffic until they become active. So having un-ready slaves is per-design. Once the slaves activate (when a master fails), then they will become 'ready', and Kubernetes will add them to the list of ready endpoints to serve traffic to.

@iankittomar Does this error happen once, or repeated? I sometimes see this once at startup, because the configuration is static, and yet the masters are started in sequence. So the other nodes might try and connect to the master before it's available. Usually this is retried and succeeds.

@DanSalt I've just tried 3 times and I always get on master-0:

2019-07-18 13:03:21,213 ERROR [org.apache.activemq.artemis.core.client] AMQ214016: Failed to create netty connection: java.net.UnknownHostException: amq-activemq-artemis-master-1.amq-activemq-artemis-master.activemq.svc.cluster.local

at java.net.InetAddress.getAllByName0(InetAddress.java:1281) [rt.jar:1.8.0_212]

at java.net.InetAddress.getAllByName(InetAddress.java:1193) [rt.jar:1.8.0_212]

at java.net.InetAddress.getAllByName(InetAddress.java:1127) [rt.jar:1.8.0_212]

at java.net.InetAddress.getByName(InetAddress.java:1077) [rt.jar:1.8.0_212]

at io.netty.util.internal.SocketUtils$8.run(SocketUtils.java:146) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.internal.SocketUtils$8.run(SocketUtils.java:143) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at java.security.AccessController.doPrivileged(Native Method) [rt.jar:1.8.0_212]

at io.netty.util.internal.SocketUtils.addressByName(SocketUtils.java:143) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.resolver.DefaultNameResolver.doResolve(DefaultNameResolver.java:43) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.resolver.SimpleNameResolver.resolve(SimpleNameResolver.java:63) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.resolver.SimpleNameResolver.resolve(SimpleNameResolver.java:55) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.resolver.InetSocketAddressResolver.doResolve(InetSocketAddressResolver.java:57) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.resolver.InetSocketAddressResolver.doResolve(InetSocketAddressResolver.java:32) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.resolver.AbstractAddressResolver.resolve(AbstractAddressResolver.java:108) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.bootstrap.Bootstrap.doResolveAndConnect0(Bootstrap.java:208) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.bootstrap.Bootstrap.access$000(Bootstrap.java:49) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.bootstrap.Bootstrap$1.operationComplete(Bootstrap.java:188) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.bootstrap.Bootstrap$1.operationComplete(Bootstrap.java:174) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.concurrent.DefaultPromise.notifyListener0(DefaultPromise.java:511) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.concurrent.DefaultPromise.notifyListenersNow(DefaultPromise.java:485) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.concurrent.DefaultPromise.notifyListeners(DefaultPromise.java:424) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.concurrent.DefaultPromise.trySuccess(DefaultPromise.java:103) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.channel.DefaultChannelPromise.trySuccess(DefaultChannelPromise.java:84) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.channel.AbstractChannel$AbstractUnsafe.safeSetSuccess(AbstractChannel.java:978) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.channel.AbstractChannel$AbstractUnsafe.register0(AbstractChannel.java:512) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.channel.AbstractChannel$AbstractUnsafe.access$200(AbstractChannel.java:423) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.channel.AbstractChannel$AbstractUnsafe$1.run(AbstractChannel.java:482) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:163) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:404) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:309) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:884) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at org.apache.activemq.artemis.utils.ActiveMQThreadFactory$1.run(ActiveMQThreadFactory.java:118) [artemis-commons-2.6.2.jar:2.6.2]

Also the log of master 0 and 1 is flooded with:

2019-07-18 13:03:52,899 ERROR [org.apache.activemq.artemis.core.server] AMQ224088: Timeout (10 seconds) while handshaking has occurred.

2019-07-18 13:04:02,888 ERROR [org.apache.activemq.artemis.core.server] AMQ224088: Timeout (10 seconds) while handshaking has occurred.

2019-07-18 13:04:12,888 ERROR [org.apache.activemq.artemis.core.server] AMQ224088: Timeout (10 seconds) while handshaking has occurred.

2019-07-18 13:04:22,887 ERROR [org.apache.activemq.artemis.core.server] AMQ224088: Timeout (10 seconds) while handshaking has occurred.

2019-07-18 13:04:32,888 ERROR [org.apache.activemq.artemis.core.server] AMQ224088: Timeout (10 seconds) while handshaking has occurred.

Hi @irizzant,

For the second set of errors (the timeouts) - please see my comment above. That's unfortunately a false negative - the readiness probe making a connection to the socket to check it's alive, and then closing, which causes an SSL error, but doesn't actually do any harm.

For the first one, I would take a look in the K8s dashboard and see what DNS name has been assigned to your masters in the Kubernetes service to check that it matches the name above. If it doesn't, let me know what you have, and I'll compare against the configuration that sets the cluster names.

Hi @DanSalt

from what I see the connection is going to:

amq-activemq-artemis-master-1.amq-activemq-artemis-master.activemq.svc.cluster.local

and it's fine, but I think the log shows the exceptions because master 0 tries to connect to master 1 while master 1 is still not available.

I see the log shows this too:

2019-07-18 16:05:02,224 INFO [org.apache.activemq.artemis.core.server] AMQ221027: Bridge ClusterConnectionBridge@5f27366e [name=$.artemis.internal.sf.amq-activemq-artemis.c6020664-a975-11e9-a48b-0242ac11000c, queue=QueueImpl[name=$.artemis.internal.sf.amq-activemq-artemis.c6020664-a975-11e9-a48b-0242ac11000c, postOffice=PostOfficeImpl [server=ActiveMQServerImpl::serverUUID=bbaf0d1a-a975-11e9-9556-0242ac110009], temp=false]@7edf50ef targetConnector=ServerLocatorImpl (identity=(Cluster-connection-bridge::ClusterConnectionBridge@5f27366e [name=$.artemis.internal.sf.amq-activemq-artemis.c6020664-a975-11e9-a48b-0242ac11000c, queue=QueueImpl[name=$.artemis.internal.sf.amq-activemq-artemis.c6020664-a975-11e9-a48b-0242ac11000c, postOffice=PostOfficeImpl [server=ActiveMQServerImpl::serverUUID=bbaf0d1a-a975-11e9-9556-0242ac110009], temp=false]@7edf50ef targetConnector=ServerLocatorImpl [initialConnectors=[TransportConfiguration(name=amq-activemq-artemis-master-1, factory=org-apache-activemq-artemis-core-remoting-impl-netty-NettyConnectorFactory) ?port=61616&host=amq-activemq-artemis-master-1-amq-activemq-artemis-master-activemq-svc-cluster-local], discoveryGroupConfiguration=null]]::ClusterConnectionImpl@1859383896[nodeUUID=bbaf0d1a-a975-11e9-9556-0242ac110009, connector=TransportConfiguration(name=amq-activemq-artemis-master-0, factory=org-apache-activemq-artemis-core-remoting-impl-netty-NettyConnectorFactory) ?port=61616&host=amq-activemq-artemis-master-0-amq-activemq-artemis-master-activemq-svc-cluster-local, address=jms, server=ActiveMQServerImpl::serverUUID=bbaf0d1a-a975-11e9-9556-0242ac110009])) [initialConnectors=[TransportConfiguration(name=amq-activemq-artemis-master-1, factory=org-apache-activemq-artemis-core-remoting-impl-netty-NettyConnectorFactory) ?port=61616&host=amq-activemq-artemis-master-1-amq-activemq-artemis-master-activemq-svc-cluster-local], discoveryGroupConfiguration=null]] is connected

right before giving:

2019-07-18 16:05:12,003 ERROR [org.apache.activemq.artemis.core.server] AMQ224088: Timeout (10 seconds) while handshaking has occurred

Please note the ?port=61616&host=amq-activemq-artemis-master-1-amq-activemq-artemis-master-activemq-svc-cluster-local], discoveryGroupConfiguration=null]] is connected

@irizzant - from what I observe on my local deployments, a temporary missing connection to "master-1" would not prevent the system from coming up OK, because the JMS port is established before it connects to the cluster (so master-0 is ready). The stateful set for masters starts in-sequence (so it won't try and start master-1 until master-0 is ready), whereas the slaves are marked as 'parallel' so that a non-ready slave-0 won't prevent slave-1 from starting to standby state. Is that what you see, or do you see something else?

The timeout/handshaking error can be ignored, and isn't related to the startup - that's just the readiness probe.

@DanSalt , the logs I posted are from master 0 which is trying to connect to master 1, so master 0 is actually trying to connect to master 1 and it does not succeed on it at the beginning but it does after.

Master 0 logs are filled up with a sequence of:

2019-07-18 16:05:00,708 ERROR [org.apache.activemq.artemis.core.client] AMQ214016: Failed to create netty connection: java.net.UnknownHostException: amq-activemq-artemis-master-1.amq-activemq-artemis-master.activemq.svc.cluster.local

at java.net.InetAddress.getAllByName0(InetAddress.java:1281) [rt.jar:1.8.0_212]

at java.net.InetAddress.getAllByName(InetAddress.java:1193) [rt.jar:1.8.0_212]

at java.net.InetAddress.getAllByName(InetAddress.java:1127) [rt.jar:1.8.0_212]

at java.net.InetAddress.getByName(InetAddress.java:1077) [rt.jar:1.8.0_212]

at io.netty.util.internal.SocketUtils$8.run(SocketUtils.java:146) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.internal.SocketUtils$8.run(SocketUtils.java:143) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at java.security.AccessController.doPrivileged(Native Method) [rt.jar:1.8.0_212]

at io.netty.util.internal.SocketUtils.addressByName(SocketUtils.java:143) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.resolver.DefaultNameResolver.doResolve(DefaultNameResolver.java:43) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.resolver.SimpleNameResolver.resolve(SimpleNameResolver.java:63) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.resolver.SimpleNameResolver.resolve(SimpleNameResolver.java:55) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.resolver.InetSocketAddressResolver.doResolve(InetSocketAddressResolver.java:57) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.resolver.InetSocketAddressResolver.doResolve(InetSocketAddressResolver.java:32) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.resolver.AbstractAddressResolver.resolve(AbstractAddressResolver.java:108) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.bootstrap.Bootstrap.doResolveAndConnect0(Bootstrap.java:208) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.bootstrap.Bootstrap.access$000(Bootstrap.java:49) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.bootstrap.Bootstrap$1.operationComplete(Bootstrap.java:188) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.bootstrap.Bootstrap$1.operationComplete(Bootstrap.java:174) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.concurrent.DefaultPromise.notifyListener0(DefaultPromise.java:511) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.concurrent.DefaultPromise.notifyListenersNow(DefaultPromise.java:485) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.concurrent.DefaultPromise.notifyListeners(DefaultPromise.java:424) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.concurrent.DefaultPromise.trySuccess(DefaultPromise.java:103) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.channel.DefaultChannelPromise.trySuccess(DefaultChannelPromise.java:84) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.channel.AbstractChannel$AbstractUnsafe.safeSetSuccess(AbstractChannel.java:978) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.channel.AbstractChannel$AbstractUnsafe.register0(AbstractChannel.java:512) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.channel.AbstractChannel$AbstractUnsafe.access$200(AbstractChannel.java:423) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.channel.AbstractChannel$AbstractUnsafe$1.run(AbstractChannel.java:482) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:163) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:404) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:309) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:884) [netty-all-4.1.24.Final.jar:4.1.24.Final]

at org.apache.activemq.artemis.utils.ActiveMQThreadFactory$1.run(ActiveMQThreadFactory.java:118) [artemis-commons-2.6.2.jar:2.6.2]

until, as I wrote, all of a sudden the log shows:

2019-07-18 16:05:02,224 INFO [org.apache.activemq.artemis.core.server] AMQ221027: Bridge ClusterConnectionBridge@5f27366e [name=$.artemis.internal.sf.amq-activemq-artemis.c6020664-a975-11e9-a48b-0242ac11000c, queue=QueueImpl[name=$.artemis.internal.sf.amq-activemq-artemis.c6020664-a975-11e9-a48b-0242ac11000c, postOffice=PostOfficeImpl [server=ActiveMQServerImpl::serverUUID=bbaf0d1a-a975-11e9-9556-0242ac110009], temp=false]@7edf50ef targetConnector=ServerLocatorImpl (identity=(Cluster-connection-bridge::ClusterConnectionBridge@5f27366e [name=$.artemis.internal.sf.amq-activemq-artemis.c6020664-a975-11e9-a48b-0242ac11000c, queue=QueueImpl[name=$.artemis.internal.sf.amq-activemq-artemis.c6020664-a975-11e9-a48b-0242ac11000c, postOffice=PostOfficeImpl [server=ActiveMQServerImpl::serverUUID=bbaf0d1a-a975-11e9-9556-0242ac110009], temp=false]@7edf50ef targetConnector=ServerLocatorImpl [initialConnectors=[TransportConfiguration(name=amq-activemq-artemis-master-1, factory=org-apache-activemq-artemis-core-remoting-impl-netty-NettyConnectorFactory) ?port=61616&host=amq-activemq-artemis-master-1-amq-activemq-artemis-master-activemq-svc-cluster-local], discoveryGroupConfiguration=null]]::ClusterConnectionImpl@1859383896[nodeUUID=bbaf0d1a-a975-11e9-9556-0242ac110009, connector=TransportConfiguration(name=amq-activemq-artemis-master-0, factory=org-apache-activemq-artemis-core-remoting-impl-netty-NettyConnectorFactory) ?port=61616&host=amq-activemq-artemis-master-0-amq-activemq-artemis-master-activemq-svc-cluster-local, address=jms, server=ActiveMQServerImpl::serverUUID=bbaf0d1a-a975-11e9-9556-0242ac110009])) [initialConnectors=[TransportConfiguration(name=amq-activemq-artemis-master-1, factory=org-apache-activemq-artemis-core-remoting-impl-netty-NettyConnectorFactory) ?port=61616&host=amq-activemq-artemis-master-1-amq-activemq-artemis-master-activemq-svc-cluster-local], discoveryGroupConfiguration=null]] is connected

stating that it's connected. That's what I see. Your reply seems to confirm what I said, since master 1 is started after master 0 the latter can try to connect to master 1 and fail.

@irizzant - Yes. That's right. Because the configuration is static (all nodes defined in configuration), during the startup, there will be some time where nodes configured are not available. But once all nodes come up, everything resolves.

@DanSalt I think this could be fixed using a sort of check on the master 1 status in the initcontainers here https://github.com/vromero/activemq-artemis-helm/blob/698454db87ed95db44629fb55f2e493ee17fe2ec/activemq-artemis/templates/master-statefulset.yaml#L51

I have the same issue