fiftyone

[?] How to compute an overlap of two labels and combine both label masks into a single label?

FiftyOne has great functionality for finding duplicate labels with compute_max_ious() and find_duplicates(). However, thresholding on the IoU metric alone is not enough to find all true duplicates.

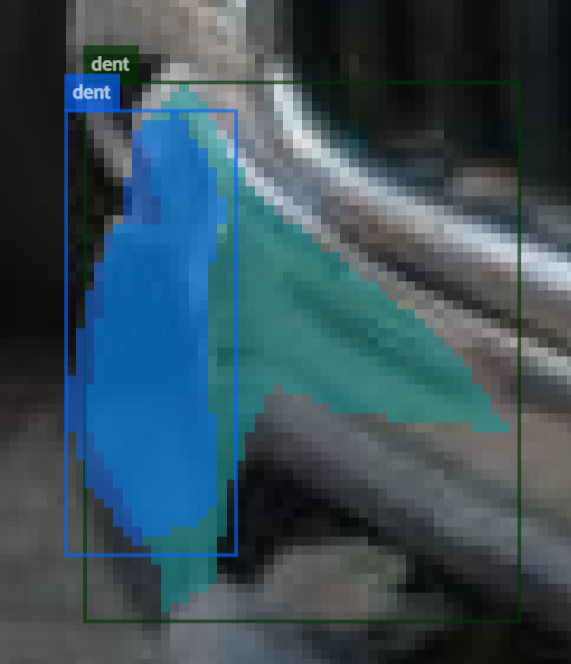

Let's take the following example:

In cases like this, I would want to either delete the mask with the smaller area (blue) or take the union of the two masks. Which operation applies would depend on how much of the smaller mask overlaps with the bigger one.

So concretely I'd like to know how to:

- Calculate the percentage of the smaller mask's area that overlaps with the bigger mask

- Take the union of both masks, merging the two masks into a single label

- Not related to the above, but is there a way to disable the bbox preview? I work only with instance segmentation, and the bboxes occlude the image too much. Can I simply delete the bbox data, leaving only the masks, or should I convert the whole dataset from detections to polylines?

P.S. Another good example where simple IoU thresholding would fail: in this example, the IoU is only 0.035, yet the blue mask is a clear duplicate.
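To make the gap between the two metrics concrete, here is a minimal NumPy sketch. It is not a FiftyOne API: `iou_and_containment()` is a hypothetical helper, the two masks are made-up data, and it assumes both masks have already been rendered as boolean arrays at the same full-frame resolution.

```python
import numpy as np


def iou_and_containment(mask_a, mask_b):
    """Return (IoU, fraction of the smaller mask covered by the other mask).

    Assumes both inputs are boolean arrays with the same full-frame shape.
    """
    mask_a = np.asarray(mask_a, dtype=bool)
    mask_b = np.asarray(mask_b, dtype=bool)

    intersection = np.logical_and(mask_a, mask_b).sum()
    union = np.logical_or(mask_a, mask_b).sum()
    smaller = min(mask_a.sum(), mask_b.sum())

    # Guard against empty masks instead of dividing by zero
    iou = intersection / union if union else 0.0
    containment = intersection / smaller if smaller else 0.0
    return float(iou), float(containment)


# Hypothetical data: a small mask that lies entirely inside a large one
big = np.zeros((100, 100), dtype=bool)
big[10:90, 10:90] = True  # 80 x 80 = 6400 pixels

small = np.zeros((100, 100), dtype=bool)
small[20:35, 20:35] = True  # 15 x 15 = 225 pixels

iou, containment = iou_and_containment(big, small)
print(f"IoU={iou:.3f}, containment={containment:.2f}")
# prints: IoU=0.035, containment=1.00

# At a shared resolution, the union of the two masks is a logical OR
merged = np.logical_or(big, small)
```

With these toy masks the IoU is tiny because the union is dominated by the large mask, while the containment ratio shows that the small mask is fully covered.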

First of all, glad you're finding compute_max_ious() and find_duplicates() useful!

In general, FiftyOne's primary goal is to provide a standardized data representation, not necessarily to build in every type of data transformation you might want to apply.

Merging instance masks, for example, has some free parameters. What should the resolution of the output mask be? Should the output instance's bounding box tightly enclose the union of the masks, or should it be the convex hull of the bounding boxes of the input shapes? (the two might differ)
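As a rough illustration of how those two bounding-box choices can differ, here is a small NumPy sketch. It is not FiftyOne code: `tight_box()` and `enclosing_box()` are hypothetical helpers, the data is made up, and it assumes non-empty full-frame boolean masks and pixel-space `(x, y, w, h)` boxes.

```python
import numpy as np


def tight_box(mask):
    """Return the (x, y, w, h) pixel box tightly enclosing a non-empty mask."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    x0, x1 = cols[0], cols[-1] + 1
    y0, y1 = rows[0], rows[-1] + 1
    return int(x0), int(y0), int(x1 - x0), int(y1 - y0)


def enclosing_box(boxes):
    """Return the smallest (x, y, w, h) box containing all input boxes."""
    x0 = min(x for x, _, _, _ in boxes)
    y0 = min(y for _, y, _, _ in boxes)
    x1 = max(x + w for x, _, w, _ in boxes)
    y1 = max(y + h for _, y, _, h in boxes)
    return x0, y0, x1 - x0, y1 - y0


# Hypothetical 128 x 128 frame with two objects whose masks
# only fill the middle of their bounding boxes
box1 = (0, 0, 96, 96)
box2 = (32, 32, 96, 96)

mask1 = np.zeros((128, 128), dtype=bool)
mask1[24:72, 24:72] = True
mask2 = np.zeros((128, 128), dtype=bool)
mask2[56:104, 56:104] = True

union = np.logical_or(mask1, mask2)

print(tight_box(union))             # (24, 24, 80, 80)
print(enclosing_box([box1, box2]))  # (0, 0, 128, 128)
```

Here the box around the merged mask is noticeably smaller than the box around the two input boxes, which is why the choice has to be made explicitly.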

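FiftyOne does provide some foundational transformations, such as methods for converting between Label types (for example, the Detection.to_segmentation() and Segmentation.to_detections() methods used in the code below), that you can use to build a transformation to your taste.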

Here's one possible way to use these methods to merge instance masks:

    import fiftyone as fo
    import numpy as np


    def merge_instances(detections, frame_size):
        """Returns a single :class:`fiftyone.core.labels.Detection` whose bounding
        box tightly encloses the given list of instance segmentations' masks and
        whose mask is the union of their masks.

        The resolution of the output mask is proportional to the given frame size.

        Args:
            detections: a list of :class:`fiftyone.core.labels.Detection`
                instances
            frame_size: the ``(width, height)`` of the image containing the objects

        Returns:
            a :class:`fiftyone.core.labels.Detection`
        """
        width, height = frame_size

        # The merged instance takes the label of the first input
        label = detections[0].label

        # Start from an empty full-frame mask and add each instance to it
        s = fo.Segmentation(mask=np.zeros((height, width), dtype=bool))
        for d in detections:
            s = d.to_segmentation(mask=s.mask, target=True)

        # Convert the accumulated full-frame mask back into an instance
        d = s.to_detections(mask_targets={True: label}, mask_types="stuff")
        return d.detections[0]

Example usage:

    import fiftyone as fo
    import numpy as np

    image_path = "/path/to/image.jpg"

    mask = np.zeros((64, 64), dtype=bool)
    mask[16:48, 16:48] = True

    d1 = fo.Detection(label="object", bounding_box=[0.00, 0.00, 0.75, 0.75], mask=mask)
    d2 = fo.Detection(label="object", bounding_box=[0.25, 0.25, 0.75, 0.75], mask=mask)

    m = fo.ImageMetadata.build_for(image_path)
    d_merged = merge_instances([d1, d2], (m.width, m.height))

    sample = fo.Sample(
        filepath=image_path,
        objects=fo.Detections(detections=[d1, d2]),
        merged=fo.Detections(detections=[d_merged]),
    )

    dataset = fo.Dataset()
    dataset.add_sample(sample)

    session = fo.launch_app(dataset)

Thanks for the elaborate answer.

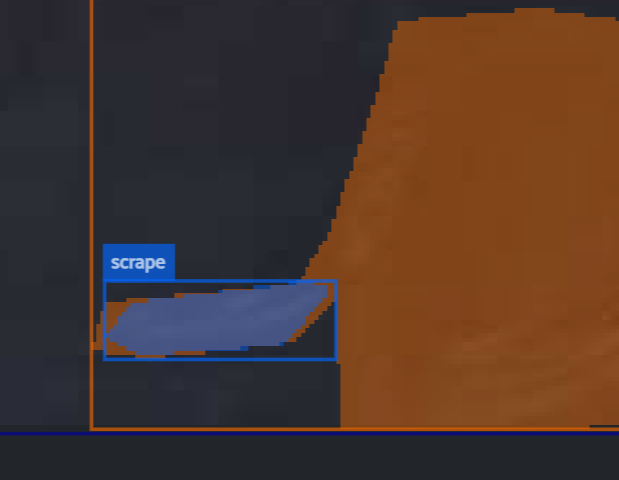

I am still confused as to how to find all masks that are inside other masks, like the example below:

1. I would need to calculate the percentage of the smaller mask's area (blue) that overlaps with the bigger mask (orange). Would I have to convert the masks to polylines? Does FiftyOne provide a way to calculate the area of a mask or polyline that overlaps with another mask or polyline?

Ideally, what I am looking for is a function similar to find_duplicates() that, instead of finding duplicates with a simple IoU metric, finds all masks that are enclosed within a bigger mask, as in the example image above. That example shows that find_duplicates() would fail to mark the blue mask as a duplicate because the IoU is only 0.035, even though it is a clear duplicate, since it lies completely within the orange mask. In those cases, I would like to either keep the mask with the bigger area and delete the smaller one, or take the union of the two.

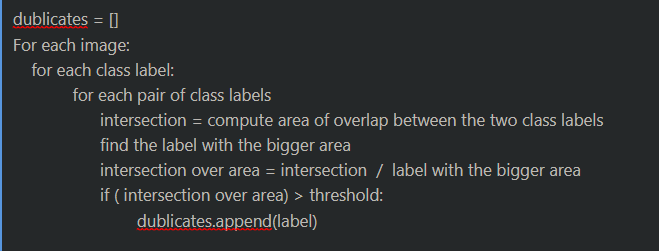

It would be something like this:

2. If I don't care about the bounding boxes and only need instance segmentation, should I convert all my Detections to Segmentation? It is confusing to me that Detections hold information about both the bounding box and the mask. These bounding boxes are also shown in the UI, which I don't want to see.

"What should the resolution of the output mask be?" The same as the original mask.

"Should the output instance's bounding box tightly enclose the union of the masks" I don't need bounding boxes at all, since I work with instance segmentation.

Like I mentioned initially, FiftyOne generally focuses on data representation only, which means that we're not trying to provide a builtin way to compute every possible metric, but we do try to make it as easy as possible to compute your metric from our representation.

In this case, that's why the Detection.to_shapely() and Polyline.to_shapely() methods exist.

Here's a little function that would compute your metric of interest:

    import fiftyone as fo


    def contains(object1, object2, alpha=0.99, frame_size=None, tolerance=2):
        """Determines whether at least a fraction ``alpha`` of ``object2`` is
        contained within ``object1``.

        Args:
            object1: a :class:`fiftyone.core.labels.Detection` or
                :class:`fiftyone.core.labels.Polyline`
            object2: a :class:`fiftyone.core.labels.Detection` or
                :class:`fiftyone.core.labels.Polyline`
            alpha (0.99): the minimum fraction of ``object2`` that must be
                contained within ``object1``
            frame_size (None): an optional ``(width, height)`` of the frame
                containing the objects. If not specified, all calculations are
                performed directly on normalized coordinates, which implicitly
                assumes the frame is square
            tolerance (2): a tolerance, in pixels, when generating an approximate
                polyline for an instance mask. Typical values are 1-3 pixels

        Returns:
            True or False
        """
        shape1 = _to_shapely(object1, frame_size, tolerance)
        shape2 = _to_shapely(object2, frame_size, tolerance)

        # A degenerate shape has no area to be contained; avoid dividing by zero
        if shape2.area == 0:
            return False

        return shape1.intersection(shape2).area / shape2.area >= alpha


    def _to_shapely(obj, frame_size, tolerance):
        # Instance masks are first approximated by a polyline; detections
        # without a mask fall back to their bounding box
        if isinstance(obj, fo.Detection):
            if obj.mask is not None:
                obj = obj.to_polyline(tolerance=tolerance)

        return obj.to_shapely(frame_size=frame_size)

Example usage:

    import numpy as np

    mask = np.zeros((64, 64), dtype=bool)
    mask[16:48, 16:48] = True

    d1 = fo.Detection(label="object", bounding_box=[0.00, 0.00, 0.75, 0.75], mask=mask)
    d2 = fo.Detection(label="object", bounding_box=[0.25, 0.25, 0.75, 0.75], mask=mask)

    frame_size = (1280, 960)

    print(contains(d1, d2, frame_size=frame_size))  # False
    print(contains(d1, d1, frame_size=frame_size))  # True

I added compute_max_ious() and find_duplicates() because, in my judgement, they were "common enough" to warrant direct inclusion in the library.

But, perhaps we can add an extension or variation of the above methods that allows for passing in a generic function like contains() that you want to apply pairwise to your data.
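In the meantime, a pairwise helper along those lines could look roughly like the sketch below. This is only an illustration, not an existing FiftyOne method: `find_contained()` and `box_contains()` are hypothetical names, and the example uses plain `(x0, y0, x1, y1)` tuples so that it runs without FiftyOne. With real labels you would pass a predicate such as the contains() function above.

```python
from itertools import permutations


def find_contained(labels, predicate):
    """Return the sorted indices of labels that are contained in another label.

    ``predicate(outer, inner)`` must return True when ``inner`` is
    (mostly) contained within ``outer``.
    """
    contained = set()
    for (i, outer), (j, inner) in permutations(enumerate(labels), 2):
        if not predicate(outer, inner):
            continue

        # If two labels contain each other (e.g. exact duplicates),
        # keep the one that comes first and flag only the later one
        if predicate(inner, outer) and i > j:
            continue

        contained.add(j)

    return sorted(contained)


def box_contains(outer, inner, alpha=0.99):
    """Toy predicate on (x0, y0, x1, y1) boxes, standing in for contains()."""
    ix = max(0.0, min(outer[2], inner[2]) - max(outer[0], inner[0]))
    iy = max(0.0, min(outer[3], inner[3]) - max(outer[1], inner[1]))
    inner_area = (inner[2] - inner[0]) * (inner[3] - inner[1])
    return inner_area > 0 and (ix * iy) / inner_area >= alpha


# Hypothetical data: index 1 lies inside index 0, index 2 only partially
# overlaps it, and index 3 is an exact duplicate of index 0
boxes = [(0, 0, 10, 10), (2, 2, 4, 4), (8, 8, 12, 12), (0, 0, 10, 10)]

print(find_contained(boxes, box_contains))  # [1, 3]
```

The loop is quadratic in the number of labels per sample, which is usually fine for a handful of objects per image but may need spatial pre-filtering for crowded scenes.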

Feel free to make a PR to introduce such a method if you're so inclined 🤗

no activity, closing