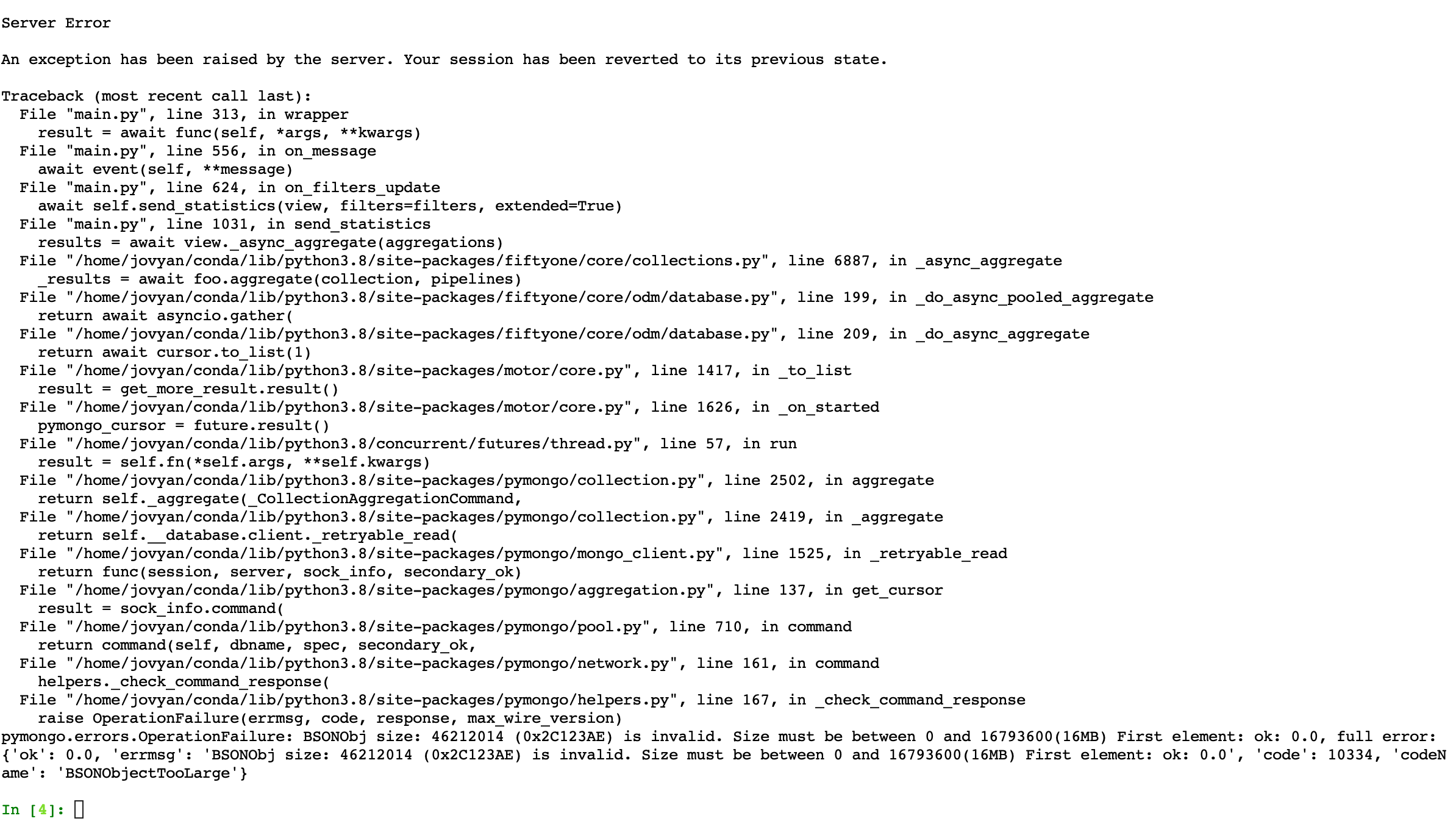

[BUG] OperationFailure: BSONObj size is invalid (video datasets with lots of frame labels)

On fiftyone<=0.16.5, `OperationFailure: BSONObj size is invalid` errors can arise when trying to work with video datasets whose samples contain >16MB of frame-level labels.

This error can arise:

- When trying to load the video dataset in the App
- When using the App's label/confidence filters to filter frame labels
- When performing workflows such as filtering the dataset by frame-level content, like so:

```python
view = dataset.filter_labels("frames.field", expr)
```

This is a consequence of the fact that one cannot write a MongoDB aggregation that uses `$lookup` to construct a >16MB document (even if it is only used internally by a pipeline and not returned) unless it is immediately followed by an `$unwind`: https://stackoverflow.com/questions/45724785

This limitation was overlooked when video view support was originally added and will need to be dealt with in a fairly fundamental way.

In the meantime, the bottom line is that views that manipulate frame-level fields will fail whenever a single video has >16MB worth of frame-level labels on its frames.
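To make the constraint concrete, here is a minimal sketch contrasting the two pipeline shapes as plain Python lists of stages (no database connection needed). Per the Stack Overflow answer linked above, when `$unwind` directly follows `$lookup`, MongoDB coalesces the two stages so the full joined array is never built on a single document. The collection name `frames.samples.example` and the field names are hypothetical, not FiftyOne's actual internal schema, and `unwinds_immediately()` is an illustrative check, not part of any library.

```python
# Hypothetical names: FiftyOne's real frames collection and fields may differ.
FRAMES_COLLECTION = "frames.samples.example"


def lookup_frames_stage():
    """A $lookup stage that joins every frame document onto its parent sample."""
    return {
        "$lookup": {
            "from": FRAMES_COLLECTION,
            "localField": "_id",
            "foreignField": "_sample_id",
            "as": "frames",
        }
    }


# Risky: the joined "frames" array lives on one intermediate document, which
# must stay under the 16MB BSON limit even though it is never returned.
lookup_then_match = [
    lookup_frames_stage(),
    {"$match": {"frames.detections": {"$exists": True}}},
]

# Safe: $unwind immediately follows $lookup, so the stages can be coalesced
# and MongoDB emits one document per frame instead of one giant array.
lookup_then_unwind = [
    lookup_frames_stage(),
    {"$unwind": "$frames"},
]


def unwinds_immediately(pipeline):
    """Returns True if every $lookup is directly followed by an $unwind of its output."""
    for i, stage in enumerate(pipeline):
        if "$lookup" not in stage:
            continue
        target = "$" + stage["$lookup"]["as"]
        following = pipeline[i + 1] if i + 1 < len(pipeline) else {}
        unwind = following.get("$unwind")
        # $unwind accepts either a path string or a {"path": ...} document
        path = unwind.get("path") if isinstance(unwind, dict) else unwind
        if path != target:
            return False
    return True


print(unwinds_immediately(lookup_then_match))   # False
print(unwinds_immediately(lookup_then_unwind))  # True
```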

https://github.com/voxel51/fiftyone/issues/1822 contains some helpful functions for troubleshooting large video datasets:

- `frames_sizes()`: the total size of each sample's frame labels
- `frame_sizes()`: the size of each individual frame

If you find yourself with a video dataset with one or more samples whose frame labels exceed 16MB, here are some strategies to work around the issue:

First, use `frames_sizes()` to retrieve the IDs of samples whose frame labels exceed 16MB.
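Once you have per-sample sizes, picking out the offending samples is a simple threshold check against MongoDB's 16MB (16 MiB) document limit. A minimal sketch, assuming the sizes are available as a dict mapping sample IDs to byte counts (the actual return type of `frames_sizes()` in #1822 may differ); `ids_over_limit()` is a hypothetical helper, and the IDs and sizes are made up:

```python
BSON_MAX_BYTES = 16 * 1024 * 1024  # MongoDB's 16MB document limit (16777216 bytes)


def ids_over_limit(sizes_by_id, limit=BSON_MAX_BYTES):
    """Returns the IDs whose total frame-label size in bytes exceeds ``limit``."""
    return [sample_id for sample_id, nbytes in sizes_by_id.items() if nbytes > limit]


# Hypothetical per-sample sizes, e.g. as computed by a frames_sizes()-style helper
sizes = {"sample_a": 2_500_000, "sample_b": 20_000_000, "sample_c": 15_000_000}

ids_of_large_samples = ids_over_limit(sizes)
print(ids_of_large_samples)  # ['sample_b']
```

The resulting list is the kind of value the `ids_of_large_samples` variable in the following snippets expects.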

If only a few samples are too large:

Consider either omitting (using `exclude()`) or deleting (using `delete_samples()`) the samples from the dataset.

```python
import fiftyone as fo

# `ids_of_large_samples` holds the sample IDs identified via frames_sizes()

# Option 1: omit large samples when you need to use the App
view = dataset.exclude(ids_of_large_samples)
session = fo.launch_app(view)

# Option 2: permanently delete large samples
dataset.delete_samples(ids_of_large_samples)
session = fo.launch_app(dataset)
```

If many samples are too large:

Option 1: If your frame labels are distributed across multiple frame fields, create separate datasets containing subsets of the frame labels that each total <16MB individually:

```python
print(dataset.get_frame_field_schema())
# field1, field2, etc

dataset1 = dataset.clone()
dataset1.delete_frame_field("field2")

dataset2 = dataset.clone()
dataset2.delete_frame_field("field1")
```
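When there are more than two frame fields, deciding which fields go into which dataset is a small bin-packing problem. One simple heuristic (a swapped-in technique, not something prescribed in this issue) is greedy first-fit decreasing. The sketch assumes you have measured how many bytes each frame field contributes to your largest sample; using each field's maximum across all samples is conservative. `group_fields()` and the sizes below are hypothetical.

```python
BSON_MAX_BYTES = 16 * 1024 * 1024  # MongoDB's 16MB document limit


def group_fields(field_sizes, limit=BSON_MAX_BYTES):
    """Greedily packs frame fields into groups whose total size stays under ``limit``.

    ``field_sizes`` maps frame field names to byte counts (hypothetical input).
    Returns a list of field-name lists, one per dataset to create.
    """
    groups = []  # each entry is [total_bytes, [field names]]
    for name, nbytes in sorted(field_sizes.items(), key=lambda kv: kv[1], reverse=True):
        if nbytes >= limit:
            raise ValueError(f"field {name!r} alone is too large; split the videos instead")
        # Place the field in the first group with enough room, else start a new one
        for group in groups:
            if group[0] + nbytes < limit:
                group[0] += nbytes
                group[1].append(name)
                break
        else:
            groups.append([nbytes, [name]])
    return [names for _, names in groups]


sizes = {"field1": 9_000_000, "field2": 8_000_000, "field3": 6_000_000}
print(group_fields(sizes))  # [['field1', 'field3'], ['field2']]
```

Each returned group then corresponds to one `dataset.clone()` with every other frame field removed via `delete_frame_field()`, as in the snippet above.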

Option 2: If you want to keep all frame labels together, partition your videos into shorter clips so that the frame labels for each video clip do not exceed 16MB.

This reorganization can be done manually by recreating the datasets from scratch from your source data. Alternatively, if you have decided on (start, stop) frame number ranges for each clip you want to extract, you can use the extract_clips() utility below to perform the necessary clip extraction and data refactoring:

```python
import os

import fiftyone.utils.video as fouv
from fiftyone import ViewField as F


def extract_clips(sample_collection, clips, clips_dir):
    """Creates a new dataset that contains one sample per video clip defined by
    the given ``clips`` argument.

    Each sample in the output dataset will contain all sample-level fields of
    the source sample, together with any frame labels for the specified clip
    range.

    The specified segment(s) of each video file are extracted via
    :func:`fiftyone.utils.video.extract_clip`.

    Args:
        sample_collection: a :class:`fiftyone.core.collections.SampleCollection`
        clips: the clips to extract, in either of the following formats:

            -   a list of ``[(first1, last1), (first2, last2), ...]`` lists
                defining the frame numbers of the clips to extract from each
                sample in ``sample_collection``
            -   a dict mapping sample IDs to
                ``[(first1, last1), (first2, last2), ...]`` lists

        clips_dir: a directory in which to write the extracted clips

    Returns:
        a :class:`fiftyone.core.dataset.Dataset`
    """
    if isinstance(clips, dict):
        sample_ids, clips = zip(*clips.items())
        sample_collection = sample_collection.select(sample_ids, ordered=True)

    # Create a new dataset containing the clip's labels
    dataset = (
        sample_collection
        .to_clips(clips)
        .set_field(
            "frames.frame_number",
            F("frame_number") - F("$support")[0] + 1,
        )
        .exclude_fields("_sample_id", _allow_missing=True)
    ).clone()

    # Extract the video clips and update the dataset's filepaths
    for sample in dataset.select_fields("support").iter_samples(progress=True):
        video_path = sample.filepath
        root, ext = os.path.splitext(os.path.basename(video_path))
        clip_name = "%s-clip-%d-%d%s" % (
            root,
            sample.support[0],
            sample.support[1],
            ext,
        )
        clip_path = os.path.join(clips_dir, clip_name)
        fouv.extract_clip(video_path, clip_path, support=sample.support)

        sample.filepath = clip_path
        sample.save()

    # Cleanup
    dataset._set_metadata("video")
    dataset.compute_metadata(overwrite=True)
    dataset.delete_sample_fields(["sample_id", "support"])

    return dataset
```
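The `set_field()` call in `extract_clips()` renumbers each clip's frames so that the clip's first frame becomes frame 1. The same arithmetic in plain Python, handy for checking expectations before running the real extraction (`remap_frame_number()` is a hypothetical helper, not part of FiftyOne):

```python
def remap_frame_number(frame_number, support):
    """Maps a source-video frame number to its number within a clip.

    Mirrors F("frame_number") - F("$support")[0] + 1: frame ``support[0]``
    of the source video becomes frame 1 of the clip.
    """
    first, last = support
    if not first <= frame_number <= last:
        raise ValueError(f"frame {frame_number} is outside clip {support}")
    return frame_number - first + 1


print(remap_frame_number(61, (61, 120)))   # 1
print(remap_frame_number(120, (61, 120)))  # 60
print(remap_frame_number(31, (31, 90)))    # 1
```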

Here's an example usage of the above script:

```python
import fiftyone as fo
import fiftyone.zoo as foz

dataset = foz.load_zoo_dataset("quickstart-video")

# The clips to extract
clips = {
    dataset.first().id: [(1, 60), (61, 120)],
    dataset.last().id: [(31, 90)],
}

clips_dataset = extract_clips(dataset, clips, "/tmp/quickstart-video-clips")
print(clips_dataset)

session = fo.launch_app(clips_dataset)
```
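If you have per-frame label sizes (for example from `frame_sizes()` in #1822), the `(first, last)` ranges can also be chosen automatically. A minimal sketch, assuming the sizes for one video arrive as a list in frame order starting at frame 1; `plan_clips()` is a hypothetical helper that greedily starts a new clip whenever the running total would reach the limit. Passing a `limit` somewhat below 16MB leaves headroom for the sample's other fields.

```python
BSON_MAX_BYTES = 16 * 1024 * 1024  # MongoDB's 16MB document limit


def plan_clips(frame_sizes, limit=BSON_MAX_BYTES):
    """Splits a video into contiguous, 1-based, inclusive (first, last) frame
    ranges whose total label size stays under ``limit``.

    ``frame_sizes[i]`` is assumed to be the label size in bytes of frame i + 1.
    """
    clips = []
    first, total = 1, 0
    for frame_number, nbytes in enumerate(frame_sizes, start=1):
        if nbytes >= limit:
            raise ValueError(f"frame {frame_number} alone reaches the limit")
        if total + nbytes >= limit:
            # Close the current clip just before this frame
            clips.append((first, frame_number - 1))
            first, total = frame_number, 0
        total += nbytes
    if frame_sizes:
        clips.append((first, len(frame_sizes)))
    return clips


print(plan_clips([40, 40, 40, 40, 10], limit=100))  # [(1, 2), (3, 5)]

# Hypothetical usage with extract_clips():
# clips = {sample_id: plan_clips(sizes) for sample_id, sizes in per_frame_sizes.items()}
```

Because the output is a list of `(first, last)` tuples per sample, it can be dropped directly into the dict form of the `clips` argument.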

I encountered the same bug when I tried to load a segmentation dataset with high-resolution masks. When I saved 4k x 4k ground truth and prediction masks to sample fields, this error occurred. Is there any way to defer loading the masks and store only their paths?

Yes! https://github.com/voxel51/fiftyone/pull/2301 introduced the ability to store segmentations on disk. It's in `develop` now if you want to do a source install, and it will be included in the next release, `fiftyone==0.19`.
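For a sense of scale, a back-of-the-envelope estimate shows why masks of this size run into the 16MB document limit. This assumes "4k x 4k" means 4096 x 4096 single-channel `uint8` masks and counts raw, uncompressed bytes; the size FiftyOne actually stores depends on how it serializes arrays, so treat this as a rough estimate. Storing masks on disk avoids the problem because the sample then holds only a file path. `raw_mask_bytes()` is a hypothetical helper.

```python
BSON_MAX_BYTES = 16 * 1024 * 1024  # MongoDB's 16MB document limit


def raw_mask_bytes(height, width, bytes_per_pixel=1):
    """Uncompressed size of a single-channel mask (uint8 by default)."""
    return height * width * bytes_per_pixel


# One ground truth mask and one prediction mask on the same sample
gt = raw_mask_bytes(4096, 4096)
pred = raw_mask_bytes(4096, 4096)

print(gt)                          # 16777216
print(gt + pred > BSON_MAX_BYTES)  # True
```

A single raw mask at this resolution already equals the entire limit before any other field is counted.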