[Enhancement Request] Enhance capacity scheduling

What would you like to be added:

Capacity scheduling could be integrated into Volcano, as other resource schedulers already do, such as the Yarn capacity scheduler and the K8s capacity scheduling plugin. This would involve:

- The API definition of ElasticResourceQuota.

- Implement a scheduler action and plugin for the ElasticResourceQuota API.

- Implement controller and admission webhook for ElasticResourceQuota.

Why is this needed:

There is increasing demand to improve resource utilization in multi-tenant clusters. A major challenge is to ensure that various jobs are scheduled with the resources they deserve. The problem can be partially addressed by the K8s ResourceQuota, which caps each tenant’s resource consumption based on the principle of fairness. However, ResourceQuota is too restrictive to allow full use of cluster resources (e.g., if two teams share a cluster and both limits are 50%, then each team can use no more than 50%, even if the other team is on holiday).

In addition, resource allocation across tenants can be partially addressed by the queue, which is proposed by Volcano. The weight of a queue indicates its relative share in the division of cluster resources, and jobs then run in an orderly manner according to the queue’s quota. However, the resource limit of a queue varies with the sum of all queues’ weights. (Assume there are two queues in the cluster, A and B. A’s weight is 999 and B’s is 1, which means B gets only 0.1% of the resources.) When there is a huge gap between queue weights, low-priority tasks will inevitably starve.
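The arithmetic behind the A/B example can be sketched as follows. This is a simplified illustration that assumes resources are split purely in proportion to weight; the function name `proportionalShare` and the 100-CPU cluster size are hypothetical, and the sketch is not Volcano's actual scheduling code.

```go
package main

import "fmt"

// proportionalShare returns the slice of total that a queue with the given
// weight receives when resources are split purely in proportion to weight.
// This is a simplification for illustration, not Volcano's scheduler code.
func proportionalShare(total, weight, weightSum int64) int64 {
	if weightSum <= 0 {
		return 0
	}
	return total * weight / weightSum
}

func main() {
	// Hypothetical cluster with 100 CPUs, expressed in milli-CPU.
	const totalMilliCPU int64 = 100000

	names := []string{"A", "B"}
	weights := map[string]int64{"A": 999, "B": 1}

	var weightSum int64
	for _, w := range weights {
		weightSum += w
	}
	for _, name := range names {
		share := proportionalShare(totalMilliCPU, weights[name], weightSum)
		fmt.Printf("queue %s: weight=%d share=%dm CPU\n", name, weights[name], share)
	}
}
```

Under these assumptions queue B ends up with 100m CPU, a tenth of one core out of 100, which is the 0.1% share mentioned above.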

To overcome the above limitations, the concept of ElasticResourceQuota is proposed, based on the Yarn capacity scheduler and with reference to the capacity-scheduling design in K8s. Basically, the “ElasticResourceQuota” has the notions of “max” and “min”.

- max: the upper bound of the resource consumption of the consumers.

- min: the minimum resources that are guaranteed to ensure the basic functionality/performance of the consumers.
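As a rough sketch of how “min” and “max” differ from a hard limit, the following Go snippet replays the two-team example with a single resource. The `Quota` type and the `grantable` and `borrowed` helpers are hypothetical names made up for this illustration; they are not part of the proposed API, and the admission rule (grant up to max as long as idle capacity exists) is an assumption.

```go
package main

import "fmt"

// Quota is a simplified, single-resource stand-in for an elastic quota.
// All values are in the same unit (for example, CPU cores).
type Quota struct {
	Name string
	Min  int64 // guaranteed amount
	Max  int64 // upper bound on consumption
	Used int64 // amount currently in use
}

// grantable returns how much of request could be granted to q, given the
// amount of idle capacity in the cluster. Usage never exceeds q.Max.
func grantable(q Quota, request, idle int64) int64 {
	granted := request
	if headroom := q.Max - q.Used; granted > headroom {
		granted = headroom
	}
	if granted > idle {
		granted = idle
	}
	if granted < 0 {
		granted = 0
	}
	return granted
}

// borrowed returns how much q is using beyond its guaranteed minimum.
func borrowed(q Quota) int64 {
	if q.Used > q.Min {
		return q.Used - q.Min
	}
	return 0
}

func main() {
	// Two teams share a 100-core cluster; team B is idle, so 50 cores are free.
	const idle int64 = 50

	hard := Quota{Name: "team-a (hard 50% limit)", Min: 50, Max: 50, Used: 50}
	elastic := Quota{Name: "team-a (elastic)", Min: 50, Max: 100, Used: 50}

	for _, q := range []Quota{hard, elastic} {
		g := grantable(q, 30, idle)
		q.Used += g
		fmt.Printf("%s: granted=%d used=%d borrowed=%d\n", q.Name, g, q.Used, borrowed(q))
	}
}
```

With the hard limit nothing more can be granted even though half the cluster is idle, while the elastic quota lets team A borrow 30 cores above its min. How borrowed resources are given back is outside the scope of this sketch.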

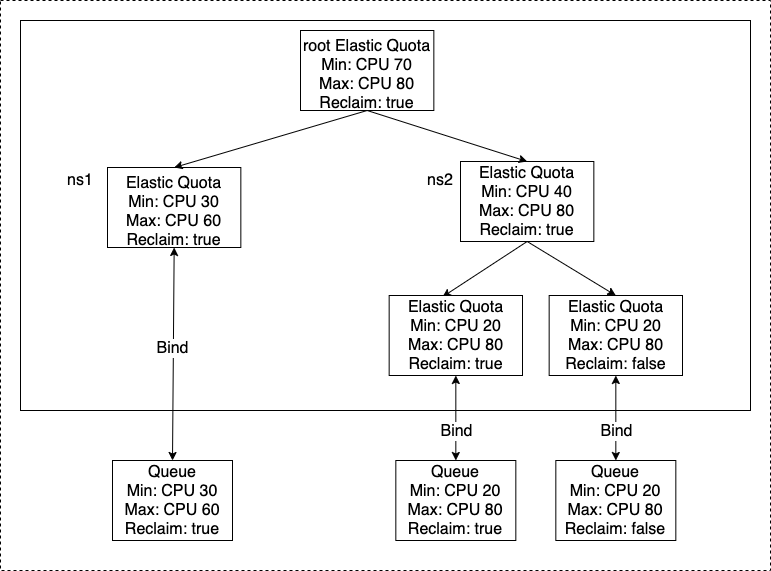

Furthermore, ElasticResourceQuotas are organized into a tree structure by their labels.

- The root quota represents the resources of the cluster.

- Recursively, the min/max settings of a child quota depend on its parent’s.

- Leaf quotas are bound to queues one to one.
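The tree constraints could be checked along the following lines. The proposal does not spell out exactly how a child's min/max depends on its parent, so this sketch assumes two rules: a child's max may not exceed its parent's max, and the sum of the children's min may not exceed the parent's min. The `QuotaNode` type and `validate` function are hypothetical and use a single resource for brevity.

```go
package main

import "fmt"

// QuotaNode is a simplified, single-resource node of the quota tree.
type QuotaNode struct {
	Name     string
	Min, Max int64
	Queue    string // name of the bound queue; only set on leaves
	Children []*QuotaNode
}

// validate checks n and its subtree against the assumed rules. boundQueues
// records queues seen so far so that no queue is bound to two leaves.
func validate(n *QuotaNode, boundQueues map[string]string) error {
	if n.Min > n.Max {
		return fmt.Errorf("%s: min %d exceeds max %d", n.Name, n.Min, n.Max)
	}
	if len(n.Children) == 0 {
		if n.Queue == "" {
			return fmt.Errorf("%s: leaf quota has no bound queue", n.Name)
		}
		if other, ok := boundQueues[n.Queue]; ok {
			return fmt.Errorf("%s: queue %q already bound to %s", n.Name, n.Queue, other)
		}
		boundQueues[n.Queue] = n.Name
		return nil
	}
	if n.Queue != "" {
		return fmt.Errorf("%s: only leaf quotas may bind a queue", n.Name)
	}
	var minSum int64
	for _, c := range n.Children {
		// Assumed rule: a child cannot be allowed more than its parent.
		if c.Max > n.Max {
			return fmt.Errorf("%s: max %d exceeds parent max %d", c.Name, c.Max, n.Max)
		}
		minSum += c.Min
		if err := validate(c, boundQueues); err != nil {
			return err
		}
	}
	// Assumed rule: guarantees handed to children must fit in the parent's.
	if minSum > n.Min {
		return fmt.Errorf("%s: children min sum %d exceeds min %d", n.Name, minSum, n.Min)
	}
	return nil
}

func main() {
	root := &QuotaNode{Name: "root", Min: 100, Max: 100, Children: []*QuotaNode{
		{Name: "team-a", Min: 60, Max: 100, Children: []*QuotaNode{
			{Name: "a1", Min: 30, Max: 60, Queue: "q1"},
			{Name: "a2", Min: 30, Max: 100, Queue: "q2"},
		}},
		{Name: "team-b", Min: 40, Max: 80, Queue: "q3"},
	}}
	fmt.Println("validation error:", validate(root, map[string]string{}))
}
```

A check of this kind would fit naturally in the admission webhook, although where it actually belongs is a design decision this sketch does not settle.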

Here is the diagram for the tree structure:

We propose a new “ResourceQuota” mechanism to improve the current capacity scheduling, with the following goals:

- Improve resource utilization.

- Avoid starvation of low-priority tasks.

- Divide resources flexibly through the tree structure, which suits dividing resources according to the actual team structure.

The structure of the resource quota is as follows:

    // Imports assumed by this snippet:
    //   v1     "k8s.io/api/core/v1"
    //   metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

    // +genclient
    // +genclient:nonNamespaced
    // +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object
    // +kubebuilder:object:root=true
    // +kubebuilder:resource:path=elasticresourcequotas,scope=Cluster,shortName=equota;equota-v1beta1
    // +kubebuilder:subresource:status

    // ElasticResourceQuota is a cluster-scoped elastic resource quota.
    type ElasticResourceQuota struct {
    	metav1.TypeMeta `json:",inline"`

    	// +optional
    	metav1.ObjectMeta `json:"metadata,omitempty" protobuf:"bytes,1,opt,name=metadata"`

    	// Specification of the desired behavior of the elastic resource quota.
    	// More info: https://git.k8s.io/community/contributors/devel/api-conventions.md#spec-and-status
    	// +optional
    	Spec ElasticQuotaSpec `json:"spec,omitempty" protobuf:"bytes,2,opt,name=spec"`

    	// The status of the elastic resource quota.
    	// +optional
    	Status ElasticQuotaStatus `json:"status,omitempty" protobuf:"bytes,3,opt,name=status"`
    }

    // ElasticQuotaSpec represents the template of Elastic Resource Quota.
    type ElasticQuotaSpec struct {
    	// Max is the upper bound of the elastic resource quota.
    	Max v1.ResourceList `json:"max,omitempty" protobuf:"bytes,1,opt,name=max"`

    	// Min is the lower bound of the elastic resource quota.
    	Min v1.ResourceList `json:"min,omitempty" protobuf:"bytes,2,opt,name=min"`

    	// Reclaimable indicates whether the elastic quota can be reclaimed by other elastic quotas.
    	Reclaimable bool `json:"reclaimable,omitempty" protobuf:"varint,3,opt,name=reclaimable"`

    	// HardwareTypes defines the hardware types of the elastic quota.
    	HardwareTypes []string `json:"hardwareTypes,omitempty" protobuf:"bytes,4,rep,name=hardwareTypes"`

    	// Namespace is the namespace that the elastic quota belongs to.
    	Namespace string `json:"namespace,omitempty" protobuf:"bytes,5,opt,name=namespace"`
    }

    // ElasticQuotaStatus represents the status of Elastic Resource Quota.
    type ElasticQuotaStatus struct {
    	// IsLeaf indicates whether the elastic quota is a leaf or not.
    	IsLeaf bool `json:"isleaf,omitempty" protobuf:"varint,1,opt,name=isleaf"`

    	// Used is the amount of resources used under the elastic resource quota.
    	Used v1.ResourceList `json:"used,omitempty" protobuf:"bytes,2,opt,name=used"`

    	// QueueName indicates the bound queue.
    	QueueName string `json:"queueName,omitempty" protobuf:"bytes,3,opt,name=queueName"`
    }

    // +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object
    // +kubebuilder:object:root=true

    // ElasticResourceQuotaList is a collection of ElasticResourceQuota.
    type ElasticResourceQuotaList struct {
    	metav1.TypeMeta `json:",inline"`

    	// Standard list metadata
    	// More info: https://git.k8s.io/community/contributors/devel/api-conventions.md#metadata
    	// +optional
    	metav1.ListMeta `json:"metadata,omitempty"`

    	// Items is the list of ElasticResourceQuota.
    	Items []ElasticResourceQuota `json:"items"`
    }

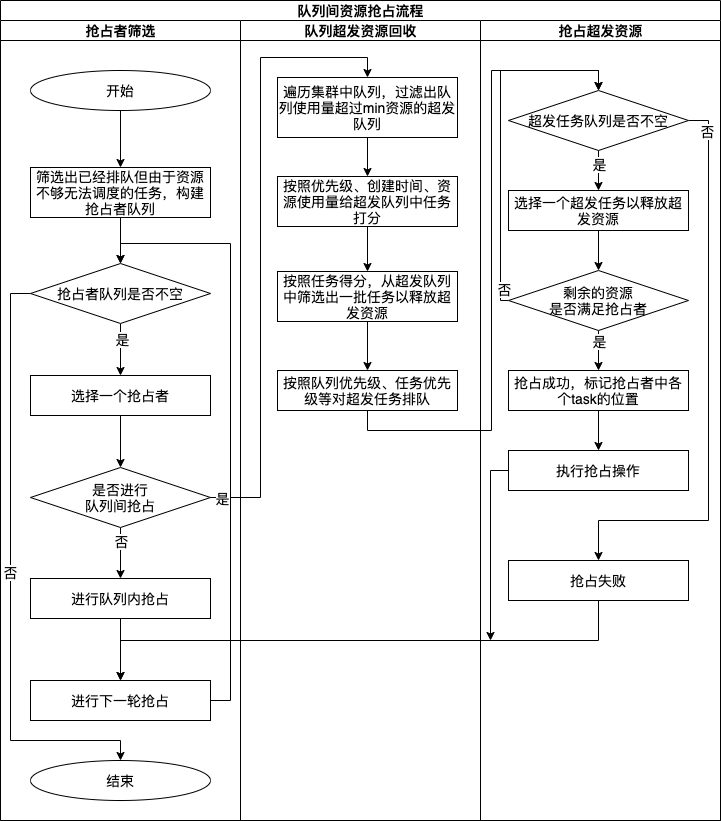

And the workflow for using the elastic resource quota is as follows:

Reference

- https://www.netjstech.com/2018/04/capacity-scheduler-in-yarn-hadoop.html

- https://github.com/kubernetes-sigs/scheduler-plugins/blob/master/kep/9-capacity-scheduling/README.md

@alexqdh @QSS-ZL @D0m021ng are the original authors for this enhancement feature, cc them for future notifications.

Hello 👋 Looks like there was no activity on this issue for last 90 days. Do you mind updating us on the status? Is this still reproducible or needed? If yes, just comment on this PR or push a commit. Thanks! 🤗 If there will be no activity for 60 days, this issue will be closed (we can always reopen an issue if we need!).

Closing for now as there was no activity for last 60 days after marked as stale, let us know if you need this to be reopened! 🤗

Keeping track of this enhancement request.

I'm also assessing multiple batch schedulers for Kubernetes for running ML workloads, and this is one interesting feature that's missing in Volcano compared to other schedulers. I'm not sure if it's allowed to link it here, but the implementation of hierarchical elastic quota management in the Koordinator scheduler would be a great basis for this feature. https://koordinator.sh/docs/designs/multi-hierarchy-elastic-quota-management

I hope there will be a chance to keep this enhancement request open. Thank you!