vak

A neural network framework for researchers studying acoustic communication

A neural network toolbox for animal vocalizations and bioacoustics

vak is a Python library for bioacoustic researchers studying animal vocalizations such as birdsong, bat calls, and even human speech.

The library has two main goals:

- Make it easier for researchers studying animal vocalizations to apply neural network algorithms to their data

- Provide a common framework that will facilitate benchmarking neural network algorithms on tasks related to animal vocalizations

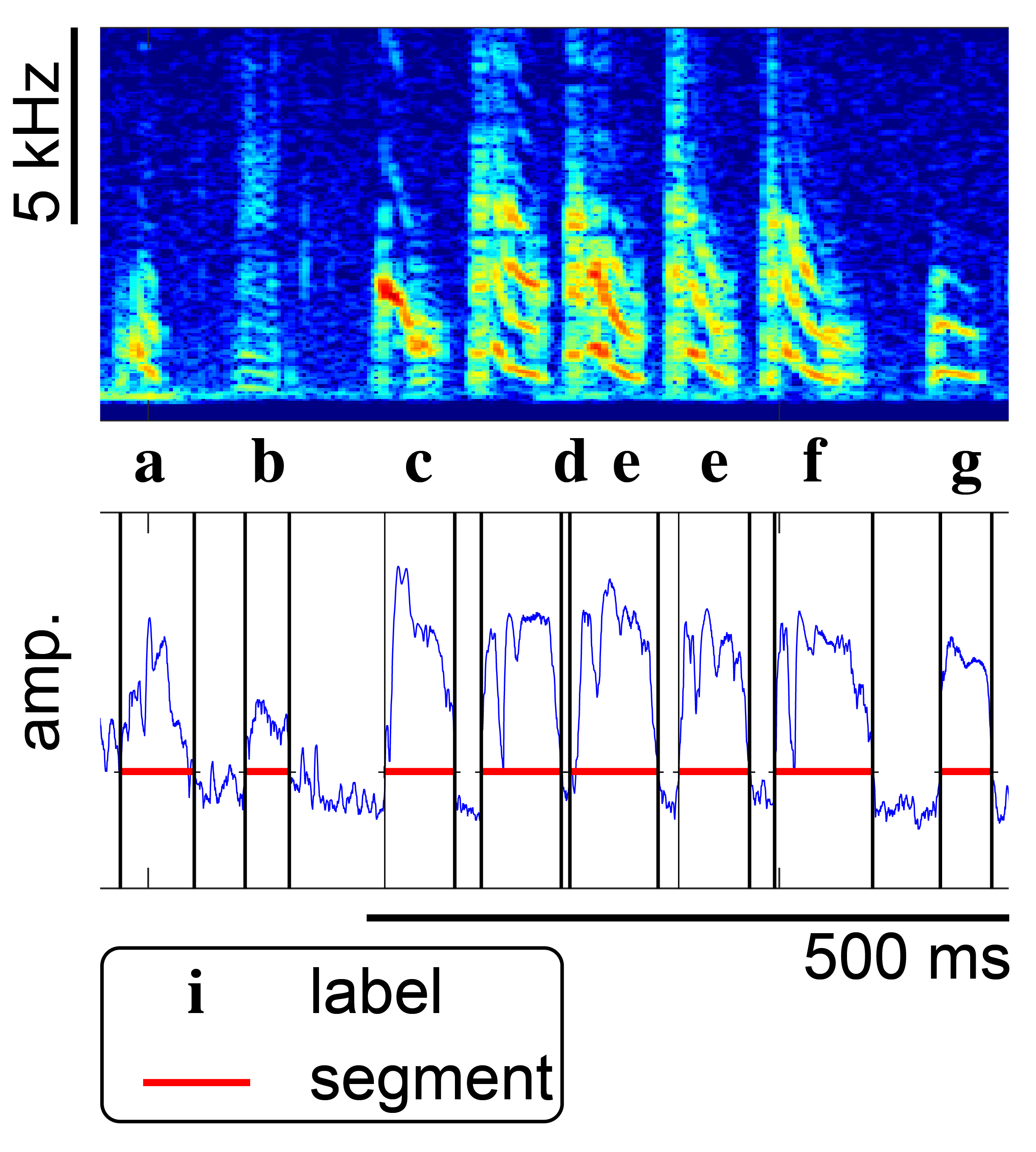

Currently, the main use is an automatic annotation of vocalizations and other animal sounds. By annotation, we mean something like the example of annotated birdsong shown below:
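In code, an annotation like this can be thought of as a sequence of labeled segments, each with an onset time, an offset time, and a label. The sketch below only illustrates that idea: the `Segment` class and `validate` function are hypothetical names, not part of vak's API, and vak's own annotation handling may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One annotated segment, e.g. a syllable in a bout of birdsong.

    Hypothetical structure for illustration only; not a vak class.
    """
    onset_s: float   # start time, in seconds
    offset_s: float  # end time, in seconds
    label: str       # e.g. the name of the syllable


def validate(segments: list[Segment]) -> None:
    """Raise ValueError if any segment is malformed, or if segments overlap or are out of order."""
    for seg in segments:
        if seg.offset_s <= seg.onset_s:
            raise ValueError(f"segment {seg} does not end after it starts")
    # compare each segment with the one after it
    for prev, curr in zip(segments, segments[1:]):
        if curr.onset_s < prev.offset_s:
            raise ValueError(f"segments {prev} and {curr} overlap or are out of order")


annotation = [
    Segment(onset_s=0.10, offset_s=0.18, label="i"),
    Segment(onset_s=0.25, offset_s=0.31, label="a"),
    Segment(onset_s=0.36, offset_s=0.44, label="b"),
]
validate(annotation)
print("".join(seg.label for seg in annotation))  # → iab
```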

You give vak training data in the form of audio or spectrogram files with annotations, and then vak helps you train neural network models and use the trained models to predict annotations for new files.
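For frame classification models such as TweetyNet, which assign a label to every time bin of a spectrogram, predicted annotations are roughly obtained by merging consecutive time bins that share a label into segments. Below is a minimal sketch of that step, assuming a hypothetical `frames_to_segments` function and a hypothetical background label for unlabeled bins; real pipelines typically add clean-up steps (for example, removing very short segments) that are omitted here.

```python
def frames_to_segments(frame_labels, frame_dur_s, background="unlabeled"):
    """Merge runs of identical per-frame labels into (onset_s, offset_s, label) tuples.

    frame_labels: sequence of labels, one per spectrogram time bin
    frame_dur_s: duration of one time bin, in seconds
    background: hypothetical label for bins outside any segment; these runs are dropped
    """
    segments = []
    run_start = 0
    for i in range(1, len(frame_labels) + 1):
        # a run ends after the last bin, or where the label changes
        if i == len(frame_labels) or frame_labels[i] != frame_labels[run_start]:
            label = frame_labels[run_start]
            if label != background:
                segments.append((run_start * frame_dur_s, i * frame_dur_s, label))
            run_start = i
    return segments


labels = ["unlabeled", "a", "a", "a", "unlabeled", "unlabeled", "b", "b"]
# an artificially long bin duration, so the times are easy to read
print(frames_to_segments(labels, frame_dur_s=0.25))  # → [(0.25, 1.0, 'a'), (1.5, 2.0, 'b')]
```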

We developed vak to benchmark a neural network model we call tweetynet.

Please see the eLife article here: https://elifesciences.org/articles/63853

Installation

Short version:

with pip

$ pip install vak

with conda

$ conda install vak -c pytorch -c conda-forge

$ # ^ notice additional channel!

Notice that for conda you specify two channels, and that the pytorch channel should come first, so it takes priority when installing the dependencies pytorch and torchvision.

For more details, please see: https://vak.readthedocs.io/en/latest/get_started/installation.html
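After installing, one way to confirm that vak and its pytorch dependencies are present in the active environment is to query the installed package metadata. This is a minimal sketch using only the Python standard library; it assumes the distribution names `vak`, `torch`, and `torchvision` (the names used on PyPI), and the helper `installed_versions` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages=("vak", "torch", "torchvision")):
    """Return a dict mapping each package name to its version string, or None if not installed."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


for name, ver in installed_versions().items():
    print(f"{name}: {ver or 'NOT INSTALLED'}")
```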

We test vak on Ubuntu and macOS. We have also run vak on Windows, and we know of other users who run it successfully on that operating system, but installation on Windows may require some troubleshooting.

A good place to start is to search the existing issues on GitHub: https://github.com/vocalpy/vak/issues

Usage

Tutorial

Currently, the easiest way to work with vak is through the command line. You run it with configuration files, using one of a handful of commands.

For more details, please see the "autoannotate" tutorial here: https://vak.readthedocs.io/en/latest/get_started/autoannotate.html
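As a sketch of that command-line workflow, the snippet below builds the calls used in the autoannotate tutorial (`vak prep`, then `vak train` or `vak predict`, each followed by the path to a configuration file) and can optionally run them with `subprocess`. The helper `run_vak` and the configuration file names are hypothetical; check the tutorial for the exact commands and configuration files for your version of vak.

```python
import shlex
import subprocess


def run_vak(command: str, config_path: str, dry_run: bool = False) -> list[str]:
    """Build, and unless dry_run is True also run, a `vak <command> <config file>` call.

    Returns the argument list so it can be logged or inspected.
    """
    argv = ["vak", command, config_path]
    if not dry_run:
        # check=True raises subprocess.CalledProcessError if vak exits with an error
        subprocess.run(argv, check=True)
    return argv


# Hypothetical config file names: one config for training, one for prediction
workflow = [
    ("prep", "train_config.toml"),
    ("train", "train_config.toml"),
    ("prep", "predict_config.toml"),
    ("predict", "predict_config.toml"),
]
for command, config in workflow:
    # dry_run=True only prints the commands; set it to False to actually run vak
    print(shlex.join(run_vak(command, config, dry_run=True)))
```

Running each step as a separate process mirrors how the tutorial is used from a terminal, so the same configuration files work either way.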

How can I use my data with vak?

Please see the How-To Guides in the documentation here: https://vak.readthedocs.io/en/latest/howto/index.html

Support / Contributing

For help, please begin by checking out the Frequently Asked Questions: https://vak.readthedocs.io/en/latest/faq.html

To ask a question about vak, discuss its development, or share how you are using it, please start a new "Q&A" topic on the VocalPy forum with the vak tag: https://forum.vocalpy.org/

To report a bug or request a feature, please use the issue tracker on GitHub: https://github.com/vocalpy/vak/issues

For a guide on how you can contribute to vak, please see: https://vak.readthedocs.io/en/latest/development/index.html

Citation

If you use vak for a publication, please cite its DOI:

License

About

For more on the history of vak please see: https://vak.readthedocs.io/en/latest/reference/about.html

"Why this name, vak?"

It has only three letters, so it is quick to type, and it wasn't taken on PyPI yet. Also, I guess it has something to do with speech: "vak" rhymes with "squawk" and "talk".

Does your library have any poems?

Contributors ✨

Thanks goes to these wonderful people (emoji key):

- avanikop 🐛
- Luke Poeppel 📖
- yardencsGitHub 💻 🤔 📢 📓 💬
- David Nicholson 🐛 💻 🔣 📖 💡 🤔 🚇 🚧 🧑‍🏫 📆 👀 💬 📢 ⚠️ ✅
- marichard123 📖
- Therese Koch 📖 🐛
- alyndanoel 🤔
- adamfishbein 📖
- vivinastase 🐛 📓
- kaiyaprovost 💻 🤔
- ymk12345 🐛 📖
- neuronalX 🐛 📖
- Khoa 📖
- sthaar 📖 🐛 🤔
- yangzheng-121 🐛 🤔

This project follows the all-contributors specification. Contributions of any kind welcome!