Add support for OpenAI `realtime` voice models.

Feature Description

Add support for the new OpenAI realtime models.

Use Case

Building voice-to-voice realtime apps.

Additional context

https://openai.com/index/introducing-the-realtime-api/

As realtime models use WebSockets, maybe we first need to create abstracted functions analogous to `streamText` and `streamObject`.
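To make the `streamText`-style idea concrete, here is a minimal sketch (it assumes nothing about the eventual AI SDK API) of exposing a push-based WebSocket as a pull-based async iterable, the same shape as `streamText`'s `textStream`. `RealtimeEvent`, `SocketLike`, and `socketToEventStream` are hypothetical names; `SocketLike` mirrors the two `WebSocket` handlers the sketch needs, so it can be exercised with a fake socket.

```typescript
// Hypothetical provider-neutral event shape, for illustration only.
type RealtimeEvent =
  | { type: "audio-delta"; base64Pcm: string }
  | { type: "turn-complete" };

// The subset of WebSocket handlers this sketch relies on.
interface SocketLike {
  onmessage: ((event: { data: string }) => void) | null;
  onclose: (() => void) | null;
}

// Bridges socket callbacks to an AsyncIterable. `parse` maps one raw
// provider message to zero or more normalized events.
function socketToEventStream(
  socket: SocketLike,
  parse: (raw: unknown) => RealtimeEvent[],
): AsyncIterable<RealtimeEvent> {
  const buffered: RealtimeEvent[] = [];
  const waiting: ((r: IteratorResult<RealtimeEvent>) => void)[] = [];
  let closed = false;

  // Hand the event to a waiting consumer, or buffer it until one asks.
  const push = (event: RealtimeEvent) => {
    const resolve = waiting.shift();
    if (resolve) resolve({ value: event, done: false });
    else buffered.push(event);
  };

  socket.onmessage = (msg) => {
    for (const event of parse(JSON.parse(msg.data))) push(event);
  };
  socket.onclose = () => {
    closed = true;
    // Release every consumer still waiting for a value.
    for (const resolve of waiting.splice(0)) {
      resolve({ value: undefined, done: true });
    }
  };

  return {
    [Symbol.asyncIterator]() {
      return {
        next(): Promise<IteratorResult<RealtimeEvent>> {
          const event = buffered.shift();
          if (event) return Promise.resolve({ value: event, done: false });
          if (closed) return Promise.resolve({ value: undefined, done: true });
          return new Promise((resolve) => {
            waiting.push(resolve);
          });
        },
      };
    },
  };
}
```

A consumer could then write `for await (const event of stream)` exactly as it would over `textStream`; buffered events are drained before the stream reports completion.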


I think that is reasonable. Would love to get @lgrammel's take on how this would best be implemented.

This is currently limited to openai. In the near term, I recommend using their SDK while we explore how to best implement this feature - there are no gains in compatibility between different providers yet. It'll be a major change and it's only for a single provider at this point, so I want to take some time to think about it.

Okay, but it's still in beta, so...

I have been asked to implement this in an upcoming app. It would be great to have it exposed through `useChat` or another supported hook so we don't have to change things.

Now that OpenAI has released this in their own web app, it would be great to offer similar support in our web apps that currently use this SDK.

Has anyone benchmarked token usage for realtime versus regular completions? Does the realtime API send the whole conversation history to the model under the hood?

Assuming it moves from beta to GA, is this something Vercel plans to support?


Meanwhile, Google's Multimodal Live API and ElevenLabs' realtime voice agents have joined OpenAI, and all three now provide realtime voice APIs. Have you reconsidered the implementation? An abstraction layer from Vercel would be amazing.

ElevenLabs uses a multistep approach for its realtime voice agents: speech-to-text -> `generateText` -> text-to-speech. Maybe this is something you could explore and abstract in the Vercel AI SDK, so we can easily swap the model used for each step?
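The cascaded approach can be sketched as three swappable async steps. This is a minimal, provider-agnostic sketch: `VoicePipeline` and `runVoiceTurn` are hypothetical, and each slot would be backed by a real speech-to-text call, an LLM call (for example the AI SDK's `generateText`), and a text-to-speech call.

```typescript
// Hypothetical step signatures; any STT, LLM, or TTS provider could fill them.
type SpeechToText = (audio: Uint8Array) => Promise<string>;
type TextGenerator = (prompt: string) => Promise<string>;
type TextToSpeech = (text: string) => Promise<Uint8Array>;

interface VoicePipeline {
  transcribe: SpeechToText;
  generate: TextGenerator;
  speak: TextToSpeech;
}

// Runs one user turn through speech-to-text -> LLM -> text-to-speech.
// Each stage is independent, so models can be swapped per step.
async function runVoiceTurn(pipeline: VoicePipeline, userAudio: Uint8Array) {
  const userText = await pipeline.transcribe(userAudio);
  const replyText = await pipeline.generate(userText);
  const replyAudio = await pipeline.speak(replyText);
  return { userText, replyText, replyAudio };
}
```

The trade-off compared with a native speech-to-speech model is latency: each turn waits on three sequential requests unless every stage streams into the next.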

Any update on this now that there are multiple providers with this feature?

Right now this issue is related to #4082.

Both OpenAI's and Google's APIs use WebSockets for realtime streaming. OpenAI's API streams audio and text, while Google's also accepts other input types such as images and video. They have similar connection approaches but differ in payload formats and authentication. A unified abstraction layer with provider-specific adapters in the Vercel AI SDK could support both APIs seamlessly.
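A minimal sketch of the adapter idea, assuming a hypothetical provider-neutral `VoiceEvent` type. The message fields are the ones read by the two hooks posted later in this thread: OpenAI's beta `response.audio.delta`/`response.audio.done` events, and Gemini's `serverContent.modelTurn.parts[].inlineData`, `interrupted`, and `turnComplete`. Authentication and outgoing messages (`setup` vs. `session.update`) would need adapters too; they are left out here.

```typescript
// Hypothetical provider-neutral event.
type VoiceEvent =
  | { kind: "audio"; base64Pcm: string }
  | { kind: "turn-done" }
  | { kind: "interrupted" };

// Each adapter maps one parsed server message to zero or more events.
type Adapter = (message: any) => VoiceEvent[];

// OpenAI Realtime (beta): one event type per message.
const openAiAdapter: Adapter = (m) => {
  switch (m?.type) {
    case "response.audio.delta":
      return m.delta ? [{ kind: "audio", base64Pcm: m.delta }] : [];
    case "response.audio.done":
      return [{ kind: "turn-done" }];
    default:
      return [];
  }
};

// Gemini Live: one message can carry audio parts and status flags together.
const geminiAdapter: Adapter = (m) => {
  const events: VoiceEvent[] = [];
  for (const part of m?.serverContent?.modelTurn?.parts ?? []) {
    if (part?.inlineData?.data) {
      events.push({ kind: "audio", base64Pcm: part.inlineData.data });
    }
  }
  if (m?.serverContent?.interrupted) events.push({ kind: "interrupted" });
  if (m?.serverContent?.turnComplete) events.push({ kind: "turn-done" });
  return events;
};
```

Application code (playback, UI state) then only switches on `VoiceEvent.kind`, and supporting another provider means writing one more adapter.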

Any updates on this?

For those of you who want to try it out, we just added this to LlamaIndexTS, here's an example: https://github.com/run-llama/LlamaIndexTS/tree/main/examples/models/openai/live/browser/open-ai-realtime

Any updates on this?

+1

+1

+1

I created this hook for communicating with Google Gemini's Live API.

Its interface follows a style similar to the AI SDK's hooks.

Feel free to use it until the AI SDK adds support for this feature.

The Hook:


import {

Modality,

type LiveClientMessage,

type LiveClientSetup,

type LiveServerMessage,

type Part,

type UsageMetadata,

} from "@google/genai";

import { Buffer } from "buffer";

import { useCallback, useEffect, useRef, useState } from "react";

const model = "models/gemini-2.5-flash-preview-native-audio-dialog";

const useGeminiLiveAudio = ({

apiKey,

responseModalities = [Modality.AUDIO],

systemInstruction,

onUsageReporting,

onReceivingMessage,

onSocketError,

onSocketClose,

onAiResponseCompleted,

onResponseChunks,

onUserInterruption,

targetTokens,

voiceName = AvailableVoices[0].voiceName,

onTurnComplete,

}: {

apiKey: string;

responseModalities?: Modality[];

systemInstruction?: string;

onUsageReporting?: (usage: UsageMetadata) => void;

onReceivingMessage?: (message: LiveServerMessage) => void;

onSocketError?: (error: unknown) => void;

onSocketClose?: (reason: unknown) => void;

onAiResponseCompleted?: ({

base64Audio,

responseQueue,

}: {

base64Audio: string;

responseQueue: Part[];

}) => void;

onResponseChunks?: (part: Part[]) => void;

onUserInterruption?: () => void;

targetTokens?: number;

voiceName?: string; // Optional voice name, default to first available voice

onTurnComplete?: () => void;

}) => {

const innerResponseQueue = useRef<Part[]>([]);

const [responseQueue, setResponseQueue] = useState<Part[]>([]);

const socketRef = useRef<WebSocket | null>(null);

const [isConnected, setIsConnected] = useState(false);

const _targetTokens = targetTokens ? `${targetTokens}` : undefined;

const turnCompleteRef = useRef(true);

console.log("isConnected:", isConnected);

const sendMessage = useCallback(

(message: LiveClientMessage) => {

if (!isConnected || !socketRef.current) {

console.warn("WebSocket is not connected");

return;

}

console.log("Sending message:", message);

socketRef.current.send(JSON.stringify(message));

},

[isConnected]

);

const connectSocket = useCallback(() => {

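// A non-null ref means the socket is CONNECTING (readyState 0, which is falsy) or OPEN; onclose resets it to null

if (socketRef.current) {

console.warn("WebSocket is already connected or connecting");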

return;

}

const ws = new WebSocket(

`wss://generativelanguage.googleapis.com/ws/google.ai.generativelanguage.v1beta.GenerativeService.BidiGenerateContent?key=${apiKey}`

);

socketRef.current = ws;

socketRef.current.onopen = () => {

console.log("WebSocket connection opened");

setIsConnected(true);

};

socketRef.current.onmessage = async (event: MessageEvent<Blob>) => {

const text = await event.data.text();

const message: LiveServerMessage = JSON.parse(text);

console.log("WebSocket message received:", message);

if (message.usageMetadata) {

onUsageReporting?.(message.usageMetadata);

}

onReceivingMessage?.(message);

console.log("turnComplete:", message.serverContent?.turnComplete);

if (message.serverContent?.turnComplete) {

turnCompleteRef.current = true;

onTurnComplete?.();

const combinedBase64 = combineResponseQueueToBase64Pcm({

responseQueue: innerResponseQueue.current,

});

onAiResponseCompleted?.({

base64Audio: combinedBase64,

responseQueue: innerResponseQueue.current,

});

console.log(

"AI Turn completed, base64 audio:",

responseQueue,

combinedBase64,

innerResponseQueue.current

);

setResponseQueue([]);

innerResponseQueue.current = [];

}

if (message?.serverContent?.modelTurn?.parts) {

const parts: Part[] =

message?.serverContent?.modelTurn?.parts.filter(

(part) => part.inlineData !== undefined

) ?? [];

if (parts.length > 0) {

onResponseChunks?.(parts);

const newResponseQueue = [...innerResponseQueue.current, ...parts];

turnCompleteRef.current = false;

setResponseQueue(newResponseQueue);

innerResponseQueue.current = newResponseQueue;

}

}

if (message?.serverContent?.interrupted) {

onUserInterruption?.();

}

};

socketRef.current.onerror = (error) => {

console.log("WebSocket error:", error);

console.debug("Error:", error);

onSocketError?.(error);

};

socketRef.current.onclose = (event) => {

console.debug("Close:", event.reason);

console.log("Session closed:", event);

socketRef.current = null;

onSocketClose?.(event);

setIsConnected(false);

};

}, [

apiKey,

onAiResponseCompleted,

onReceivingMessage,

onResponseChunks,

onSocketClose,

onSocketError,

onTurnComplete,

onUsageReporting,

onUserInterruption,

responseQueue,

]);

useEffect(() => {

if (isConnected) {

const serverConfig: LiveClientSetup = {

model,

generationConfig: {

responseModalities,

speechConfig: {

voiceConfig: {

prebuiltVoiceConfig: {

voiceName,

},

},

},

},

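// The Live API expects a Content object ({ parts: [{ text }] }); the instruction text does not belong in `role`

systemInstruction: systemInstruction

? { parts: [{ text: systemInstruction }] }

: undefined,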

contextWindowCompression: {

slidingWindow: { targetTokens: _targetTokens },

},

};

sendMessage({

setup: serverConfig,

});

} else {

console.log("WebSocket is not connected");

}

// eslint-disable-next-line react-hooks/exhaustive-deps

}, [isConnected]);

const disconnectSocket = useCallback(() => {

socketRef.current?.close();

socketRef.current = null;

}, []);

useEffect(() => {

return () => {

disconnectSocket();

};

}, [disconnectSocket]);

const sendRealtimeInput = useCallback(

(message: string) => {

if (!isConnected || !socketRef.current) {

console.warn("WebSocket is not connected");

return;

}

const messageToSend: LiveClientMessage = {

realtimeInput: {

audio: {

data: message,

mimeType: "audio/pcm;rate=16000",

},

},

};

console.log("Sending message:", messageToSend);

sendMessage(messageToSend);

},

[isConnected, sendMessage]

);

return {

isConnected,

connectSocket,

disconnectSocket,

sendRealtimeInput,

responseQueue,

};

};

const combineResponseQueueToBase64Pcm = ({

responseQueue,

}: {

responseQueue: Part[];

}) => {

const pcmChunks: Uint8Array[] = responseQueue.map((part) => {

if (part?.inlineData?.data) {

const buf = Buffer.from(part.inlineData?.data, "base64"); // decode base64 to raw bytes

const toReturn = new Uint8Array(

buf.buffer,

buf.byteOffset,

buf.byteLength

);

return toReturn;

} else {

return new Uint8Array();

}

});

// Calculate total length

const totalLength = pcmChunks.reduce((acc, chunk) => acc + chunk.length, 0);

// Create one big Uint8Array

const combined = new Uint8Array(totalLength);

let offset = 0;

for (const chunk of pcmChunks) {

combined.set(chunk, offset);

offset += chunk.length;

}

// Convert back to base64

const combinedBase64 = Buffer.from(combined.buffer).toString("base64");

return combinedBase64;

};

const AvailableVoices: {

voiceName: VoiceNameType;

description: string;

}[] = [

{ voiceName: "Zephyr", description: "Bright" },

{ voiceName: "Puck", description: "Upbeat" },

{ voiceName: "Charon", description: "Informative" },

{ voiceName: "Kore", description: "Firm" },

{ voiceName: "Fenrir", description: "Excitable" },

{ voiceName: "Leda", description: "Youthful" },

{ voiceName: "Orus", description: "Firm" },

{ voiceName: "Aoede", description: "Breezy" },

{ voiceName: "Callirrhoe", description: "Easy-going" },

{ voiceName: "Autonoe", description: "Bright" },

{ voiceName: "Enceladus", description: "Breathy" },

{ voiceName: "Iapetus", description: "Clear" },

{ voiceName: "Umbriel", description: "Easy-going" },

{ voiceName: "Algieba", description: "Smooth" },

{ voiceName: "Despina", description: "Smooth" },

{ voiceName: "Erinome", description: "Clear" },

{ voiceName: "Algenib", description: "Gravelly" },

{ voiceName: "Rasalgethi", description: "Informative" },

{ voiceName: "Laomedeia", description: "Upbeat" },

{ voiceName: "Achernar", description: "Soft" },

{ voiceName: "Alnilam", description: "Firm" },

{ voiceName: "Schedar", description: "Even" },

{ voiceName: "Gacrux", description: "Mature" },

{ voiceName: "Pulcherrima", description: "Forward" },

{ voiceName: "Achird", description: "Friendly" },

{ voiceName: "Zubenelgenubi", description: "Casual" },

{ voiceName: "Vindemiatrix", description: "Gentle" },

{ voiceName: "Sadachbia", description: "Lively" },

{ voiceName: "Sadaltager", description: "Knowledgeable" },

{ voiceName: "Sulafat", description: "Warm" },

];

type VoiceNameType =

| "Zephyr"

| "Puck"

| "Charon"

| "Kore"

| "Fenrir"

| "Leda"

| "Orus"

| "Aoede"

| "Callirrhoe"

| "Autonoe"

| "Enceladus"

| "Iapetus"

| "Umbriel"

| "Algieba"

| "Despina"

| "Erinome"

| "Algenib"

| "Rasalgethi"

| "Laomedeia"

| "Achernar"

| "Alnilam"

| "Schedar"

| "Gacrux"

| "Pulcherrima"

| "Achird"

| "Zubenelgenubi"

| "Vindemiatrix"

| "Sadachbia"

| "Sadaltager"

| "Sulafat";

export { AvailableVoices, useGeminiLiveAudio };

export type { VoiceNameType };
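This hook (and the OpenAI hook posted further down) imports `Buffer` from the `buffer` npm package only to merge base64 PCM chunks. If you'd rather not ship that polyfill to the browser, here is a sketch using just `atob`/`btoa`, which are available in browsers and in Node 16+. `concatBase64Pcm` is a hypothetical drop-in for the decode-and-concatenate step. Chunks are decoded before joining because base64 strings can't simply be concatenated: each chunk may end in `=` padding.

```typescript
// Decodes each base64 chunk, concatenates the raw PCM bytes, and re-encodes.
function concatBase64Pcm(chunks: string[]): string {
  const decoded = chunks
    .filter((c) => c.length > 0)
    .map((c) => Uint8Array.from(atob(c), (ch) => ch.charCodeAt(0)));

  const total = decoded.reduce((n, bytes) => n + bytes.length, 0);
  const combined = new Uint8Array(total);
  let offset = 0;
  for (const bytes of decoded) {
    combined.set(bytes, offset);
    offset += bytes.length;
  }

  // Build the binary string in slices to stay under argument-count limits
  // when the response is long.
  let binary = "";
  for (let i = 0; i < combined.length; i += 0x8000) {
    binary += String.fromCharCode(...Array.from(combined.subarray(i, i + 0x8000)));
  }
  return btoa(binary);
}
```

Usage: `concatBase64Pcm(responseQueue.map((p) => p.inlineData?.data ?? ""))` would yield the same base64 string as `combineResponseQueueToBase64Pcm`.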

Usage Example:


import type {

LiveServerMessage,

MediaModality,

UsageMetadata,

} from "@google/genai";

import { Modality } from "@google/genai/web";

import { useCallback, useRef, useState } from "react";

import {

AvailableVoices,

useGeminiLiveAudio,

type VoiceNameType,

} from "./hooks/useGeminiLiveAudio";

//console.log("Google API Key:", import.meta.env.VITE_GOOGLE_API_KEY);

const App = () => {

const [recording, setRecording] = useState(false);

const mediaRecorderRef = useRef<MediaRecorder | null>(null);

const [messages, setMessages] = useState<LiveServerMessage[]>([]);

const audioContextRef = useRef<AudioContext | null>(null);

const [selectedVoice, setSelectedVoice] = useState<VoiceNameType>(

AvailableVoices[0].voiceName

);

const {

connectSocket,

disconnectSocket,

isConnected,

sendRealtimeInput,

responseQueue,

} = useGeminiLiveAudio({

apiKey: import.meta.env.VITE_GOOGLE_API_KEY,

voiceName: selectedVoice,

responseModalities: [Modality.AUDIO],

systemInstruction:

"You are a helpful assistant and answer in a friendly tone.",

onUsageReporting: (usage) => {

const tokensUsage = reportIfTokensUsage({ usageMetadata: usage });

console.log("New Usage Report:", tokensUsage);

},

onAiResponseCompleted({ base64Audio, responseQueue }) {

console.log("response completed", base64Audio);

if (!(base64Audio && typeof base64Audio === "string")) {

return;

}

if (!audioContextRef.current) {

audioContextRef.current = new AudioContext({ sampleRate: 24000 });

}

try {

const audioBuffer = base64ToAudioBuffer(

base64Audio,

audioContextRef.current

);

const source = audioContextRef.current.createBufferSource();

source.buffer = audioBuffer;

source.connect(audioContextRef.current.destination);

source.start(0);

} catch (err) {

console.error("Playback error:", err);

}

},

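onUserInterruption: () => {

// Closing the context stops audio that is still playing; dropping the ref alone would let it continue

audioContextRef.current?.close();

audioContextRef.current = null;

},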

onReceivingMessage: (message) => {

setMessages((prev) => [...prev, message]);

},

});

const [recordedPCM, setRecordedPCM] = useState<string>("");

const startRecording = useCallback(async () => {

const stream = await navigator.mediaDevices.getUserMedia({ audio: true });

const mediaRecorder = new MediaRecorder(stream);

mediaRecorderRef.current = mediaRecorder;

mediaRecorder.ondataavailable = async (event) => {

const audioChunks = [];

if (event.data.size > 0) {

audioChunks.push(event.data);

const blob = new Blob(audioChunks, { type: "audio/webm" });

const arrayBuffer = await blob.arrayBuffer();

const audioContext = new AudioContext(); // default 48000 Hz

const audioBuffer = await audioContext.decodeAudioData(arrayBuffer);

// ✅ Resample to 16000 Hz PCM

const resampledBuffer = await resampleAudioBuffer(audioBuffer, 16000);

const pcmData = convertToPCM16(resampledBuffer);

const base64String = arrayBufferToBase64(pcmData.buffer);

console.log("data:audio/pcm;rate=16000;base64," + base64String);

setRecordedPCM(base64String);

}

};

setRecordedPCM("");

mediaRecorder.start();

setRecording(true);

}, []);

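const stopRecording = useCallback(() => {

mediaRecorderRef.current?.stop();

// Release the microphone so the browser's recording indicator turns off

mediaRecorderRef.current?.stream.getTracks().forEach((track) => track.stop());

setRecording(false);

}, []);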

return (

<div style={{ padding: "20px" }}>

<h1>Google Gemini Live Audio</h1>

<h2

style={{

color: "lime",

}}

>

IMPORTANT: Before stopping the recording, stay silent for a moment so

that Gemini can detect that your turn is over and start its response

</h2>

<p>Status: {isConnected ? "Connected" : "Disconnected"}</p>

{isConnected ? (

<div className=" gap-3 flex flex-col">

<button onClick={disconnectSocket}>Disconnect</button>

</div>

) : (

<button onClick={connectSocket}>Connect</button>

)}

<div>

<label htmlFor="voiceSelect">Select Voice:</label>

<select

id="voiceSelect"

value={selectedVoice}

onChange={(e) => setSelectedVoice(e.target.value as VoiceNameType)}

>

{AvailableVoices.map((voice) => (

<option key={voice.voiceName} value={voice.voiceName}>

{`${voice.voiceName} -- ${voice.description}`}

</option>

))}

</select>

<div>

<h4>Selected Voice:</h4>

<p>{selectedVoice}</p>

</div>

</div>

<div style={{ marginTop: "20px" }}>

<h3>Messages:</h3>

{messages.map((message, index) => (

<p key={index}>{JSON.stringify(message)}</p>

))}

</div>

<button

onClick={() => {

console.log(JSON.stringify(responseQueue));

}}

>

Log Response Queue

</button>

<button

onClick={() => {

console.log(JSON.stringify(messages));

}}

>

Log Messages

</button>

<div>

<button onClick={recording ? stopRecording : startRecording}>

{recording ? "Stop Recording" : "Start Recording"}

</button>

</div>

<button

onClick={() => {

audioContextRef.current?.close(); // close (not just suspend) so the context is released

audioContextRef.current = null;

}}

>

Stop Speaking

</button>

<button

onClick={() => {

if (recordedPCM.length === 0) {

console.warn("No recorded PCM to play");

return;

}

const playNext = (index = 0) => {

console.log("Playing PCM index:", index);

const audioContext = new AudioContext({ sampleRate: 24000 });

const base64Audio = recordedPCM;

if (!base64Audio) {

console.warn("No recorded PCM to play");

return;

}

const audioBuffer = base64ToAudioBuffer(base64Audio, audioContext);

const source = audioContext.createBufferSource();

source.buffer = audioBuffer;

source.connect(audioContext.destination);

source.start(0);

};

playNext();

}}

>

Play Recorded PCM at 24 kHz (sped up)

</button>

{isConnected && (

<button

onClick={() => {

if (recordedPCM.length === 0) {

console.warn("No recorded PCM to send");

return;

}

sendRealtimeInput(recordedPCM);

}}

>

Send

</button>

)}

<div>

<button

onClick={() => {

playPCMBase64({

base64String: recordedPCM,

sampleRate: 16000,

});

}}

>

Play Recorded PCM

</button>

</div>

</div>

);

};

function playPCMBase64({

base64String,

sampleRate,

}: {

base64String: string;

sampleRate: number;

}) {

// Convert base64 to ArrayBuffer

const binaryString = atob(base64String);

const len = binaryString.length;

const bytes = new Uint8Array(len);

for (let i = 0; i < len; i++) {

bytes[i] = binaryString.charCodeAt(i);

}

// Convert to Int16Array

const pcm16 = new Int16Array(bytes.buffer);

// Convert to Float32Array (range -1.0 to 1.0)

const float32 = new Float32Array(pcm16.length);

for (let i = 0; i < pcm16.length; i++) {

float32[i] = pcm16[i] / 32768; // normalize

}

// Use Web Audio API to play

const context = new AudioContext({ sampleRate });

const buffer = context.createBuffer(1, float32.length, sampleRate);

buffer.copyToChannel(float32, 0);

const source = context.createBufferSource();

source.buffer = buffer;

source.connect(context.destination);

source.start();

}

// Helper: Resample AudioBuffer to 16000 Hz

async function resampleAudioBuffer(

audioBuffer: AudioBuffer,

targetSampleRate: number

) {

const offlineCtx = new OfflineAudioContext(

audioBuffer.numberOfChannels,

audioBuffer.duration * targetSampleRate,

targetSampleRate

);

const source = offlineCtx.createBufferSource();

source.buffer = audioBuffer;

source.connect(offlineCtx.destination);

source.start();

const resampled = await offlineCtx.startRendering();

return resampled;

}

// Helper: Convert AudioBuffer to Int16 PCM

function convertToPCM16(audioBuffer: AudioBuffer) {

const channelData = audioBuffer.getChannelData(0); // mono

const pcm16 = new Int16Array(channelData.length);

for (let i = 0; i < channelData.length; i++) {

const s = Math.max(-1, Math.min(1, channelData[i]));

pcm16[i] = s < 0 ? s * 0x8000 : s * 0x7fff;

}

return pcm16;

}

// Helper: Convert ArrayBuffer to Base64

function arrayBufferToBase64(buffer: ArrayBuffer) {

let binary = "";

const bytes = new Uint8Array(buffer);

for (let i = 0; i < bytes.byteLength; i++) {

binary += String.fromCharCode(bytes[i]);

}

return btoa(binary);

}

function base64ToAudioBuffer(

base64: string,

audioContext: AudioContext

): AudioBuffer {

const binary = atob(base64);

const buffer = new ArrayBuffer(binary.length);

const view = new DataView(buffer);

for (let i = 0; i < binary.length; i++) {

view.setUint8(i, binary.charCodeAt(i));

}

const pcm = new Int16Array(buffer);

const float32 = new Float32Array(pcm.length);

for (let i = 0; i < pcm.length; i++) {

float32[i] = pcm[i] / 32768; // Normalize

}

const audioBuffer = audioContext.createBuffer(

1, // mono

float32.length,

24000 // sampleRate

);

audioBuffer.getChannelData(0).set(float32);

return audioBuffer;

}

const reportIfTokensUsage = ({

usageMetadata,

}: {

usageMetadata: UsageMetadata;

}): TokensUsageType => {

let inputTextTokens = 0;

let inputAudioTokens = 0;

let outputTextTokens = 0;

let outputAudioTokens = 0;

for (const value of usageMetadata.promptTokensDetails ?? []) {

if (value.modality === (Modality.TEXT as unknown as MediaModality)) {

inputTextTokens += value.tokenCount ?? 0;

} else if (

value.modality === (Modality.AUDIO as unknown as MediaModality)

) {

inputAudioTokens += value.tokenCount ?? 0;

}

}

for (const value of usageMetadata.responseTokensDetails ?? []) {

if (value.modality === (Modality.TEXT as unknown as MediaModality)) {

outputTextTokens += value.tokenCount ?? 0;

} else if (

value.modality === (Modality.AUDIO as unknown as MediaModality)

) {

outputAudioTokens += value.tokenCount ?? 0;

}

}

const usage: TokensUsageType = {

input: {

audioTokens: inputAudioTokens,

textTokens: inputTextTokens,

},

output: {

audioTokens: outputAudioTokens,

textTokens: outputTextTokens,

},

};

return usage;

};

type TokensUsageType = {

input: {

textTokens: number;

audioTokens: number;

};

output: {

textTokens: number;

audioTokens: number;

};

};

export default App;
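The example above resamples microphone audio to 16 kHz with `OfflineAudioContext`, which only exists in browsers. Where Web Audio isn't available (for example, a Node relay server), linear interpolation is a simple substitute. This is a minimal sketch, not what the example uses: `resampleLinear` is a hypothetical helper, and because it applies no low-pass filter, downsampling can alias. Its output can be fed to `convertToPCM16` as before.

```typescript
// Resamples mono Float32 samples from `fromRate` to `toRate` by linearly
// interpolating between the two nearest input samples.
function resampleLinear(
  input: Float32Array,
  fromRate: number,
  toRate: number,
): Float32Array {
  if (fromRate === toRate) return input.slice();
  const outLength = Math.round((input.length * toRate) / fromRate);
  const output = new Float32Array(outLength);
  const step = fromRate / toRate; // input samples advanced per output sample
  for (let i = 0; i < outLength; i++) {
    const pos = i * step;
    const left = Math.min(Math.floor(pos), input.length - 1);
    const right = Math.min(left + 1, input.length - 1);
    const frac = pos - left;
    output[i] = input[left] * (1 - frac) + input[right] * frac;
  }
  return output;
}
```

For example, `resampleLinear(audioBuffer.getChannelData(0), audioBuffer.sampleRate, 16000)` would produce mono samples at the rate the hook's `audio/pcm;rate=16000` input declares.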

Google Live API Documentation: https://ai.google.dev/gemini-api/docs/live

GitHub Repo: https://github.com/OmarThinks/gemini-live-audio-project

Feature Request: #7907

+1

+1

+1

+1

+1

+100

+1

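I created this hook for communicating with OpenAI's Realtime API using audio. It has three parts: an Express endpoint that mints an ephemeral session key, the hook itself, and an example app.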

Express endpoint:

require("dotenv").config();

const cors = require("cors");

const express = require("express");

const app = express();

const port = 3000; // You can choose any available port

app.use(cors());

// Define a basic route

app.get("/", (req, res) => {

res.send("Hello, Express!");

});

app.get("/session", async (req, res) => {

const r = await fetch("https://api.openai.com/v1/realtime/sessions", {

method: "POST",

headers: {

Authorization: `Bearer ${process.env.OPENAI_API_KEY}`,

"Content-Type": "application/json",

},

body: JSON.stringify({

model: "gpt-4o-realtime-preview-2025-06-03",

voice: "verse",

}),

});

const data = await r.json();

// Forward OpenAI's status code with the JSON body so the client can detect failures such as an invalid API key

res.status(r.status).send(data);

});

// Start the server

app.listen(port, () => {

console.log(`Express app listening at http://localhost:${port}`);

});

useOpenAiRealTime Hook:

import { useCallback, useEffect, useRef, useState } from "react";

import { Buffer } from "buffer";

const useOpenAiRealTime = ({

instructions,

onMessageReceived,

onAudioResponseComplete,

onUsageReport,

onReadyToReceiveAudio,

onSocketClose,

onSocketError,

}: {

instructions: string;

onMessageReceived: (message: object) => void;

onAudioResponseComplete: (base64Audio: string) => void;

onUsageReport: (usage: object) => void;

onReadyToReceiveAudio: () => void;

onSocketClose: () => void;

onSocketError?: (error: any) => void;

}) => {

const webSocketRef = useRef<null | WebSocket>(null);

const [isWebSocketConnecting, setIsWebSocketConnecting] = useState(false);

const [isWebSocketConnected, setIsWebSocketConnected] = useState(false);

const [isInitialized, setIsInitialized] = useState(false);

const [isAiResponseInProgress, setIsAiResponseInProgress] = useState(false);

const [transcription, setTranscription] = useState<string>("");

const responseQueueRef = useRef<string[]>([]);

const resetHookState = useCallback(() => {

webSocketRef.current = null;

setIsWebSocketConnecting(false);

setIsWebSocketConnected(false);

setIsInitialized(false);

responseQueueRef.current = [];

setIsAiResponseInProgress(false);

setTranscription("");

}, []);

const connectWebSocket = useCallback(

async ({ ephemeralKey }: { ephemeralKey: string }) => {

// Bail out before setting the "connecting" flag, so an existing socket can't leave it stuck on

if (webSocketRef.current) {

return;

}

setIsWebSocketConnecting(true);

try {

// Use the same model the Express /session endpoint created the ephemeral key for

const url = `wss://api.openai.com/v1/realtime?model=gpt-4o-realtime-preview-2025-06-03&token=${ephemeralKey}`;

const ws = new WebSocket(url, [

"realtime",

"openai-insecure-api-key." + ephemeralKey,

"openai-beta.realtime-v1",

]);

ws.addEventListener("open", () => {

console.log("Connected to server.");

setIsWebSocketConnected(true);

});

ws.addEventListener("close", () => {

console.log("Disconnected from server.");

setIsWebSocketConnected(false);

resetHookState();

onSocketClose();

});

ws.addEventListener("error", (error) => {

console.error("WebSocket error:", error);

onSocketError?.(error);

});

ws.addEventListener("message", (event) => {

//console.log("WebSocket message:", event.data);

// convert message to an object

const messageObject = JSON.parse(event.data);

onMessageReceived(messageObject);

if (messageObject.type === "response.created") {

setIsAiResponseInProgress(true);

setTranscription("");

}

if (messageObject.type === "response.audio.done") {

setIsAiResponseInProgress(false);

const combinedBase64 = combineBase64ArrayList(

responseQueueRef.current

);

responseQueueRef.current = [];

onAudioResponseComplete(combinedBase64);

}

if (messageObject.type === "response.audio.delta") {

const audioChunk = messageObject.delta;

if (audioChunk) {

responseQueueRef.current.push(audioChunk);

}

}

if (messageObject?.response?.usage) {

onUsageReport(messageObject.response.usage);

}

if (messageObject.type === "session.updated") {

setIsInitialized(true);

onReadyToReceiveAudio();

}

if (messageObject.type === "response.audio_transcript.delta") {

setTranscription((prev) => prev + messageObject.delta);

}

});

webSocketRef.current = ws;

} catch (error) {

console.error("Error connecting to WebSocket:", error);

} finally {

setIsWebSocketConnecting(false);

}

},

[

onAudioResponseComplete,

onMessageReceived,

onReadyToReceiveAudio,

onSocketClose,

onSocketError,

onUsageReport,

resetHookState,

]

);

const disconnectSocket = useCallback(() => {

if (webSocketRef.current) {

webSocketRef.current.close();

}

}, []);

useEffect(() => {

return () => {

disconnectSocket();

};

// eslint-disable-next-line react-hooks/exhaustive-deps

}, []);

useEffect(() => {

if (isWebSocketConnected) {

const event = {

type: "session.update",

session: {

instructions,

},

};

webSocketRef.current?.send(JSON.stringify(event));

}

}, [instructions, isWebSocketConnected]);

const sendMessage = useCallback(

(messageObject: { [key: string]: any }) => {

if (

webSocketRef.current &&

webSocketRef.current.readyState === WebSocket.OPEN &&

isWebSocketConnected &&

isInitialized

) {

webSocketRef.current.send(JSON.stringify(messageObject));

}

},

[isInitialized, isWebSocketConnected]

);

const sendBase64AudioStringChunk = useCallback(

(base64String: string) => {

if (webSocketRef.current) {

sendMessage({

type: "input_audio_buffer.append",

audio: base64String,

});

}

},

[sendMessage]

);

return {

isWebSocketConnected,

connectWebSocket,

disconnectSocket,

isWebSocketConnecting,

sendBase64AudioStringChunk,

isInitialized,

isAiResponseInProgress,

transcription,

};

};

const combineBase64ArrayList = (base64Array: string[]): string => {

const pcmChunks: Uint8Array[] = base64Array.map((base64Text) => {

if (base64Text) {

const buf = Buffer.from(base64Text, "base64"); // decode base64 to raw bytes

const toReturn = new Uint8Array(

buf.buffer,

buf.byteOffset,

buf.byteLength

);

return toReturn;

} else {

return new Uint8Array();

}

});

// Calculate total length

const totalLength = pcmChunks.reduce((acc, chunk) => acc + chunk.length, 0);

// Create one big Uint8Array

const combined = new Uint8Array(totalLength);

let offset = 0;

for (const chunk of pcmChunks) {

combined.set(chunk, offset);

offset += chunk.length;

}

// Convert back to base64

const combinedBase64 = Buffer.from(combined.buffer).toString("base64");

return combinedBase64;

};

export { useOpenAiRealTime, combineBase64ArrayList };
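The hook's `session.update` only sets `instructions`. Below is a sketch that also states the audio formats and turn detection explicitly. The field names follow OpenAI's beta Realtime event reference; treat them as assumptions and check the current docs. `buildSessionUpdate` is a hypothetical helper. The documented `pcm16` format is 16-bit, mono, little-endian PCM at 24 kHz, so chunks sent with `input_audio_buffer.append` should be captured or resampled at that rate. With `server_vad`, the server detects the end of speech and, by default, starts a response itself, which is why the hook never sends `response.create`.

```typescript
// The subset of a beta `session.update` event used in this sketch.
interface SessionUpdateEvent {
  type: "session.update";
  session: {
    instructions: string;
    input_audio_format: "pcm16";
    output_audio_format: "pcm16";
    turn_detection: { type: "server_vad" } | null;
  };
}

// Builds the event the hook sends after connecting, with formats made explicit.
function buildSessionUpdate(instructions: string): SessionUpdateEvent {
  return {
    type: "session.update",
    session: {
      instructions,
      input_audio_format: "pcm16", // 16-bit mono little-endian PCM, 24 kHz
      output_audio_format: "pcm16",
      turn_detection: { type: "server_vad" }, // server decides when a turn ends
    },
  };
}
```

Inside the hook, `webSocketRef.current?.send(JSON.stringify(buildSessionUpdate(instructions)))` would replace the inline event object.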

Example:

import "./App.css";

// Just a dummy base64 24K audio for pinging, it says "Hey, can you hear me?"

import { dummyBase64Audio24K } from "./samples/dummyBase64Audio";

import {

combineBase64ArrayList,

useOpenAiRealTime,

} from "./hooks/useOpenAiRealTimeHook";

import { useCallback, useEffect, useRef, useState } from "react";

function App() {

const [messages, setMessages] = useState<object[]>([]);

const isAudioPlayingRef = useRef(false);

const onIsAudioPlayingUpdate = useCallback((playing: boolean) => {

isAudioPlayingRef.current = playing;

}, []);

const { isAudioPlaying, playAudio, stopPlayingAudio } = useAudioPlayer({

onIsAudioPlayingUpdate,

});

const enqueueMessage = useCallback((message: object) => {

console.log("Got response chunk");

setMessages((prevMessages) => [...prevMessages, message]);

}, []);

const onAudioResponseComplete = useCallback(

(base64String: string) => {

console.log("Playing full response");

playAudio({

sampleRate: 24000,

base64Text: base64String,

});

},

[playAudio]

);

const onUsageReport = useCallback((usage: object) => {

console.log("Usage report:", usage);

}, []);

const onSocketClose = useCallback(() => {

console.log("onSocketClose");

//stopStreaming();

stopPlayingAudio();

}, [stopPlayingAudio]);

const onReadyToReceiveAudio = useCallback(() => {

//startStreaming();

}, []);

const {

isWebSocketConnected,

connectWebSocket,

disconnectSocket,

isWebSocketConnecting,

sendBase64AudioStringChunk,

isAiResponseInProgress,

isInitialized,

transcription,

} = useOpenAiRealTime({

instructions: "You are a helpful assistant.",

onMessageReceived: enqueueMessage,

onAudioResponseComplete,

onUsageReport,

onSocketClose,

onReadyToReceiveAudio,

});

const ping = useCallback(() => {

sendBase64AudioStringChunk(dummyBase64Audio24K);

}, [sendBase64AudioStringChunk]);

const [chunks, setChunks] = useState<string[]>([]);

console.log("before onAudioStreamerChunk: ", isAiResponseInProgress);

const onAudioStreamerChunk = useCallback(

(chunk: string) => {

setChunks((prev) => [...prev, chunk]);

if (

isWebSocketConnected &&

isInitialized &&

!isAiResponseInProgress &&

!isAudioPlayingRef.current

) {

console.log("Sending audio chunk:", chunk.slice(0, 50) + "..."); // base64 string

sendBase64AudioStringChunk(chunk);

}

},

[

isAiResponseInProgress,

isInitialized,

isWebSocketConnected,

sendBase64AudioStringChunk,

]

);

const { isStreaming, startStreaming, stopStreaming } = useAudioStreamer({

// OpenAI documents pcm16 input as 16-bit mono little-endian PCM at 24 kHz, so capture at that rate

sampleRate: 24000,

interval: 250, // emit every 250 milliseconds

onAudioChunk: onAudioStreamerChunk,

});

const playAudioRecorderChunks = useCallback(() => {

const combined = combineBase64ArrayList(chunks);

// Play back at the same rate the chunks were captured at

playAudio({ base64Text: combined, sampleRate: 24000 });

}, [chunks, playAudio]);

const _connectWebSocket = useCallback(async () => {

const tokenResponse = await fetch("http://localhost:3000/session");

const data = await tokenResponse.json();

const EPHEMERAL_KEY = data.client_secret.value;

connectWebSocket({ ephemeralKey: EPHEMERAL_KEY });

}, [connectWebSocket]);

useEffect(() => {

if (isWebSocketConnected) {

if (isInitialized) {

console.log("Starting audio streaming");

startStreaming();

}

} else {

console.log("Stopping audio streaming");

stopStreaming();

}

// eslint-disable-next-line react-hooks/exhaustive-deps

}, [isWebSocketConnected, isInitialized]);

return (

<div

className=""

style={{

width: "100vw",

backgroundColor: "black",

minHeight: "100vh",

gap: 16,

display: "flex",

flexDirection: "column",

padding: 16,

}}

>

<div>

<button

onClick={() => {

playAudio({

base64Text: dummyBase64Audio24K,

sampleRate: 24000,

});

}}

>

Play 24K string

</button>

</div>

<div>

{isWebSocketConnected && <button onClick={ping}>Ping</button>}

{isWebSocketConnecting ? (

<span>Connecting...</span>

) : isWebSocketConnected ? (

<button onClick={disconnectSocket}>disconnectSocket</button>

) : (

<button onClick={_connectWebSocket}>connectWebSocket</button>

)}

<button

onClick={() => {

console.log("Log Messages:", messages);

}}

>

Log Messages

</button>

</div>

<hr />

<div>

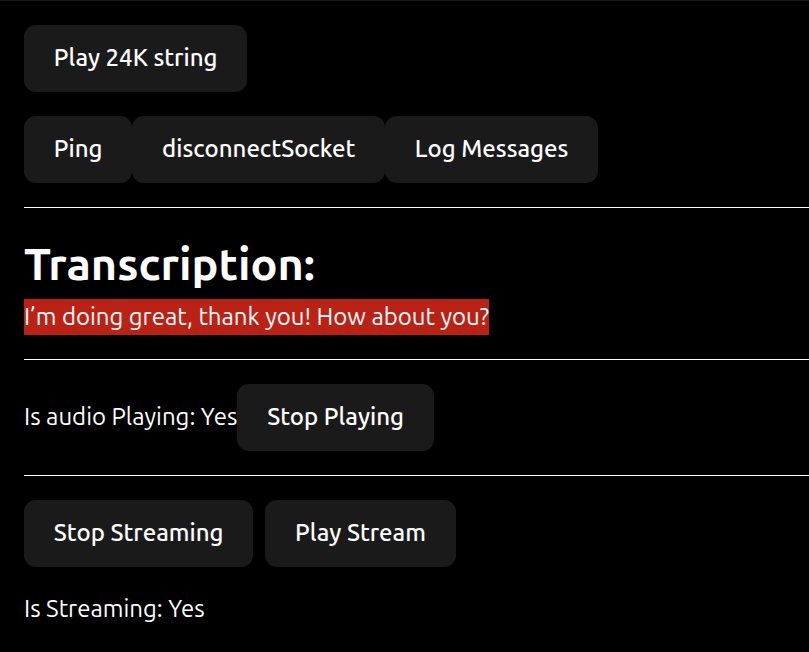

<h2 className=" text-[30px] font-bold">Transcription:</h2>

<p>{transcription}</p>

</div>

<hr />

<div className=" flex-row flex items-center">

<p>Is audio Playing: {isAudioPlaying ? "Yes" : "No"}</p>

{isAudioPlaying && (

<button onClick={stopPlayingAudio}>Stop Playing</button>

)}

</div>

<hr />

<div className=" flex flex-row items-center gap-2">

{!isStreaming && (

<button onClick={startStreaming}>Start Streaming</button>

)}

{isStreaming && <button onClick={stopStreaming}>Stop Streaming</button>}

{isStreaming && (

<button onClick={playAudioRecorderChunks}>Play Stream</button>

)}

<br />

</div>

<p>Is Streaming: {isStreaming ? "Yes" : "No"}</p>

</div>

);

}

function floatTo16BitPCM(float32Array: Float32Array): Int16Array {

const buffer = new Int16Array(float32Array.length);

for (let i = 0; i < float32Array.length; i++) {

const s = Math.max(-1, Math.min(1, float32Array[i]));

buffer[i] = s < 0 ? s * 0x8000 : s * 0x7fff;

}

return buffer;

}

function encodePCMToBase64(int16Array: Int16Array): string {

const buffer = new Uint8Array(int16Array.buffer);

let binary = "";

const chunkSize = 0x8000;

for (let i = 0; i < buffer.length; i += chunkSize) {

const chunk = buffer.subarray(i, i + chunkSize);

binary += String.fromCharCode.apply(null, chunk as unknown as number[]);

}

return btoa(binary);

}

const useAudioStreamer = ({

sampleRate,

interval,

onAudioChunk,

}: {

sampleRate: number;

interval: number;

onAudioChunk: (audioChunk: string) => void;

}) => {

const [isStreaming, setIsStreaming] = useState(false);

const updateIsStreaming = useCallback((streaming: boolean) => {

setIsStreaming(streaming);

}, []);

const mediaStreamRef = useRef<MediaStream | null>(null);

const audioContextRef = useRef<AudioContext | null>(null);

const processorRef = useRef<ScriptProcessorNode | null>(null);

const bufferRef = useRef<Float32Array[]>([]);

const intervalIdRef = useRef<number | null>(null);

const startStreaming = useCallback(async () => {

if (isStreaming) return;

try {

const stream = await navigator.mediaDevices.getUserMedia({ audio: true });

mediaStreamRef.current = stream;

const audioContext = new AudioContext({ sampleRate });

audioContextRef.current = audioContext;

const source = audioContext.createMediaStreamSource(stream);

// ScriptProcessorNode (deprecated but still widely supported).

const processor = audioContext.createScriptProcessor(4096, 1, 1);

processorRef.current = processor;

source.connect(processor);

processor.connect(audioContext.destination);

processor.onaudioprocess = (e) => {

const inputData = e.inputBuffer.getChannelData(0);

bufferRef.current.push(new Float32Array(inputData));

};

// Send chunks every interval

intervalIdRef.current = window.setInterval(() => {

if (bufferRef.current.length === 0) return;

// Flatten buffered audio

const length = bufferRef.current.reduce(

(acc, cur) => acc + cur.length,

0

);

const merged = new Float32Array(length);

let offset = 0;

for (const chunk of bufferRef.current) {

merged.set(chunk, offset);

offset += chunk.length;

}

bufferRef.current = [];

// Convert -> PCM16 -> Base64

const pcm16 = floatTo16BitPCM(merged);

const base64 = encodePCMToBase64(pcm16);

onAudioChunk(base64);

}, interval);

updateIsStreaming(true);

} catch (err) {

console.error("Error starting audio stream:", err);

}

}, [interval, isStreaming, onAudioChunk, sampleRate, updateIsStreaming]);

const stopStreaming = useCallback(() => {

if (!isStreaming) return;

if (intervalIdRef.current) {

clearInterval(intervalIdRef.current);

intervalIdRef.current = null;

}

if (processorRef.current) {

processorRef.current.disconnect();

processorRef.current = null;

}

if (audioContextRef.current) {

audioContextRef.current.close();

audioContextRef.current = null;

}

if (mediaStreamRef.current) {

mediaStreamRef.current.getTracks().forEach((t) => t.stop());

mediaStreamRef.current = null;

}

bufferRef.current = [];

updateIsStreaming(false);

}, [isStreaming, updateIsStreaming]);

return { isStreaming, startStreaming, stopStreaming };

};

function base64ToFloat32Array(base64String: string): Float32Array {

// Decode base64 → Uint8Array

const binaryString = atob(base64String);

const len = binaryString.length;

const bytes = new Uint8Array(len);

for (let i = 0; i < len; i++) {

bytes[i] = binaryString.charCodeAt(i);

}

// Convert Uint8Array → Int16Array

const pcm16 = new Int16Array(bytes.buffer);

// Convert Int16 → Float32 (-1 to 1)

const float32 = new Float32Array(pcm16.length);

for (let i = 0; i < pcm16.length; i++) {

float32[i] = pcm16[i] / 32768;

}

return float32;

}

const useAudioPlayer = ({

onIsAudioPlayingUpdate,

}: {

onIsAudioPlayingUpdate: (isAudioPlaying: boolean) => void;

}): {

isAudioPlaying: boolean;

playAudio: ({

sampleRate,

base64Text,

}: {

sampleRate: number;

base64Text: string;

}) => void;

stopPlayingAudio: () => void;

} => {

const [isAudioPlaying, setIsAudioPlaying] = useState(false);

const updateIsAudioPlaying = useCallback(

(playing: boolean) => {

setIsAudioPlaying(playing);

onIsAudioPlayingUpdate(playing);

},

[onIsAudioPlayingUpdate]

);

const audioContextRef = useRef<AudioContext | null>(null);

const sourceRef = useRef<AudioBufferSourceNode | null>(null);

const stopPlayingAudio = useCallback(() => {

if (sourceRef.current) {

try {

sourceRef.current.stop();

} catch {

//

}

sourceRef.current.disconnect();

sourceRef.current = null;

}

if (audioContextRef.current) {

audioContextRef.current.close();

audioContextRef.current = null;

}

updateIsAudioPlaying(false);

}, [updateIsAudioPlaying]);

const playAudio = useCallback(

({

sampleRate,

base64Text,

}: {

sampleRate: number;

base64Text: string;

}) => {

stopPlayingAudio(); // stop any currently playing audio first

const float32 = base64ToFloat32Array(base64Text);

const audioContext = new AudioContext({ sampleRate });

audioContextRef.current = audioContext;

const buffer = audioContext.createBuffer(1, float32.length, sampleRate);

buffer.copyToChannel(float32, 0);

const source = audioContext.createBufferSource();

source.buffer = buffer;

source.connect(audioContext.destination);

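source.onended = () => {

// A source replaced by a newer playAudio() call may still fire "ended" after it is stopped;

// ignore it so it doesn't stop the clip that has just started

if (sourceRef.current !== source) return;

updateIsAudioPlaying(false);

stopPlayingAudio();

};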

source.start();

sourceRef.current = source;

updateIsAudioPlaying(true);

},

[stopPlayingAudio, updateIsAudioPlaying]

);

return { isAudioPlaying, playAudio, stopPlayingAudio };

};

export default App;
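`floatTo16BitPCM` and `base64ToFloat32Array` go through `Int16Array`, whose byte order is the host platform's. That is little-endian on practically every browser and phone, which, as far as I know, matches the little-endian PCM16 the Realtime API uses, so the code above works in practice. A variant that pins the byte order explicitly with `DataView` could look like this minimal sketch; `encodeFloat32ToPcm16Base64` and `decodePcm16Base64ToFloat32` are hypothetical names, not part of any SDK.

```typescript
// Hypothetical helper: encode mono Float32 samples in [-1, 1] as base64 16-bit little-endian PCM.
function encodeFloat32ToPcm16Base64(samples: Float32Array): string {
  const view = new DataView(new ArrayBuffer(samples.length * 2));
  for (let i = 0; i < samples.length; i++) {
    // Clamp, then scale asymmetrically so -1 -> -32768 and 1 -> 32767
    const s = Math.max(-1, Math.min(1, samples[i]));
    const int16 = s < 0 ? Math.round(s * 0x8000) : Math.round(s * 0x7fff);
    view.setInt16(i * 2, int16, true); // true = little-endian, regardless of platform
  }
  const bytes = new Uint8Array(view.buffer);
  let binary = "";
  // Build the binary string in slices to stay under argument-count limits
  for (let i = 0; i < bytes.length; i += 0x8000) {
    binary += String.fromCharCode.apply(null, Array.from(bytes.subarray(i, i + 0x8000)));
  }
  return btoa(binary);
}

// Hypothetical helper: decode base64 16-bit little-endian PCM back to Float32 in [-1, 1).
function decodePcm16Base64ToFloat32(base64: string): Float32Array {
  const binary = atob(base64);
  const view = new DataView(new ArrayBuffer(binary.length));
  for (let i = 0; i < binary.length; i++) {
    view.setUint8(i, binary.charCodeAt(i));
  }
  // Ignore a trailing odd byte instead of throwing like new Int16Array(buffer) would
  const out = new Float32Array(Math.floor(binary.length / 2));
  for (let i = 0; i < out.length; i++) {
    out[i] = view.getInt16(i * 2, true) / 32768;
  }
  return out;
}
```

Round-tripping a buffer through both functions should give back the input to within one PCM16 quantization step.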

Links:

- GitHub Repo: https://github.com/OmarThinks/openai-realtime-api-project

- YouTube Video: https://www.youtube.com/watch?v=hPoZjt1Pg7k

Related: #8259

OpenAI Realtime API + React Native project

- GitHub: https://github.com/OmarThinks/react-native-openai-realtime-api-project

- YouTube: https://www.youtube.com/watch?v=_XE5-ETkUdo

ExpressJS Endpoint:

require("dotenv").config();

const cors = require("cors");

const express = require("express");

const app = express();

const port = 3000; // You can choose any available port

app.use(cors());

// Define a basic route

app.get("/", (req, res) => {

res.send("Hello, Express!");

});

app.get("/session", async (req, res) => {

const r = await fetch("https://api.openai.com/v1/realtime/sessions", {

method: "POST",

headers: {

Authorization: `Bearer ${process.env.OPENAI_API_KEY}`,

"Content-Type": "application/json",

},

body: JSON.stringify({

model: "gpt-4o-realtime-preview-2025-06-03",

voice: "verse",

}),

});

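const data = await r.json();

// Send back the JSON we received from the OpenAI REST API, keeping its status code so

// errors (e.g. an invalid OPENAI_API_KEY) reach the client as errors instead of a 200

res.status(r.status).json(data);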

});

// Start the server

app.listen(port, () => {

console.log(`Express app listening at http://localhost:${port}`);

});
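On the client, `data.client_secret.value` throws a bare `TypeError` when the session endpoint returns an error body instead of a session. A small guard makes that failure readable. This is a sketch: `extractEphemeralKey` and `fetchEphemeralKey` are hypothetical helpers, and only the `client_secret.value` field the client code already reads is modelled.

```typescript
// Only the field the client relies on is modelled; the real response carries more.
type SessionResponse = { client_secret?: { value?: unknown } };

// Hypothetical helper: pull the ephemeral key out of the /session JSON or fail loudly.
function extractEphemeralKey(data: unknown): string {
  const value = (data as SessionResponse | null)?.client_secret?.value;
  if (typeof value !== "string" || value.length === 0) {
    throw new Error(`Unexpected /session response: ${JSON.stringify(data)}`);
  }
  return value;
}

// Hypothetical helper: fetch the session from the Express endpoint and return the key.
async function fetchEphemeralKey(sessionUrl: string): Promise<string> {
  const response = await fetch(sessionUrl);
  if (!response.ok) {
    throw new Error(`Session endpoint returned HTTP ${response.status}`);
  }
  return extractEphemeralKey(await response.json());
}
```

`_connectWebSocket` could then call `fetchEphemeralKey(...)` inside a `try`/`catch` and show the error instead of crashing.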

useOpenAiRealTime

import { useCallback, useEffect, useRef, useState } from "react";

import { Buffer } from "buffer";

const useOpenAiRealTime = ({

instructions,

onMessageReceived,

onAudioResponseComplete,

onUsageReport,

onReadyToReceiveAudio,

onSocketClose,

onSocketError,

}: {

instructions: string;

onMessageReceived: (message: object) => void;

onAudioResponseComplete: (base64Audio: string) => void;

onUsageReport: (usage: object) => void;

onReadyToReceiveAudio: () => void;

onSocketClose: (closeEvent: CloseEvent) => void;

onSocketError?: (error: Event) => void;

}) => {

const webSocketRef = useRef<null | WebSocket>(null);

const [isWebSocketConnecting, setIsWebSocketConnecting] = useState(false);

const [isWebSocketConnected, setIsWebSocketConnected] = useState(false);

const [isInitialized, setIsInitialized] = useState(false);

const [isAiResponseInProgress, setIsAiResponseInProgress] = useState(false);

const [transcription, setTranscription] = useState<string>("");

const responseQueueRef = useRef<string[]>([]);

const resetHookState = useCallback(() => {

webSocketRef.current = null;

setIsWebSocketConnecting(false);

setIsWebSocketConnected(false);

setIsInitialized(false);

responseQueueRef.current = [];

setIsAiResponseInProgress(false);

setTranscription("");

}, []);

const connectWebSocket = useCallback(

async ({ ephemeralKey }: { ephemeralKey: string }) => {

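// Bail out before flagging "connecting" so an existing socket doesn't leave

// isWebSocketConnecting stuck at true (the finally block below is never reached)

if (webSocketRef.current) {

return;

}

setIsWebSocketConnecting(true);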

try {

const url = `wss://api.openai.com/v1/realtime?model=gpt-realtime&token=${ephemeralKey}`;

console.log("url", url);

const ws = new WebSocket(url, [

"realtime",

"openai-insecure-api-key." + ephemeralKey,

"openai-beta.realtime-v1",

]);

ws.addEventListener("open", () => {

console.log("Connected to server.");

setIsWebSocketConnected(true);

});

ws.addEventListener("close", (closeEvent) => {

console.log("Disconnected from server.");

setIsWebSocketConnected(false);

resetHookState();

onSocketClose(closeEvent);

});

ws.addEventListener("error", (error) => {

console.error("WebSocket error:", error);

onSocketError?.(error);

});

ws.addEventListener("message", (event) => {

//console.log("WebSocket message:", event.data);

// convert message to an object

const messageObject = JSON.parse(event.data);

onMessageReceived(messageObject);

if (messageObject.type === "response.created") {

setIsAiResponseInProgress(true);

setTranscription("");

}

if (messageObject.type === "response.audio.done") {

setIsAiResponseInProgress(false);

const combinedBase64 = combineBase64ArrayList(

responseQueueRef.current

);

responseQueueRef.current = [];

onAudioResponseComplete(combinedBase64);

}

if (messageObject.type === "response.audio.delta") {

const audioChunk = messageObject.delta;

if (audioChunk) {

responseQueueRef.current.push(audioChunk);

}

}

if (messageObject?.response?.usage) {

onUsageReport(messageObject.response.usage);

}

if (messageObject.type === "session.updated") {

setIsInitialized(true);

onReadyToReceiveAudio();

}

if (messageObject.type === "response.audio_transcript.delta") {

setTranscription((prev) => prev + messageObject.delta);

}

});

webSocketRef.current = ws;

} catch (error) {

console.error("Error connecting to WebSocket:", error);

} finally {

setIsWebSocketConnecting(false);

}

},

[

onAudioResponseComplete,

onMessageReceived,

onReadyToReceiveAudio,

onSocketClose,

onSocketError,

onUsageReport,

resetHookState,

]

);

const disconnectSocket = useCallback(() => {

if (webSocketRef.current) {

webSocketRef.current.close();

}

}, []);

useEffect(() => {

return () => {

disconnectSocket();

};

// eslint-disable-next-line react-hooks/exhaustive-deps

}, []);

useEffect(() => {

if (isWebSocketConnected) {

const event = {

type: "session.update",

session: {

instructions,

},

};

webSocketRef.current?.send(JSON.stringify(event));

}

}, [instructions, isWebSocketConnected]);

const sendMessage = useCallback(

(messageObject: { [key: string]: any }) => {

if (

webSocketRef.current &&

webSocketRef.current.readyState === WebSocket.OPEN &&

isWebSocketConnected &&

isInitialized

) {

webSocketRef.current.send(JSON.stringify(messageObject));

}

},

[isInitialized, isWebSocketConnected]

);

const sendBase64AudioStringChunk = useCallback(

(base64String: string) => {

if (webSocketRef.current) {

sendMessage({

type: "input_audio_buffer.append",

audio: base64String,

});

}

},

[sendMessage]

);

return {

isWebSocketConnected,

connectWebSocket,

disconnectSocket,

isWebSocketConnecting,

sendBase64AudioStringChunk,

isInitialized,

isAiResponseInProgress,

transcription,

};

};

const combineBase64ArrayList = (base64Array: string[]): string => {

const pcmChunks: Uint8Array[] = base64Array.map((base64Text) => {

if (base64Text) {

const buf = Buffer.from(base64Text, "base64"); // decode base64 to raw bytes

const toReturn = new Uint8Array(

buf.buffer,

buf.byteOffset,

buf.byteLength

);

return toReturn;

} else {

return new Uint8Array();

}

});

// Calculate total length

const totalLength = pcmChunks.reduce((acc, chunk) => acc + chunk.length, 0);

// Create one big Uint8Array

const combined = new Uint8Array(totalLength);

let offset = 0;

for (const chunk of pcmChunks) {

combined.set(chunk, offset);

offset += chunk.length;

}

// Convert back to base64

const combinedBase64 = Buffer.from(combined.buffer).toString("base64");

return combinedBase64;

};

export { useOpenAiRealTime, combineBase64ArrayList };
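The `message` listener in `useOpenAiRealTime` is effectively a small state machine over five server event types. Pulling it out of React into a pure function makes it testable without a socket. This is a hypothetical refactor (`reduceServerEvent` and `RealtimeState` are not from the original project or any SDK) that uses only the event names the hook already handles:

```typescript
// Hypothetical, framework-free model of the state the hook keeps.
type RealtimeState = {
  isInitialized: boolean;
  isAiResponseInProgress: boolean;
  transcription: string;
  audioChunks: string[]; // base64 PCM16 deltas of the response in progress
};

type ServerEvent = { type: string; delta?: string };

const initialState: RealtimeState = {
  isInitialized: false,
  isAiResponseInProgress: false,
  transcription: "",
  audioChunks: [],
};

// Returns the next state plus the audio chunks of a just-completed response (or null).
function reduceServerEvent(
  state: RealtimeState,
  event: ServerEvent
): { state: RealtimeState; completedAudio: string[] | null } {
  switch (event.type) {
    case "session.updated":
      return { state: { ...state, isInitialized: true }, completedAudio: null };
    case "response.created":
      // A new response starts: clear the previous transcription
      return {
        state: { ...state, isAiResponseInProgress: true, transcription: "" },
        completedAudio: null,
      };
    case "response.audio.delta":
      return {
        state: event.delta
          ? { ...state, audioChunks: [...state.audioChunks, event.delta] }
          : state,
        completedAudio: null,
      };
    case "response.audio_transcript.delta":
      return {
        state: { ...state, transcription: state.transcription + (event.delta ?? "") },
        completedAudio: null,
      };
    case "response.audio.done":
      // Hand the buffered chunks to the caller and reset the queue
      return {
        state: { ...state, isAiResponseInProgress: false, audioChunks: [] },
        completedAudio: state.audioChunks,
      };
    default:
      return { state, completedAudio: null };
  }
}
```

The hook's listener could then call `reduceServerEvent` and, whenever `completedAudio` is non-null, pass it through `combineBase64ArrayList` to `onAudioResponseComplete`.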

Example Screen:

import {

combineBase64ArrayList,

useOpenAiRealTime,

} from "@/hooks/ai/useOpenAiRealTimeHook";

import { dummyBase64Audio24K } from "@/samples/dummyBase64Audio";

import { requestRecordingPermissionsAsync } from "expo-audio";

import React, { memo, useCallback, useEffect, useRef, useState } from "react";

import { Alert, Button, Text, View } from "react-native";

import {

AudioBuffer,

AudioBufferSourceNode,

AudioContext,

AudioRecorder,

} from "react-native-audio-api";

import { SafeAreaView } from "react-native-safe-area-context";

// TODO: Replace with your internal ip address

const localIpAddress = "http://192.168.8.103";

const New = () => {

const [messages, setMessages] = useState<object[]>([]);

const isAudioPlayingRef = useRef(false);

const isAiResponseInProgressRef = useRef(false);

const onIsAudioPlayingUpdate = useCallback((playing: boolean) => {

isAudioPlayingRef.current = playing;

}, []);

const { isAudioPlaying, playAudio, stopPlayingAudio } = useAudioPlayer({

onIsAudioPlayingUpdate,

});

const enqueueMessage = useCallback((message: object) => {

console.log("Got response chunk");

setMessages((prevMessages) => [...prevMessages, message]);

}, []);

const onAudioResponseComplete = useCallback(

(base64String: string) => {

console.log("Playing full response");

playAudio({

sampleRate: 24000,

base64Text: base64String,

});

},

[playAudio]

);

const onUsageReport = useCallback((usage: object) => {

console.log("Usage report:", usage);

}, []);

const onSocketClose = useCallback(

(closeEvent: CloseEvent) => {

console.log("onSocketClose", closeEvent);

//stopStreaming();

stopPlayingAudio();

},

[stopPlayingAudio]

);

const onReadyToReceiveAudio = useCallback(() => {

//startStreaming();

}, []);

const {

isWebSocketConnected,

connectWebSocket,

disconnectSocket,

isWebSocketConnecting,

sendBase64AudioStringChunk,

isAiResponseInProgress,

isInitialized,

transcription,

} = useOpenAiRealTime({

instructions: "You are a helpful assistant.",

onMessageReceived: enqueueMessage,

onAudioResponseComplete,

onUsageReport,

onSocketClose,

onReadyToReceiveAudio,

});

const ping = useCallback(() => {

sendBase64AudioStringChunk(dummyBase64Audio24K);

}, [sendBase64AudioStringChunk]);

const [chunks, setChunks] = useState<string[]>([]);

//console.log("before onAudioStreamerChunk: ", isAiResponseInProgress);

const onAudioStreamerChunk = useCallback(

(audioBuffer: AudioBuffer) => {

const chunk = convertAudioBufferToBase64(audioBuffer);

setChunks((prev) => [...prev, chunk]);

if (

isWebSocketConnected &&

isInitialized &&

!isAiResponseInProgressRef.current &&

!isAudioPlayingRef.current

) {

console.log(

`Sending audio chunk. isWebSocketConnected: ${isWebSocketConnected}, isInitialized: ${isInitialized}, isAiResponseInProgress: ${

isAiResponseInProgressRef.current

}, isAudioPlayingRef.current: ${isAudioPlayingRef.current}, ${

chunk.slice(0, 50) + "..."

}`

);

sendBase64AudioStringChunk(chunk);

}

},

[isInitialized, isWebSocketConnected, sendBase64AudioStringChunk]

);

const { isStreaming, startStreaming, stopStreaming } = useAudioStreamer({

sampleRate: 16000, // 16 kHz. TODO: The documentation doesn't specify the exact requirements for this. I tried 16K and 24K; I think 16K is better.

interval: 250, // emit every 250 milliseconds

onAudioReady: onAudioStreamerChunk,

});

const playAudioRecorderChunks = useCallback(() => {

const combined = combineBase64ArrayList(chunks);

playAudio({ base64Text: combined, sampleRate: 16000 });

}, [chunks, playAudio]);

const _connectWebSocket = useCallback(async () => {

const tokenResponse = await fetch(`${localIpAddress}:3000/session`);

const data = await tokenResponse.json();

const EPHEMERAL_KEY = data.client_secret.value;

connectWebSocket({ ephemeralKey: EPHEMERAL_KEY });

}, [connectWebSocket]);

useEffect(() => {

if (isWebSocketConnected) {

if (isInitialized) {

console.log("Starting audio streaming");

startStreaming();

}

} else {

console.log("Stopping audio streaming");

stopStreaming();

}

// eslint-disable-next-line react-hooks/exhaustive-deps

}, [isWebSocketConnected, isInitialized]);

useEffect(() => {

isAiResponseInProgressRef.current = isAiResponseInProgress;

}, [isAiResponseInProgress]);

return (

<SafeAreaView

className=" self-stretch flex-1"

edges={["top", "left", "right"]}

>

<View className=" self-stretch flex-1">

<View

className=" self-stretch flex-1"

style={{

backgroundColor: "black",

gap: 16,

display: "flex",

flexDirection: "column",

padding: 16,

}}

>

<View>

<Button

onPress={() => {

playAudio({

base64Text: dummyBase64Audio24K,

sampleRate: 24000,

});

}}

title="Play 24K string"

/>

</View>

<View>

{isWebSocketConnected && <Button onPress={ping} title="Ping" />}

{isWebSocketConnecting ? (

<Text style={{ color: "white", fontSize: 32 }}>

Connecting...

</Text>

) : isWebSocketConnected ? (

<Button onPress={disconnectSocket} title="disconnectSocket" />

) : (

<Button onPress={_connectWebSocket} title="connectWebSocket" />

)}

<Button

onPress={() => {

console.log("Log Messages:", messages);

}}

title="Log Messages"

/>

</View>

<HR />

<View>

<Text

style={{ color: "white", fontSize: 32 }}

className=" text-[30px] font-bold"

>

Transcription:

</Text>

<Text style={{ color: "white", fontSize: 32 }}>

{transcription}

</Text>

</View>

<HR />

<View className=" flex-row flex items-center">

<Text style={{ color: "white", fontSize: 32 }}>

Is audio Playing: {isAudioPlaying ? "Yes" : "No"}

</Text>

{isAudioPlaying && (

<Button onPress={stopPlayingAudio} title="Stop Playing" />

)}

</View>

<HR />

<View className=" flex flex-row items-center gap-2">

{!isStreaming && (

<Button

onPress={() => {

setChunks([]);

startStreaming();

}}

title="Start Streaming"

/>

)}

{isStreaming && (

<Button onPress={stopStreaming} title="Stop Streaming" />

)}

{!isStreaming && chunks.length > 0 && (

<Button onPress={playAudioRecorderChunks} title="Play Stream" />

)}

</View>

<Text style={{ color: "white", fontSize: 32 }}>

Is Streaming: {isStreaming ? "Yes" : "No"}

</Text>

</View>

</View>

</SafeAreaView>

);

};

const HR = memo(function HR_() {

return <View className=" self-stretch bg-white h-[2px] " />;

});

const useAudioPlayer = ({

onIsAudioPlayingUpdate,

}: {

onIsAudioPlayingUpdate: (playing: boolean) => void;

}) => {

const audioContextRef = useRef<AudioContext | null>(null);

const audioBufferSourceNodeRef = useRef<AudioBufferSourceNode | null>(null);

const [isAudioPlaying, setIsAudioPlaying] = useState(false);

const updateIsAudioPlaying = useCallback(

(newValue: boolean) => {

setIsAudioPlaying(newValue);

onIsAudioPlayingUpdate(newValue);

},

[onIsAudioPlayingUpdate]

);

const cleanUp = useCallback(() => {

updateIsAudioPlaying(false);

try {

audioBufferSourceNodeRef.current?.stop?.();

} catch {}

audioBufferSourceNodeRef.current = null;

}, [updateIsAudioPlaying]);

const playAudio = useCallback(

async ({

base64Text,

sampleRate,

}: {

sampleRate: number;

base64Text: string;

}) => {

const audioContext = new AudioContext({ sampleRate });

const audioBuffer = await audioContext.decodePCMInBase64Data(base64Text);

const audioBufferSourceNode = audioContext.createBufferSource();

audioBufferSourceNode.connect(audioContext.destination);

audioBufferSourceNode.buffer = audioBuffer;

updateIsAudioPlaying(true);

audioBufferSourceNode.onEnded = () => {

cleanUp();

};

audioBufferSourceNode.start();

audioBufferSourceNodeRef.current = audioBufferSourceNode;

audioContextRef.current = audioContext;

},

[cleanUp, updateIsAudioPlaying]

);

const stopPlayingAudio = useCallback(() => {

audioBufferSourceNodeRef.current?.stop?.();

}, []);

return {

isAudioPlaying,

playAudio,

stopPlayingAudio,

};

};

const useAudioStreamer = ({

sampleRate,

interval,

onAudioReady,

}: {

sampleRate: number;

interval: number;

onAudioReady: (audioBuffer: AudioBuffer) => void;

}) => {

const audioContextRef = useRef<AudioContext | null>(null);

const audioRecorderRef = useRef<AudioRecorder | null>(null);

const [isStreaming, setIsStreaming] = useState(false);

const resetState = useCallback(() => {

setIsStreaming(false);

try {

audioRecorderRef.current?.stop?.();

} catch {}

}, []);

useEffect(() => {

return resetState;

}, [resetState]);

const startStreaming = useCallback(async () => {

const permissionResult = await requestRecordingPermissionsAsync();

if (!permissionResult.granted) {

Alert.alert("Permission Error", "Audio recording permission is required");

return;

}

const audioContext = new AudioContext({ sampleRate });

const audioRecorder = new AudioRecorder({

sampleRate: sampleRate,

bufferLengthInSamples: (sampleRate * interval) / 1000,

});

const recorderAdapterNode = audioContext.createRecorderAdapter();

audioRecorder.connect(recorderAdapterNode);

audioRecorder.onAudioReady((event) => {

const { buffer } = event;

onAudioReady(buffer);

});

audioRecorder.start();

setIsStreaming(true);

audioContextRef.current = audioContext;

audioRecorderRef.current = audioRecorder;

}, [interval, onAudioReady, sampleRate]);

return {

isStreaming,

startStreaming,

stopStreaming: resetState,

};

};

const convertAudioBufferToBase64 = (audioBuffer: AudioBuffer) => {

const float32Array = audioBuffer.getChannelData(0);

// Convert Float32Array to 16-bit PCM

const pcmData = new Int16Array(float32Array.length);

for (let i = 0; i < float32Array.length; i++) {

// Convert float32 (-1 to 1) to int16 (-32768 to 32767)

const sample = Math.max(-1, Math.min(1, float32Array[i]));

pcmData[i] = Math.round(sample * 32767);

}

// Convert to bytes

const bytes = new Uint8Array(pcmData.buffer);

// Convert to base64

let binary = "";

const chunkSize = 0x8000; // 32KB chunks to avoid call stack limits

for (let i = 0; i < bytes.length; i += chunkSize) {

const chunk = bytes.subarray(i, i + chunkSize);

binary += String.fromCharCode.apply(null, Array.from(chunk));

}

const base64String = btoa(binary);

return base64String;

};

export default New;

export {

convertAudioBufferToBase64,

combineBase64ArrayList,

useAudioPlayer,

useAudioStreamer,

};
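Both screens capture the microphone at 16 kHz while response audio is played back at 24 kHz, and the TODO above notes uncertainty about the right input rate. If you want to experiment with sending 24 kHz audio while capturing at a different rate, a minimal linear-interpolation resampler is enough to try it. `resampleLinear` is a hypothetical helper (not part of `react-native-audio-api` or the Web Audio API), and linear interpolation does no anti-aliasing filtering, so treat it as a sketch rather than production DSP.

```typescript
// Hypothetical helper: resample mono Float32 audio from `fromRate` to `toRate`
// using linear interpolation between neighbouring input samples.
function resampleLinear(input: Float32Array, fromRate: number, toRate: number): Float32Array {
  if (fromRate === toRate || input.length === 0) return input.slice();
  const outLength = Math.round((input.length * toRate) / fromRate);
  const output = new Float32Array(outLength);
  const step = fromRate / toRate; // input samples advanced per output sample
  for (let i = 0; i < outLength; i++) {
    const pos = i * step;
    const left = Math.min(Math.floor(pos), input.length - 1);
    const right = Math.min(left + 1, input.length - 1);
    const frac = pos - Math.floor(pos);
    // Blend the two neighbouring samples by the fractional position
    output[i] = input[left] + (input[right] - input[left]) * frac;
  }
  return output;
}
```

For example, a 250 ms chunk captured at 16 kHz (4,000 samples) becomes 6,000 samples at 24 kHz, which would then go through the same clamp-and-scale PCM16 conversion the streamers already do.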

Don't forget these two things to run the app:

- Provide an API key (`OPENAI_API_KEY`) to the Express.js endpoint.

- React Native can't reach `localhost`, so endpoints that run locally must be addressed by your machine's local IP address. In the example you will find the variable `const localIpAddress = "http://192.168.8.103";`. Replace it with your local IP address so the React Native app can communicate with the Express.js endpoint.

Thanks a lot! 🌹

👀

+1