
Properly handle client function/tool calls that appear partway through the response stream.

The Issue

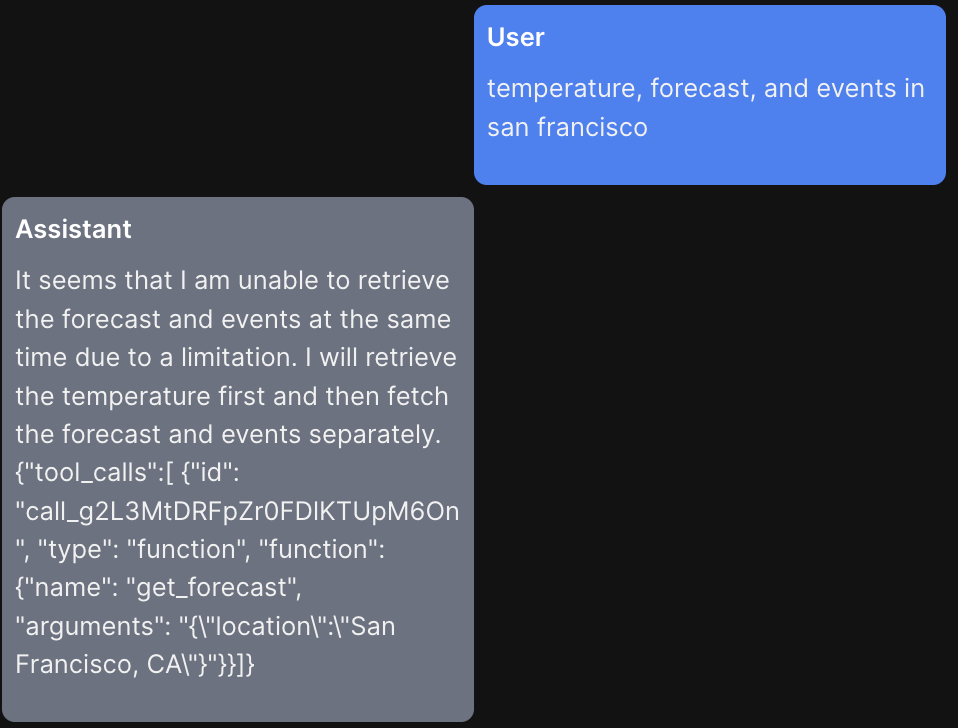

Currently, if an LLM response starts with regular content and then calls a tool or function partway through, the entire response is still written to the message's content field. The function_call or tool_calls field is never populated, so the client callbacks that handle function/tool calls are never triggered.

Diagnosis / Root Cause

When I looked at the underlying implementation, I saw that callChatApi() only checked whether the streamed response started with the function/tool call prefix, not whether the prefix appeared midway through.

Proposed Solution

I added some extra logic to detect whether an intermediate chunk starts with the tool/function call prefix.

By default, all streamed chunks will populate the content field. The moment a function/tool call prefix is detected, a flag is set to parse the remainder of the message as either a function or tool call.
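
As a rough illustration of the flag-based approach described above (a minimal sketch, not the actual diff in this PR): the function name assembleMessage, the ParsedMessage type, and the exact prefix strings are assumptions made for the example, and the real callChatApi() works on a live stream rather than an array of chunks.

```typescript
// Hypothetical message shape for this sketch only.
type ParsedMessage = {
  content: string;
  function_call?: unknown;
  tool_calls?: unknown;
};

// Assumed prefixes; the real values live in the library's implementation.
const FUNCTION_CALL_PREFIX = '{"function_call":';
const TOOL_CALLS_PREFIX = '{"tool_calls":';

function assembleMessage(chunks: string[]): ParsedMessage {
  let content = '';
  let callPayload = '';
  // Flag: once it leaves 'content', every later chunk belongs to the call.
  let mode: 'content' | 'function' | 'tool' = 'content';

  for (const chunk of chunks) {
    // Check every chunk for a prefix, not just the first one.
    if (mode === 'content') {
      if (chunk.startsWith(FUNCTION_CALL_PREFIX)) mode = 'function';
      else if (chunk.startsWith(TOOL_CALLS_PREFIX)) mode = 'tool';
    }

    if (mode === 'content') content += chunk;
    else callPayload += chunk;
  }

  const message: ParsedMessage = { content };
  // JSON.parse throws if the stream ended before the call payload was complete.
  if (mode === 'function') {
    message.function_call = JSON.parse(callPayload).function_call;
  } else if (mode === 'tool') {
    message.tool_calls = JSON.parse(callPayload).tool_calls;
  }
  return message;
}
```

With this sketch, a stream such as "Let me check. " followed by a function call payload keeps the leading text in content and puts the parsed call in function_call. Like the approach described above, it only detects a prefix that sits at the start of a chunk.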

This change seems to solve the issue entirely: I can no longer reproduce the original bug in my local environment, despite my best efforts.

Hey @lgrammel,

Apologies if you're not the right person to tag for this, but I was wondering when someone would be able to review (and hopefully approve) the PR.

Thanks for the PR. We are planning to switch to the StreamData protocol in the next major release and remove this part of the code: https://github.com/vercel/ai/pull/1192

In the meantime, you can already set experimental_streamData: true to activate the stream data protocol, which should not have these issues.