Hongxin Liu

Hi, please rebase onto the main branch, as we've fixed some bugs there.

> @Fazziekey @FrankLeeeee Same OOM issue. The same is A100 40GB, 1 gpu running llama7B model, batch=1, max_seq_len=512, colossalai_zero2 placement_policy='cuda', use torch.cuda.memory_allocated() to analyze memory usage, in SFTTrainer self.optimizer =...

I've added a "colossalai_zero2_cpu" strategy for this script. I tested it on 4x A100 40GB and it works.

> Can you update the test files in `tests/test_fx/test_tracer/test_torchaudio_model`?

Done.

How many nodes did you use?

Hi, it only offloads optimizer states to disk. Your error does not seem to be related to the optimizer.

Can you provide more info?

What is your Python environment?

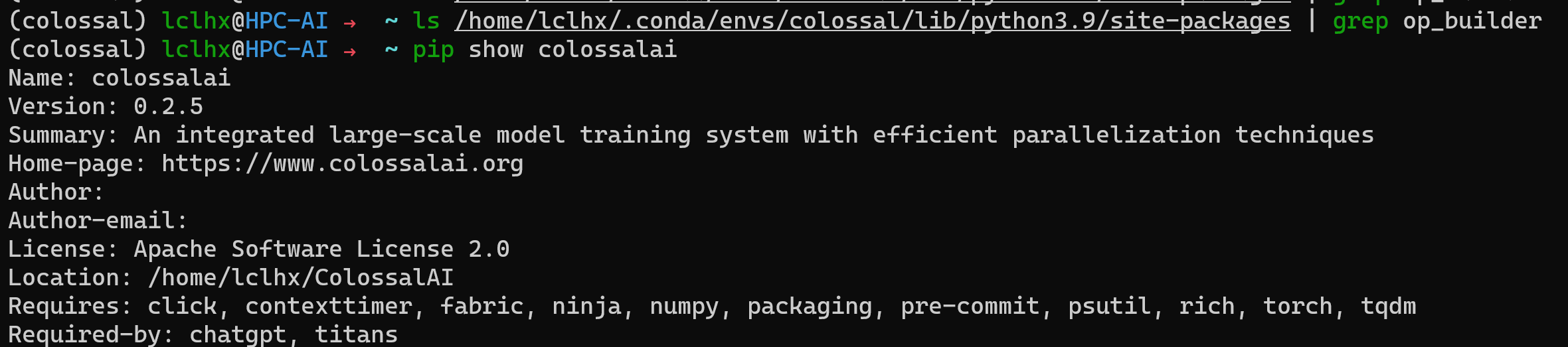

How did you install colossalai? There is no `op_builder/` folder in my `site-packages/` folder.
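To compare installs, here is a minimal sketch that looks for a folder next to or inside an installed package. The helper name `find_folder_near_package` is hypothetical and not part of colossalai; it only uses the standard library and assumes the package is importable in the current environment.

```python
import importlib.util
from pathlib import Path
from typing import List, Optional


def find_folder_near_package(package: str, folder: str) -> Optional[List[Path]]:
    """Hypothetical helper: look for `folder` beside or inside an installed package.

    Returns None if `package` cannot be found (or is a plain module),
    otherwise a list of matching directories (possibly empty).
    """
    spec = importlib.util.find_spec(package)
    if spec is None or not spec.submodule_search_locations:
        return None

    hits: List[Path] = []
    for location in spec.submodule_search_locations:
        pkg_dir = Path(location)
        # Check the parent directory (e.g. site-packages/) and the package itself.
        for candidate in (pkg_dir.parent / folder, pkg_dir / folder):
            if candidate.is_dir():
                hits.append(candidate)
    return hits


if __name__ == "__main__":
    # Prints None when colossalai is not installed in this environment.
    print(find_folder_near_package("colossalai", "op_builder"))
```

An empty list means the package was found but no such folder exists in either location, which would match the situation described above.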

@Fazziekey Could you answer this question?