Windows K8s - kubernetes_logs source

A note for the community

- Please vote on this issue by adding a 👍 reaction to the original issue to help the community and maintainers prioritize this request

- If you are interested in working on this issue or have submitted a pull request, please leave a comment

Problem

I was working on testing and creating what was needed (windows container support via github actions / helm chart changes) I've got to a point where it deploys with minimal divergence from the norm (linux) helm chart

But I'm struggling with the kubernetes_logs source on windows.

The provider, even in trace, doesn't give much away. The paths should be the same /var/log/pods/* which seems to match the rust

// We seek to match the paths like

// `<pod_logs_dir>/<container_name>/<n>.log` - paths managed by

// the `kubelet` as part of Kubernetes core logging

// architecture.

// In some setups, there will also be paths like

// `<pod_logs_dir>/<hash>.log` - those we want to skip.

C:\var\log\pods>dir

Volume in drive C has no label.

Volume Serial Number is ECF5-86C4

Directory of C:\var\log\pods

01/11/2023 05:37 PM <DIR> .

01/11/2023 05:37 PM <DIR> ..

12/16/2022 05:48 PM <DIR> datadog_datadog-windows-c4t8f_f422afd5-6b9e-43c7-92c5-7ab0b51f9ed7

01/10/2023 02:46 PM <DIR> usernamespace_userdeployment3-74ccf469f7-blwk7_49eebc6f-ac31-4686-887a-3dfc76925d03

01/10/2023 02:46 PM <DIR> usernamespace_userdeployment2-69857cb544-wr49c_087bb2e8-a56f-4700-b2be-8043d68ce5c4

12/19/2022 02:40 PM <DIR> usernamespace_userdeployment-574d967fc6-mvsqq_f990e29e-3f28-4384-8447-0730d6dbc0ab

12/16/2022 05:53 PM <DIR> kube-system_ebs-csi-node-windows-9hsp8_4e7050cb-cb97-41ff-8838-7af69bf7c5b9

01/11/2023 05:37 PM <DIR> vector_vector-logs-windows-vector-logagent-windows-kpnfx_4b348658-003e-4edc-bf43-a2108cf43f7d

0 File(s) 0 bytes

8 Dir(s) 19,350,544,384 bytes free

---

Directory of C:\var\log\pods\datadog_datadog-windows-c4t8f_f422afd5-6b9e-43c7-92c5-7ab0b51f9ed7

12/16/2022 05:48 PM <DIR> .

12/16/2022 05:48 PM <DIR> ..

12/16/2022 05:48 PM <DIR> agent

12/16/2022 05:48 PM <DIR> init-config

12/16/2022 05:48 PM <DIR> init-volume

12/16/2022 05:48 PM <DIR> process-agent

0 File(s) 0 bytes

6 Dir(s) 19,351,310,336 bytes free

Directory of C:\var\log\pods\datadog_datadog-windows-c4t8f_f422afd5-6b9e-43c7-92c5-7ab0b51f9ed7

12/16/2022 05:48 PM <DIR> .

12/16/2022 05:48 PM <DIR> ..

12/16/2022 05:48 PM <DIR> agent

12/16/2022 05:48 PM <DIR> init-config

12/16/2022 05:48 PM <DIR> init-volume

12/16/2022 05:48 PM <DIR> process-agent

0 File(s) 0 bytes

6 Dir(s) 19,351,310,336 bytes free

All the pod log folders contain a log subfolder

Directory of C:\var\log\pods\kube-system_ebs-csi-node-windows-9hsp8_4e7050cb-cb97-41ff-8838-7af69bf7c5b9\ebs-plugin

12/16/2022 05:53 PM <DIR> .

12/16/2022 05:53 PM <DIR> ..

12/16/2022 05:53 PM <SYMLINK> 0.log [C:\ProgramData\docker\containers\5d93152341f7f8ad2fc6e80ced99b603ace1fc4d86382b73a8cb30a3dcf08b3e\5d93152341f7f8ad2fc6e80ced99b603ace1fc4d86382b73a8cb30a3dcf08b3e-json.log]

1 File(s) 0 bytes

2 Dir(s) 19,350,798,336 bytes free

Which I believe wouldn't match <pod_logs_dir>/<container_name>/<n>.log expectation in the rust?

I can see logentries like

2023-01-11T17:38:14.004338Z DEBUG source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: file_source::file_server: event_throughput=0.000/sec bytes_throughput=0.000/sec ratios={"discovery": 4.516799e-8, "other": 2.4253599e-5, "sending": 1.655407e-5, "sleeping": 0.9999593}

2023-01-11T17:38:14.004410Z TRACE source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::sources::kubernetes_logs::k8s_paths_provider: Verifying Namespace metadata for pod. pod=Some("datadog-windows-c4t8f")

but they lead nowhere and the code for the sink doesn't seem to contain any trace messages that would say it found x in the kubeapi data and found x in pod logs but didn't go further.

Configuration

## Helm

## Had to change proc/sys to DirectoryOrCreate as the helm charts configured for linux

## Windows has some sockets available for interrogation i.e.

## runtimesocket Bind-Mount \\.\pipe\docker_engine

## containerdsocket Bind-Mount \\.\pipe\containerd-containerd

##

## - hostPath:

## path: /proc

## type: DirectoryOrCreate

## name: procfs

## - hostPath:

## path: /sys

## type: DirectoryOrCreate

## name: sysfs

###

nameOverride: 'vector-logagent-windows'

role: Agent

rollWorkload: true

image:

repository: chrismckee/vector-nt

tag: 0.26.0-windowsserver.nano.ltsc2019

sha: 085f48a22ea8377c306549d6ad03df860f07b188a4f69d39d47fd0d8dbebc374

env:

- name: VECTOR_LOG

value: debug

tolerations:

- effect: NoSchedule

key: OS

operator: Equal

value: 'Windows'

nodeSelector:

kubernetes.io/arch: amd64

kubernetes.io/os: windows

nodeclass: windows2019

command: ['/vector/bin/vector.exe']

args:

- --config-dir

- '/etc/vector/'

persistence:

hostPath:

path: "/etc/temp"

extraVolumes:

- name: temp

hostPath:

path: /etc/temp/

type: DirectoryOrCreate

customConfig:

data_dir: /etc/temp/

api:

enabled: true

address: 127.0.0.1:8686

playground: true

sources:

kubernetes_logs:

type: kubernetes_logs

data_dir: /etc/temp/

sinks:

loki_exporter:

type: loki

inputs:

- kubernetes_logs

endpoint: https://xxx-xxx-xxx.xxx.xxx

auth:

user: 'xxx'

password: xxx

strategy: basic

labels:

forwarder: vector

cluster: dev-cluster

namespace: |-

{{ print "{{ kubernetes.pod_namespace }}" }}

pod: |-

{{ print "{{ kubernetes.pod_name }}" }}

container: |-

{{ print "{{ kubernetes.container_image }}" }}

ip: |-

{{ print "{{ kubernetes.pod_ip }}" }}

app: |-

{{ print "{{ kubernetes.pod_labels.app }}" }}

acknowledgements: false

healthcheck:

enabled: false

compression: snappy

encoding:

codec: text

timestamp_format: rfc3339

Version

26

Debug Output

2023-01-11T17:37:11.935771Z INFO vector::app: Internal log rate limit configured. internal_log_rate_secs=10

2023-01-11T17:37:11.936047Z INFO vector::app: Log level is enabled. level="trace"

2023-01-11T17:37:11.936809Z INFO vector::app: Loading configs. paths=["/etc\\vector"]

2023-01-11T17:37:11.945249Z DEBUG vector::config::loading: No secret placeholder found, skipping secret resolution.

2023-01-11T17:37:11.958569Z DEBUG vector::topology::builder: Building new source. component=kubernetes_logs

2023-01-11T17:37:11.959176Z INFO vector::sources::kubernetes_logs: Obtained Kubernetes Node name to collect logs for (self). self_node_name="ip-AWSIP.eu-west-2.compute.internal"

2023-01-11T17:37:11.960776Z TRACE kube_client::config: no local config found, falling back to local in-cluster config error=failed to find the path of kubeconfig

2023-01-11T17:37:11.983846Z TRACE tower::buffer::worker: worker polling for next message

2023-01-11T17:37:11.984157Z INFO vector::sources::kubernetes_logs: Excluding matching files. exclude_paths=["**/*.gz", "**/*.tmp"]

2023-01-11T17:37:11.984269Z DEBUG vector::topology::builder: Building new sink. component=loki_exporter

2023-01-11T17:37:11.987872Z DEBUG vector_core::tls::settings: Fetching system root certs.

2023-01-11T17:37:11.996304Z DEBUG vector_core::tls::settings: Fetching system root certs.

2023-01-11T17:37:12.002604Z INFO vector::topology::running: Running healthchecks.

2023-01-11T17:37:12.002663Z DEBUG vector::topology::running: Connecting changed/added component(s).

2023-01-11T17:37:12.002689Z DEBUG vector::topology::running: Configuring outputs for source. component=kubernetes_logs

2023-01-11T17:37:12.002726Z DEBUG vector::topology::running: Configuring output for component. component=kubernetes_logs output_id=None

2023-01-11T17:37:12.002758Z DEBUG vector::topology::running: Connecting inputs for sink. component=loki_exporter

2023-01-11T17:37:12.002804Z DEBUG vector::topology::running: Adding component input to fanout. component=loki_exporter fanout_id=kubernetes_logs

2023-01-11T17:37:12.002876Z DEBUG vector::topology::running: Spawning new source. key=kubernetes_logs

2023-01-11T17:37:12.002944Z TRACE vector::topology::running: Spawning new sink. key=loki_exporter

2023-01-11T17:37:12.003096Z INFO vector: Vector has started. debug="false" version="0.26.0" arch="x86_64" revision="c6b5bc2 2022-12-05"

2023-01-11T17:37:12.003145Z INFO vector::internal_events::api: API server running. address=127.0.0.1:8686 playground=http://127.0.0.1:8686/playground

2023-01-11T17:37:12.006061Z DEBUG source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::topology::builder: Source starting.

2023-01-11T17:37:12.006052Z DEBUG source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::topology::builder: Source pump supervisor starting.

2023-01-11T17:37:12.006140Z DEBUG source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::topology::builder: Source pump starting.

2023-01-11T17:37:12.006223Z DEBUG sink{component_kind="sink" component_id=loki_exporter component_type=loki component_name=loki_exporter}: vector::topology::builder: Sink starting.

2023-01-11T17:37:12.006670Z TRACE tower::buffer::service: sending request to buffer worker

2023-01-11T17:37:12.006703Z TRACE tower::buffer::worker: worker polling for next message

2023-01-11T17:37:12.006711Z TRACE tower::buffer::worker: processing new request

2023-01-11T17:37:12.006725Z TRACE tower::buffer::worker: resumed=false worker received request; waiting for service readiness

2023-01-11T17:37:12.006740Z DEBUG tower::buffer::worker: service.ready=true processing request

2023-01-11T17:37:12.006768Z TRACE tower::buffer::worker: returning response future

2023-01-11T17:37:12.006780Z TRACE tower::buffer::worker: worker polling for next message

2023-01-11T17:37:12.006906Z DEBUG HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/pods?&fieldSelector=spec.nodeName%3Dip-AWSIP.eu-west-2.compute.internal&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: kube_client::client::builder: requesting

2023-01-11T17:37:12.006953Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/pods?&fieldSelector=spec.nodeName%3Dip-AWSIP.eu-west-2.compute.internal&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: hyper::client::pool: checkout waiting for idle connection: ("https", 172.20.0.1)

2023-01-11T17:37:12.007093Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/pods?&fieldSelector=spec.nodeName%3Dip-AWSIP.eu-west-2.compute.internal&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: hyper::client::connect::http: Http::connect; scheme=Some("https"), host=Some("172.20.0.1"), port=None

2023-01-11T17:37:12.007132Z DEBUG HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/pods?&fieldSelector=spec.nodeName%3Dip-AWSIP.eu-west-2.compute.internal&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: hyper::client::connect::http: connecting to 172.20.0.1:443

2023-01-11T17:37:12.007246Z TRACE tower::buffer::service: sending request to buffer worker

2023-01-11T17:37:12.007266Z TRACE tower::buffer::worker: worker polling for next message

2023-01-11T17:37:12.007273Z TRACE tower::buffer::worker: processing new request

2023-01-11T17:37:12.007289Z TRACE tower::buffer::service: sending request to buffer worker

2023-01-11T17:37:12.007384Z INFO vector::topology::builder: Healthcheck: Disabled.

2023-01-11T17:37:12.007830Z TRACE tower::buffer::worker: resumed=false worker received request; waiting for service readiness

2023-01-11T17:37:12.007911Z TRACE vector: Beep.

2023-01-11T17:37:12.007913Z DEBUG tower::buffer::worker: service.ready=true processing request

2023-01-11T17:37:12.007934Z TRACE tower::buffer::worker: returning response future

2023-01-11T17:37:12.007941Z TRACE tower::buffer::worker: worker polling for next message

2023-01-11T17:37:12.007947Z TRACE tower::buffer::worker: processing new request

2023-01-11T17:37:12.007952Z TRACE tower::buffer::worker: resumed=false worker received request; waiting for service readiness

2023-01-11T17:37:12.007957Z DEBUG tower::buffer::worker: service.ready=true processing request

2023-01-11T17:37:12.007932Z DEBUG sink{component_kind="sink" component_id=loki_exporter component_type=loki component_name=loki_exporter}: vector::utilization: utilization=0.04264367137203939

2023-01-11T17:37:12.007965Z TRACE tower::buffer::worker: returning response future

2023-01-11T17:37:12.007974Z TRACE tower::buffer::worker: worker polling for next message

2023-01-11T17:37:12.008009Z DEBUG HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: kube_client::client::builder: requesting

2023-01-11T17:37:12.008027Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: hyper::client::pool: checkout waiting for idle connection: ("https", 172.20.0.1)

2023-01-11T17:37:12.008035Z DEBUG HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/nodes?&fieldSelector=metadata.name%3Dip-AWSIP.eu-west-2.compute.internal otel.name="list" otel.kind="client"}: kube_client::client::builder: requesting

2023-01-11T17:37:12.008055Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/nodes?&fieldSelector=metadata.name%3Dip-AWSIP.eu-west-2.compute.internal otel.name="list" otel.kind="client"}: hyper::client::pool: checkout waiting for idle connection: ("https", 172.20.0.1)

2023-01-11T17:37:12.008056Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: hyper::client::connect::http: Http::connect; scheme=Some("https"), host=Some("172.20.0.1"), port=None

2023-01-11T17:37:12.008074Z DEBUG HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: hyper::client::connect::http: connecting to 172.20.0.1:443

2023-01-11T17:37:12.008102Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/nodes?&fieldSelector=metadata.name%3Dip-AWSIP.eu-west-2.compute.internal otel.name="list" otel.kind="client"}: hyper::client::connect::http: Http::connect; scheme=Some("https"), host=Some("172.20.0.1"), port=None

2023-01-11T17:37:12.008116Z DEBUG HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/nodes?&fieldSelector=metadata.name%3Dip-AWSIP.eu-west-2.compute.internal otel.name="list" otel.kind="client"}: hyper::client::connect::http: connecting to 172.20.0.1:443

2023-01-11T17:37:12.008436Z TRACE mio::poll: registering event source with poller: token=Token(0), interests=READABLE | WRITABLE

2023-01-11T17:37:12.008753Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/pods?&fieldSelector=spec.nodeName%3Dip-AWSIP.eu-west-2.compute.internal&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: mio::poll: registering event source with poller: token=Token(1), interests=READABLE | WRITABLE

2023-01-11T17:37:12.008756Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/nodes?&fieldSelector=metadata.name%3Dip-AWSIP.eu-west-2.compute.internal otel.name="list" otel.kind="client"}: mio::poll: registering event source with poller: token=Token(2), interests=READABLE | WRITABLE

2023-01-11T17:37:12.008760Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: mio::poll: registering event source with poller: token=Token(3), interests=READABLE | WRITABLE

2023-01-11T17:37:12.012316Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: file_source::checkpointer: Loaded checkpoint data.

2023-01-11T17:37:12.014255Z DEBUG HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: hyper::client::connect::http: connected to 172.20.0.1:443

2023-01-11T17:37:12.282696Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: hyper::client::conn: client handshake Http1

2023-01-11T17:37:12.282734Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: hyper::client::client: handshake complete, spawning background dispatcher task

2023-01-11T17:37:12.282813Z TRACE want: signal: Want

2023-01-11T17:37:12.282827Z TRACE want: signal found waiting giver, notifying

2023-01-11T17:37:12.282846Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Init, writing: Init, keep_alive: Busy }

2023-01-11T17:37:12.282874Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: want: poll_want: taker wants!

2023-01-11T17:37:12.282887Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&labelSelector=vector.dev%2Fexclude%21%3Dtrue otel.name="list" otel.kind="client"}: hyper::client::pool: checkout dropped for ("https", 172.20.0.1)

2023-01-11T17:37:12.282944Z TRACE encode_headers: hyper::proto::h1::role: Client::encode method=GET, body=None

2023-01-11T17:37:12.283159Z DEBUG hyper::proto::h1::io: flushed 1287 bytes

2023-01-11T17:37:12.283172Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Init, writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.292565Z TRACE hyper::proto::h1::conn: Conn::read_head

2023-01-11T17:37:12.292665Z TRACE hyper::proto::h1::io: received 1179 bytes

2023-01-11T17:37:12.292695Z TRACE parse_headers: hyper::proto::h1::role: Response.parse bytes=1179

2023-01-11T17:37:12.292718Z TRACE parse_headers: hyper::proto::h1::role: Response.parse Complete(341)

2023-01-11T17:37:12.292767Z DEBUG hyper::proto::h1::io: parsed 7 headers

2023-01-11T17:37:12.292778Z DEBUG hyper::proto::h1::conn: incoming body is chunked encoding

2023-01-11T17:37:12.292817Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Size, 0)

2023-01-11T17:37:12.292838Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.292844Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.292849Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.292854Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.292860Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.292866Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.292873Z TRACE hyper::proto::h1::decode: Chunk size is 179604

2023-01-11T17:37:12.292879Z DEBUG hyper::proto::h1::decode: incoming chunked header: 0x2BD94 (179604 bytes)

2023-01-11T17:37:12.292885Z TRACE hyper::proto::h1::decode: Chunked read, remaining=179604

2023-01-11T17:37:12.292896Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 178773)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.298377Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 178773)

2023-01-11T17:37:12.298406Z TRACE hyper::proto::h1::decode: Chunked read, remaining=178773

2023-01-11T17:37:12.298612Z TRACE hyper::proto::h1::io: received 2358 bytes

2023-01-11T17:37:12.298640Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 176415)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.298677Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 176415)

2023-01-11T17:37:12.298689Z TRACE hyper::proto::h1::decode: Chunked read, remaining=176415

2023-01-11T17:37:12.298699Z TRACE hyper::proto::h1::io: received 559 bytes

2023-01-11T17:37:12.298707Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 175856)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.298733Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 175856)

2023-01-11T17:37:12.298752Z TRACE hyper::proto::h1::decode: Chunked read, remaining=175856

2023-01-11T17:37:12.298812Z TRACE hyper::proto::h1::io: received 4716 bytes

2023-01-11T17:37:12.298834Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 171140)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.298865Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 171140)

2023-01-11T17:37:12.298874Z TRACE hyper::proto::h1::decode: Chunked read, remaining=171140

2023-01-11T17:37:12.298916Z TRACE hyper::proto::h1::io: received 5895 bytes

2023-01-11T17:37:12.298935Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 165245)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.298964Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 165245)

2023-01-11T17:37:12.298973Z TRACE hyper::proto::h1::decode: Chunked read, remaining=165245

2023-01-11T17:37:12.299034Z TRACE hyper::proto::h1::io: received 7074 bytes

2023-01-11T17:37:12.299050Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 158171)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.299067Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 158171)

2023-01-11T17:37:12.299075Z TRACE hyper::proto::h1::decode: Chunked read, remaining=158171

2023-01-11T17:37:12.299222Z TRACE hyper::proto::h1::io: received 8192 bytes

2023-01-11T17:37:12.299243Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 149979)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.299293Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 149979)

2023-01-11T17:37:12.299302Z TRACE hyper::proto::h1::decode: Chunked read, remaining=149979

2023-01-11T17:37:12.299312Z TRACE hyper::proto::h1::io: received 61 bytes

2023-01-11T17:37:12.299321Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 149918)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.299333Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 149918)

2023-01-11T17:37:12.299340Z TRACE hyper::proto::h1::decode: Chunked read, remaining=149918

2023-01-11T17:37:12.299445Z TRACE hyper::proto::h1::io: received 9432 bytes

2023-01-11T17:37:12.299456Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 140486)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.299478Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 140486)

2023-01-11T17:37:12.299486Z TRACE hyper::proto::h1::decode: Chunked read, remaining=140486

2023-01-11T17:37:12.299561Z TRACE hyper::proto::h1::io: received 10611 bytes

2023-01-11T17:37:12.299572Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 129875)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.299591Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 129875)

2023-01-11T17:37:12.299599Z TRACE hyper::proto::h1::decode: Chunked read, remaining=129875

2023-01-11T17:37:12.299629Z TRACE hyper::proto::h1::io: received 11790 bytes

2023-01-11T17:37:12.299639Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 118085)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.299706Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 118085)

2023-01-11T17:37:12.299714Z TRACE hyper::proto::h1::decode: Chunked read, remaining=118085

2023-01-11T17:37:12.299764Z TRACE hyper::proto::h1::io: received 12969 bytes

2023-01-11T17:37:12.299773Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 105116)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.299793Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 105116)

2023-01-11T17:37:12.299800Z TRACE hyper::proto::h1::decode: Chunked read, remaining=105116

2023-01-11T17:37:12.299938Z TRACE hyper::proto::h1::io: received 14148 bytes

2023-01-11T17:37:12.299950Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 90968)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.299970Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 90968)

2023-01-11T17:37:12.299977Z TRACE hyper::proto::h1::decode: Chunked read, remaining=90968

2023-01-11T17:37:12.300006Z TRACE hyper::proto::h1::io: received 15327 bytes

2023-01-11T17:37:12.300016Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 75641)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.300107Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 75641)

2023-01-11T17:37:12.300117Z TRACE hyper::proto::h1::decode: Chunked read, remaining=75641

2023-01-11T17:37:12.300246Z TRACE hyper::proto::h1::io: received 16384 bytes

2023-01-11T17:37:12.300258Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 59257)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.300283Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 59257)

2023-01-11T17:37:12.300291Z TRACE hyper::proto::h1::decode: Chunked read, remaining=59257

2023-01-11T17:37:12.300333Z TRACE hyper::proto::h1::io: received 16384 bytes

2023-01-11T17:37:12.300342Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 42873)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.300364Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 42873)

2023-01-11T17:37:12.300708Z TRACE hyper::proto::h1::decode: Chunked read, remaining=42873

2023-01-11T17:37:12.300824Z TRACE hyper::proto::h1::io: received 16384 bytes

2023-01-11T17:37:12.300839Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 26489)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.300870Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 26489)

2023-01-11T17:37:12.300877Z TRACE hyper::proto::h1::decode: Chunked read, remaining=26489

2023-01-11T17:37:12.300911Z TRACE hyper::proto::h1::io: received 16384 bytes

2023-01-11T17:37:12.300922Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 10105)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.300947Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 10105)

2023-01-11T17:37:12.300954Z TRACE hyper::proto::h1::decode: Chunked read, remaining=10105

2023-01-11T17:37:12.300983Z TRACE hyper::proto::h1::io: received 10105 bytes

2023-01-11T17:37:12.300992Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(BodyCr, 0)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.301010Z TRACE hyper::proto::h1::decode: decode; state=Chunked(BodyCr, 0)

2023-01-11T17:37:12.301023Z TRACE hyper::proto::h1::io: received 7 bytes

2023-01-11T17:37:12.301030Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.301036Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.301043Z TRACE hyper::proto::h1::decode: Chunk size is 0

2023-01-11T17:37:12.301058Z TRACE hyper::proto::h1::decode: end of chunked

2023-01-11T17:37:12.301064Z DEBUG hyper::proto::h1::conn: incoming body completed

2023-01-11T17:37:12.301098Z TRACE hyper::proto::h1::conn: maybe_notify; read_from_io blocked

2023-01-11T17:37:12.301114Z TRACE want: signal: Want

2023-01-11T17:37:12.301121Z TRACE want: signal found waiting giver, notifying

2023-01-11T17:37:12.301130Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Init, writing: Init, keep_alive: Idle }

2023-01-11T17:37:12.301139Z TRACE want: signal: Want

2023-01-11T17:37:12.301145Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Init, writing: Init, keep_alive: Idle }

2023-01-11T17:37:12.301154Z TRACE want: poll_want: taker wants!

2023-01-11T17:37:12.301161Z TRACE hyper::client::pool: put; add idle connection for ("https", 172.20.0.1)

2023-01-11T17:37:12.301178Z TRACE hyper::client::pool: put; found waiter for ("https", 172.20.0.1)

2023-01-11T17:37:12.301212Z DEBUG HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/pods?&fieldSelector=spec.nodeName%

-- Huge blob of api response--

2023-01-11T17:37:12.315257Z TRACE tower::buffer::service: sending request to buffer worker

2023-01-11T17:37:12.315310Z TRACE tower::buffer::worker: worker polling for next message

2023-01-11T17:37:12.315321Z TRACE tower::buffer::worker: processing new request

2023-01-11T17:37:12.315329Z TRACE tower::buffer::worker: resumed=false worker received request; waiting for service readiness

2023-01-11T17:37:12.315337Z DEBUG tower::buffer::worker: service.ready=true processing request

2023-01-11T17:37:12.315353Z TRACE tower::buffer::worker: returning response future

2023-01-11T17:37:12.315349Z TRACE encode_headers: hyper::proto::h1::role: Client::encode method=GET, body=None

2023-01-11T17:37:12.315360Z TRACE tower::buffer::worker: worker polling for next message

2023-01-11T17:37:12.315733Z DEBUG HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&watch=true&resourceVersion=491323450&timeoutSeconds=290&labelSelector=vector.dev%2Fexclude%21%3Dtrue&allowWatchBookmarks=true otel.name="watch" otel.kind="client"}: kube_client::client::builder: requesting

2023-01-11T17:37:12.315769Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&watch=true&resourceVersion=491323450&timeoutSeconds=290&labelSelector=vector.dev%2Fexclude%21%3Dtrue&allowWatchBookmarks=true otel.name="watch" otel.kind="client"}: hyper::client::pool: checkout waiting for idle connection: ("https", 172.20.0.1)

2023-01-11T17:37:12.315776Z DEBUG hyper::proto::h1::io: flushed 1352 bytes

2023-01-11T17:37:12.315785Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Init, writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.315801Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&watch=true&resourceVersion=491323450&timeoutSeconds=290&labelSelector=vector.dev%2Fexclude%21%3Dtrue&allowWatchBookmarks=true otel.name="watch" otel.kind="client"}: hyper::client::connect::http: Http::connect; scheme=Some("https"), host=Some("172.20.0.1"), port=None

2023-01-11T17:37:12.315814Z DEBUG HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&watch=true&resourceVersion=491323450&timeoutSeconds=290&labelSelector=vector.dev%2Fexclude%21%3Dtrue&allowWatchBookmarks=true otel.name="watch" otel.kind="client"}: hyper::client::connect::http: connecting to 172.20.0.1:443

2023-01-11T17:37:12.316324Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&watch=true&resourceVersion=491323450&timeoutSeconds=290&labelSelector=vector.dev%2Fexclude%21%3Dtrue&allowWatchBookmarks=true otel.name="watch" otel.kind="client"}: mio::poll: registering event source with poller: token=Token(4), interests=READABLE | WRITABLE

2023-01-11T17:37:12.317781Z DEBUG HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&watch=true&resourceVersion=491323450&timeoutSeconds=290&labelSelector=vector.dev%2Fexclude%21%3Dtrue&allowWatchBookmarks=true otel.name="watch" otel.kind="client"}: hyper::client::connect::http: connected to 172.20.0.1:443

2023-01-11T17:37:12.330715Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&watch=true&resourceVersion=491323450&timeoutSeconds=290&labelSelector=vector.dev%2Fexclude%21%3Dtrue&allowWatchBookmarks=true otel.name="watch" otel.kind="client"}: hyper::client::conn: client handshake Http1

2023-01-11T17:37:12.330753Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&watch=true&resourceVersion=491323450&timeoutSeconds=290&labelSelector=vector.dev%2Fexclude%21%3Dtrue&allowWatchBookmarks=true otel.name="watch" otel.kind="client"}: hyper::client::client: handshake complete, spawning background dispatcher task

2023-01-11T17:37:12.330789Z TRACE want: signal: Want

2023-01-11T17:37:12.330796Z TRACE want: signal found waiting giver, notifying

2023-01-11T17:37:12.330802Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Init, writing: Init, keep_alive: Busy }

2023-01-11T17:37:12.330816Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&watch=true&resourceVersion=491323450&timeoutSeconds=290&labelSelector=vector.dev%2Fexclude%21%3Dtrue&allowWatchBookmarks=true otel.name="watch" otel.kind="client"}: want: poll_want: taker wants!

2023-01-11T17:37:12.330826Z TRACE HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/namespaces?&watch=true&resourceVersion=491323450&timeoutSeconds=290&labelSelector=vector.dev%2Fexclude%21%3Dtrue&allowWatchBookmarks=true otel.name="watch" otel.kind="client"}: hyper::client::pool: checkout dropped for ("https", 172.20.0.1)

2023-01-11T17:37:12.330854Z TRACE encode_headers: hyper::proto::h1::role: Client::encode method=GET, body=None

2023-01-11T17:37:12.331029Z DEBUG hyper::proto::h1::io: flushed 1368 bytes

2023-01-11T17:37:12.331058Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Init, writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.333815Z TRACE hyper::proto::h1::conn: Conn::read_head

2023-01-11T17:37:12.333881Z TRACE hyper::proto::h1::io: received 341 bytes

2023-01-11T17:37:12.333901Z TRACE parse_headers: hyper::proto::h1::role: Response.parse bytes=341

2023-01-11T17:37:12.333915Z TRACE parse_headers: hyper::proto::h1::role: Response.parse Complete(341)

2023-01-11T17:37:12.333944Z DEBUG hyper::proto::h1::io: parsed 7 headers

2023-01-11T17:37:12.333951Z DEBUG hyper::proto::h1::conn: incoming body is chunked encoding

2023-01-11T17:37:12.333967Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Size, 0)

2023-01-11T17:37:12.333974Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.333992Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Size, 0)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.334031Z TRACE kube_client::client: headers: {"audit-id": "09950b10-3328-4daa-87d3-4a752841776a", "cache-control": "no-cache, private", "content-type": "application/json", "x-kubernetes-pf-flowschema-uid": "56847d98-65de-47d9-86ba-67cef57766df", "x-kubernetes-pf-prioritylevel-uid": "53f2ea6d-3bf3-4231-a47a-e164ef55cc2c", "date": "Wed, 11 Jan 2023 17:37:12 GMT", "transfer-encoding": "chunked"}

2023-01-11T17:37:12.338098Z TRACE hyper::proto::h1::conn: Conn::read_head

2023-01-11T17:37:12.338251Z TRACE hyper::proto::h1::io: received 4096 bytes

2023-01-11T17:37:12.338277Z TRACE parse_headers: hyper::proto::h1::role: Response.parse bytes=4096

2023-01-11T17:37:12.338294Z TRACE parse_headers: hyper::proto::h1::role: Response.parse Complete(341)

2023-01-11T17:37:12.338330Z DEBUG hyper::proto::h1::io: parsed 7 headers

2023-01-11T17:37:12.338339Z DEBUG hyper::proto::h1::conn: incoming body is chunked encoding

2023-01-11T17:37:12.338356Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Size, 0)

2023-01-11T17:37:12.338364Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.338370Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.338377Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.338383Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.338389Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.338395Z TRACE hyper::proto::h1::decode: Chunk size is 51397

2023-01-11T17:37:12.338402Z DEBUG hyper::proto::h1::decode: incoming chunked header: 0xC8C5 (51397 bytes)

2023-01-11T17:37:12.338409Z TRACE hyper::proto::h1::decode: Chunked read, remaining=51397

2023-01-11T17:37:12.338421Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 47648)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.338497Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 47648)

2023-01-11T17:37:12.338508Z TRACE hyper::proto::h1::decode: Chunked read, remaining=47648

2023-01-11T17:37:12.338539Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 47648)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.343184Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 47648)

2023-01-11T17:37:12.343231Z TRACE hyper::proto::h1::decode: Chunked read, remaining=47648

2023-01-11T17:37:12.343396Z TRACE hyper::proto::h1::io: received 16384 bytes

2023-01-11T17:37:12.343421Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 31264)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.343457Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 31264)

2023-01-11T17:37:12.343465Z TRACE hyper::proto::h1::decode: Chunked read, remaining=31264

2023-01-11T17:37:12.343508Z TRACE hyper::proto::h1::io: received 16384 bytes

2023-01-11T17:37:12.343523Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(Body, 14880)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.343582Z TRACE hyper::proto::h1::decode: decode; state=Chunked(Body, 14880)

2023-01-11T17:37:12.343591Z TRACE hyper::proto::h1::decode: Chunked read, remaining=14880

2023-01-11T17:37:12.343718Z TRACE hyper::proto::h1::io: received 14880 bytes

2023-01-11T17:37:12.343730Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Body(Chunked(BodyCr, 0)), writing: KeepAlive, keep_alive: Busy }

2023-01-11T17:37:12.343758Z TRACE hyper::proto::h1::decode: decode; state=Chunked(BodyCr, 0)

2023-01-11T17:37:12.343772Z TRACE hyper::proto::h1::io: received 7 bytes

2023-01-11T17:37:12.343780Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.343786Z TRACE hyper::proto::h1::decode: Read chunk hex size

2023-01-11T17:37:12.343793Z TRACE hyper::proto::h1::decode: Chunk size is 0

2023-01-11T17:37:12.343801Z TRACE hyper::proto::h1::decode: end of chunked

2023-01-11T17:37:12.343807Z DEBUG hyper::proto::h1::conn: incoming body completed

2023-01-11T17:37:12.343824Z TRACE hyper::proto::h1::conn: maybe_notify; read_from_io blocked

2023-01-11T17:37:12.343842Z TRACE want: signal: Want

2023-01-11T17:37:12.343852Z TRACE want: signal found waiting giver, notifying

2023-01-11T17:37:12.343879Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Init, writing: Init, keep_alive: Idle }

2023-01-11T17:37:12.343892Z TRACE want: signal: Want

2023-01-11T17:37:12.343901Z TRACE hyper::proto::h1::conn: flushed({role=client}): State { reading: Init, writing: Init, keep_alive: Idle }

2023-01-11T17:37:12.343910Z TRACE want: poll_want: taker wants!

2023-01-11T17:37:12.343920Z TRACE hyper::client::pool: put; add idle connection for ("https", 172.20.0.1)

2023-01-11T17:37:12.343947Z TRACE hyper::client::pool: put; found waiter for ("https", 172.20.0.1)

2023-01-11T17:37:12.343986Z DEBUG HTTP{http.method=GET http.url=https://172.20.0.1/api/v1/nodes?

-- huge blob of api response --

2023-01-11T17:38:13.021669Z TRACE vector: Beep.

2023-01-11T17:38:14.004338Z DEBUG source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: file_source::file_server: event_throughput=0.000/sec bytes_throughput=0.000/sec ratios={"discovery": 4.516799e-8, "other": 2.4253599e-5, "sending": 1.655407e-5, "sleeping": 0.9999593}

2023-01-11T17:38:14.004410Z TRACE source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::sources::kubernetes_logs::k8s_paths_provider: Verifying Namespace metadata for pod. pod=Some("datadog-windows-c4t8f")

2023-01-11T17:38:14.004431Z TRACE source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::sources::kubernetes_logs::k8s_paths_provider: Providing log paths for pod. pod=Some("datadog-windows-c4t8f")

2023-01-11T17:38:14.008186Z TRACE source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::sources::kubernetes_logs::k8s_paths_provider: Verifying Namespace metadata for pod. pod=Some("usercontainer-69857cb544-wr49c")

2023-01-11T17:38:14.008231Z TRACE source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::sources::kubernetes_logs::k8s_paths_provider: Providing log paths for pod. pod=Some("usercontainer-69857cb544-wr49c")

2023-01-11T17:38:14.009865Z TRACE source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::sources::kubernetes_logs::k8s_paths_provider: Verifying Namespace metadata for pod. pod=Some("usercontainer-574d967fc6-mvsqq")

2023-01-11T17:38:14.009895Z TRACE source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::sources::kubernetes_logs::k8s_paths_provider: Providing log paths for pod. pod=Some("usercontainer-574d967fc6-mvsqq")

2023-01-11T17:38:14.011606Z TRACE source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::sources::kubernetes_logs::k8s_paths_provider: Verifying Namespace metadata for pod. pod=Some("ebs-csi-node-windows-9hsp8")

2023-01-11T17:38:14.011634Z TRACE source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::sources::kubernetes_logs::k8s_paths_provider: Providing log paths for pod. pod=Some("ebs-csi-node-windows-9hsp8")

2023-01-11T17:38:14.014001Z TRACE source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::sources::kubernetes_logs::k8s_paths_provider: Verifying Namespace metadata for pod. pod=Some("usercontainer-74ccf469f7-blwk7")

2023-01-11T17:38:14.014031Z TRACE source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::sources::kubernetes_logs::k8s_paths_provider: Providing log paths for pod. pod=Some("usercontainer-74ccf469f7-blwk7")

2023-01-11T17:38:14.018311Z TRACE vector: Beep.

2023-01-11T17:38:15.026038Z TRACE vector: Beep.

2023-01-11T17:38:16.022042Z TRACE vector: Beep.

2023-01-11T17:38:17.016227Z TRACE vector: Beep.

2023-01-11T17:38:17.016220Z DEBUG sink{component_kind="sink" component_id=loki_exporter component_type=loki component_name=loki_exporter}: vector::utilization: utilization=0.000000021816179173424115

2023-01-11T17:38:18.015835Z TRACE vector: Beep.

2023-01-11T17:38:19.012122Z TRACE vector: Beep.

2023-01-11T17:38:20.019769Z TRACE vector: Beep.

2023-01-11T17:38:21.019000Z TRACE vector: Beep.

2023-01-11T17:38:22.019491Z DEBUG sink{component_kind="sink" component_id=loki_exporter component_type=loki component_name=loki_exporter}: vector::utilization: utilization=0.000000020170037771018576

2023-01-11T17:38:22.019558Z TRACE vector: Beep.

2023-01-11T17:38:23.022995Z TRACE vector: Beep.

2023-01-11T17:38:24.009459Z TRACE vector: Beep.

2023-01-11T17:38:25.016605Z TRACE vector: Beep.

2023-01-11T17:38:26.018239Z TRACE vector: Beep.

2023-01-11T17:38:27.009109Z TRACE vector: Beep.

Repeats

Example Data

No response

Additional Context

Container repo https://github.com/ChrisMcKee/vector-nt

References

No response

Hey @ChrisMcKee - very interesting! I'm not familiar with Windows containers, so I'm not sure if this is a bug, or just something we can't currently support without additional features.

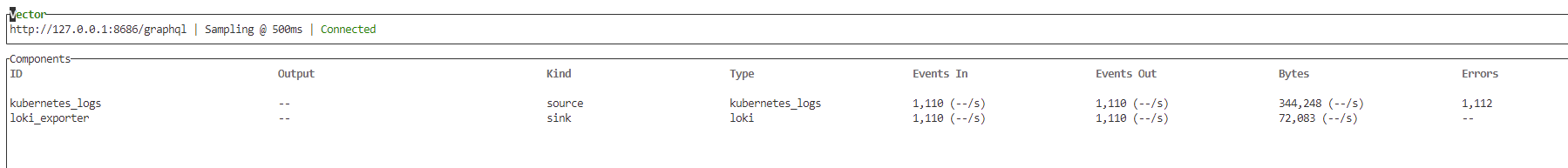

It seems like we identified the log files, but aren't processing them? I'm curious if you can view the internal metrics around the source during your tests. I suspect some of the metrics could be useful in identifying what's going wrong.

Hi, running the tap against it during run showed no data being processed. I can tell its read the API and it appears to be looking at the base folder. I can't tell if it's entering the nested folder or following the symlink to the file. Assuming the reading code is the same I can try the file reader to read the logs from path. Might at least show if it's reading the files

Running an internal_metrics source -> prometheus_exporter (or whatever convenient metrics sink) should giver richer metrics, I believe there are metrics exporter on a per file basis - which could answer that question

2023-01-12T16:02:41.685028Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Found new file to watch. file=/var\log\pods\datadog_datadog-windows-c4t8f_f422afd5-6b9e-43c7-92c5-7ab0b51f9ed7\agent\0.log

Changed the container from Windows Server Nano to Windows Server Core (changed my repo to publish both); I guess the issue with accessing the logs is the same reason datadog chose the fat image over nano. Bit annoying.

It's still not getting to the destination though I rebooted a log to force some sign of life and can see errors

2023-01-12T17:56:43.852946Z INFO vector::app: Internal log rate limit configured. internal_log_rate_secs=10

2023-01-12T17:56:43.853195Z INFO vector::app: Log level is enabled. level="Info"

2023-01-12T17:56:43.853998Z INFO vector::app: Loading configs. paths=["/etc\\vector"]

2023-01-12T17:56:43.876623Z INFO vector::sources::kubernetes_logs: Obtained Kubernetes Node name to collect logs for (self). self_node_name="ip-10-0-3-68.eu-west-2.compute.internal"

2023-01-12T17:56:43.899654Z INFO vector::sources::kubernetes_logs: Excluding matching files. exclude_paths=["**/*datadog*/**"]

2023-01-12T17:56:44.020700Z INFO vector::topology::running: Running healthchecks.

2023-01-12T17:56:44.020992Z INFO vector: Vector has started. debug="false" version="0.26.0" arch="x86_64" revision="c6b5bc2 2022-12-05"

2023-01-12T17:56:44.021038Z INFO vector::internal_events::api: API server running. address=127.0.0.1:8686 playground=off

2023-01-12T17:56:44.026274Z INFO vector::topology::builder: Healthcheck: Disabled.

2023-01-12T17:56:44.058653Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: file_source::checkpointer: Loaded checkpoint data.

2023-01-12T17:56:50.555274Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Resuming to watch file. file=/var\log\pods\kube-system_ebs-csi-node-windows-9hsp8_4e7050cb-cb97-41ff-8838-7af69bf7c5b9\ebs-plugin\0.log file_position=576

2023-01-12T17:56:50.556497Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Resuming to watch file. file=/var\log\pods\kube-system_ebs-csi-node-windows-9hsp8_4e7050cb-cb97-41ff-8838-7af69bf7c5b9\liveness-probe\0.log file_position=2191

2023-01-12T17:56:50.557950Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Resuming to watch file. file=/var\log\pods\kube-system_ebs-csi-node-windows-9hsp8_4e7050cb-cb97-41ff-8838-7af69bf7c5b9\node-driver-registrar\0.log file_position=2459

2023-01-12T17:56:50.558592Z WARN source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Currently ignoring file too small to fingerprint. file=/var\log\pods\userapp-de-test_userapp-api-de-test-554f548957-p4h6h_9946cbed-66c2-40c7-ac86-c067e6893a85\userapp-api-de-test\0.log

2023-01-12T17:56:50.559906Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Resuming to watch file. file=/var\log\pods\userapp-de-test_appplat-kernel-scheduler-69857cb544-wr49c_087bb2e8-a56f-4700-b2be-8043d68ce5c4\kernel-scheduler\0.log file_position=1031

2023-01-12T17:56:50.561292Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Resuming to watch file. file=/var\log\pods\userapp-de-test_appplat-kernel-74ccf469f7-tc5xv_3391618f-7481-41b7-9d18-15e70d2dc8cd\kernel\0.log file_position=345358

2023-01-12T17:56:50.561881Z WARN source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Currently ignoring file too small to fingerprint. file=/var\log\pods\userapp-uk-test_userapp-api-uk-test-574d967fc6-mvsqq_f990e29e-3f28-4384-8447-0730d6dbc0ab\userapp-api-uk-test\0.log

2023-01-12T17:57:27.675816Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Found new file to watch. file=/var\log\pods\userapp-de-test_appplat-kernel-74ccf469f7-hgchq_f2e29ef4-cea9-42f3-aaec-e0d1283f3227\kernel\0.log

2023-01-12T17:57:27.676751Z ERROR source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::internal_events::kubernetes_logs: Failed to annotate event with pod metadata. event=Log(LogEvent { inner: Inner { fields: Object({"file": Bytes(b"/var\\log\\pods\\userapp-de-test_appplat-kernel-74ccf469f7-hgchq_f2e29ef4-cea9-42f3-aaec-e0d1283f3227\\kernel\\0.log"), "message": Bytes(b"{\"log\":\"KernelServiceHost setting up logging\\r\\n\",\"stream\":\"stdout\",\"time\":\"2023-01-12T17:57:26.7951258Z\"}"), "source_type": Bytes(b"kubernetes_logs"), "timestamp": Timestamp(2023-01-12T17:57:27.676535400Z)}), size_cache: AtomicCell { value: None }, json_encoded_size_cache: AtomicCell { value: Some(333) } }, metadata: EventMetadata { value: Object({}), secrets: {}, finalizers: EventFinalizers([]), schema_definition: Definition { event_kind: Kind { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), undefined: Some(()), array: Some(Collection { known: {}, unknown: Unknown(Infinite(Infinite { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), array: Some(()), object: Some(()) })) }), object: Some(Collection { known: {}, unknown: Unknown(Infinite(Infinite { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), array: Some(()), object: Some(()) })) }) }, metadata_kind: Kind { bytes: None, integer: None, float: None, boolean: None, timestamp: None, regex: None, null: None, undefined: None, array: None, object: Some(Collection { known: {}, unknown: Unknown(Exact(Kind { bytes: None, integer: None, float: None, boolean: None, timestamp: None, regex: None, null: None, undefined: Some(()), array: None, object: None })) }) }, meaning: {}, log_namespaces: {Vector, Legacy} } } }) error_code="annotation_failed" error_type="reader_failed" stage="processing" internal_log_rate_limit=true

2023-01-12T17:57:27.677077Z ERROR source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::internal_events::kubernetes_logs: Internal log [Failed to annotate event with pod metadata.] is being rate limited.

2023-01-12T17:57:28.699006Z WARN sink{component_kind="sink" component_id=loki_exporter component_type=loki component_name=loki_exporter}:request{request_id=1}:http: vector::internal_events::http_client: HTTP error. error=error trying to connect: error:1416F086:SSL routines:tls_process_server_certificate:certificate verify failed:ssl\statem\statem_clnt.c:1921:: unable to get local issuer certificate error_type="request_failed" stage="processing" internal_log_rate_limit=true

2023-01-12T17:57:28.699154Z WARN sink{component_kind="sink" component_id=loki_exporter component_type=loki component_name=loki_exporter}:request{request_id=1}: vector::sinks::util::retries: Retrying after error. error=Failed to make HTTP(S) request: Failed to make HTTP(S) request: error trying to connect: error:1416F086:SSL routines:tls_process_server_certificate:certificate verify failed:ssl\statem\statem_clnt.c:1921:: unable to get local issuer certificate internal_log_rate_limit=true

2023-01-12T17:57:29.740972Z WARN sink{component_kind="sink" component_id=loki_exporter component_type=loki component_name=loki_exporter}:request{request_id=1}:http: vector::internal_events::http_client: Internal log [HTTP error.] is being rate limited.

2023-01-12T17:57:29.741095Z WARN sink{component_kind="sink" component_id=loki_exporter component_type=loki component_name=loki_exporter}:request{request_id=1}: vector::sinks::util::retries: Internal log [Retrying after error.] is being rate limited.

2023-01-12T17:57:31.984214Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Stopped watching file. file=/var\log\pods\userapp-de-test_appplat-kernel-74ccf469f7-tc5xv_3391618f-7481-41b7-9d18-15e70d2dc8cd\kernel\0.log

2023-01-12T17:57:37.683475Z ERROR source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::internal_events::kubernetes_logs: Internal log [Failed to annotate event with pod metadata.] has been rate limited 188 times.

2023-01-12T17:57:37.683512Z ERROR source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::internal_events::kubernetes_logs: Failed to annotate event with pod metadata. event=Log(LogEvent { inner: Inner { fields: Object({"file": Bytes(b"/var\\log\\pods\\userapp-de-test_appplat-kernel-74ccf469f7-hgchq_f2e29ef4-cea9-42f3-aaec-e0d1283f3227\\kernel\\0.log"), "message": Bytes(b"{\"log\":\"2023-01-12 17:57:37.6634|INFO|EventHandlerProcess|handler=\\\"Handler\\\" handler=\\\"IHandleEvents\\u003cApplicantSubmitted\\u003e\\\" state=\\\"calling subscribe\\\"\\r\\n\",\"stream\":\"stdout\",\"time\":\"2023-01-12T17:57:37.6643146Z\"}"), "source_type": Bytes(b"kubernetes_logs"), "timestamp": Timestamp(2023-01-12T17:57:37.683165100Z)}), size_cache: AtomicCell { value: None }, json_encoded_size_cache: AtomicCell { value: Some(489) } }, metadata: EventMetadata { value: Object({}), secrets: {}, finalizers: EventFinalizers([]), schema_definition: Definition { event_kind: Kind { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), undefined: Some(()), array: Some(Collection { known: {}, unknown: Unknown(Infinite(Infinite { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), array: Some(()), object: Some(()) })) }), object: Some(Collection { known: {}, unknown: Unknown(Infinite(Infinite { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), array: Some(()), object: Some(()) })) }) }, metadata_kind: Kind { bytes: None, integer: None, float: None, boolean: None, timestamp: None, regex: None, null: None, undefined: None, array: None, object: Some(Collection { known: {}, unknown: Unknown(Exact(Kind { bytes: None, integer: None, float: None, boolean: None, timestamp: None, regex: None, null: None, undefined: Some(()), array: None, object: None })) }) }, meaning: {}, log_namespaces: {Vector, Legacy} } } }) error_code="annotation_failed" error_type="reader_failed" stage="processing" internal_log_rate_limit=true

2023-01-12T17:57:37.731546Z ERROR source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::internal_events::kubernetes_logs: Internal log [Failed to annotate event with pod metadata.] is being rate limited.

2023-01-12T17:57:40.876281Z WARN sink{component_kind="sink" component_id=loki_exporter component_type=loki component_name=loki_exporter}:request{request_id=1}:http: vector::internal_events::http_client: Internal log [HTTP error.] has been rate limited 4 times.

2023-01-12T17:57:40.876324Z WARN sink{component_kind="sink" component_id=loki_exporter component_type=loki component_name=loki_exporter}:request{request_id=1}:http: vector::internal_events::http_client: HTTP error. error=error trying to connect: error:1416F086:SSL routines:tls_process_server_certificate:certificate verify failed:ssl\statem\statem_clnt.c:1921:: unable to get local issuer certificate error_type="request_failed" stage="processing" internal_log_rate_limit=true

2023-01-12T17:57:40.876420Z WARN sink{component_kind="sink" component_id=loki_exporter component_type=loki component_name=loki_exporter}:request{request_id=1}: vector::sinks::util::retries: Internal log [Retrying after error.] has been rate limited 4 times.

2023-01-12T17:57:40.876434Z WARN sink{component_kind="sink" component_id=loki_exporter component_type=loki component_name=loki_exporter}:request{request_id=1}: vector::sinks::util::retries: Retrying after error. error=Failed to make HTTP(S) request: Failed to make HTTP(S) request: error trying to connect: error:1416F086:SSL routines:tls_process_server_certificate:certificate verify failed:ssl\statem\statem_clnt.c:1921:: unable to get local issuer certificate internal_log_rate_limit=true

2023-01-12T17:58:01.933252Z WARN sink{component_kind="sink" component_id=loki_exporter component_type=loki component_name=loki_exporter}:request{request_id=1}: vector::sinks::util::retries: Internal log [Retrying after error.] has been rate limited 1 times.

--

The cert on loki seems ok though (grafana cloud cert)

Checking from the logging pod seems ok

curl --verbose https://logs-prod-008.grafana.net

* Trying xxxx:443...

* Connected to logs-prod-xxx.grafana.net (xxx) port 443 (#0)

* schannel: disabled automatic use of client certificate

* ALPN: offers http/1.1

* ALPN: server accepted http/1.1

> GET / HTTP/1.1

> Host: logs-prod-xxx.grafana.net

> User-Agent: curl/7.83.1

> Accept: */*

>

* Mark bundle as not supporting multiuse

< HTTP/1.1 200 OK

< Date: Thu, 12 Jan 2023 18:05:37 GMT

< Content-Length: 2

< Content-Type: text/plain; charset=utf-8

< Via: 1.1 google

< Alt-Svc: h3=":443"; ma=2592000,h3-29=":443"; ma=2592000

<

OK* Connection #0 to host logs-prod-xxx.grafana.net left intact

I set verify cert false on loki and the tls error vanished but I still have

: Unknown(Exact(Kind { bytes: None, integer: None, float: None, boolean: None, timestamp: None, regex: None, null: None, undefined: Some(()), array: None, object: None })) }) }, meaning: {}, log_namespaces: {Vector, Legacy} } } }) error_code="annotation_failed" error_type="reader_failed" stage="processing" internal_log_rate_limit=true

2023-01-12T18:03:50.095739Z INFO vector::app: Internal log rate limit configured. internal_log_rate_secs=10

2023-01-12T18:03:50.095993Z INFO vector::app: Log level is enabled. level="info"

2023-01-12T18:03:50.096693Z INFO vector::app: Loading configs. paths=["/etc\\vector"]

2023-01-12T18:03:50.119415Z INFO vector::sources::kubernetes_logs: Obtained Kubernetes Node name to collect logs for (self). self_node_name="ip-10-0-3-68.eu-west-2.compute.internal"

2023-01-12T18:03:50.145318Z INFO vector::sources::kubernetes_logs: Excluding matching files. exclude_paths=["**/*datadog*/**"]

2023-01-12T18:03:50.145608Z WARN vector_core::tls::settings: The `verify_certificate` option is DISABLED, this may lead to security vulnerabilities.

2023-01-12T18:03:50.246430Z INFO vector::topology::running: Running healthchecks.

2023-01-12T18:03:50.246694Z INFO vector: Vector has started. debug="false" version="0.26.0" arch="x86_64" revision="c6b5bc2 2022-12-05"

2023-01-12T18:03:50.246737Z INFO vector::internal_events::api: API server running. address=127.0.0.1:8686 playground=off

2023-01-12T18:03:50.252628Z INFO vector::topology::builder: Healthcheck: Disabled.

2023-01-12T18:03:50.285012Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: file_source::checkpointer: Loaded checkpoint data.

2023-01-12T18:03:51.664931Z WARN source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Currently ignoring file too small to fingerprint. file=/var\log\pods\userapp-uk-test_userapp-api-uk-test-574d967fc6-mvsqq_f990e29e-3f28-4384-8447-0730d6dbc0ab\userapp-api-uk-test\0.log

2023-01-12T18:03:51.666146Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Resuming to watch file. file=/var\log\pods\userapp-de-test_userapp-p-kernel-74ccf469f7-hgchq_f2e29ef4-cea9-42f3-aaec-e0d1283f3227\kernel\0.log file_position=345348

2023-01-12T18:03:51.666567Z WARN source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Currently ignoring file too small to fingerprint. file=/var\log\pods\userapp-de-test_userapp-api-de-test-554f548957-p4h6h_9946cbed-66c2-40c7-ac86-c067e6893a85\userapp-api-de-test\0.log

2023-01-12T18:03:51.667689Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Resuming to watch file. file=/var\log\pods\kube-system_ebs-csi-node-windows-9hsp8_4e7050cb-cb97-41ff-8838-7af69bf7c5b9\ebs-plugin\0.log file_position=576

2023-01-12T18:03:51.668812Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Resuming to watch file. file=/var\log\pods\kube-system_ebs-csi-node-windows-9hsp8_4e7050cb-cb97-41ff-8838-7af69bf7c5b9\liveness-probe\0.log file_position=2191

2023-01-12T18:03:51.669954Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Resuming to watch file. file=/var\log\pods\kube-system_ebs-csi-node-windows-9hsp8_4e7050cb-cb97-41ff-8838-7af69bf7c5b9\node-driver-registrar\0.log file_position=2459

2023-01-12T18:03:51.671057Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Resuming to watch file. file=/var\log\pods\userapp-de-test_userapp-p-kernel-scheduler-69857cb544-wr49c_087bb2e8-a56f-4700-b2be-8043d68ce5c4\kernel-scheduler\0.log file_position=1031

2023-01-12T18:07:19.766573Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Found new file to watch. file=/var\log\pods\userapp-de-test_userapp-p-kernel-74ccf469f7-c5b22_998099fc-6535-492a-b8de-6b054db540f7\kernel\0.log

2023-01-12T18:07:19.766713Z INFO source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}:file_server: vector::internal_events::file::source: Stopped watching file. file=/var\log\pods\userapp-de-test_userapp-p-kernel-74ccf469f7-hgchq_f2e29ef4-cea9-42f3-aaec-e0d1283f3227\kernel\0.log

2023-01-12T18:07:19.767636Z ERROR source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::internal_events::kubernetes_logs: Failed to annotate event with pod metadata. event=Log(LogEvent { inner: Inner { fields: Object({"file": Bytes(b"/var\\log\\pods\\userapp-de-test_userapp-p-kernel-74ccf469f7-c5b22_998099fc-6535-492a-b8de-6b054db540f7\\kernel\\0.log"), "message": Bytes(b"{\"log\":\"KernelServiceHost setting up logging\\r\\n\",\"stream\":\"stdout\",\"time\":\"2023-01-12T18:07:19.0844228Z\"}"), "source_type": Bytes(b"kubernetes_logs"), "timestamp": Timestamp(2023-01-12T18:07:19.767438100Z)}), size_cache: AtomicCell { value: None }, json_encoded_size_cache: AtomicCell { value: Some(333) } }, metadata: EventMetadata { value: Object({}), secrets: {}, finalizers: EventFinalizers([]), schema_definition: Definition { event_kind: Kind { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), undefined: Some(()), array: Some(Collection { known: {}, unknown: Unknown(Infinite(Infinite { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), array: Some(()), object: Some(()) })) }), object: Some(Collection { known: {}, unknown: Unknown(Infinite(Infinite { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), array: Some(()), object: Some(()) })) }) }, metadata_kind: Kind { bytes: None, integer: None, float: None, boolean: None, timestamp: None, regex: None, null: None, undefined: None, array: None, object: Some(Collection { known: {}, unknown: Unknown(Exact(Kind { bytes: None, integer: None, float: None, boolean: None, timestamp: None, regex: None, null: None, undefined: Some(()), array: None, object: None })) }) }, meaning: {}, log_namespaces: {Vector, Legacy} } } }) error_code="annotation_failed" error_type="reader_failed" stage="processing" internal_log_rate_limit=true

2023-01-12T18:07:19.767943Z ERROR source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::internal_events::kubernetes_logs: Internal log [Failed to annotate event with pod metadata.] is being rate limited.

2023-01-12T18:07:29.801713Z ERROR source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::internal_events::kubernetes_logs: Internal log [Failed to annotate event with pod metadata.] has been rate limited 187 times.

2023-01-12T18:07:29.801754Z ERROR source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::internal_events::kubernetes_logs: Failed to annotate event with pod metadata. event=Log(LogEvent { inner: Inner { fields: Object({"file": Bytes(b"/var\\log\\pods\\userapp-de-test_userapp-p-kernel-74ccf469f7-c5b22_998099fc-6535-492a-b8de-6b054db540f7\\kernel\\0.log"), "message": Bytes(b"{\"log\":\"2023-01-12 18:07:29.7809|INFO|EventHandlerProcess|p=EventHandlerProcess Declared Consumer. queue='ApplicationHandlers', consumer tag='05f42a88-15a1-4f64-84ba-7953db518774' prefetchcount=10 priority=0 x-cancel-on-ha-failover=False\\r\\n\",\"stream\":\"stdout\",\"time\":\"2023-01-12T18:07:29.7809427Z\"}"), "source_type": Bytes(b"kubernetes_logs"), "timestamp": Timestamp(2023-01-12T18:07:29.801578200Z)}), size_cache: AtomicCell { value: None }, json_encoded_size_cache: AtomicCell { value: Some(582) } }, metadata: EventMetadata { value: Object({}), secrets: {}, finalizers: EventFinalizers([]), schema_definition: Definition { event_kind: Kind { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), undefined: Some(()), array: Some(Collection { known: {}, unknown: Unknown(Infinite(Infinite { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), array: Some(()), object: Some(()) })) }), object: Some(Collection { known: {}, unknown: Unknown(Infinite(Infinite { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), array: Some(()), object: Some(()) })) }) }, metadata_kind: Kind { bytes: None, integer: None, float: None, boolean: None, timestamp: None, regex: None, null: None, undefined: None, array: None, object: Some(Collection { known: {}, unknown: Unknown(Exact(Kind { bytes: None, integer: None, float: None, boolean: None, timestamp: None, regex: None, null: None, undefined: Some(()), array: None, object: None })) }) }, meaning: {}, log_namespaces: {Vector, Legacy} } } }) error_code="annotation_failed" error_type="reader_failed" stage="processing" internal_log_rate_limit=true

2023-01-12T18:07:29.823422Z ERROR source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::internal_events::kubernetes_logs: Internal log [Failed to annotate event with pod metadata.] is being rate limited.

2023-01-12T18:07:39.834532Z ERROR source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::internal_events::kubernetes_logs: Internal log [Failed to annotate event with pod metadata.] has been rate limited 447 times.

2023-01-12T18:07:39.834563Z ERROR source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::internal_events::kubernetes_logs: Failed to annotate event with pod metadata. event=Log(LogEvent { inner: Inner { fields: Object({"file": Bytes(b"/var\\log\\pods\\userapp-de-test_userapp-p-kernel-74ccf469f7-c5b22_998099fc-6535-492a-b8de-6b054db540f7\\kernel\\0.log"), "message": Bytes(b"{\"log\":\"2023-01-12 18:07:39.8131|INFO|EventHandlerProcess|p=EventHandlerProcess Declared App. queue='userappship.Consumer.From.p.Deleted.userappshipPublisher', prefetchcount=10 priority=0 x-cancel-on-ha-failover=False\\r\\n\",\"stream\":\"stdout\",\"time\":\"2023-01-12T18:07:39.8131542Z\"}"), "source_type": Bytes(b"kubernetes_logs"), "timestamp": Timestamp(2023-01-12T18:07:39.834397200Z)}), size_cache: AtomicCell { value: None }, json_encoded_size_cache: AtomicCell { value: Some(592) } }, metadata: EventMetadata { value: Object({}), secrets: {}, finalizers: EventFinalizers([]), schema_definition: Definition { event_kind: Kind { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), undefined: Some(()), array: Some(Collection { known: {}, unknown: Unknown(Infinite(Infinite { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), array: Some(()), object: Some(()) })) }), object: Some(Collection { known: {}, unknown: Unknown(Infinite(Infinite { bytes: Some(()), integer: Some(()), float: Some(()), boolean: Some(()), timestamp: Some(()), regex: Some(()), null: Some(()), array: Some(()), object: Some(()) })) }) }, metadata_kind: Kind { bytes: None, integer: None, float: None, boolean: None, timestamp: None, regex: None, null: None, undefined: None, array: None, object: Some(Collection { known: {}, unknown: Unknown(Exact(Kind { bytes: None, integer: None, float: None, boolean: None, timestamp: None, regex: None, null: None, undefined: Some(()), array: None, object: None })) }) }, meaning: {}, log_namespaces: {Vector, Legacy} } } }) error_code="annotation_failed" error_type="reader_failed" stage="processing" internal_log_rate_limit=true

2023-01-12T18:07:39.863210Z ERROR source{component_kind="source" component_id=kubernetes_logs component_type=kubernetes_logs component_name=kubernetes_logs}: vector::internal_events::kubernetes_logs: Internal log [Failed to annotate event with pod metadata.] is being rate limited.

If I re-enable verification and turn on healthcheck I get a DNS error

2023-01-12T18:30:16.787200Z WARN http: vector::internal_events::http_client: HTTP error. error=error trying to connect: dns error: No such host is known. (os error 11001) error_type="request_failed" stage="processing" internal_log_rate_limit=true

Bit confusing as the earlier logs with tls errors showed it had resolved the IP address

The TLS error reminds me of https://github.com/vectordotdev/vector/issues/13238 / https://github.com/vectordotdev/vector/issues/2256, but I'll do some additional digging - as well as trying to figure out why annotation is failing as well.

🤔 This might be as simple as the component having hardcoded /'s all over the place: https://github.com/vectordotdev/vector/blob/master/src/sources/kubernetes_logs/path_helpers.rs

🤔 This might be as simple as the component having hardcoded

/'s all over the place: https://github.com/vectordotdev/vector/blob/master/src/sources/kubernetes_logs/path_helpers.rs

I don't think the pathing is the issue as containerisation works fine with / it just; i.e. /var/log/vector would translate automatically to c:\var\log\vector; the machine doesn't care. https://github.com/MicrosoftDocs/Virtualization-Documentation/blob/main/windows-container-samples/nginx/Dockerfile even msft mix and match; as I can browse the paths on the container in k8s the mapping works fine; The logs themselves are also being read (content visible in the logs).

Not sure about the TLS issue in #13238 as in the container curl works fine

* Trying 34.zz.zz.zz:443...

* Connected to logs-zz-zz.grafana.net (34.zz.zz.zz) port 443 (#0)

* schannel: disabled automatic use of client certificate

* ALPN: offers http/1.1

* ALPN: server accepted http/1.1

> GET / HTTP/1.1

> Host: logs-zz-zz.grafana.net

> User-Agent: curl/7.83.1

> Accept: */*

>

* Mark bundle as not supporting multiuse

< HTTP/1.1 200 OK

< Date: Fri, 13 Jan 2023 11:48:08 GMT

< Content-Length: 2

< Content-Type: text/plain; charset=utf-8

< Via: 1.1 google

< Alt-Svc: h3=":443"; ma=2592000,h3-29=":443"; ma=2592000

<

OK* Connection #0 to host logs-zz-zz.grafana.net left intact

You do get some weird directories

Directory of C:\etc\vector

01/13/2023 10:54 AM <DIR> .

01/13/2023 10:54 AM <DIR> ..

01/13/2023 10:54 AM <DIR> ..2023_01_13_10_54_17.855863858

01/13/2023 10:54 AM <SYMLINKD> ..data [..2023_01_13_10_54_17.855863858]

01/13/2023 10:54 AM <SYMLINK> vector.yaml [..data\vector.yaml]

But vector seems to be reading the config fine (..data and ..2023(etc) just contain the vector.yaml from the configmap)

The temp data (writeable volume - pv) seems to work fine

C:\etc\temp\kubernetes_logs>type checkpoints.json

{"version":"1","checkpoints":[{"fingerprint":{"first_lines_checksum":35318952718615088},"position":807,"modified":"2023-01-13T11:06:57.446020700Z"},{"fingerprint":{"first_lines_checksum":107399312636167417},"position":576,"modified":"2023-01-13T11:02:32.317490300Z"},{"fingerprint":{"first_lines_checksum":16198873184416428945},"position":2459,"modified":"2023-01

-13T11:02:36.039260400Z"}]}

Wondering if its similar to

https://github.com/actix/actix-web/issues/1560

https://github.com/bluejekyll/trust-dns

as nslookup works fine on both machines but running the command with -vvv gives me

2023-01-13T12:22:14.725308Z WARN http: vector::internal_events::http_client: HTTP error. error=error trying to connect: dns error: No such host is known. (os error 11001) error_type="request_failed" stage="processing" internal_log_rate_limit=true

I was trying to build vector on windows but got stuck bouncing between one exception and the next (openssl building fun then jemalloc build blew up)

I was trying to build vector on windows but got stuck bouncing between one exception and the next (openssl building fun then jemalloc build blew up)

For this note, make sure you are setting --no-default-features --features default-msvc if running cargo build. The Windows build shouldn't be using jemalloc. Hopefully that'll help with some of the build issues.