fasthttp

fasthttp copied to clipboard

fasthttp copied to clipboard

Blocking BodyStreamWriter

Hi,

I've come across this issue when dealing with large files and slow clients. When using the example code below:

case "/test":

{

ctx.SetBodyStreamWriter(func(w *bufio.Writer) {

for i := 0; i < 3; i++ {

mb := make([]byte, 10_000_000)

rand.Read(mb)

written, err := w.Write(mb)

w.Flush()

log.Printf("%d written %s", written, err)

}

})

}

(ignore the fact i'm allocating memory inside the loop, in production this is done using a pool, this is just purely to illustrate the problem)

In the example above if you download it with:

curl --limit 100K http://192.168.1.101:9876/test --output deleteme

all chunks get read into memory at once and then written to the user at the speed of 100K. This is fine for smaller files but if i increase the loop or concurrency then things start to blow up. I'd want to loop and read 10MB into memory, write it (however long it takes), read another 10MB, write, etc. as opposed to reading 30MB into memory all at once and writing it as slow as they dictate.

I've messed around with writing to the underlying Conn object, which does seem to block as you'd expect, but this doesn't really work well.

I'm just wondering what i'm doing wrong here, or if there is a way to block until each set of bytes has been truly written to the the user/client having to manually throttle requests by adding a sleep between loop iterations. Any help is massively appreciated as i've been banging my head against the wall all day trying to think of a proper way around this. Thanks!

Just doing a bit more testing on this... if i split the initial 10MB into 1000 smaller 10K chunks, loop through and call flush after writing each (now 10K) chunk, i get the behaviour expected, in that the next 10MB chunk does not continue until the first is written

That is exactly how it should work. Calling Flush will flush all remaining data in the buffer to the underlying socket. It should also work if you write 10MB into the buffer before calling Flush. The buffer itself is only 4KB big so anything more you write to it will already be flushed to the socket.

Have you tried just reading 4kb of your file at a time and writing that without calling flush. And then only call flush at the end?

Hi Erik,

Thanks for the reply. Splitting the larger chunk into smaller chunks does indeed fix the issue. I've created a gist to illustrate some of the problems that i'm facing now with this. https://gist.github.com/Jon-Murray/37a05ec4091aad55d396d98371f0ac0b

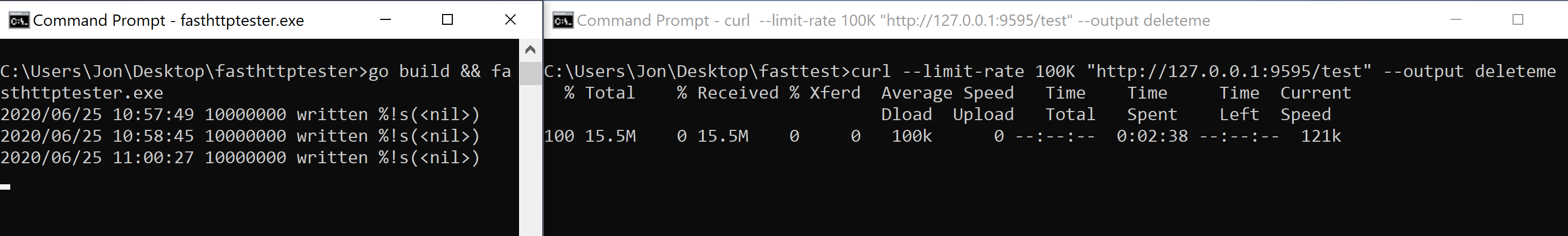

The first issue (which is what the thread is about) is the behaviour of the write when dealing with the larger files. All of these are attempting to download a few hundred MB (i never get past 3-4 because i don't bother waiting) over a connection limited to 100KB/s The results i've showed here:

(see the timestamps on the left) which is showing that 50MB has been read into memory, whereas only 1.5MB have been written. Regardless of where i move the flush to, this still happens. IF i change it so i further split the 10MB file into 4K chunks and write each 4k chunk, then this behaves as you'd expect (in that only 1 10MB chunk is read into memory at a time) which can be seen here:

(notice the ~1min timestamp difference on the left)

This is compared to the stlib implementation which just writes the entire 10MB chunk at once and behaves as you'd expect (blocking) which can be seen here:

The first issue, I guess, is that this behaviour doesn't seem to be documented anywhere. Every example i've found just seems to be "fire and forget" in that you can write arbitrary chunks into the response stream and they will just work, which doesn't seem to be the case (ie. it fills 50MB of memory when only 1.5MB is written). The workaround by writing smaller chunks isn't ideal, but it does work.

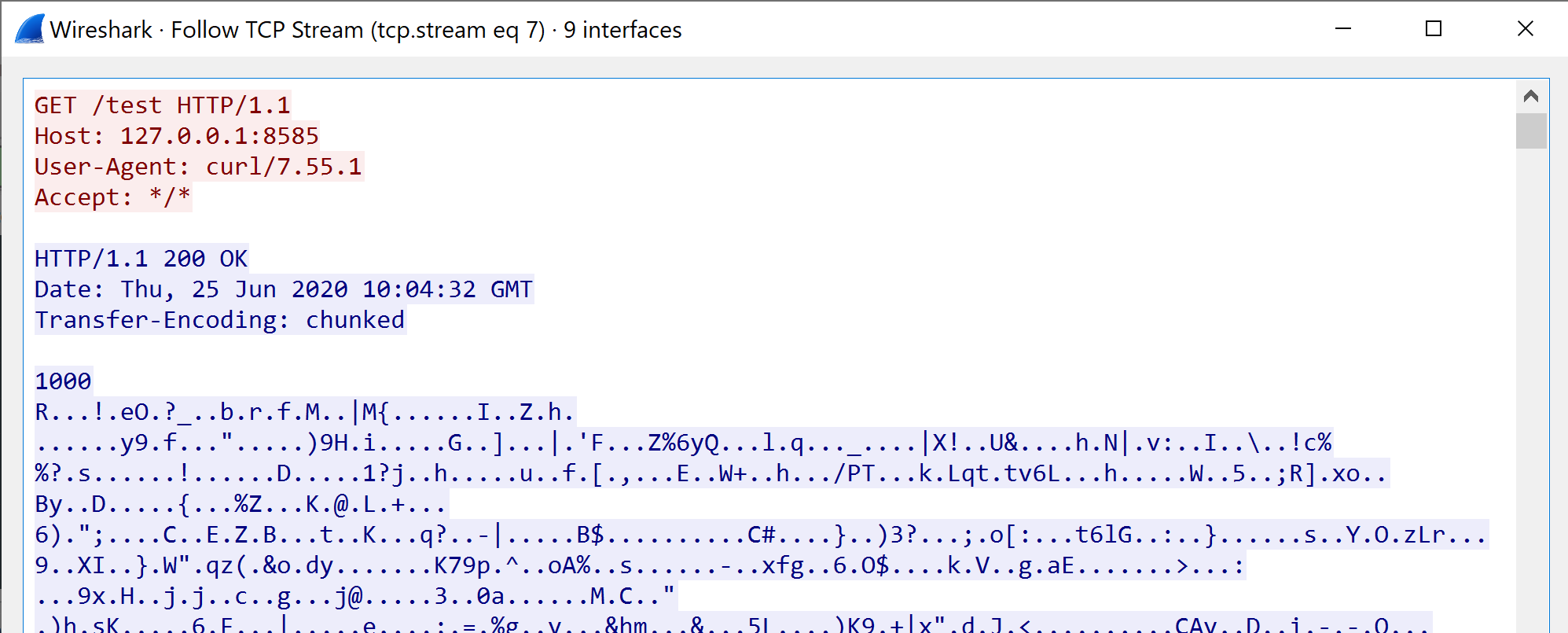

The second issue that i'm facing is that the writing out of chunks seems to only be 4096 bytes, no matter what i do. In the gist you can see i've set the WriteBufferSize to 10MB. I'd expect this to write 10MB to each chunked response. However, if i write the entire 10MB, only 4096 (hex: 1000) bytes are written to each chunk.

This can be seen here:

(fasthttp small and big):

vs the stdlib implementation which writes 10MB (hex: 989680) to each response chunk:

(again ~1min between writes)

This seems to be a bit of a problem. If a user were to download a 100GB file (chunked, of course) - which, unfortunately, in my situation isn't unusual - then this would be 100_000_000_000 / 4096 = 24414063 chunks... each chunk being 8 bytes ("\r\n, 1000, \r\n) this adds up to an extra 200MB of extra data downloaded vs the stdlib implementation of writing 10MB chunks at once (100_000_000_000 / 10_000_000) = 10_000 chunks written. Each chunk being 10 bytes ("\r\n", 989680, "\r\n"), this adds up to only an extra 100KB. This is not to mention the overhead (albeit tiny) of having to process/create the response stream headers (ie. calculating hex number for bytes of n length) for 100GB's worth of 4k chunks in fasthttp.

I guess my question is this:

I don't mind writing 4k chunks at once, but is there any way I could only actually process or write the chunked encoding header when I am ready? Alternatively, is there a way to have a setting which would allow me to fill a buffer of an arbitrary size (ie. 10MB) before a response is written? I thought that setting WriteBufferSize would do this, but it apparently does not. I hope that my wall of text illustrates the issue i'm having. It will most likely come down to a case of RTFM on my side but i have looked around a lot and am not able to find an answer to my question or any examples.

Thanks again!

I have done some more digging in the code (I never looked at this part much before) and it's not optimal at all...

When you call ctx.SetBodyStreamWriter() a goroutine is started which executes your function and allows it to write into a bufio.Writer. This bufio.Writer is allocated with the default size which is 4096 bytes. Writes bigger than this size bypass the buffer and writes smaller are buffered until they reach this threshold. We could increase this but that would only help in some cases. A better solution might be to allow the caller of ctx.SetBodyStreamWriter() to specify the buffer size.

The bufio.Writer is being flushed to a fasthttputil.PipeConns. This copies the contents of the bufio.Writer into a []byte to pass to the reading end.

The reading end is writeBodyChunked which reads the contents from the fasthttputil.PipeConns into a fixed []byte of again 4096. This is the value that makes all chunks 4096 bytes.

This value should be increased but I'm not sure what to. One options would be Server.WriteBufferSize. But smaller or bigger than that would also make sense. Maybe this should be a configurable option? (with a default to 4096 so the default behavior stays the same as it is now).

One thing that makes it difficult to implement this is that the sync.Pool that contains these buffers is shared between many components. I guess we could separate this pool and allocate it per Server for this use case (and leave it like it is for copyZeroAlloc mainly).

Another thing is that this whole process means that all the data you write in ctx.SetBodyStreamWriter() is first copied into a []byte by fasthttputil.PipeConns. Then again copied into a []byte by writeBodyChunked and then again copied into a bufio.Writer which then in turn writes it into the socket.

This is a lot of copies and not optimal at all.

In theory you would want each Write of the bufio.Writer in ctx.SetBodyStreamWriter() to have a chunk header written then just a copy of the data and a chunk footer added. Without any of the internal buffers. This is in theory possible if we redesign fasthttputil.PipeConns to not copy the data but instead just pass the written slice to writeBodyChunked. But these are some big changes that I'm afraid I currently don't have time to work on 😞

When you work with large dynamic req/res bodies you immediately stumble against high memory usage and slow output using FastHTTP, this is unfortunate and I think this problem should be solved.

@erikdubbelboer, do you have time to review a POC @ReneWerner87 and I are working on and provide some directions/feedback?

Sure, where can I find the code?

It's not quite finished yet, we stumble across some problems. We will do some finishing touches, and make it work.

Hi, any updates on this? It should be possible to set the output buffer size from the handler. I can take care of preparing a pull request to implement this feature

What would your suggestion be?

I think the flush mechanism implemented for the Writer should simply represent the final socket buffer. I don't know yet the implementation details, but you seemed to give a nice set of code-links between the various pieces. Is anyone already working on this?

My current hack is to fill a buffer with null chars, to reach a multiple of 2048 based on the message I want to be sent (this just works "ok" for my use case, that is streaming realtime data.)

I don't think anyone is working on this. I certainly don't have time at the moment.

Doesn't Flush already work properly?

From my experiments with netcat, nothing is sent until the buffer threshold is reached. Even if you flush the buffer.