fastapi-pagination


Wrong pagination items on various pages for a query with out-of-order items

I am getting the wrong pagination items on various pages, which is a bit concerning.

Here is the query:

SELECT comments.id AS comments_id, comments.cid AS comments_cid, comments.text AS comments_text, comments.time AS comments_time, comments.is_owner AS comments_is_owner, comments.video_id AS comments_video_id, comments.analysis_id AS comments_analysis_id, comments.author AS comments_author, comments.channel AS comments_channel, comments.votes AS comments_votes, comments.reply_count AS comments_reply_count, comments.sentiment AS comments_sentiment, comments.has_sentiment_negative AS comments_has_sentiment_negative, comments.has_sentiment_positive AS comments_has_sentiment_positive, comments.has_sentiment_neutral AS comments_has_sentiment_neutral, comments.is_reply AS comments_is_reply, comments.is_word_spammer AS comments_is_word_spammer, comments.is_my_spammer AS comments_is_my_spammer, comments.is_most_voted_duplicate AS comments_is_most_voted_duplicate, comments.duplicate_count AS comments_duplicate_count, comments.copy_count AS comments_copy_count, comments.is_copy AS comments_is_copy, comments.is_bot_author AS comments_is_bot_author, comments.has_fuzzy_duplicates AS comments_has_fuzzy_duplicates, comments.fuzzy_duplicate_count AS comments_fuzzy_duplicate_count, comments.is_bot AS comments_is_bot, comments.is_human AS comments_is_human, comments.external_phone_numbers AS comments_external_phone_numbers, comments.keywords AS comments_keywords, comments.phrases AS comments_phrases, comments.custom_keywords AS comments_custom_keywords, comments.custom_phrases AS comments_custom_phrases, comments.external_urls AS comments_external_urls, comments.has_external_phone_numbers AS comments_has_external_phone_numbers, comments.text_external_phone_numbers AS comments_text_external_phone_numbers, comments.unicode_characters AS comments_unicode_characters, comments.unicode_alphabet AS comments_unicode_alphabet, comments.unicode_emojis AS comments_unicode_emojis, comments.unicode_digits AS comments_unicode_digits, comments.has_keywords AS comments_has_keywords, 
comments.has_phrases AS comments_has_phrases, comments.has_unicode_alphabet AS comments_has_unicode_alphabet, comments.has_unicode_emojis AS comments_has_unicode_emojis, comments.has_unicode_characters AS comments_has_unicode_characters, comments.has_unicode_too_many AS comments_has_unicode_too_many, comments.has_unicode_digits AS comments_has_unicode_digits, comments.has_custom_keywords AS comments_has_custom_keywords, comments.has_custom_phrases AS comments_has_custom_phrases, comments.has_unicode_non_english AS comments_has_unicode_non_english, comments.has_external_urls AS comments_has_external_urls, comments.has_duplicates AS comments_has_duplicates, comments.has_copies AS comments_has_copies, comments.created_at AS comments_created_at

FROM comments

WHERE comments.analysis_id = %(analysis_id_1)s ORDER BY comments.votes DESC

Here is the function:

@router.get("/{analysis_id}/comments", status_code=status.HTTP_200_OK)
async def read_comments(
    analysis_id: str,
    params: Params = Depends(),
    sort: str | None = None,
    filters: str | None = None,
    search_query: str | None = None,
    current_user: schemas.User = Depends(oauth2.get_current_active_user),
    db: Session = Depends(database.get_db),
    api_key: APIKey = Depends(apikey.get_api_key),
):
    # Sort by votes unless "recent" is requested explicitly
    # (equivalent to the original `sort == 'top' or sort != 'recent'`)
    if sort != "recent":
        query = db.query(models.Comment).order_by(models.Comment.votes.desc())
    else:
        query = db.query(models.Comment).order_by(models.Comment.created_at.desc())

    return paginate(query, params)

The query can include various filters and is dynamic.

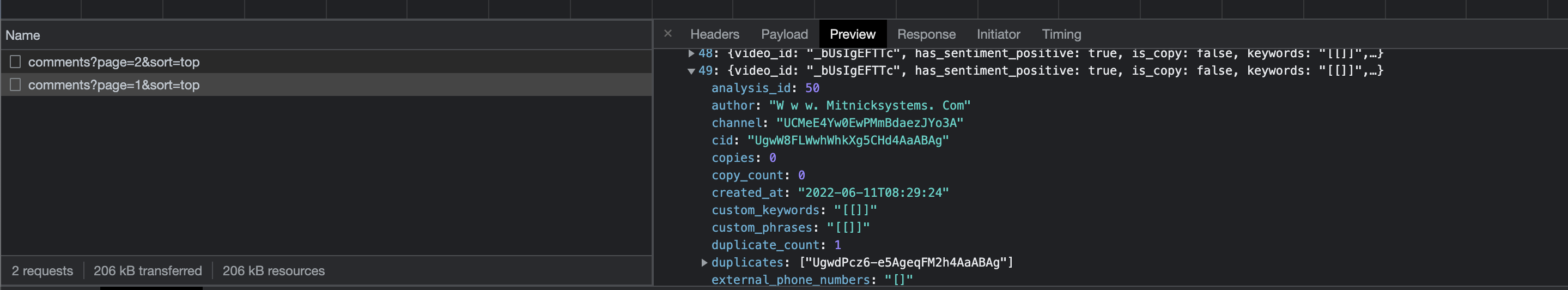

Items on some pages also appear on other pages. Here is one item on page 5:

and then the same id/item is on page 2:

The IDs are out of order because the rows are sorted by votes and not by the id field. When sorting by the id field, there is no issue.

So to reproduce this, use order_by on a field other than id.
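One plausible reading of this symptom, offered as an assumption rather than a confirmed diagnosis: when many rows share the same `votes` value, `ORDER BY votes DESC` alone does not fix the relative order of the tied rows, so each LIMIT/OFFSET query may break ties differently. The sketch below simulates that in plain Python. `fetch_page` and the two scan orders are hypothetical stand-ins for two separate database queries, not fastapi-pagination code.

```python
from typing import Dict, List

Row = Dict[str, int]


def fetch_page(scan_order: List[Row], page: int, size: int) -> List[int]:
    """Sort by votes DESC only, then slice like LIMIT/OFFSET.

    Python's sort is stable, so tied rows keep the order in which they were
    scanned. That mimics a database that breaks ties by scan order.
    """
    ordered = sorted(scan_order, key=lambda row: -row["votes"])
    start = (page - 1) * size
    return [row["id"] for row in ordered[start:start + size]]


# Twenty rows that all have the same number of votes (every row ties).
rows = [{"id": i, "votes": 1} for i in range(1, 21)]

# Two requests may see the tied rows in different scan orders.
page_1 = fetch_page(rows, page=1, size=10)
page_2 = fetch_page(list(reversed(rows)), page=2, size=10)

# Ids that show up on both pages.
overlap = sorted(set(page_1) & set(page_2))
print(overlap)
```

Both orderings are valid answers to "sort by votes descending", yet the two pages share ids, which matches the kind of overlap described here.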

@ikkysleepy Both screenshots look identical, can you update with the one for page 5?

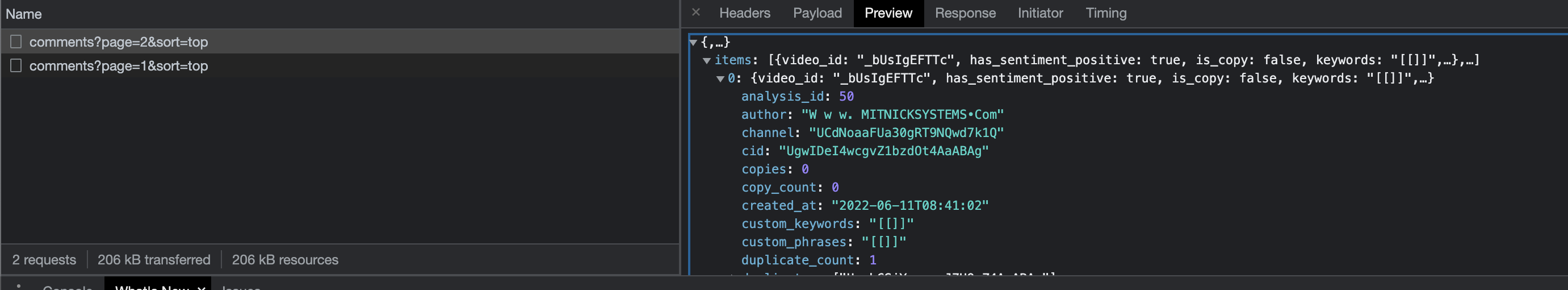

Here is a new query where the last item on page 1 is the same as the first item on page 2.

Hmmm I'm not sure, looks like created_at is different, as well as author. It's a bit hard to see what's going on with screenshots, do you think you could write a small reproducible test case? Or at least provide the complete json data being returned from each of the api calls?

Long story short, the issue is that the last item on page 1 has the same id as the first item on page 2:

This is not always the case, though. The example above had different ids, so I found one where the id is the same.

Hi @ikkysleepy,

New version 0.10.0 has been released. Could you please check if this issue is still present?

The issue is still present.

See the last CID on the first page: UgwLibS8A51d9vXAPkl4AaABAg

That record is now on the 2nd page as well. This happens on the 2nd page to 3rd page as well, but not after that.
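Since screenshots make this hard to verify, a small helper can report which keys occur on more than one page once the JSON responses are collected. This is only a sketch: `find_repeated_items` is a hypothetical name, and the page contents below are made up except for the CID quoted above.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence


def find_repeated_items(pages: Sequence[Sequence[Hashable]]) -> Dict[Hashable, List[int]]:
    """Map each key that occurs on more than one page to its 1-based page numbers."""
    seen = defaultdict(list)
    for page_number, keys in enumerate(pages, start=1):
        for key in keys:
            if page_number not in seen[key]:
                seen[key].append(page_number)
    return {key: numbers for key, numbers in seen.items() if len(numbers) > 1}


# Hypothetical page contents; only the repeated CID is taken from the report.
pages = [
    ["cid-a", "cid-b", "UgwLibS8A51d9vXAPkl4AaABAg"],
    ["UgwLibS8A51d9vXAPkl4AaABAg", "cid-c", "cid-d"],
    ["cid-e", "cid-f", "cid-g"],
]
repeated = find_repeated_items(pages)
print(repeated)
```

In practice each inner list would be the `cid` (or `id`) values taken from the `items` array of one paginated response.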

@ikkysleepy

I have created a minimal app with pagination and sorting:

from contextlib import contextmanager
from typing import Any, Iterator

import uvicorn
from faker import Faker
from fastapi import Depends, FastAPI
from pydantic import BaseModel
from sqlalchemy import Column, Integer, String, create_engine
from sqlalchemy.ext.declarative import declarative_base
from sqlalchemy.orm import Session, sessionmaker

from fastapi_pagination import Page, add_pagination
from fastapi_pagination.ext.sqlalchemy import paginate

faker = Faker()

engine = create_engine("sqlite:///.db")
SessionLocal = sessionmaker(bind=engine)

Base = declarative_base(bind=engine)


class User(Base):
    __tablename__ = "users"

    id = Column(Integer, primary_key=True)
    name = Column(String, nullable=False)


Base.metadata.drop_all()
Base.metadata.create_all()


class UserOut(BaseModel):
    id: int
    name: str

    class Config:
        orm_mode = True


app = FastAPI()


def get_db() -> Iterator[Session]:
    db = SessionLocal()
    try:
        yield db
    finally:
        db.commit()
        db.close()


@app.on_event("startup")
def on_startup() -> None:
    with contextmanager(get_db)() as db:
        db.bulk_insert_mappings(User, [{"id": id_, "name": faker.name()} for id_ in range(1, 101)])


@app.get("/users", response_model=Page[UserOut])
def get_users(db: Session = Depends(get_db)) -> Any:
    return paginate(db.query(User).order_by(User.id.desc()))


add_pagination(app)

if __name__ == "__main__":
    uvicorn.run(app)

Sorting is working as expected.

First page:

curl -X 'GET' \

'http://127.0.0.1:8000/users?page=1&size=10' \

-H 'accept: application/json'

{

"items": [

{

"id": 100,

"name": "Veronica Ramirez"

},

{

"id": 99,

"name": "Heidi Pacheco"

},

{

"id": 98,

"name": "Michael Weber"

},

{

"id": 97,

"name": "Mrs. Karen Miller"

},

{

"id": 96,

"name": "Emily Coleman"

},

{

"id": 95,

"name": "Rachael Washington"

},

{

"id": 94,

"name": "Stephanie Weaver"

},

{

"id": 93,

"name": "Thomas Nelson"

},

{

"id": 92,

"name": "Miss Susan Ball"

},

{

"id": 91,

"name": "Robert Smith"

}

],

"total": 100,

"page": 1,

"size": 10

}

Second page:

curl -X 'GET' \

'http://127.0.0.1:8000/users?page=2&size=10' \

-H 'accept: application/json'

{

"items": [

{

"id": 90,

"name": "Ashley Rice"

},

{

"id": 89,

"name": "Michael Trevino"

},

{

"id": 88,

"name": "Carol West"

},

{

"id": 87,

"name": "Andrew Medina"

},

{

"id": 86,

"name": "Vanessa Cruz"

},

{

"id": 85,

"name": "Danny Heath"

},

{

"id": 84,

"name": "James Tate"

},

{

"id": 83,

"name": "Troy Stewart II"

},

{

"id": 82,

"name": "Dawn Simpson"

},

{

"id": 81,

"name": "Matthew Walsh"

}

],

"total": 100,

"page": 2,

"size": 10

}

Maybe you have a complex join or relationship loading inside your query?

To keep the items in the correct order, you should use the selectinload strategy instead of joinedload.
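As a rough illustration of why the loading strategy can matter for pagination, here is a plain-SQL sketch using the standard-library `sqlite3` module. It is not a claim about the SQL that SQLAlchemy or fastapi-pagination actually emit, and the `authors` and `posts` tables are made up. A one-to-many JOIN yields one row per child, so a LIMIT on the joined result counts child rows rather than parents. Paging the parents first and fetching their children with a second `IN` query, which is the general idea behind `selectinload`, keeps the page size in terms of parents.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE posts (
        id INTEGER PRIMARY KEY,
        author_id INTEGER NOT NULL REFERENCES authors (id)
    );
    """
)
conn.executemany("INSERT INTO authors VALUES (?, ?)", [(1, "a"), (2, "b"), (3, "c")])
# Author 1 has three posts; authors 2 and 3 have one post each.
conn.executemany("INSERT INTO posts VALUES (?, ?)", [(1, 1), (2, 1), (3, 1), (4, 2), (5, 3)])

# LIMIT applied to the joined rows: all three rows belong to author 1.
joined = conn.execute(
    """
    SELECT authors.id FROM authors
    JOIN posts ON posts.author_id = authors.id
    ORDER BY authors.id, posts.id
    LIMIT 3
    """
).fetchall()
authors_via_join = sorted({author_id for (author_id,) in joined})

# Two-step loading: page the parents first, then fetch their children with IN.
parent_ids = [row[0] for row in conn.execute("SELECT id FROM authors ORDER BY id LIMIT 3")]
placeholders = ", ".join("?" for _ in parent_ids)
children = conn.execute(
    f"SELECT author_id, id FROM posts WHERE author_id IN ({placeholders}) ORDER BY id",
    parent_ids,
).fetchall()

print(authors_via_join)
print(parent_ids)
```

With the JOIN, a "page of three" contains a single author; with the two-step approach it contains three authors plus all of their posts.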

The issue is with how PostgreSQL processes the query. There is no complex join or relationship. I was able to reproduce the issue if and only if the database is PostgreSQL and there are around 500 records, because of the randomness. The raw query in PostgreSQL seems fine, so maybe its records are handled a little differently from SQLite records?

So you need to set up a PostgreSQL server and fill in the user, password, and database name. Here is the updated code to test:

import random

from contextlib import contextmanager

from typing import Any, Iterator

import uvicorn

from faker import Faker

from fastapi import Depends, FastAPI

from pydantic import BaseModel

from sqlalchemy import Column, Integer, String, create_engine

from sqlalchemy.ext.declarative import declarative_base

from sqlalchemy.orm import Session, sessionmaker

from sqlalchemy import BigInteger, Float, ForeignKey

from sqlalchemy.sql.sqltypes import TIMESTAMP, Boolean

from fastapi_pagination import Page, add_pagination

from fastapi_pagination.ext.sqlalchemy import paginate

faker = Faker()

from .config import settings

username = "catalina"

password = "SECRETE PASSWORD"

database_url = f'postgresql://{username}:{password}@127.0.0.1:5432/DATABASE_NAME_GOES_HERE'

pool_timeout = settings.pool_timeout

pool_size = settings.pool_size

engine = create_engine(database_url, pool_size=pool_size, pool_timeout=pool_timeout, echo=False)

# engine = create_engine("sqlite:///.db")

SessionLocal = sessionmaker(bind=engine)

Base = declarative_base(bind=engine)

class Comment(Base):

__tablename__ = 'comments_test'

id = Column(BigInteger, primary_key=True, nullable=False)

cid = Column(String(), nullable=True)

text = Column(String(), nullable=True)

thumbnail = Column(String(), nullable=True)

moderation_status = Column(String(), nullable=True)

time = Column(String(), nullable=True)

is_owner = Column(Boolean(), nullable=True)

video_id = Column(String(), nullable=True)

analysis_id = Column(Integer(), nullable=True)

author = Column(String(), nullable=True)

channel = Column(String(), nullable=True)

votes = Column(Integer(), nullable=True)

reply_count = Column(BigInteger(), nullable=True)

sentiment = Column(Float(), nullable=True)

has_sentiment_negative = Column(Boolean(), nullable=True)

has_sentiment_positive = Column(Boolean(), nullable=True)

has_sentiment_neutral = Column(Boolean(), nullable=True)

is_reply = Column(Boolean(), nullable=True)

is_word_spammer = Column(Boolean(), nullable=True)

is_reported_spam = Column(Boolean(), nullable=True)

is_most_voted_duplicate = Column(Boolean(), nullable=True)

duplicate_count = Column(BigInteger(), nullable=True)

copy_count = Column(BigInteger(), nullable=True)

is_copy = Column(Boolean(), nullable=True)

is_bot_author = Column(Boolean(), nullable=True)

has_fuzzy_duplicates = Column(Boolean(), nullable=True)

fuzzy_duplicate_count = Column(BigInteger(), nullable=True)

is_bot = Column(Boolean(), nullable=True)

is_human = Column(Boolean(), nullable=True)

external_phone_numbers = Column(String(), nullable=True)

keywords = Column(String(), nullable=True)

phrases = Column(String(), nullable=True)

custom_keywords = Column(String(), nullable=True)

custom_phrases = Column(String(), nullable=True)

external_urls = Column(String(), nullable=True)

has_external_phone_numbers = Column(Boolean(), nullable=True)

text_external_phone_numbers = Column(String(), nullable=True)

unicode_characters = Column(String(), nullable=True)

unicode_alphabet = Column(String(), nullable=True)

unicode_emojis = Column(String(), nullable=True)

unicode_digits = Column(String(), nullable=True)

hashtags = Column(String(), nullable=True)

has_keywords = Column(Boolean(), nullable=True)

has_phrases = Column(Boolean(), nullable=True)

has_unicode_alphabet = Column(Boolean(), nullable=True)

has_unicode_emojis = Column(Boolean(), nullable=True)

has_unicode_characters = Column(Boolean(), nullable=True)

has_unicode_too_many = Column(Boolean(), nullable=True)

has_unicode_digits = Column(Boolean(), nullable=True)

has_custom_keywords = Column(Boolean(), nullable=True)

has_custom_phrases = Column(Boolean(), nullable=True)

has_unicode_non_english = Column(Boolean(), nullable=True)

has_external_urls = Column(Boolean(), nullable=True)

has_duplicates = Column(Boolean(), nullable=True)

has_copies = Column(Boolean(), nullable=True)

is_rejected = Column(Boolean(), nullable=True)

has_hashtags = Column(Boolean(), nullable=True)

is_all_caps = Column(Boolean(), nullable=True)

is_only_emojis = Column(Boolean(), nullable=True)

is_long_text = Column(Boolean(), nullable=True)

is_fan_boy = Column(Boolean(), nullable=True)

is_spam_boy = Column(Boolean(), nullable=True)

has_mentions = Column(Boolean(), nullable=True)

has_time_mentions = Column(Boolean(), nullable=True)

lang = Column(String(), nullable=True)

toxicity = Column(Float(), nullable=True)

fuzzy_duplicates = Column(String, nullable=True)

duplicates = Column(String, nullable=True)

copies = Column(String, nullable=True)

mentions = Column(String, nullable=True)

updated_at = Column(TIMESTAMP(timezone=True), nullable=True)

created_at = Column(TIMESTAMP(timezone=True), nullable=True)

def __getitem__(self, field):

if field in self.__dict__:

return self.__dict__[field]

Base.metadata.drop_all()

Base.metadata.create_all()

class CommentOut(BaseModel):

id: int

cid: str| None = None

text: str| None = None

thumbnail: str| None = None

moderation_status: str | None = None

time: str | None = None

is_owner: bool | None = None

video_id: str| None = None

analysis_id: int | None = None

author: str| None = None

channel: str| None = None

votes: int| None = None

reply_count: int| None = None

sentiment: float| None = None

has_sentiment_negative: bool | None = None

has_sentiment_positive: bool | None = None

has_sentiment_neutral: bool | None = None

is_reply: bool | None = None

is_word_spammer: bool | None = None

is_reported_spam: bool | None = None

is_most_voted_duplicate: bool | None = None

duplicate_count: int| None = None

copy_count: int| None = None

is_copy: bool | None = None

is_bot_author: bool | None = None

has_fuzzy_duplicates: bool | None = None

fuzzy_duplicate_count: int| None = None

is_bot: bool | None = None

is_human: bool | None = None

external_phone_numbers: str | None = None

keywords: str | None = None

phrases: str | None = None

custom_keywords: str | None = None

custom_phrases: str | None = None

external_urls: str | None = None

has_external_phone_numbers: bool | None = None

text_external_phone_numbers: str | None = None

unicode_characters: str | None = None

unicode_alphabet: str | None = None

unicode_emojis: str | None = None

unicode_digits: str | None = None

hashtags: str | None = None

has_keywords: bool | None = None

has_phrases: bool | None = None

has_unicode_alphabet: bool | None = None

has_unicode_emojis: bool | None = None

has_unicode_characters: bool | None = None

has_unicode_too_many: bool | None = None

has_unicode_digits: bool | None = None

has_custom_keywords: bool | None = None

has_custom_phrases: bool | None = None

has_unicode_non_english: bool | None = None

has_external_urls: bool | None = None

has_duplicates: bool | None = None

has_copies: bool | None = None

is_rejected: bool | None = None

has_hashtags: bool | None = None

is_all_caps: bool | None = None

is_only_emojis: bool | None = None

is_long_text: bool | None = None

is_fan_boy: bool | None = None

is_spam_boy: bool | None = None

has_mentions: bool | None = None

has_time_mentions: bool | None = None

lang: str | None = None

toxicity: float | None = None

class Config:

orm_mode = True

app = FastAPI()

def get_db() -> Iterator[Session]:
    db = SessionLocal()
    try:
        yield db
    finally:
        db.commit()
        db.close()


@app.on_event("startup")
def on_startup() -> None:
    votes = 0
    with contextmanager(get_db)() as db:
        votes += 1
        db.bulk_insert_mappings(
            Comment,
            [
                {"id": id_, "votes": votes, "analysis_id": 673, "text": faker.name(), "is_human": random.getrandbits(1)}
                for id_ in range(1, 501)
            ],
        )


@app.get("/comments", response_model=Page[CommentOut])
def get_comments(db: Session = Depends(get_db)) -> Any:
    query = (
        db.query(Comment)
        .order_by(Comment.votes.desc())
        .filter(Comment.is_human.is_(True))
        .filter(Comment.analysis_id == 673)
    )
    return paginate(query)


add_pagination(app)

if __name__ == "__main__":
    uvicorn.run(app)

I was able to go back and forth between an SQLite database and a PostgreSQL table and see that the issue only happens when using the PostgreSQL table, and only when the number of records is over a certain limit.
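One detail of the seeding code above may be relevant: `votes += 1` runs once, before the list comprehension is evaluated, so every inserted row gets `votes == 1` and the `ORDER BY votes DESC` key is identical for all 500 rows. The snippet below reproduces just that part without a database and contrasts it with a per-row counter. Using `itertools.count` is one possible way to get distinct values, chosen here only for illustration.

```python
from itertools import count

# Seeding as written above: the increment happens once, outside the comprehension.
votes = 0
votes += 1
same_votes = [{"id": id_, "votes": votes} for id_ in range(1, 501)]

# Per-row counter: each row gets its own vote value.
distinct_votes = [
    {"id": id_, "votes": votes_}
    for id_, votes_ in zip(range(1, 501), count(1))
]

# Number of distinct vote values in each data set.
same_count = len({row["votes"] for row in same_votes})
distinct_count = len({row["votes"] for row in distinct_votes})
print(same_count, distinct_count)
```

With a single distinct sort key, the database has no basis for ordering the rows relative to each other, which fits the observation that the overlap depends on the database engine and the number of rows.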

@ikkysleepy Maybe the issue occurs when rows have the same value in the votes column. In that case you can add a secondary order by clause.

Could you please check whether the code below still has your issue:

from contextlib import contextmanager

from itertools import count

from typing import Any, Iterator, Optional

import uvicorn

from faker import Faker

from fastapi import Depends, FastAPI

from pydantic import BaseModel

from sqlalchemy import BigInteger, Float

from sqlalchemy import Column, Integer, String, create_engine

from sqlalchemy.ext.declarative import declarative_base

from sqlalchemy.orm import Session, sessionmaker

from sqlalchemy.sql.sqltypes import TIMESTAMP, Boolean

from fastapi_pagination import Page, add_pagination

from fastapi_pagination.ext.sqlalchemy import paginate

faker = Faker()

user = ''

password = ''

database_url = f"postgresql://{user}:{password}@127.0.0.1:5432/postgres"

engine = create_engine(database_url, echo=False)

SessionLocal = sessionmaker(bind=engine)

Base = declarative_base(bind=engine)

class Comment(Base):

__tablename__ = "comments_test"

id = Column(BigInteger, primary_key=True, nullable=False)

cid = Column(String(), nullable=True)

text = Column(String(), nullable=True)

thumbnail = Column(String(), nullable=True)

moderation_status = Column(String(), nullable=True)

time = Column(String(), nullable=True)

is_owner = Column(Boolean(), nullable=True)

video_id = Column(String(), nullable=True)

analysis_id = Column(Integer(), nullable=True)

author = Column(String(), nullable=True)

channel = Column(String(), nullable=True)

votes = Column(Integer(), nullable=True)

reply_count = Column(BigInteger(), nullable=True)

sentiment = Column(Float(), nullable=True)

has_sentiment_negative = Column(Boolean(), nullable=True)

has_sentiment_positive = Column(Boolean(), nullable=True)

has_sentiment_neutral = Column(Boolean(), nullable=True)

is_reply = Column(Boolean(), nullable=True)

is_word_spammer = Column(Boolean(), nullable=True)

is_reported_spam = Column(Boolean(), nullable=True)

is_most_voted_duplicate = Column(Boolean(), nullable=True)

duplicate_count = Column(BigInteger(), nullable=True)

copy_count = Column(BigInteger(), nullable=True)

is_copy = Column(Boolean(), nullable=True)

is_bot_author = Column(Boolean(), nullable=True)

has_fuzzy_duplicates = Column(Boolean(), nullable=True)

fuzzy_duplicate_count = Column(BigInteger(), nullable=True)

is_bot = Column(Boolean(), nullable=True)

is_human = Column(Boolean(), nullable=True)

external_phone_numbers = Column(String(), nullable=True)

keywords = Column(String(), nullable=True)

phrases = Column(String(), nullable=True)

custom_keywords = Column(String(), nullable=True)

custom_phrases = Column(String(), nullable=True)

external_urls = Column(String(), nullable=True)

has_external_phone_numbers = Column(Boolean(), nullable=True)

text_external_phone_numbers = Column(String(), nullable=True)

unicode_characters = Column(String(), nullable=True)

unicode_alphabet = Column(String(), nullable=True)

unicode_emojis = Column(String(), nullable=True)

unicode_digits = Column(String(), nullable=True)

hashtags = Column(String(), nullable=True)

has_keywords = Column(Boolean(), nullable=True)

has_phrases = Column(Boolean(), nullable=True)

has_unicode_alphabet = Column(Boolean(), nullable=True)

has_unicode_emojis = Column(Boolean(), nullable=True)

has_unicode_characters = Column(Boolean(), nullable=True)

has_unicode_too_many = Column(Boolean(), nullable=True)

has_unicode_digits = Column(Boolean(), nullable=True)

has_custom_keywords = Column(Boolean(), nullable=True)

has_custom_phrases = Column(Boolean(), nullable=True)

has_unicode_non_english = Column(Boolean(), nullable=True)

has_external_urls = Column(Boolean(), nullable=True)

has_duplicates = Column(Boolean(), nullable=True)

has_copies = Column(Boolean(), nullable=True)

is_rejected = Column(Boolean(), nullable=True)

has_hashtags = Column(Boolean(), nullable=True)

is_all_caps = Column(Boolean(), nullable=True)

is_only_emojis = Column(Boolean(), nullable=True)

is_long_text = Column(Boolean(), nullable=True)

is_fan_boy = Column(Boolean(), nullable=True)

is_spam_boy = Column(Boolean(), nullable=True)

has_mentions = Column(Boolean(), nullable=True)

has_time_mentions = Column(Boolean(), nullable=True)

lang = Column(String(), nullable=True)

toxicity = Column(Float(), nullable=True)

fuzzy_duplicates = Column(String, nullable=True)

duplicates = Column(String, nullable=True)

copies = Column(String, nullable=True)

mentions = Column(String, nullable=True)

updated_at = Column(TIMESTAMP(timezone=True), nullable=True)

created_at = Column(TIMESTAMP(timezone=True), nullable=True)

def __getitem__(self, field):

if field in self.__dict__:

return self.__dict__[field]

Base.metadata.drop_all()

Base.metadata.create_all()

class CommentOut(BaseModel):

id: int

cid: Optional[str] = None

text: Optional[str] = None

thumbnail: Optional[str] = None

moderation_status: Optional[str] = None

time: Optional[str] = None

is_owner: Optional[bool] = None

video_id: Optional[str] = None

analysis_id: Optional[int] = None

author: Optional[str] = None

channel: Optional[str] = None

votes: Optional[int] = None

reply_count: Optional[int] = None

sentiment: Optional[float] = None

has_sentiment_negative: Optional[bool] = None

has_sentiment_positive: Optional[bool] = None

has_sentiment_neutral: Optional[bool] = None

is_reply: Optional[bool] = None

is_word_spammer: Optional[bool] = None

is_reported_spam: Optional[bool] = None

is_most_voted_duplicate: Optional[bool] = None

duplicate_count: Optional[int] = None

copy_count: Optional[int] = None

is_copy: Optional[bool] = None

is_bot_author: Optional[bool] = None

has_fuzzy_duplicates: Optional[bool] = None

fuzzy_duplicate_count: Optional[int] = None

is_bot: Optional[bool] = None

is_human: Optional[bool] = None

external_phone_numbers: Optional[str] = None

keywords: Optional[str] = None

phrases: Optional[str] = None

custom_keywords: Optional[str] = None

custom_phrases: Optional[str] = None

external_urls: Optional[str] = None

has_external_phone_numbers: Optional[bool] = None

text_external_phone_numbers: Optional[str] = None

unicode_characters: Optional[str] = None

unicode_alphabet: Optional[str] = None

unicode_emojis: Optional[str] = None

unicode_digits: Optional[str] = None

hashtags: Optional[str] = None

has_keywords: Optional[bool] = None

has_phrases: Optional[bool] = None

has_unicode_alphabet: Optional[bool] = None

has_unicode_emojis: Optional[bool] = None

has_unicode_characters: Optional[bool] = None

has_unicode_too_many: Optional[bool] = None

has_unicode_digits: Optional[bool] = None

has_custom_keywords: Optional[bool] = None

has_custom_phrases: Optional[bool] = None

has_unicode_non_english: Optional[bool] = None

has_external_urls: Optional[bool] = None

has_duplicates: Optional[bool] = None

has_copies: Optional[bool] = None

is_rejected: Optional[bool] = None

has_hashtags: Optional[bool] = None

is_all_caps: Optional[bool] = None

is_only_emojis: Optional[bool] = None

is_long_text: Optional[bool] = None

is_fan_boy: Optional[bool] = None

is_spam_boy: Optional[bool] = None

has_mentions: Optional[bool] = None

has_time_mentions: Optional[bool] = None

lang: Optional[str] = None

toxicity: Optional[float] = None

class Config:

orm_mode = True

app = FastAPI()

def get_db() -> Iterator[Session]:
    db = SessionLocal()
    try:
        yield db
    finally:
        db.commit()
        db.close()


@app.on_event("startup")
def on_startup() -> None:
    with contextmanager(get_db)() as db:
        db.bulk_insert_mappings(
            Comment,
            [
                {
                    "id": id_,
                    "votes": votes,
                    "analysis_id": 673,
                    "text": faker.name(),
                    "is_human": faker.pybool(),
                }
                for id_, votes in zip(range(1, 501), count(1))
            ],
        )


@app.get("/comments", response_model=Page[CommentOut])
def get_comments(db: Session = Depends(get_db)) -> Any:
    query = (
        db.query(Comment)
        .order_by(Comment.votes.desc(), Comment.created_at.desc())
        .filter(Comment.is_human)
        .filter(Comment.analysis_id == 673)
    )
    return paginate(query)


add_pagination(app)

if __name__ == "__main__":
    uvicorn.run(app)
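To make the secondary order by idea concrete, here is a small sketch using the standard-library `sqlite3` module instead of PostgreSQL and SQLAlchemy. That substitution is an assumption made only to keep the example self-contained, and the `comments_demo` table is hypothetical. It uses the primary key as the tiebreaker, since a unique column gives every row a distinct sort position. A column such as `created_at` only works as a tiebreaker when its values are populated and distinct.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE comments_demo (id INTEGER PRIMARY KEY, votes INTEGER NOT NULL)")
# 50 rows but only 5 distinct vote values, so there are many ties on votes.
conn.executemany(
    "INSERT INTO comments_demo (id, votes) VALUES (?, ?)",
    [(id_, id_ % 5) for id_ in range(1, 51)],
)


def fetch_page(page: int, size: int) -> list:
    """Return the ids on one page, ordered by votes and then by the unique id."""
    offset = (page - 1) * size
    rows = conn.execute(
        "SELECT id FROM comments_demo ORDER BY votes DESC, id DESC LIMIT ? OFFSET ?",
        (size, offset),
    ).fetchall()
    return [row[0] for row in rows]


# Walk all five pages and check that every id appears exactly once.
all_ids = [id_ for page in range(1, 6) for id_ in fetch_page(page, size=10)]
print(len(all_ids), len(set(all_ids)))
```

In SQLAlchemy terms, the equivalent ordering for the model above would be `.order_by(Comment.votes.desc(), Comment.id.desc())`.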

That seemed to work. Yeah that is an issue. Thanks for the update. I think we can close the ticket.

Great, I am closing this issue.