TFLite, ONNX, CoreML, TensorRT Export

📚 This guide explains how to export a trained YOLOv5 🚀 model from PyTorch to ONNX and TorchScript formats. UPDATED 8 December 2022.

Before You Start

Clone repo and install requirements.txt in a Python>=3.7.0 environment, including PyTorch>=1.7. Models and datasets download automatically from the latest YOLOv5 release.

git clone https://github.com/ultralytics/yolov5 # clone

cd yolov5

pip install -r requirements.txt # install

For TensorRT export example (requires GPU) see our Colab notebook appendix section.

Formats

YOLOv5 inference is officially supported in 12 formats:

💡 ProTip: Export to ONNX or OpenVINO for up to 3x CPU speedup. See CPU Benchmarks.

💡 ProTip: Export to TensorRT for up to 5x GPU speedup. See GPU Benchmarks.

| Format | export.py --include | Model |
|---|---|---|
| PyTorch | - | yolov5s.pt |
| TorchScript | torchscript | yolov5s.torchscript |
| ONNX | onnx | yolov5s.onnx |
| OpenVINO | openvino | yolov5s_openvino_model/ |
| TensorRT | engine | yolov5s.engine |
| CoreML | coreml | yolov5s.mlmodel |
| TensorFlow SavedModel | saved_model | yolov5s_saved_model/ |
| TensorFlow GraphDef | pb | yolov5s.pb |
| TensorFlow Lite | tflite | yolov5s.tflite |
| TensorFlow Edge TPU | edgetpu | yolov5s_edgetpu.tflite |
| TensorFlow.js | tfjs | yolov5s_web_model/ |
| PaddlePaddle | paddle | yolov5s_paddle_model/ |
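The naming pattern in the table can be captured in a small lookup. This is only a sketch for illustration: `EXPORT_SUFFIXES` and `exported_path` are hypothetical names, not part of export.py, and the suffixes are simply copied from the table.

```python
from pathlib import Path

# Suffix appended to the weights stem for each --include value (copied from the table).
# Hypothetical helper for illustration only; export.py does not expose this mapping.
EXPORT_SUFFIXES = {
    "torchscript": ".torchscript",
    "onnx": ".onnx",
    "openvino": "_openvino_model",
    "engine": ".engine",
    "coreml": ".mlmodel",
    "saved_model": "_saved_model",
    "pb": ".pb",
    "tflite": ".tflite",
    "edgetpu": "_edgetpu.tflite",
    "tfjs": "_web_model",
    "paddle": "_paddle_model",
}


def exported_path(weights: str, include: str) -> Path:
    """Return the expected output path for a weights file and an --include value."""
    p = Path(weights)
    return p.with_name(p.stem + EXPORT_SUFFIXES[include])


if __name__ == "__main__":
    for fmt in ("onnx", "engine", "edgetpu"):
        print(fmt, "->", exported_path("yolov5s.pt", fmt))
```

A lookup like this can be handy in deployment scripts that need to find the exported file without parsing the export log.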

Benchmarks

Benchmarks below were run on Colab Pro with the YOLOv5 tutorial notebook. To reproduce:

python benchmarks.py --weights yolov5s.pt --imgsz 640 --device 0

Colab Pro V100 GPU

benchmarks: weights=/content/yolov5/yolov5s.pt, imgsz=640, batch_size=1, data=/content/yolov5/data/coco128.yaml, device=0, half=False, test=False

Checking setup...

YOLOv5 🚀 v6.1-135-g7926afc torch 1.10.0+cu111 CUDA:0 (Tesla V100-SXM2-16GB, 16160MiB)

Setup complete ✅ (8 CPUs, 51.0 GB RAM, 46.7/166.8 GB disk)

Benchmarks complete (458.07s)

Format mAP@0.5:0.95 Inference time (ms)

0 PyTorch 0.4623 10.19

1 TorchScript 0.4623 6.85

2 ONNX 0.4623 14.63

3 OpenVINO NaN NaN

4 TensorRT 0.4617 1.89

5 CoreML NaN NaN

6 TensorFlow SavedModel 0.4623 21.28

7 TensorFlow GraphDef 0.4623 21.22

8 TensorFlow Lite NaN NaN

9 TensorFlow Edge TPU NaN NaN

10 TensorFlow.js NaN NaN

Colab Pro CPU

benchmarks: weights=/content/yolov5/yolov5s.pt, imgsz=640, batch_size=1, data=/content/yolov5/data/coco128.yaml, device=cpu, half=False, test=False

Checking setup...

YOLOv5 🚀 v6.1-135-g7926afc torch 1.10.0+cu111 CPU

Setup complete ✅ (8 CPUs, 51.0 GB RAM, 41.5/166.8 GB disk)

Benchmarks complete (241.20s)

Format mAP@0.5:0.95 Inference time (ms)

0 PyTorch 0.4623 127.61

1 TorchScript 0.4623 131.23

2 ONNX 0.4623 69.34

3 OpenVINO 0.4623 66.52

4 TensorRT NaN NaN

5 CoreML NaN NaN

6 TensorFlow SavedModel 0.4623 123.79

7 TensorFlow GraphDef 0.4623 121.57

8 TensorFlow Lite 0.4623 316.61

9 TensorFlow Edge TPU NaN NaN

10 TensorFlow.js NaN NaN
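To relate these logs to the speedup figures quoted in the ProTips, the ratios can be computed directly. The sketch below uses only inference times copied from the two benchmark runs above; `speedups` is a hypothetical helper name.

```python
# Inference times in ms, copied from the benchmark logs above (batch size 1, imgsz 640).
gpu_ms = {"PyTorch": 10.19, "TorchScript": 6.85, "ONNX": 14.63, "TensorRT": 1.89}
cpu_ms = {"PyTorch": 127.61, "TorchScript": 131.23, "ONNX": 69.34, "OpenVINO": 66.52}


def speedups(times_ms, baseline="PyTorch"):
    """Return baseline_time / format_time per format (values above 1 are faster)."""
    base = times_ms[baseline]
    return {name: base / ms for name, ms in times_ms.items()}


if __name__ == "__main__":
    for label, table in (("GPU", gpu_ms), ("CPU", cpu_ms)):
        for name, factor in speedups(table).items():
            print(f"{label} {name:<12} {factor:.2f}x")
```

On these particular runs TensorRT comes out at roughly 5.4x faster than PyTorch on the V100, while ONNX and OpenVINO are roughly 1.8x to 1.9x faster on CPU, so the "up to 3x" CPU figure should be read as an upper bound rather than what this log shows.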

Export a Trained YOLOv5 Model

This command exports a pretrained YOLOv5s model to TorchScript and ONNX formats. yolov5s.pt is the 'small' model, the second-smallest model available. Other options are yolov5n.pt, yolov5m.pt, yolov5l.pt and yolov5x.pt, along with their P6 counterparts (e.g. yolov5s6.pt), or your own custom training checkpoint (e.g. runs/exp/weights/best.pt). For details on all available models please see our README table.

python export.py --weights yolov5s.pt --include torchscript onnx

💡 ProTip: Add --half to export models at FP16 half precision for smaller file sizes

Output:

export: data=data/coco128.yaml, weights=['yolov5s.pt'], imgsz=[640, 640], batch_size=1, device=cpu, half=False, inplace=False, train=False, keras=False, optimize=False, int8=False, dynamic=False, simplify=False, opset=12, verbose=False, workspace=4, nms=False, agnostic_nms=False, topk_per_class=100, topk_all=100, iou_thres=0.45, conf_thres=0.25, include=['torchscript', 'onnx']

YOLOv5 🚀 v6.2-104-ge3e5122 Python-3.7.13 torch-1.12.1+cu113 CPU

Downloading https://github.com/ultralytics/yolov5/releases/download/v6.2/yolov5s.pt to yolov5s.pt...

100% 14.1M/14.1M [00:00<00:00, 274MB/s]

Fusing layers...

YOLOv5s summary: 213 layers, 7225885 parameters, 0 gradients

PyTorch: starting from yolov5s.pt with output shape (1, 25200, 85) (14.1 MB)

TorchScript: starting export with torch 1.12.1+cu113...

TorchScript: export success ✅ 1.7s, saved as yolov5s.torchscript (28.1 MB)

ONNX: starting export with onnx 1.12.0...

ONNX: export success ✅ 2.3s, saved as yolov5s.onnx (28.0 MB)

Export complete (5.5s)

Results saved to /content/yolov5

Detect: python detect.py --weights yolov5s.onnx

Validate: python val.py --weights yolov5s.onnx

PyTorch Hub: model = torch.hub.load('ultralytics/yolov5', 'custom', 'yolov5s.onnx')

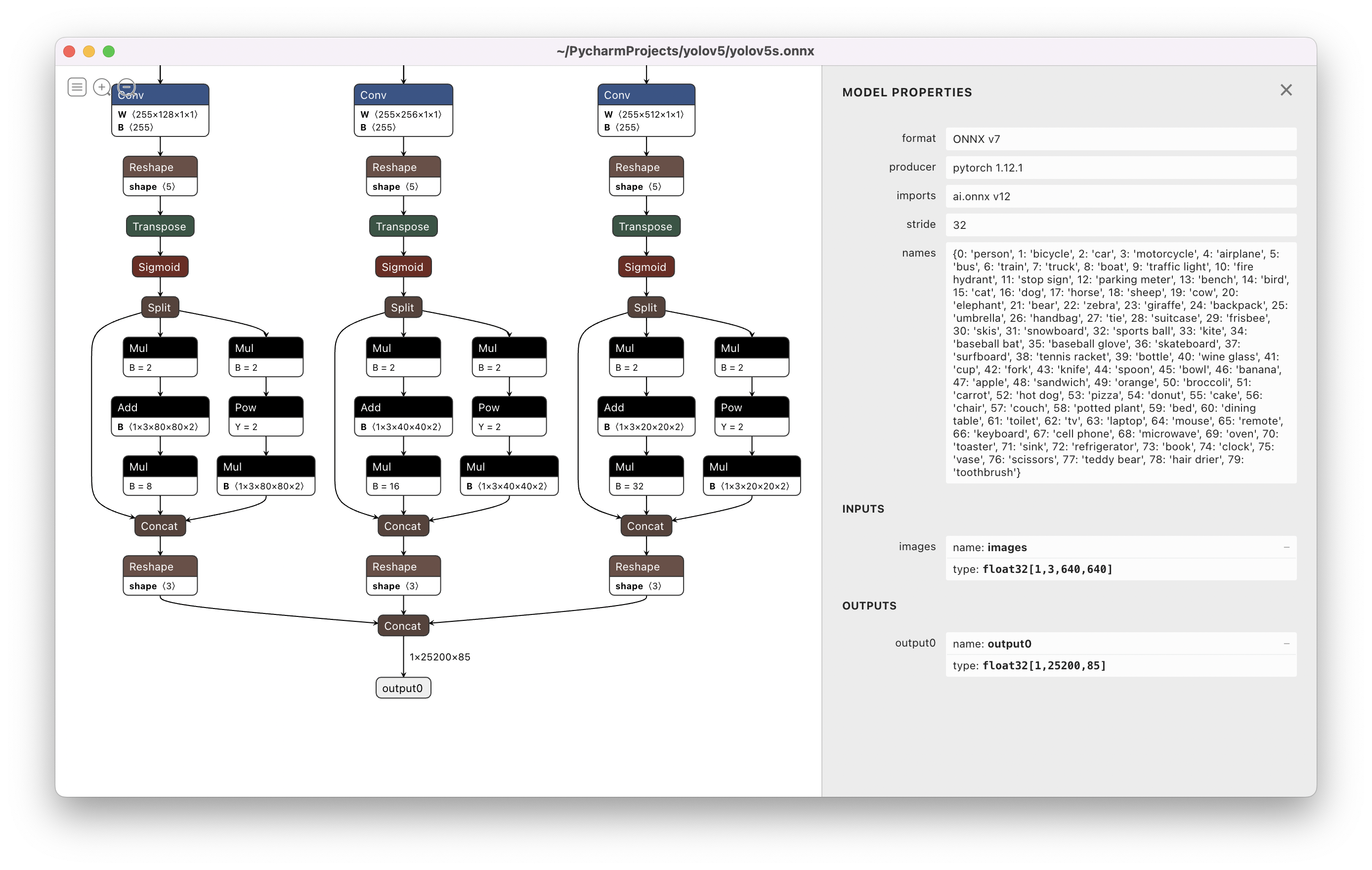

Visualize: https://netron.app/

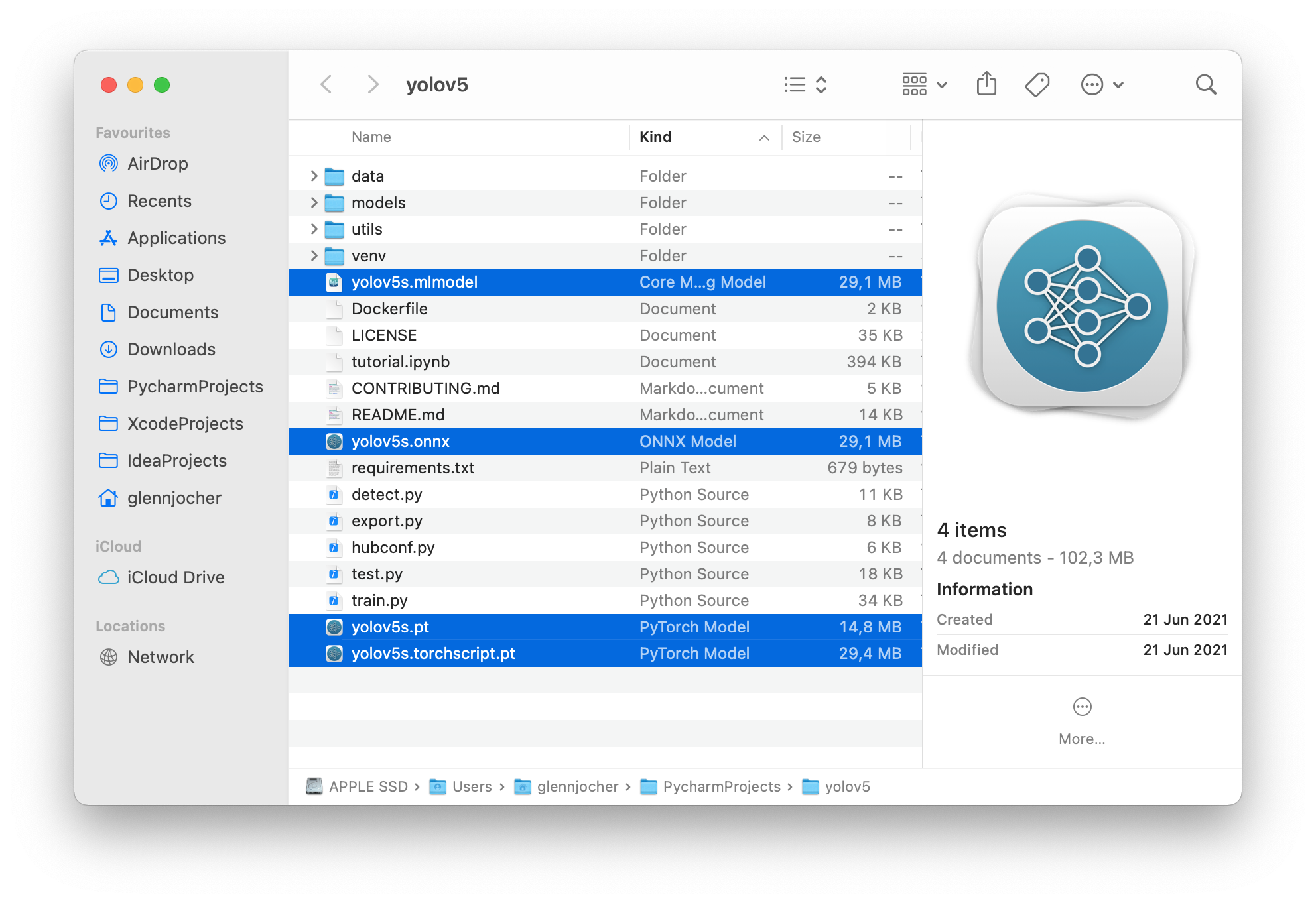

The 3 exported models will be saved alongside the original PyTorch model:

Netron Viewer is recommended for visualizing exported models:
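The output shape (1, 25200, 85) reported in the export log can be reproduced with a little arithmetic. The sketch below assumes the usual YOLOv5 P5 layout, which the guide itself does not spell out: three output strides (8, 16, 32), three anchors per grid cell, and 80 COCO classes. `num_predictions` is a hypothetical helper name.

```python
def num_predictions(imgsz=640, strides=(8, 16, 32), anchors_per_cell=3):
    """Count candidate boxes: one per anchor per grid cell at every output stride.

    The strides and anchors-per-cell defaults are assumptions (standard P5 models).
    """
    return sum(anchors_per_cell * (imgsz // s) ** 2 for s in strides)


if __name__ == "__main__":
    n = num_predictions(640)
    values_per_box = 4 + 1 + 80  # box (x, y, w, h) + objectness + 80 class scores
    print((1, n, values_per_box))  # (1, 25200, 85)
```

Because the count depends on the image size, a model exported at a different --imgsz will report a different middle dimension.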

Exported Model Usage Examples

detect.py runs inference on exported models:

python detect.py --weights yolov5s.pt # PyTorch

yolov5s.torchscript # TorchScript

yolov5s.onnx # ONNX Runtime or OpenCV DNN with --dnn

yolov5s_openvino_model # OpenVINO

yolov5s.engine # TensorRT

yolov5s.mlmodel # CoreML (macOS only)

yolov5s_saved_model # TensorFlow SavedModel

yolov5s.pb # TensorFlow GraphDef

yolov5s.tflite # TensorFlow Lite

yolov5s_edgetpu.tflite # TensorFlow Edge TPU

yolov5s_paddle_model # PaddlePaddle

val.py runs validation on exported models:

python val.py --weights yolov5s.pt # PyTorch

yolov5s.torchscript # TorchScript

yolov5s.onnx # ONNX Runtime or OpenCV DNN with --dnn

yolov5s_openvino_model # OpenVINO

yolov5s.engine # TensorRT

yolov5s.mlmodel # CoreML (macOS Only)

yolov5s_saved_model # TensorFlow SavedModel

yolov5s.pb # TensorFlow GraphDef

yolov5s.tflite # TensorFlow Lite

yolov5s_edgetpu.tflite # TensorFlow Edge TPU

yolov5s_paddle_model # PaddlePaddle

Use PyTorch Hub with exported YOLOv5 models:

import torch

# Model

model = torch.hub.load('ultralytics/yolov5', 'custom', 'yolov5s.pt')  # PyTorch

# To load an exported model, pass one of these paths as the third argument instead:

#   'yolov5s.torchscript'     # TorchScript

#   'yolov5s.onnx'            # ONNX Runtime

#   'yolov5s_openvino_model'  # OpenVINO

#   'yolov5s.engine'          # TensorRT

#   'yolov5s.mlmodel'         # CoreML (macOS only)

#   'yolov5s_saved_model'     # TensorFlow SavedModel

#   'yolov5s.pb'              # TensorFlow GraphDef

#   'yolov5s.tflite'          # TensorFlow Lite

#   'yolov5s_edgetpu.tflite'  # TensorFlow Edge TPU

#   'yolov5s_paddle_model'    # PaddlePaddle

# Images

img = 'https://ultralytics.com/images/zidane.jpg' # or file, Path, PIL, OpenCV, numpy, list

# Inference

results = model(img)

# Results

results.print() # or .show(), .save(), .crop(), .pandas(), etc.

OpenCV DNN inference

OpenCV inference with ONNX models:

python export.py --weights yolov5s.pt --include onnx

python detect.py --weights yolov5s.onnx --dnn # detect

python val.py --weights yolov5s.onnx --dnn # validate
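The export log above shows imgsz=[640, 640] and dynamic=False, so an ONNX model exported this way expects a fixed 640x640 input, and arbitrary images generally have to be resized and padded before inference. The sketch below shows only the geometry of a letterbox-style resize and how to map boxes back to the source image. It is modeled on the common letterbox approach rather than copied from the repository, and both function names are hypothetical.

```python
def letterbox_params(h, w, new_size=640):
    """Return (scale, pad_left, pad_top) for fitting an h x w image into a square canvas."""
    scale = min(new_size / h, new_size / w)  # keep aspect ratio, fit the longer side
    new_w, new_h = round(w * scale), round(h * scale)
    pad_left = (new_size - new_w) / 2  # padding is split evenly between both sides
    pad_top = (new_size - new_h) / 2
    return scale, pad_left, pad_top


def to_original(box, scale, pad_left, pad_top):
    """Map an (x1, y1, x2, y2) box from letterboxed coordinates back to the source image."""
    x1, y1, x2, y2 = box
    return ((x1 - pad_left) / scale, (y1 - pad_top) / scale,
            (x2 - pad_left) / scale, (y2 - pad_top) / scale)


if __name__ == "__main__":
    scale, pad_left, pad_top = letterbox_params(720, 1280)
    print(scale, pad_left, pad_top)  # 0.5 0.0 140.0
    print(to_original((0, 140, 640, 500), scale, pad_left, pad_top))  # (0.0, 0.0, 1280.0, 720.0)
```

Keeping the scale and padding from preprocessing is what allows detections to be drawn on the original image afterwards.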

C++ Inference

YOLOv5 OpenCV DNN C++ inference on exported ONNX model examples:

- https://github.com/Hexmagic/ONNX-yolov5/blob/master/src/test.cpp

- https://github.com/doleron/yolov5-opencv-cpp-python

YOLOv5 OpenVINO C++ inference examples:

- https://github.com/dacquaviva/yolov5-openvino-cpp-python

- https://github.com/UNeedCryDear/yolov5-seg-opencv-dnn-cpp

TensorFlow.js Web Browser Inference

- https://aukerul-shuvo.github.io/YOLOv5_TensorFlow-JS/

Environments

YOLOv5 may be run in any of the following up-to-date verified environments (with all dependencies including CUDA/CUDNN, Python and PyTorch preinstalled):

- Notebooks with free GPU:

- Google Cloud Deep Learning VM. See GCP Quickstart Guide

- Amazon Deep Learning AMI. See AWS Quickstart Guide

- Docker Image. See Docker Quickstart Guide

Status

If this badge is green, all YOLOv5 GitHub Actions Continuous Integration (CI) tests are currently passing. CI tests verify correct operation of YOLOv5 training, validation, inference, export and benchmarks on macOS, Windows, and Ubuntu every 24 hours and on every commit.

Thank you so much! I will deploy onnx model on mobile devices!

It only works with the pretrained 5s model.

@glenn-jocher My onnx is 1.7.0, python is 3.8.3, pytorch is 1.4.0 (your latest recommendation is 1.5.0). But exporting to ONNX failed because of opset version 12. This is my command line:

export PYTHONPATH="$PWD" && python models/export.py --weights ./weights/yolov5s.pt --img 640 --batch 1

And it failed with this error:

Fusing layers...

Model Summary: 140 layers, 7.45958e+06 parameters, 7.45958e+06 gradients

ONNX export failed: Unsupported ONNX opset version: 12

I don't think it is caused by my PyTorch version being lower than your recommendation. Any advice? Thank you.

I changed opset_version to 11 in export.py, and new error messages came up:

Fusing layers...

Model Summary: 140 layers, 7.45958e+06 parameters, 7.45958e+06 gradients

Segmentation fault (core dumped)

This is the full message:

$ export PYTHONPATH="$PWD" && python models/export.py --weights ./weights/yolov5s.pt --img 640 --batch 1

Namespace(batch_size=1, img_size=[640, 640], weights='./weights/yolov5s.pt')

/home/DL-001/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/serialization.py:593: SourceChangeWarning: source code of class 'torch.nn.modules.conv.Conv2d' has changed. you can retrieve the original source code by accessing the object's source attribute or set `torch.nn.Module.dump_patches = True` and use the patch tool to revert the changes.

warnings.warn(msg, SourceChangeWarning)

/home/DL-001/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/serialization.py:593: SourceChangeWarning: source code of class 'torch.nn.modules.container.ModuleList' has changed. you can retrieve the original source code by accessing the object's source attribute or set `torch.nn.Module.dump_patches = True` and use the patch tool to revert the changes.

warnings.warn(msg, SourceChangeWarning)

TorchScript export failed: Only tensors or tuples of tensors can be output from traced functions (getOutput at /opt/conda/conda-bld/pytorch_1579022027550/work/torch/csrc/jit/tracer.cpp:212)

frame #0: c10::Error::Error(c10::SourceLocation, std::string const&) + 0x47 (0x7fb3a6bdf627 in /home/DL-001/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/lib/libc10.so)

frame #1: torch::jit::tracer::TracingState::getOutput(c10::IValue const&, unsigned long) + 0x334 (0x7fb3b16d2024 in /home/DL-001/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/lib/libtorch.so)

frame #2: torch::jit::tracer::trace(std::vector<c10::IValue, std::allocator<c10::IValue> >, std::function<std::vector<c10::IValue, std::allocator<c10::IValue> > (std::vector<c10::IValue, std::allocator<c10::IValue> >)> const&, std::function<std::string (at::Tensor const&)>, bool, torch::jit::script::Module*) + 0x539 (0x7fb3b16d99f9 in /home/DL-001/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/lib/libtorch.so)

frame #3: <unknown function> + 0x759fed (0x7fb3ddbcafed in /home/DL-001/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/lib/libtorch_python.so)

frame #4: <unknown function> + 0x7720ee (0x7fb3ddbe30ee in /home/DL-001/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/lib/libtorch_python.so)

frame #5: <unknown function> + 0x28b8a7 (0x7fb3dd6fc8a7 in /home/DL-001/anaconda3/envs/pytorch/lib/python3.8/site-packages/torch/lib/libtorch_python.so)

<omitting python frames>

frame #24: __libc_start_main + 0xe7 (0x7fb416e13b97 in /lib/x86_64-linux-gnu/libc.so.6)

Fusing layers...

Model Summary: 140 layers, 7.45958e+06 parameters, 7.45958e+06 gradients

Segmentation fault (core dumped)

I debugged it and found the reason.

It failed at ts = torch.jit.trace(model, img), so I realized it was caused by the older version of PyTorch.

Then I upgraded PyTorch to 1.5.1, and it finally worked.
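A version guard before exporting can surface this kind of mismatch early. The sketch below is a hypothetical, standard-library-only helper (in real projects the `packaging.version` module is the usual tool). The 1.5.1 minimum is simply the version that worked in this thread, not an official requirement.

```python
import re


def version_tuple(version: str):
    """Turn '1.12.1+cu113' into (1, 12, 1), ignoring any local build suffix."""
    nums = [int(n) for n in re.findall(r"\d+", version.split("+")[0])[:3]]
    return tuple(nums + [0] * (3 - len(nums)))  # pad '1.7' to (1, 7, 0)


def meets_minimum(installed: str, minimum: str) -> bool:
    """Compare versions numerically, so that '1.10.0' counts as newer than '1.5.1'."""
    return version_tuple(installed) >= version_tuple(minimum)


if __name__ == "__main__":
    print(meets_minimum("1.4.0", "1.5.1"))         # False
    print(meets_minimum("1.12.1+cu113", "1.5.1"))  # True
```

In practice the installed version would come from `torch.__version__`; comparing it as plain strings would wrongly rank '1.10.0' below '1.5.1'.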

Why do you set export=True on the Detect() layer? This leaves the Detect() layer out of the ONNX model.

@Ezra-Yu yes that is correct. You are free to set it to False if that suits you better.

@glenn-jocher Why is the input size of the ONNX model fixed, while the .pt model accepts any multiple of 32?
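For context on the question above: the PyTorch model runs on any size that is a multiple of its largest stride, whereas the ONNX graph is traced at the size passed to the export script (the export log in the guide shows imgsz=[640, 640] with dynamic=False). A minimal sketch of rounding a requested size up to a valid multiple, assuming a maximum stride of 32 as stated in the question; the helper name is chosen for illustration.

```python
import math


def make_divisible(size: int, stride: int = 32) -> int:
    """Round size up to the nearest multiple of stride."""
    return math.ceil(size / stride) * stride


if __name__ == "__main__":
    for requested in (600, 640, 1000):
        print(requested, "->", make_divisible(requested))
    # 600 -> 608
    # 640 -> 640
    # 1000 -> 1024
```

Whichever size is chosen at export time is then the size every input must be resized or padded to at inference time, unless the model is exported with dynamic axes.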

Hi, is there any sample code that uses the exported ONNX model to get the Nx5 bboxes? I tried to use the postprocessing from detect.py, but it doesn't work well.

Hi @neverrop

I have added guidance over how this could be achieved here: https://github.com/ultralytics/yolov5/issues/343#issuecomment-658021043

Hope this is useful!
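As a rough illustration of what such post-processing involves, here is a dependency-free sketch of confidence filtering followed by per-class non-maximum suppression. It assumes the model output has already been decoded into rows of [cx, cy, w, h, objectness, class scores...] in pixels (the (1, 25200, 85) layout shown in the guide) and uses the conf_thres=0.25 and iou_thres=0.45 values from the export log. All function names are hypothetical, and this is not the repository's own non_max_suppression.

```python
def xywh_to_xyxy(cx, cy, w, h):
    """Convert a centre/size box to corner coordinates."""
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def iou(a, b):
    """Intersection over union of two boxes whose first four values are x1, y1, x2, y2."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def postprocess(rows, conf_thres=0.25, iou_thres=0.45):
    """Return detections as [x1, y1, x2, y2, confidence, class_index], best first."""
    candidates = []
    for row in rows:
        scores = row[5:]
        cls = max(range(len(scores)), key=scores.__getitem__)  # best class index
        conf = row[4] * scores[cls]  # objectness times class score
        if conf >= conf_thres:
            candidates.append([*xywh_to_xyxy(*row[:4]), conf, cls])
    candidates.sort(key=lambda d: d[4], reverse=True)
    kept = []
    for det in candidates:
        # Keep a box unless it overlaps a higher-scoring kept box of the same class.
        if all(k[5] != det[5] or iou(k, det) <= iou_thres for k in kept):
            kept.append(det)
    return kept


if __name__ == "__main__":
    demo = [
        [100, 100, 50, 50, 0.9, 0.8, 0.1],  # strong class-0 box
        [102, 101, 50, 50, 0.8, 0.7, 0.2],  # near-duplicate, suppressed by NMS
        [300, 300, 40, 40, 0.9, 0.1, 0.9],  # class-1 box elsewhere
        [10, 10, 5, 5, 0.1, 0.5, 0.5],      # below the confidence threshold
    ]
    for det in postprocess(demo):
        print(det)
```

Dropping the class index from each result gives the Nx5 form asked about. For a full-size output, a vectorized NumPy or PyTorch version would be much faster than this plain-Python loop.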

Thank you so much. I will try it today.

Would CoreML failure as shown below affect the successfully converted onnx model? Thank you.

ONNX export success, saved as weights/yolov5s.onnx

WARNING:root:TensorFlow version 2.2.0 detected. Last version known to be fully compatible is 1.14.0 .

WARNING:root:Keras version 2.4.3 detected. Last version known to be fully compatible of Keras is 2.2.4 .

Starting CoreML export with coremltools 3.4...

CoreML export failure: module 'coremltools' has no attribute 'convert'

Export complete. Visualize with https://github.com/lutzroeder/netron

Hi @shenglih

CoreML export doesn't affect the ONNX one in any way.

Regards

Starting CoreML export with coremltools 3.4...

CoreML export failure: module 'coremltools' has no attribute 'convert'

Export complete. Visualize with https://github.com/lutzroeder/netron.

Has anyone solved it?

Hi. I think you need to update to the latest coremltools package version.

Please see this one: https://github.com/ultralytics/yolov5/issues/315#issuecomment-656629623

Reinstall your coremltools:

pip install coremltools==4.0b2

My PyTorch version is 1.4 and coremltools is 4.0b2, but I get these errors:

Starting ONNX export with onnx 1.7.0...

Fusing layers...

Model Summary: 284 layers, 8.84108e+07 parameters, 8.45317e+07 gradients

ONNX export failure: Unsupported ONNX opset version: 12

Starting CoreML export with coremltools 4.0b2...

CoreML export failure: name 'ts' is not defined

How can I solve it?

@zhepherd

Please install torch=1.5.1.

Try this out:

import coremltools as ct

model = ct.converters.onnx.convert(model='my_model.onnx')

thx it's ok

When I convert the ONNX model to TensorRT, I run into this problem:

While parsing node number 164 [Resize]:

ERROR: ModelImporter.cpp:124 In function parseGraph:

[5] Assertion failed: ctx->tensors().count(inputName)

I use tensorRT 7.0 with opset 12

How is the output tensor meant to be read? Currently when I read the tensor it includes negative numbers and has a 5D shape. I'm also new to Yolo

Yes Brother, Thanks its working now.

Do you have any further step to deploy in ios?

I don't know if it is okay to put my questions here, but hopefully someone can answer them.

I successfully converted my custom YOLOv5 model (trained from the pretrained yolov5x model using only the car, bus and truck data from the 2017_train COCO dataset) and made an ONNX model too, following the instructions in this GitHub repo. However, the outputs of the ONNX model are quite hard for me to understand, and I don't know how to draw bounding boxes on the original images from them.

My code and its output are below.

"======================code======================="

# Layer names for the ONNX model, following https://github.com/onnx/onnx/issues/2657

import numpy as np

import onnx

import onnxruntime as ort

model = onnx.load('xxx.onnx')

output = [node.name for node in model.graph.output]

input_all = [node.name for node in model.graph.input]

input_initializer = [node.name for node in model.graph.initializer]

net_feed_input = list(set(input_all) - set(input_initializer))  # real inputs, excluding stored weights

print('Inputs: ', net_feed_input)  # Inputs:  ['images']

print('Outputs: ', output)  # Outputs:  ['output', '772', '791']

# Inference, following https://pytorch.org/docs/stable/onnx.html

ort_session = ort.InferenceSession('best.onnx')

outputs = ort_session.run(None, {'images': np.random.randn(1, 3, 640, 640).astype(np.float32)})

print(outputs[0])

"======================output========================="

Well, to put it in a nutshell, my questions are below.

- Could anyone tell me what each dimension of the output means?

- Could anyone explain the post-processing stages after ONNX model inference? (For example, https://github.com/onnx/models/blob/master/vision/object_detection_segmentation/yolov4/dependencies/inference.ipynb)

thanks

@jubrowon Follow this guy's script and the thread and you should be fine. https://github.com/ultralytics/yolov5/issues/343#issuecomment-659223637

Going through it will break down most of what you'll need in order to understand what's going on. As a quick, simplified overview: by default the final postprocessing layer of the model isn't exported, and that's why the output seems confusing.
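To make that overview concrete, here is a rough sketch of what the missing layer does to a single raw prediction. It assumes the Detect-layer decoding used by YOLOv5 around the time of this thread (sigmoid on every value, then grid-offset and anchor scaling) and that a raw 5D output is laid out as (batch, anchors, grid_y, grid_x, values). Both are assumptions that should be checked against models/yolo.py in your own checkout. The function names are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode_cell(raw, col, row, stride, anchor_wh):
    """Decode one raw vector [tx, ty, tw, th, obj, cls...] from grid cell (col, row).

    stride is the downsampling factor of this output (e.g. 8, 16 or 32) and
    anchor_wh is the anchor size in pixels. Returns [cx, cy, w, h, obj, cls...].
    """
    s = [sigmoid(v) for v in raw]  # raw values are logits, so they can be negative
    cx = (s[0] * 2 - 0.5 + col) * stride
    cy = (s[1] * 2 - 0.5 + row) * stride
    w = (s[2] * 2) ** 2 * anchor_wh[0]
    h = (s[3] * 2) ** 2 * anchor_wh[1]
    return [cx, cy, w, h, *s[4:]]


if __name__ == "__main__":
    # All-zero logits decode to the cell centre with exactly the anchor's size.
    print(decode_cell([0.0] * 6, col=3, row=2, stride=8, anchor_wh=(10, 13)))
    # [28.0, 20.0, 10.0, 13.0, 0.5, 0.5]
```

Applying this to every cell of every output and then running confidence filtering and non-maximum suppression yields the final boxes.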

@BernardinD Thank you so much!

@glenn-jocher some notes for Windows:

it seems like setting the PYTHONPATH using set PYTHONPATH="%cd%" is not enough for torch to load the model correctly (I get an error ModuleNotFoundError: No module named 'models' from torch.load when trying to load the model). I tried a few things to make the relative import work, but couldn't find a simple solution.

What I did to make it work is to simply move export.py at the root of the project and then it exported correctly following the export command.

@Ownmarc CI tests include export on Windows. All tests are passing. Code below, recent run here. https://github.com/ultralytics/yolov5/blob/d2da5230533db7a2c76af1dde6d91c7e1631a1b8/.github/workflows/ci-testing.yml#L60-L75

@glenn-jocher ah, we have to use bash commands and not the cmd ! I tried it using bash and it worked as intended, I didn't notice I had to use bash there, I rarely use bash on Windows!

i get an error : Can't get attribute 'Hardswish' on <module 'torch.nn.modules.activation' from ...