data-workspace-frontend


Feature/data flow ide

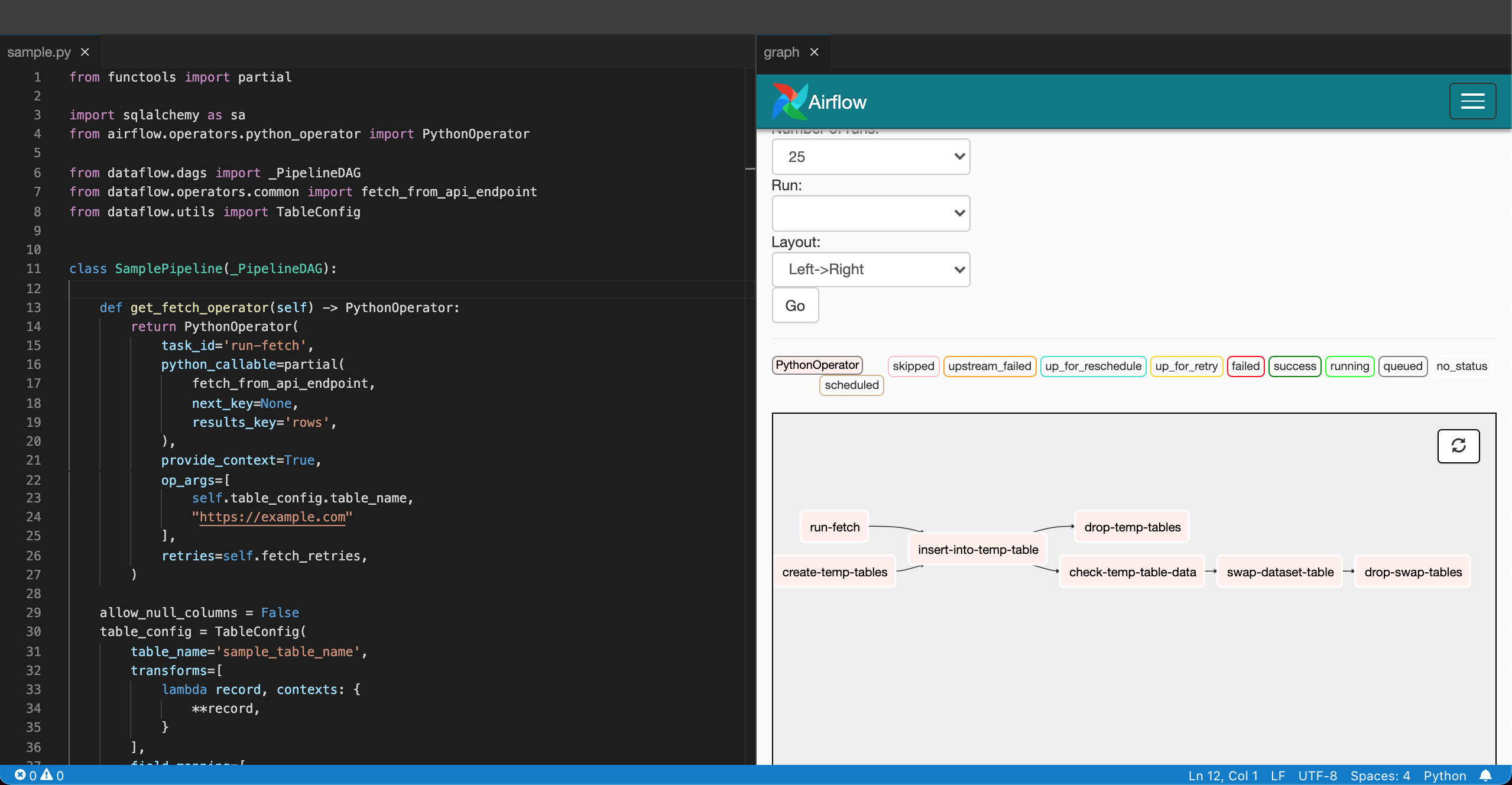

Adds a new tool for developing Data Flow pipelines. The code for the tool can be found at https://github.com/uktrade/data-flow-sandbox.

Main changes:

- Add the Terraform infrastructure for the tool. The ECS task is made up of two containers:
  - The main one is the front end, which is a Theia image.
  - The other is Data Flow itself. The Data Flow image has been stripped down and ships with a sample pipeline that opens automatically when the tool is launched.

  Static env vars are stored in the tool's spawner options. Dynamic ones are passed in when the tool launches: the database URL used to store the datasets/airflow tables, and the S3 bucket used to store the fetched data.

- Set the temporary db user's search_path to the user's schema so that the airflow tables are created there. The airflow docs recommend this approach because SQLAlchemy doesn't provide a way to specify a schema in the connection URL; see https://airflow.apache.org/docs/apache-airflow/stable/howto/set-up-database.html#setting-up-a-postgresql-database. Currently this is only done for the Data Flow IDE tool in case it causes unwanted side effects elsewhere, but I think it should be safe to do for all tools.

  The airflow tables are stored in the user's schema for the following reasons:

  - It simplifies the infrastructure: no separate schema or database is required for the airflow tables

  - No permission model is required to ensure users can only interact with their own dags

  - There is no need to pass "master" credentials to the tool via env vars, which could easily be exposed by logging os.environ

- Add a 'frame-src' directive to the proxy's CSP header so that the IDE iframe can load subdomain URLs
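The search_path change described above can be sketched as follows. This is a minimal illustration that assumes a hypothetical helper, `search_path_sql`, and made-up user and schema names; it is not the code in this PR.

The helper builds the PostgreSQL `ALTER USER ... SET search_path` statement that the Airflow docs describe. It quotes both identifiers, so names containing special characters are handled safely. PostgreSQL creates unqualified tables in the first existing schema on the search_path, which is why Airflow's tables would end up in the user's schema.

```python
def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def search_path_sql(db_user: str, schema: str) -> str:
    """Return SQL making `schema` the default schema for sessions of `db_user`.

    Hypothetical helper: unqualified CREATE TABLE statements (such as the ones
    Airflow issues through SQLAlchemy) land in the first schema on search_path.
    """
    return f"ALTER USER {quote_ident(db_user)} SET search_path TO {quote_ident(schema)};"


if __name__ == "__main__":
    # Hypothetical temporary user and schema names, for illustration only.
    print(search_path_sql("user_abc123_tool", "_user_abc123"))
```

A role-level `SET` only becomes the default for sessions opened afterwards. It would therefore need to run before the tool first connects to the database.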

Known issues

- Adding the dags volume to s3sync doesn't work: mobius3 deletes all the local files for some reason. I had some issues with it picking up Docker volumes locally, so it may be related. This means any changes to the sample pipeline are lost when the tool is stopped and launched again

- The spawner options for a tool application template are overwritten after a Jenkins/Terraform deployment, so they need to be updated manually each time

- The environment variables contained in the spawner options are passed to all containers in the task definition. This means S3Sync and Theia receive Data Flow specific environment variables
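The last known issue can be illustrated with the `overrides` structure accepted by the ECS RunTask API, for example via boto3's `ecs_client.run_task(..., overrides=...)`. This is a minimal sketch under stated assumptions; it is not the code in this PR:

- It assumes the env vars are supplied as per-container overrides at launch.
- `container_overrides` is a hypothetical helper.
- The container names and env var names are made up.

It contrasts giving every container the same environment (the behaviour described above) with adding Data Flow specific variables only to the Data Flow container.

```python
from typing import Dict, List


def to_ecs_environment(env: Dict[str, str]) -> List[Dict[str, str]]:
    """Convert a dict into the list of name/value pairs that ECS expects."""
    return [{"name": key, "value": value} for key, value in sorted(env.items())]


def container_overrides(
    containers: List[str],
    shared_env: Dict[str, str],
    per_container_env: Dict[str, Dict[str, str]],
) -> Dict[str, list]:
    """Build a hypothetical `overrides` argument for an ECS RunTask call.

    `shared_env` goes to every container (the current behaviour);
    entries in `per_container_env` are added only to the named container.
    """
    return {
        "containerOverrides": [
            {
                "name": name,
                "environment": to_ecs_environment(
                    {**shared_env, **per_container_env.get(name, {})}
                ),
            }
            for name in containers
        ]
    }


if __name__ == "__main__":
    # Hypothetical container and variable names, for illustration only.
    overrides = container_overrides(
        ["theia", "data-flow", "s3sync"],
        shared_env={"SHARED_SETTING": "1"},
        per_container_env={"data-flow": {"DATA_FLOW_DB_URL": "postgresql://example"}},
    )
    for override in overrides["containerOverrides"]:
        print(override["name"], [item["name"] for item in override["environment"]])
```

Keeping the Data Flow specific values in `per_container_env` would stop S3Sync and Theia from receiving them. The trade-off is that the launch code has to know which container each variable belongs to.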