onnxruntime_backend

Engine files are not created by the TensorRT execution provider when trt_engine_cache_enable is true on the latest tritonserver releases (22.08)

Description

The TensorRT execution provider does not create engine files when trt_engine_cache_enable is true. Setting or omitting trt_engine_cache_path makes no difference.

Triton Information

I tried several Triton server versions.

It works with tritonserver 21.10: nvcr.io/nvidia/tritonserver:21.10-py

It no longer works with version 22.07: nvcr.io/nvidia/tritonserver:22.07-py

For reproducibility, I'm using the official Triton server Docker images without any modification.

To Reproduce

- Download any ONNX model from https://github.com/onnx/models. I personally used this one: https://github.com/onnx/models/blob/main/vision/classification/resnet/model/resnet50-v2-7.onnx

- Place the model in a directory called "test_onnx" in the Triton server's model repository. I used "/models", mounted into the container.

- Run Triton:

docker run -ti --rm --gpus '"device=all"' -v /your_triton_path/models:/models nvcr.io/nvidia/tritonserver:22.07-py3 bash

- Use this config:

name: "test_onnx"
platform: "onnxruntime_onnx"
max_batch_size: 0
optimization { execution_accelerators {
  gpu_execution_accelerator : [ {
    name : "tensorrt"
    parameters { key: "precision_mode" value: "FP32" }
    parameters { key: "trt_engine_cache_enable" value: "true" }
    parameters { key: "trt_engine_cache_path" value: "/models/test_onnx/1" }
    parameters { key: "max_workspace_size_bytes" value: "2147483648" }
  } ]
}}

- Inside the container, launch Triton with:

tritonserver --model-store=/models
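As a sketch of the repository layout these steps assume, the script below copies the downloaded model to `<repo>/test_onnx/1/model.onnx` (Triton's default model file name for the `onnxruntime_onnx` platform) and writes the config above as `config.pbtxt`. The helper name `make_model_repo` is hypothetical, and the paths are placeholders to adapt to your setup.

```python
import shutil
from pathlib import Path

# The same model configuration as in the reproduction steps.
CONFIG_PBTXT = """\
name: "test_onnx"
platform: "onnxruntime_onnx"
max_batch_size: 0
optimization { execution_accelerators {
  gpu_execution_accelerator : [ {
    name : "tensorrt"
    parameters { key: "precision_mode" value: "FP32" }
    parameters { key: "trt_engine_cache_enable" value: "true" }
    parameters { key: "trt_engine_cache_path" value: "/models/test_onnx/1" }
    parameters { key: "max_workspace_size_bytes" value: "2147483648" }
  } ]
}}
"""


def make_model_repo(repo_root, onnx_path, model_name="test_onnx", version="1"):
    """Hypothetical helper: create <repo_root>/<model_name>/config.pbtxt and
    <repo_root>/<model_name>/<version>/model.onnx, returning the model directory."""
    model_dir = Path(repo_root) / model_name
    version_dir = model_dir / version
    version_dir.mkdir(parents=True, exist_ok=True)
    # Triton looks for model.onnx inside the numbered version directory by default.
    shutil.copyfile(onnx_path, version_dir / "model.onnx")
    (model_dir / "config.pbtxt").write_text(CONFIG_PBTXT)
    return model_dir
```

For example, calling `make_model_repo("/your_triton_path/models", "resnet50-v2-7.onnx")` on the host before starting the container produces the directory that the `docker run` command mounts as `/models`.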

Expected behavior

In version 21.10, this config produces an engine file such as TensorrtExecutionProvider_TRTKernel_graph_torch_jit_7371022985846014763_1_0.engine in /models/test_onnx/1.

In the latest Triton server releases, this file is not created.
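One way to check whether the cache was written is to list the engine files in the directory given by `trt_engine_cache_path`. The following is a minimal sketch; the helper name `find_engine_files` is hypothetical, and the `TensorrtExecutionProvider_*.engine` pattern is an assumption based on the file name observed with 21.10.

```python
import sys
from pathlib import Path


def find_engine_files(cache_dir):
    """Hypothetical helper: return the names of cached TensorRT engine files, sorted."""
    cache = Path(cache_dir)
    if not cache.is_dir():
        return []
    # Pattern assumed from the 21.10 file name, e.g.
    # TensorrtExecutionProvider_TRTKernel_graph_torch_jit_..._1_0.engine
    return sorted(p.name for p in cache.glob("TensorrtExecutionProvider_*.engine"))


if __name__ == "__main__":
    # Defaults to the cache path from the config; pass another directory as argv[1].
    target = sys.argv[1] if len(sys.argv) > 1 else "/models/test_onnx/1"
    engines = find_engine_files(target)
    print(f"{len(engines)} engine file(s) in {target}")
    for name in engines:
        print("  " + name)
```

Run on the host, it can point at `/your_triton_path/models/test_onnx/1`, the same directory the container sees as `/models/test_onnx/1`.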

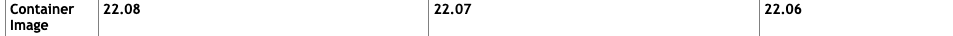

EDIT: I found that the issue is related to ONNX Runtime 1.12.0. The bug appears starting with Triton server 22.07, and if we look at the support matrix:

I also found what changed in that version: ONNX Runtime now saves the engine in the destructor of the ONNX Runtime session, whereas before it was saved in the constructor. They changed this because, in some use cases, the engine changes after the session is created. Version 1.12.1 restores the old behavior because many users complained, as in: https://github.com/microsoft/onnxruntime/issues/12322
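To observe the session-lifetime behavior without Triton, one could create an ONNX Runtime session directly with the TensorRT execution provider, run it once, and list the engine files while the session is alive and again after it is released. This is a sketch assuming a GPU build of the `onnxruntime` Python package with TensorRT available; the helper names, the CUDA fallback provider, and the `1x3x224x224` float input (intended to match resnet50-v2-7) are assumptions. Going by the description above, with ONNX Runtime 1.12.0 the engine would be expected to appear only after the session is released.

```python
import gc
from pathlib import Path


def trt_provider_options(cache_dir, workspace_bytes=2147483648):
    """Hypothetical helper: TensorRT EP options roughly mirroring the Triton config."""
    return {
        "trt_engine_cache_enable": True,
        "trt_engine_cache_path": str(cache_dir),
        "trt_max_workspace_size": workspace_bytes,
        "trt_fp16_enable": False,  # stay in FP32, like precision_mode "FP32"
    }


def engine_files(cache_dir):
    """List *.engine files currently present in cache_dir."""
    return sorted(p.name for p in Path(cache_dir).glob("*.engine"))


def check_engine_cache(model_path, cache_dir):
    """Run one inference; return engine files (while session alive, after release)."""
    import numpy as np
    import onnxruntime as ort  # assumes a GPU build with TensorRT support

    Path(cache_dir).mkdir(parents=True, exist_ok=True)
    sess = ort.InferenceSession(
        model_path,
        providers=[
            ("TensorrtExecutionProvider", trt_provider_options(cache_dir)),
            "CUDAExecutionProvider",
        ],
    )
    input_name = sess.get_inputs()[0].name
    # Assumed input shape for resnet50-v2-7.
    x = np.random.rand(1, 3, 224, 224).astype(np.float32)
    sess.run(None, {input_name: x})
    while_alive = engine_files(cache_dir)

    del sess  # drop the last reference so the session's destructor runs
    gc.collect()
    after_release = engine_files(cache_dir)
    return while_alive, after_release
```

Comparing `check_engine_cache("resnet50-v2-7.onnx", "/tmp/trt_cache")` under onnxruntime-gpu 1.12.0 and 1.12.1 should show whether the engine is written only on session teardown.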