crawler

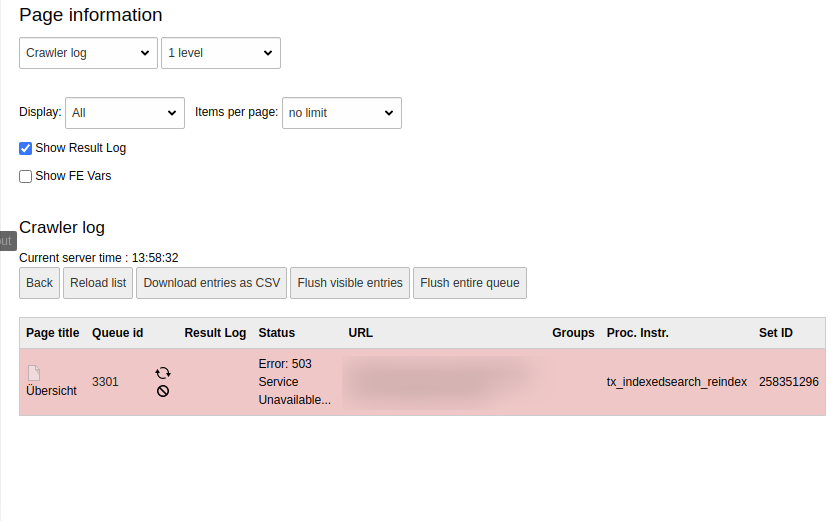

Crawler Process status : 503 Service Unavailable

Bug Report

Current Behavior: I have installed the extension and am trying to index pages by running the crawler process manually. I am using crawler 9.2.6 on TYPO3 9.5.28 in non-Composer mode. When I add a process, the status is always "503 Service Unavailable" and the page is not indexed. Could you please help me sort this out? The baseurl field in the configuration is empty (I also tried setting a baseurl).

Expected behavior/output: The status should be OK and the page should be indexed.

Steps to reproduce

- Start Crawling -> select Configuration -> Crawl URLs

- Continue and show log

- Add Process Queue

Environment

- OS : Linux 5.4.0-44-generic

- PHP : 7.3.25

- CLI path : /usr/local/bin/php /home/www/XXX/html/typo3/typo3/sysext/core/bin/typo3 crawler:processQueue

- TYPO3 9.5.28

- Crawler 9.2.6

- no Composer mode

- Page has no htaccess password protection added.

Additional context: For the frontend search I am using the Indexed Search plugin.

Does the "Error: 503" say more when you hover over the text?

No :(

And the frontend is working as expected? A 503 is a server error, so do you have any information from sys_log or the Apache/PHP log?
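One way to gather that information is to request the affected page outside the crawler and look at the status code, response headers and the start of the body. Below is a minimal Python sketch using only the standard library. The function name `probe` and the `User-Agent` value are hypothetical and not part of the crawler or TYPO3, and the sketch does not reproduce whatever headers the crawler itself sends, so it may get a different response than the crawler does.

```python
import urllib.error
import urllib.request


def probe(url, timeout=10.0):
    """GET url and return (status code, headers dict, first 500 bytes of body as text)."""
    # Hypothetical User-Agent, chosen only so the request is easy to spot in server logs.
    request = urllib.request.Request(url, headers={"User-Agent": "crawler-debug-probe"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            body = response.read(500).decode("utf-8", "replace")
            return response.status, dict(response.headers), body
    except urllib.error.HTTPError as error:
        # urllib raises HTTPError for 4xx/5xx responses; the exception
        # still carries the status code, headers and body of the response.
        body = error.read(500).decode("utf-8", "replace")
        return error.code, dict(error.headers), body
```

Calling `probe()` with the URL of a page that fails in the crawler returns the status together with the headers, which can then be compared against the web server log entry for the same request.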

Do you have the crawler settings at directRequest active or not? https://docs.typo3.org/p/aoepeople/crawler/master/en-us/Configuration/ExtensionManagerConfiguration/Index.html#extension-manager-configuration

@tomasnorre I could not find any related errors in sys_log. This is on a mittwald server.

Right now directRequest is not active in the crawler settings. I also tried activating it, but then nothing happens at all: no process runs, and the status shows neither a 503 error nor OK.

As for your other question: yes, the frontend is working fine, there is no issue there.

Thanks for the update. I'll look into this.

Please update the issue if you find any additional information.

Could it be related to? https://github.com/AOEpeople/crawler/issues/758

That's also on a mittwald server.

I don't think it is related to that, because the cleanup process is working fine.

ok. Thanks for the info.

As both Crawler 9.x.y and 10.x.y are compatible with TYPO3 9, would you mind trying to update the crawler to see if the issue persists?

Hi, we are having similar problems with the crawler. I closed #820 and pasted my issue here.

We can build the queue, but processing (--mode exec) gives us, e.g.: 347/357 [===========================>] 97%Error checking Crawler Result: ... for Indexed_search. The error is "..." only if we use the option "Make direct requests"; otherwise we get a 403 error.

Enabling indexing in the frontend works. Luckily, after building the queue without exec, we can also manually crawl PDFs in the Info screen of the backend by clicking the circle arrows button. We have quite a big site with almost daily edits, so it would be really great if we could figure out the problem. We were successfully running crawler 6.7.3 with TYPO3 8.7 before.

The next step would be to migrate the TYPO3 installation to a Composer install.

Environment

- Crawler version(s): 10.0.3

- TYPO3 version(s): 9.5.30

- Indexed Search 9.5.30

- Not Composer installed

- Windows Server 2012 R2

- Crawler Configuration is very standard: "Keeps page configured protocol", "Re-indexing [tx_indexedsearch_reindex]"

- We tried both a blank BaseURL and a manual one matching our installation

- No Pids only, no Excluded pages, empty Configuration, empty Processing instruction Parameters

LocalConfiguration:

    'crawler' => [
        'cleanUpOldQueueEntries' => '1',
        'cleanUpProcessedAge' => '2',
        'cleanUpScheduledAge' => '7',
        'countInARun' => '100',
        'crawlHiddenPages' => '0',
        'enableTimeslot' => '1',
        'frontendBasePath' => '',
        'makeDirectRequests' => '1',
        'maxCompileUrls' => '1000',
        'phpBinary' => 'php',
        'phpPath' => 'C:/php/php.exe',
        'processDebug' => '1',
        'processLimit' => '1',
        'processMaxRunTime' => '300',
        'processVerbose' => '0',
        'purgeQueueDays' => '14',
        'sleepAfterFinish' => '10',
        'sleepTime' => '1000',
    ],

Could this be related to #851, where the issue is that the "baseURL" is not being taken from the Sites configuration?

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.