TRUNCATE statement is not propagated correctly to Elasticsearch whereas INSERT, UPDATE and DELETE works fine!

PGSync version: 2.1.7

Postgres version: 13

Elasticsearch version: 7.14.2

Redis version: 2.4.6

Python version: 3.9

Problem Description: Truncate Statement is not propagated correctly to elasticsearch and error given below is thrown!

pgsync Schema:

[

{

"database":"user_service",

"index": "user-service",

"nodes":{

"table":"laptops",

"columns":[

"laptop_brand",

"laptop_processor",

"laptop_ram",

"laptop_quality"

],

"children":[

{

"table":"users",

"columns":[

"name",

"email",

"phone",

"laptop_id"

],

"relationship":{

"variant":"object",

"type":"one_to_many",

"foreign_key": {

"child": ["laptop_id"],

"parent": ["laptop_uuid"]

}

}

}

]

}

}

]

Error Message (if any):

2021-10-21 16:45:13.012:ERROR:pgsync.sync: Exception 'bool' object is not callable

Traceback (most recent call last):

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\site-packages\pgsync\sync.py", line 884, in sync

self.es.bulk(self.index, docs)

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\site-packages\pgsync\elastichelper.py", line 111, in bulk

for _ in helpers.parallel_bulk(

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\site-packages\elasticsearch\helpers\actions.py", line 472, in parallel_bulk

for result in pool.imap(

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\multiprocessing\pool.py", line 870, in next

raise value

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\multiprocessing\pool.py", line 125, in worker

result = (True, func(*args, **kwds))

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\multiprocessing\pool.py", line 144, in _helper_reraises_exception

raise ex

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\multiprocessing\pool.py", line 388, in _guarded_task_generation

for i, x in enumerate(iterable):

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\site-packages\elasticsearch\helpers\actions.py", line 155, in _chunk_actions

for action, data in actions:

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\site-packages\pgsync\sync.py", line 714, in _payloads

filters = self._truncate(node, root, filters)

TypeError: 'bool' object is not callable

Exception in thread Thread-16:

Traceback (most recent call last):

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\threading.py", line 973, in _bootstrap_inner

self.run()

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\threading.py", line 910, in run

self._target(*self._args, **self._kwargs)

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\site-packages\pgsync\sync.py", line 913, in poll_redis

self.on_publish(payloads)

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\site-packages\pgsync\sync.py", line 1001, in on_publish

self.sync(self._payloads(_payloads))

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\site-packages\pgsync\sync.py", line 884, in sync

self.es.bulk(self.index, docs)

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\site-packages\pgsync\elastichelper.py", line 111, in bulk

for _ in helpers.parallel_bulk(

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\site-packages\elasticsearch\helpers\actions.py", line 472, in parallel_bulk

for result in pool.imap(

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\multiprocessing\pool.py", line 870, in next

raise value

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\multiprocessing\pool.py", line 125, in worker

result = (True, func(*args, **kwds))

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\multiprocessing\pool.py", line 144, in _helper_reraises_exception

raise ex

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\multiprocessing\pool.py", line 388, in _guarded_task_generation

for i, x in enumerate(iterable):

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\site-packages\elasticsearch\helpers\actions.py", line 155, in _chunk_actions

for action, data in actions:

File "C:\Users\Inam\AppData\Local\Programs\Python\Python39\lib\site-packages\pgsync\sync.py", line 714, in _payloads

filters = self._truncate(node, root, filters)

Also Why does pgsync make use of triggers, does it not affect performance? can't we just use WAL?

- Yes there is a problem here with the

TRUNCATEop. I will investigate and fix - If you are relying on the WAL, you are constantly polling a data source for changes even when there is None

- With triggers, it is more even driven. You get notified when there is a change. So triggers are more efficient and faster this way

What I am concerned about with the triggers is performance, is this something I should worry about when we are going to production environment? Obviously there will be 1000s of insert, update and delete requests per second and on each change a trigger will be called. So I was worried about performance there, if you can offer some insight on that, it would be great.

On Fri, Oct 22, 2021, 12:07 AM Tolu Aina @.***> wrote:

- Yes there is a problem here with the TRUNCATE op. I will investigate and fix

- If you are relying on the WAL, you are constantly polling a data source for changes even when there is None

- With triggers, it is more even driven. You get notified when there is a change. So triggers are more efficient and faster this way

— You are receiving this because you authored the thread. Reply to this email directly, view it on GitHub https://github.com/toluaina/pgsync/issues/197#issuecomment-948919355, or unsubscribe https://github.com/notifications/unsubscribe-auth/AODNGHXLOAOZQSFURUTKZ73UIBQF5ANCNFSM5GN53TNQ . Triage notifications on the go with GitHub Mobile for iOS https://apps.apple.com/app/apple-store/id1477376905?ct=notification-email&mt=8&pt=524675 or Android https://play.google.com/store/apps/details?id=com.github.android&referrer=utm_campaign%3Dnotification-email%26utm_medium%3Demail%26utm_source%3Dgithub.

Do you have a high constant write rate to your db? I would suggest doing a benchmark to see how this works in prod. Maybe you can use this as a starting point to simulate the db writes You might also want to measure the writes per second with and without the triggers.

Sure, I will do that.

On Fri, Oct 22, 2021, 12:59 AM Tolu Aina @.***> wrote:

Do you have a high constant write rate to your db? I would suggest doing a benchmark to see how this works in prod. Maybe you can use this https://github.com/toluaina/pgsync/blob/master/examples/book/benchmark.py as a starting point to simulate the db writes You might also want to measure the writes per second with and without the triggers.

— You are receiving this because you authored the thread. Reply to this email directly, view it on GitHub https://github.com/toluaina/pgsync/issues/197#issuecomment-948958302, or unsubscribe https://github.com/notifications/unsubscribe-auth/AODNGHVMW2LYYT6QE6HQ3DDUIBWKZANCNFSM5GN53TNQ . Triage notifications on the go with GitHub Mobile for iOS https://apps.apple.com/app/apple-store/id1477376905?ct=notification-email&mt=8&pt=524675 or Android https://play.google.com/store/apps/details?id=com.github.android&referrer=utm_campaign%3Dnotification-email%26utm_medium%3Demail%26utm_source%3Dgithub.

My laptop specs, core i7 10510u , 16gb ram and an nvme ssd 500GB

I did the benchmark on book example you asked.

Here are the result of avg of 5 runs without triggers: Added 5000 books in 0.60sec Result of avg 5 runs with triggers: Added 5000 books in 0.84sec

Thats a 240ms difference. Isn't that huge?

On Fri, Oct 22, 2021, 12:59 AM Tolu Aina @.***> wrote:

Do you have a high constant write rate to your db? I would suggest doing a benchmark to see how this works in prod. Maybe you can use this https://github.com/toluaina/pgsync/blob/master/examples/book/benchmark.py as a starting point to simulate the db writes You might also want to measure the writes per second with and without the triggers.

— You are receiving this because you authored the thread. Reply to this email directly, view it on GitHub https://github.com/toluaina/pgsync/issues/197#issuecomment-948958302, or unsubscribe https://github.com/notifications/unsubscribe-auth/AODNGHVMW2LYYT6QE6HQ3DDUIBWKZANCNFSM5GN53TNQ . Triage notifications on the go with GitHub Mobile for iOS https://apps.apple.com/app/apple-store/id1477376905?ct=notification-email&mt=8&pt=524675 or Android https://play.google.com/store/apps/details?id=com.github.android&referrer=utm_campaign%3Dnotification-email%26utm_medium%3Demail%26utm_source%3Dgithub.

for 5000 records added 240ms consider huge? I doubt in multiple server environment the data flow between server that consider small. I'm very interested in this test and can you repeat the test, by running postgresql and elastic search in 2 separate server, connected with gb network? or under 2 vm in aws?

Sure will try that too.

On Sat, Oct 23, 2021, 8:03 PM Ks Tan @.***> wrote:

for 5000 records added 240ms consider huge? I doubt in multiple server environment the data flow between server that consider small. I'm very interested in this test and can you repeat the test, by running postgresql and elastic search in 2 separate server, connected with gb network?

— You are receiving this because you authored the thread. Reply to this email directly, view it on GitHub https://github.com/toluaina/pgsync/issues/197#issuecomment-950165378, or unsubscribe https://github.com/notifications/unsubscribe-auth/AODNGHSJKRYV3EYEKJCVC63UILFDXANCNFSM5GN53TNQ . Triage notifications on the go with GitHub Mobile for iOS https://apps.apple.com/app/apple-store/id1477376905?ct=notification-email&mt=8&pt=524675 or Android https://play.google.com/store/apps/details?id=com.github.android&referrer=utm_campaign%3Dnotification-email%26utm_medium%3Demail%26utm_source%3Dgithub.

@toluaina, I have been reading the code and I was wondering if one could sync the db to elasticsearch like the initial sync where pgsync reads the replication slots and parse the transaction logs and load the data to elasticsearch and set the poll timeout variable for reading transaction logs without creating triggers! what would be the effect?

I'm not the developer, but I wish to give some comment here. I not that recommend change any algorithm/backend since this plugin developed with simple but stable design concept, and solve specific problem. Changing design will cause new problem at the end create never ending story.

I believe most of the develop shall insert data into full text search engine from application level, instead of let rdms auto generate it. There is plenty of reason:

- you may have GDPR requirement, which require you encrypt some column in your RDMS, then this solution no longer suitable.

- data modification of sub table, which is needed in your search may break this solution too (you need need way to regenerate all effected record at elastic search. your parent table nothing change at all)

so i suggest you to simply use what it has, and prove that beside performance everything just nice and enough in your use case. As developer I'm feel that reading log file may not robust enough cause every plugin user may have different environment configuration.

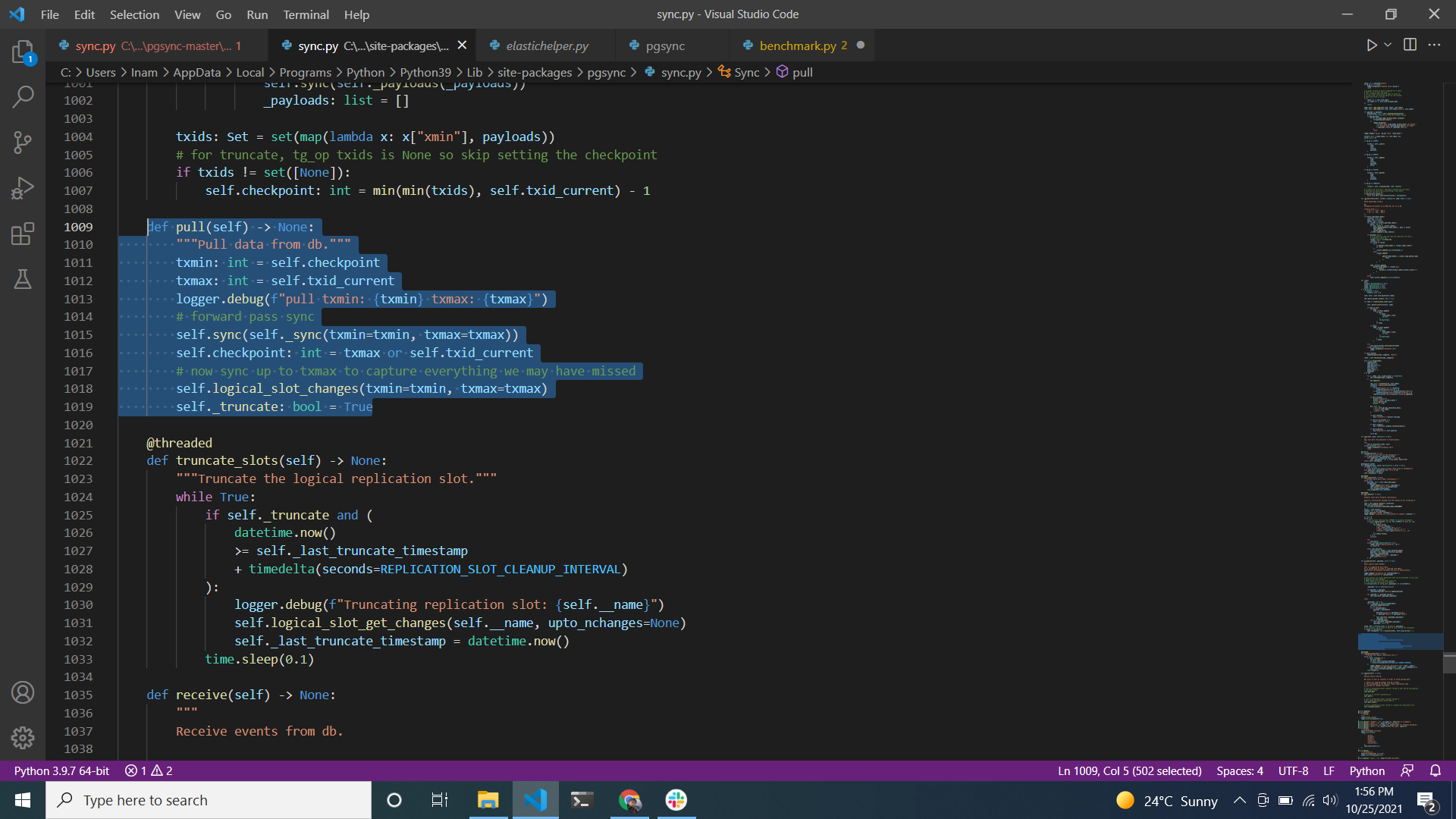

Also in the sync.py

if a transaction is commited and it will be logged, means we cant miss anything from transaction logs. then cant we remove the necessity of triggers? just simply check the transaction logs every few secs or set the poll_timeout var for it

I'm not the developer, but I wish to give some comment here. I not that recommend change any algorithm/backend since this plugin developed with simple but stable design concept, and solve specific problem. Changing design will cause new problem at the end create never ending story.

I believe most of the develop shall insert data into full text search engine from application level, instead of let rdms auto generate it. There is plenty of reason:

- you may have GDPR requirement, which require you encrypt some column in your RDMS, then this solution no longer suitable.

- data modification of sub table, which is needed in your search may break this solution too (you need need way to regenerate all effected record at elastic search. your parent table nothing change at all)

so i suggest you to simply use what it has, and prove that beside performance everything just nice and enough in your use case. As developer I'm feel that reading log file may not robust enough cause every plugin user may have different environment configuration.

Thank you for your comment bro, I like what pgsync has to offer and I do not intend to remove any functionality @toluaina has developed, because I know he will have done it for a reason, I just wants to know if I can make changes to the code to better serve my purpose!