alpaca-lora

How to load a model pre-trained on a 52k dataset and continue fine-tuning with another dataset.json?

I've been trying to load a LoRA model that I trained on a 52k dataset and continue fine-tuning it with another data.json file. I have referred to the discussions in the following issues: #52 and #44, but I'm still unable to figure out which part of the finetune.py script I need to modify to accomplish this.

I want to start fine-tuning from a checkpoint instead of from scratch because I believe that the model might be able to learn more task-specific knowledge this way. However, I'm not sure if my assumption is correct.

Could you please guide me on how to modify the finetune.py script to load a pre-trained LoRA model and continue fine-tuning from a checkpoint using a new data.json file?

Thank you in advance for your assistance!

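I'm no expert, but I think you can load the pre-trained LoRA after loading the LLaMA model, like this in finetune.py:

```python
import torch
from peft import PeftModel
from transformers import LlamaForCausalLM

device_map = "auto"  # stand-in; finetune.py defines device_map itself

model = LlamaForCausalLM.from_pretrained(
    "decapoda-research/llama-7b-hf",
    load_in_8bit=True,
    device_map=device_map,
)

# here you load the pre-trained LoRA model from the lora-alpaca folder
# (recent peft versions load the adapter frozen by default; pass is_trainable=True to keep training it)
model = PeftModel.from_pretrained(model, "./lora-alpaca", torch_dtype=torch.float16)
```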

Thank you very much for your reply!

Are the pre-trained LoRA weights in the './lora-alpaca' path the same as this file?

https://huggingface.co/tloen/alpaca-lora-7b

And should I use trainer.train(resume_from_checkpoint=xx)?

@T-Atlas I was wondering whether training an already fine-tuned model again and again on new datasets is effective or not.

Could you please come back here and tell us about the result?

- whether you managed to teach the fine-tuned model new things from the new dataset

- and, more importantly, whether the new model forgets anything from the previous training

Did you guys find a solution? I also want to fine-tune the model on a specific topic, starting from the alpaca-cleaned checkpoint and fine-tuning it on a new dataset.

not yet

Thank you for your message. Regarding your question, I believe that fine-tuning an already fine-tuned model on new datasets can be an effective way to adapt it to new tasks or domains. However, this approach depends on various factors, and catastrophic forgetting is a major challenge. As for results, to be honest, I have not yet run any specific experiments on this topic, but I will definitely share my findings once I have identified potential solutions to the current issue.

@T-Atlas Yeah, I believe that it's possible and would be effective to train an already fine-tuned model to do a new task. What I was thinking of is: "can I add new knowledge on top of the existing knowledge?" For example, adding a dataset of math/chemistry/physics examples to the current Alpaca so it follows those instructions much better, while keeping the old knowledge in place.

Yes, your idea is correct. Models that have been fine-tuned can be trained to add new knowledge while retaining old knowledge. I remember this being called incremental learning.

@T-Atlas do you have any idea of how to do this with alpaca?

Not yet. I plan to think again at work tomorrow.

Are the pre-trained LoRA weights in the './lora-alpaca' path this file? https://huggingface.co/tloen/alpaca-lora-7b

The link is the LoRA model trained by the repo owner; he made it public so we don't need to train it again. The lora-alpaca folder is the path where your fine-tuned LoRA model will be created. After training, you can just change generate.py to point the loading function to that folder:

```python
model = PeftModel.from_pretrained(model, "./lora-alpaca", torch_dtype=torch.float16)
```

And should I use trainer.train(resume_from_checkpoint=xx)?

Good question. I just got back to my PC and I'll start trying some things, as I'm lost too.

Just going to put a . in here, as I'm facing the same issue. I've talked with T-Atlas a little over e-mail and we're hitting the exact same wall.

./lora-alpaca contains only the LoRA model.

You can re-train from an existing LoRA instead of from scratch.

You'll just start with a fresh optimizer state.

If you run the initial training yourself, you'll get three checkpoint-nnn folders in the lora-alpaca dir (one per epoch). Those contain the LoRA but also the full optimizer state. With them, you can use resume_from_checkpoint to keep the full state instead of resetting the training. (You'll then need to train for more than 3 epochs, since you'll already be starting at 3.)
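A minimal sketch of that check, assuming the folder follows the Hugging Face Trainer checkpoint layout (trainer_state.json plus optimizer.pt and scheduler.pt next to the weights). It tells you whether a full resume is possible and how many epochs are already done, so the num_train_epochs you ask for can be set above that. `resume_plan` is a hypothetical helper, not part of the repo.

```python
import json
import os


def resume_plan(checkpoint_dir, requested_epochs):
    """Hypothetical helper: decide whether a Trainer checkpoint can be fully resumed.

    Assumes the Hugging Face Trainer layout, where a full checkpoint holds
    trainer_state.json plus optimizer.pt and scheduler.pt.
    """
    state_file = os.path.join(checkpoint_dir, "trainer_state.json")
    has_optimizer = all(
        os.path.exists(os.path.join(checkpoint_dir, name))
        for name in ("optimizer.pt", "scheduler.pt")
    )
    if not (os.path.exists(state_file) and has_optimizer):
        # Only LoRA weights: load them, but the optimizer starts fresh.
        return {"full_resume": False, "epochs_done": 0.0}

    with open(state_file) as f:
        epochs_done = float(json.load(f).get("epoch", 0.0))

    # The epoch counter continues from the checkpoint, so requesting the same
    # number of epochs (or fewer) would leave little or nothing to train.
    if requested_epochs <= epochs_done:
        raise ValueError(
            f"checkpoint already covers {epochs_done} epochs; "
            f"request more than that (got {requested_epochs})"
        )
    return {"full_resume": True, "epochs_done": epochs_done}
```

If `full_resume` is True, you would then call `trainer.train(resume_from_checkpoint=checkpoint_dir)` with `num_train_epochs` set to the larger value.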

Hi @T-Atlas, this may be a silly answer, but did you try using this?

```python
trainer.train(resume_from_checkpoint=checkpoint_local_path)
```

where the checkpoint_local_path variable is the relative path of a local folder containing the pre-trained weights.

It works perfectly on my side for flan-t5 (seq2seq) and gpt-j (causal) model training, so it should work out of the box even with PEFT.

EDIT: The previous statement doesn't work because the saved .bin model is not a full checkpoint but just the updated LoRA weights (as far as I understand). You need to use the export_hf_checkpoint.py file to merge your fine-tuned weights into a new model that could be used for inference or for later fine-tuning tasks.
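What merging does can be illustrated with the LoRA update rule: each adapted weight matrix W gets a low-rank correction, and merging folds it in as W' = W + (alpha / r) · B·A, so the merged model produces the same outputs without the adapter. The toy pure-Python sketch below uses made-up 2×2 numbers and is not the code of export_hf_checkpoint.py; for a real model, recent peft versions expose the equivalent operation as `merge_and_unload()`.

```python
def matmul(x, y):
    """Plain-Python matrix product of two lists of lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def matvec(m, v):
    """Plain-Python matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def merge_lora(w, a, b, alpha, r):
    """Return W + (alpha / r) * B @ A, i.e. the LoRA delta folded into W."""
    scaling = alpha / r
    delta = matmul(b, a)  # (d_out x r) @ (r x d_in) -> d_out x d_in
    return [[wij + scaling * dij for wij, dij in zip(wr, dr)] for wr, dr in zip(w, delta)]


# Toy shapes: d_out = d_in = 2, rank r = 1 (illustrative values only).
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[0.5, -0.5]]   # r x d_in
B = [[2.0], [1.0]]  # d_out x r
alpha, r = 2, 1
x = [3.0, 1.0]

# Adapter applied on the fly: W x + scaling * B (A x)
unmerged = [wx + (alpha / r) * bax for wx, bax in zip(matvec(W, x), matvec(B, matvec(A, x)))]
# Same input through the merged weight matrix
merged = matvec(merge_lora(W, A, B, alpha, r), x)
print(unmerged, merged)  # [7.0, 3.0] [7.0, 3.0]
```

Because the outputs match, the merged checkpoint can serve as a new base model for a fresh LoRA run.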

@ChainYo Thank you very much for your detailed reply! So what you mean is that I don't need this code:

```python
from peft import PeftModel

model = PeftModel.from_pretrained(model, <PATH>)
```

Instead, I just need to export the HF checkpoint according to your settings, like this:

```python
trainer.train(resume_from_checkpoint="./hf_ckpt")
```

I will try!

@T-Atlas If ./hf_ckpt contains checkpoints of past training, yes.

But it seems easier to take the LoRA-trained parameters, merge them with the base model (for example decapoda-research/llama-7b-hf), and then start a new training run on another dataset using the newly created model (the output of the parameter merge).

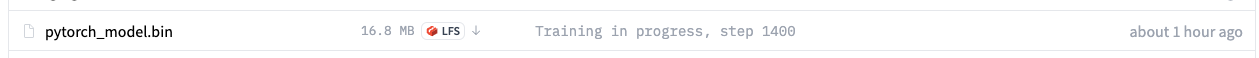

Because from what I see on my side, the save_step and push_to_hub are not saving the full checkpoints but only the adapter model (based on the file size):

I tried trainer.train(resume_from_checkpoint="./lora-alpaca/checkpoint-1400"), where ./lora-alpaca/checkpoint-1400 contains a checkpoint from past training.

But I see a warning in my console when I run finetune.py:

There were missing keys in the checkpoint model loaded: ['base_model.model.model.embed_tokens.weight', 'base_model.model.model.layers.0.self_attn.q_proj.weight', 'base_model.model.model.layers.0.self_attn.k_proj.weight', 'base_model.model.model.layers.0.self_attn.v_proj.weight', 'base_model.model.model.layers.0.self_attn.o_proj.weight', 'base_model.model.model.layers.0.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.0.mlp.gate_proj.weight', 'base_model.model.model.layers.0.mlp.down_proj.weight', 'base_model.model.model.layers.0.mlp.up_proj.weight', 'base_model.model.model.layers.0.input_layernorm.weight', 'base_model.model.model.layers.0.post_attention_layernorm.weight', 'base_model.model.model.layers.1.self_attn.q_proj.weight', 'base_model.model.model.layers.1.self_attn.k_proj.weight', 'base_model.model.model.layers.1.self_attn.v_proj.weight', 'base_model.model.model.layers.1.self_attn.o_proj.weight', 'base_model.model.model.layers.1.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.1.mlp.gate_proj.weight', 'base_model.model.model.layers.1.mlp.down_proj.weight', 'base_model.model.model.layers.1.mlp.up_proj.weight', 'base_model.model.model.layers.1.input_layernorm.weight', 'base_model.model.model.layers.1.post_attention_layernorm.weight', 'base_model.model.model.layers.2.self_attn.q_proj.weight', 'base_model.model.model.layers.2.self_attn.k_proj.weight', 'base_model.model.model.layers.2.self_attn.v_proj.weight', 'base_model.model.model.layers.2.self_attn.o_proj.weight', 'base_model.model.model.layers.2.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.2.mlp.gate_proj.weight', 'base_model.model.model.layers.2.mlp.down_proj.weight', 'base_model.model.model.layers.2.mlp.up_proj.weight', 'base_model.model.model.layers.2.input_layernorm.weight', 'base_model.model.model.layers.2.post_attention_layernorm.weight', 'base_model.model.model.layers.3.self_attn.q_proj.weight', 'base_model.model.model.layers.3.self_attn.k_proj.weight', 
'base_model.model.model.layers.3.self_attn.v_proj.weight', 'base_model.model.model.layers.3.self_attn.o_proj.weight', 'base_model.model.model.layers.3.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.3.mlp.gate_proj.weight', 'base_model.model.model.layers.3.mlp.down_proj.weight', 'base_model.model.model.layers.3.mlp.up_proj.weight', 'base_model.model.model.layers.3.input_layernorm.weight', 'base_model.model.model.layers.3.post_attention_layernorm.weight', 'base_model.model.model.layers.4.self_attn.q_proj.weight', 'base_model.model.model.layers.4.self_attn.k_proj.weight', 'base_model.model.model.layers.4.self_attn.v_proj.weight', 'base_model.model.model.layers.4.self_attn.o_proj.weight', 'base_model.model.model.layers.4.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.4.mlp.gate_proj.weight', 'base_model.model.model.layers.4.mlp.down_proj.weight', 'base_model.model.model.layers.4.mlp.up_proj.weight', 'base_model.model.model.layers.4.input_layernorm.weight', 'base_model.model.model.layers.4.post_attention_layernorm.weight', 'base_model.model.model.layers.5.self_attn.q_proj.weight', 'base_model.model.model.layers.5.self_attn.k_proj.weight', 'base_model.model.model.layers.5.self_attn.v_proj.weight', 'base_model.model.model.layers.5.self_attn.o_proj.weight', 'base_model.model.model.layers.5.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.5.mlp.gate_proj.weight', 'base_model.model.model.layers.5.mlp.down_proj.weight', 'base_model.model.model.layers.5.mlp.up_proj.weight', 'base_model.model.model.layers.5.input_layernorm.weight', 'base_model.model.model.layers.5.post_attention_layernorm.weight', 'base_model.model.model.layers.6.self_attn.q_proj.weight', 'base_model.model.model.layers.6.self_attn.k_proj.weight', 'base_model.model.model.layers.6.self_attn.v_proj.weight', 'base_model.model.model.layers.6.self_attn.o_proj.weight', 'base_model.model.model.layers.6.self_attn.rotary_emb.inv_freq', 
'base_model.model.model.layers.6.mlp.gate_proj.weight', 'base_model.model.model.layers.6.mlp.down_proj.weight', 'base_model.model.model.layers.6.mlp.up_proj.weight', 'base_model.model.model.layers.6.input_layernorm.weight', 'base_model.model.model.layers.6.post_attention_layernorm.weight', 'base_model.model.model.layers.7.self_attn.q_proj.weight', 'base_model.model.model.layers.7.self_attn.k_proj.weight', 'base_model.model.model.layers.7.self_attn.v_proj.weight', 'base_model.model.model.layers.7.self_attn.o_proj.weight', 'base_model.model.model.layers.7.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.7.mlp.gate_proj.weight', 'base_model.model.model.layers.7.mlp.down_proj.weight', 'base_model.model.model.layers.7.mlp.up_proj.weight', 'base_model.model.model.layers.7.input_layernorm.weight', 'base_model.model.model.layers.7.post_attention_layernorm.weight', 'base_model.model.model.layers.8.self_attn.q_proj.weight', 'base_model.model.model.layers.8.self_attn.k_proj.weight', 'base_model.model.model.layers.8.self_attn.v_proj.weight', 'base_model.model.model.layers.8.self_attn.o_proj.weight', 'base_model.model.model.layers.8.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.8.mlp.gate_proj.weight', 'base_model.model.model.layers.8.mlp.down_proj.weight', 'base_model.model.model.layers.8.mlp.up_proj.weight', 'base_model.model.model.layers.8.input_layernorm.weight', 'base_model.model.model.layers.8.post_attention_layernorm.weight', 'base_model.model.model.layers.9.self_attn.q_proj.weight', 'base_model.model.model.layers.9.self_attn.k_proj.weight', 'base_model.model.model.layers.9.self_attn.v_proj.weight', 'base_model.model.model.layers.9.self_attn.o_proj.weight', 'base_model.model.model.layers.9.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.9.mlp.gate_proj.weight', 'base_model.model.model.layers.9.mlp.down_proj.weight', 'base_model.model.model.layers.9.mlp.up_proj.weight', 'base_model.model.model.layers.9.input_layernorm.weight', 
'base_model.model.model.layers.9.post_attention_layernorm.weight', 'base_model.model.model.layers.10.self_attn.q_proj.weight', 'base_model.model.model.layers.10.self_attn.k_proj.weight', 'base_model.model.model.layers.10.self_attn.v_proj.weight', 'base_model.model.model.layers.10.self_attn.o_proj.weight', 'base_model.model.model.layers.10.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.10.mlp.gate_proj.weight', 'base_model.model.model.layers.10.mlp.down_proj.weight', 'base_model.model.model.layers.10.mlp.up_proj.weight', 'base_model.model.model.layers.10.input_layernorm.weight', 'base_model.model.model.layers.10.post_attention_layernorm.weight', 'base_model.model.model.layers.11.self_attn.q_proj.weight', 'base_model.model.model.layers.11.self_attn.k_proj.weight', 'base_model.model.model.layers.11.self_attn.v_proj.weight', 'base_model.model.model.layers.11.self_attn.o_proj.weight', 'base_model.model.model.layers.11.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.11.mlp.gate_proj.weight', 'base_model.model.model.layers.11.mlp.down_proj.weight', 'base_model.model.model.layers.11.mlp.up_proj.weight', 'base_model.model.model.layers.11.input_layernorm.weight', 'base_model.model.model.layers.11.post_attention_layernorm.weight', 'base_model.model.model.layers.12.self_attn.q_proj.weight', 'base_model.model.model.layers.12.self_attn.k_proj.weight', 'base_model.model.model.layers.12.self_attn.v_proj.weight', 'base_model.model.model.layers.12.self_attn.o_proj.weight', 'base_model.model.model.layers.12.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.12.mlp.gate_proj.weight', 'base_model.model.model.layers.12.mlp.down_proj.weight', 'base_model.model.model.layers.12.mlp.up_proj.weight', 'base_model.model.model.layers.12.input_layernorm.weight', 'base_model.model.model.layers.12.post_attention_layernorm.weight', 'base_model.model.model.layers.13.self_attn.q_proj.weight', 'base_model.model.model.layers.13.self_attn.k_proj.weight', 
'base_model.model.model.layers.13.self_attn.v_proj.weight', 'base_model.model.model.layers.13.self_attn.o_proj.weight', 'base_model.model.model.layers.13.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.13.mlp.gate_proj.weight', 'base_model.model.model.layers.13.mlp.down_proj.weight', 'base_model.model.model.layers.13.mlp.up_proj.weight', 'base_model.model.model.layers.13.input_layernorm.weight', 'base_model.model.model.layers.13.post_attention_layernorm.weight', 'base_model.model.model.layers.14.self_attn.q_proj.weight', 'base_model.model.model.layers.14.self_attn.k_proj.weight', 'base_model.model.model.layers.14.self_attn.v_proj.weight', 'base_model.model.model.layers.14.self_attn.o_proj.weight', 'base_model.model.model.layers.14.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.14.mlp.gate_proj.weight', 'base_model.model.model.layers.14.mlp.down_proj.weight', 'base_model.model.model.layers.14.mlp.up_proj.weight', 'base_model.model.model.layers.14.input_layernorm.weight', 'base_model.model.model.layers.14.post_attention_layernorm.weight', 'base_model.model.model.layers.15.self_attn.q_proj.weight', 'base_model.model.model.layers.15.self_attn.k_proj.weight', 'base_model.model.model.layers.15.self_attn.v_proj.weight', 'base_model.model.model.layers.15.self_attn.o_proj.weight', 'base_model.model.model.layers.15.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.15.mlp.gate_proj.weight', 'base_model.model.model.layers.15.mlp.down_proj.weight', 'base_model.model.model.layers.15.mlp.up_proj.weight', 'base_model.model.model.layers.15.input_layernorm.weight', 'base_model.model.model.layers.15.post_attention_layernorm.weight', 'base_model.model.model.layers.16.self_attn.q_proj.weight', 'base_model.model.model.layers.16.self_attn.k_proj.weight', 'base_model.model.model.layers.16.self_attn.v_proj.weight', 'base_model.model.model.layers.16.self_attn.o_proj.weight', 'base_model.model.model.layers.16.self_attn.rotary_emb.inv_freq', 
'base_model.model.model.layers.16.mlp.gate_proj.weight', 'base_model.model.model.layers.16.mlp.down_proj.weight', 'base_model.model.model.layers.16.mlp.up_proj.weight', 'base_model.model.model.layers.16.input_layernorm.weight', 'base_model.model.model.layers.16.post_attention_layernorm.weight', 'base_model.model.model.layers.17.self_attn.q_proj.weight', 'base_model.model.model.layers.17.self_attn.k_proj.weight', 'base_model.model.model.layers.17.self_attn.v_proj.weight', 'base_model.model.model.layers.17.self_attn.o_proj.weight', 'base_model.model.model.layers.17.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.17.mlp.gate_proj.weight', 'base_model.model.model.layers.17.mlp.down_proj.weight', 'base_model.model.model.layers.17.mlp.up_proj.weight', 'base_model.model.model.layers.17.input_layernorm.weight', 'base_model.model.model.layers.17.post_attention_layernorm.weight', 'base_model.model.model.layers.18.self_attn.q_proj.weight', 'base_model.model.model.layers.18.self_attn.k_proj.weight', 'base_model.model.model.layers.18.self_attn.v_proj.weight', 'base_model.model.model.layers.18.self_attn.o_proj.weight', 'base_model.model.model.layers.18.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.18.mlp.gate_proj.weight', 'base_model.model.model.layers.18.mlp.down_proj.weight', 'base_model.model.model.layers.18.mlp.up_proj.weight', 'base_model.model.model.layers.18.input_layernorm.weight', 'base_model.model.model.layers.18.post_attention_layernorm.weight', 'base_model.model.model.layers.19.self_attn.q_proj.weight', 'base_model.model.model.layers.19.self_attn.k_proj.weight', 'base_model.model.model.layers.19.self_attn.v_proj.weight', 'base_model.model.model.layers.19.self_attn.o_proj.weight', 'base_model.model.model.layers.19.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.19.mlp.gate_proj.weight', 'base_model.model.model.layers.19.mlp.down_proj.weight', 'base_model.model.model.layers.19.mlp.up_proj.weight', 
'base_model.model.model.layers.19.input_layernorm.weight', 'base_model.model.model.layers.19.post_attention_layernorm.weight', 'base_model.model.model.layers.20.self_attn.q_proj.weight', 'base_model.model.model.layers.20.self_attn.k_proj.weight', 'base_model.model.model.layers.20.self_attn.v_proj.weight', 'base_model.model.model.layers.20.self_attn.o_proj.weight', 'base_model.model.model.layers.20.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.20.mlp.gate_proj.weight', 'base_model.model.model.layers.20.mlp.down_proj.weight', 'base_model.model.model.layers.20.mlp.up_proj.weight', 'base_model.model.model.layers.20.input_layernorm.weight', 'base_model.model.model.layers.20.post_attention_layernorm.weight', 'base_model.model.model.layers.21.self_attn.q_proj.weight', 'base_model.model.model.layers.21.self_attn.k_proj.weight', 'base_model.model.model.layers.21.self_attn.v_proj.weight', 'base_model.model.model.layers.21.self_attn.o_proj.weight', 'base_model.model.model.layers.21.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.21.mlp.gate_proj.weight', 'base_model.model.model.layers.21.mlp.down_proj.weight', 'base_model.model.model.layers.21.mlp.up_proj.weight', 'base_model.model.model.layers.21.input_layernorm.weight', 'base_model.model.model.layers.21.post_attention_layernorm.weight', 'base_model.model.model.layers.22.self_attn.q_proj.weight', 'base_model.model.model.layers.22.self_attn.k_proj.weight', 'base_model.model.model.layers.22.self_attn.v_proj.weight', 'base_model.model.model.layers.22.self_attn.o_proj.weight', 'base_model.model.model.layers.22.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.22.mlp.gate_proj.weight', 'base_model.model.model.layers.22.mlp.down_proj.weight', 'base_model.model.model.layers.22.mlp.up_proj.weight', 'base_model.model.model.layers.22.input_layernorm.weight', 'base_model.model.model.layers.22.post_attention_layernorm.weight', 'base_model.model.model.layers.23.self_attn.q_proj.weight', 
'base_model.model.model.layers.23.self_attn.k_proj.weight', 'base_model.model.model.layers.23.self_attn.v_proj.weight', 'base_model.model.model.layers.23.self_attn.o_proj.weight', 'base_model.model.model.layers.23.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.23.mlp.gate_proj.weight', 'base_model.model.model.layers.23.mlp.down_proj.weight', 'base_model.model.model.layers.23.mlp.up_proj.weight', 'base_model.model.model.layers.23.input_layernorm.weight', 'base_model.model.model.layers.23.post_attention_layernorm.weight', 'base_model.model.model.layers.24.self_attn.q_proj.weight', 'base_model.model.model.layers.24.self_attn.k_proj.weight', 'base_model.model.model.layers.24.self_attn.v_proj.weight', 'base_model.model.model.layers.24.self_attn.o_proj.weight', 'base_model.model.model.layers.24.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.24.mlp.gate_proj.weight', 'base_model.model.model.layers.24.mlp.down_proj.weight', 'base_model.model.model.layers.24.mlp.up_proj.weight', 'base_model.model.model.layers.24.input_layernorm.weight', 'base_model.model.model.layers.24.post_attention_layernorm.weight', 'base_model.model.model.layers.25.self_attn.q_proj.weight', 'base_model.model.model.layers.25.self_attn.k_proj.weight', 'base_model.model.model.layers.25.self_attn.v_proj.weight', 'base_model.model.model.layers.25.self_attn.o_proj.weight', 'base_model.model.model.layers.25.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.25.mlp.gate_proj.weight', 'base_model.model.model.layers.25.mlp.down_proj.weight', 'base_model.model.model.layers.25.mlp.up_proj.weight', 'base_model.model.model.layers.25.input_layernorm.weight', 'base_model.model.model.layers.25.post_attention_layernorm.weight', 'base_model.model.model.layers.26.self_attn.q_proj.weight', 'base_model.model.model.layers.26.self_attn.k_proj.weight', 'base_model.model.model.layers.26.self_attn.v_proj.weight', 'base_model.model.model.layers.26.self_attn.o_proj.weight', 
'base_model.model.model.layers.26.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.26.mlp.gate_proj.weight', 'base_model.model.model.layers.26.mlp.down_proj.weight', 'base_model.model.model.layers.26.mlp.up_proj.weight', 'base_model.model.model.layers.26.input_layernorm.weight', 'base_model.model.model.layers.26.post_attention_layernorm.weight', 'base_model.model.model.layers.27.self_attn.q_proj.weight', 'base_model.model.model.layers.27.self_attn.k_proj.weight', 'base_model.model.model.layers.27.self_attn.v_proj.weight', 'base_model.model.model.layers.27.self_attn.o_proj.weight', 'base_model.model.model.layers.27.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.27.mlp.gate_proj.weight', 'base_model.model.model.layers.27.mlp.down_proj.weight', 'base_model.model.model.layers.27.mlp.up_proj.weight', 'base_model.model.model.layers.27.input_layernorm.weight', 'base_model.model.model.layers.27.post_attention_layernorm.weight', 'base_model.model.model.layers.28.self_attn.q_proj.weight', 'base_model.model.model.layers.28.self_attn.k_proj.weight', 'base_model.model.model.layers.28.self_attn.v_proj.weight', 'base_model.model.model.layers.28.self_attn.o_proj.weight', 'base_model.model.model.layers.28.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.28.mlp.gate_proj.weight', 'base_model.model.model.layers.28.mlp.down_proj.weight', 'base_model.model.model.layers.28.mlp.up_proj.weight', 'base_model.model.model.layers.28.input_layernorm.weight', 'base_model.model.model.layers.28.post_attention_layernorm.weight', 'base_model.model.model.layers.29.self_attn.q_proj.weight', 'base_model.model.model.layers.29.self_attn.k_proj.weight', 'base_model.model.model.layers.29.self_attn.v_proj.weight', 'base_model.model.model.layers.29.self_attn.o_proj.weight', 'base_model.model.model.layers.29.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.29.mlp.gate_proj.weight', 'base_model.model.model.layers.29.mlp.down_proj.weight', 
'base_model.model.model.layers.29.mlp.up_proj.weight', 'base_model.model.model.layers.29.input_layernorm.weight', 'base_model.model.model.layers.29.post_attention_layernorm.weight', 'base_model.model.model.layers.30.self_attn.q_proj.weight', 'base_model.model.model.layers.30.self_attn.k_proj.weight', 'base_model.model.model.layers.30.self_attn.v_proj.weight', 'base_model.model.model.layers.30.self_attn.o_proj.weight', 'base_model.model.model.layers.30.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.30.mlp.gate_proj.weight', 'base_model.model.model.layers.30.mlp.down_proj.weight', 'base_model.model.model.layers.30.mlp.up_proj.weight', 'base_model.model.model.layers.30.input_layernorm.weight', 'base_model.model.model.layers.30.post_attention_layernorm.weight', 'base_model.model.model.layers.31.self_attn.q_proj.weight', 'base_model.model.model.layers.31.self_attn.k_proj.weight', 'base_model.model.model.layers.31.self_attn.v_proj.weight', 'base_model.model.model.layers.31.self_attn.o_proj.weight', 'base_model.model.model.layers.31.self_attn.rotary_emb.inv_freq', 'base_model.model.model.layers.31.mlp.gate_proj.weight', 'base_model.model.model.layers.31.mlp.down_proj.weight', 'base_model.model.model.layers.31.mlp.up_proj.weight', 'base_model.model.model.layers.31.input_layernorm.weight', 'base_model.model.model.layers.31.post_attention_layernorm.weight', 'base_model.model.model.norm.weight', 'base_model.model.lm_head.0.weight'].

Should I take any further step to solve it?

EDIT: I found this line, so it seems the warning can be disregarded: print("\n If there's a warning about missing keys above, please disregard :)")
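The warning lists only frozen base-model weights because the checkpoint stores just the LoRA adapter. A quick sanity check, sketched under the assumption that PEFT names adapter parameters with `lora_A` / `lora_B` (as in the keys it saves), is to confirm that none of the missing keys belongs to the adapter. `missing_adapter_keys` is a hypothetical helper.

```python
def missing_adapter_keys(missing_keys):
    """Return the missing keys that look like LoRA adapter weights.

    Assumes PEFT-style names such as '...q_proj.lora_A.weight'. An empty
    result means only base-model weights were missing, which is expected
    when the checkpoint holds just the adapter.
    """
    return [k for k in missing_keys if ".lora_A." in k or ".lora_B." in k]


# A few keys copied from the warning above.
warning_keys = [
    "base_model.model.model.embed_tokens.weight",
    "base_model.model.model.layers.0.self_attn.q_proj.weight",
    "base_model.model.model.layers.0.self_attn.rotary_emb.inv_freq",
    "base_model.model.lm_head.0.weight",
]
print(missing_adapter_keys(warning_keys))  # []
```

If this ever returns LoRA keys, the adapter itself failed to load and the warning should not be ignored.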

I'm doing the same thing and the approach suggested by @chainyo in https://github.com/tloen/alpaca-lora/issues/92#issuecomment-1479065352 worked for me.

Here are the exact steps I'm using:

- Merge the LLaMA weights with the LoRA weights by running the "export_hf_checkpoint.py" script. This will combine "decapoda-research/llama-7b-hf" and "tloen/alpaca-lora-7b". The combined model will be put in "./hf_ckpt". You can verify this is the fine-tuned model by testing it with https://github.com/oobabooga/text-generation-webui

- Update "finetune.py": change the model path from "decapoda-research/llama-7b-hf" to "./hf_ckpt" so it will fine-tune on top of the fine-tuned model; change "DATA_PATH" to my own dataset file.

- Run "finetune.py". This will create a fine-tuned LoRA on top of the already fine-tuned model from "./hf_ckpt" and output new LoRA weights to "./lora-alpaca".

- Test the fine-tuned LoRA using the "generate.py" script. You need to change BASE_MODEL to "./hf_ckpt" and LORA_WEIGHTS to "./lora-alpaca".
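Before pointing DATA_PATH at your own file, it can help to check that it uses the same layout as the Stanford Alpaca data: a JSON list of records with `instruction`, `input` (possibly empty), and `output` string fields. A minimal stdlib sketch; `check_alpaca_json` is a hypothetical helper, not part of the repo.

```python
import json

REQUIRED_FIELDS = ("instruction", "input", "output")


def check_alpaca_json(path):
    """Return a list of problems found in an Alpaca-style JSON dataset."""
    with open(path, encoding="utf-8") as f:
        records = json.load(f)
    if not isinstance(records, list):
        return ["top level must be a JSON list of records"]
    problems = []
    for i, rec in enumerate(records):
        if not isinstance(rec, dict):
            problems.append(f"record {i}: not an object")
            continue
        for field in REQUIRED_FIELDS:
            # "input" may be an empty string, but it must be present.
            if not isinstance(rec.get(field), str):
                problems.append(f"record {i}: missing or non-string '{field}'")
    return problems
```

An empty list means the file matches the expected shape; anything else points to the records that need fixing.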

Although the process works, the result is far from ideal for my use case. It seems quite hard for the model to remember specific things like "This API does XYZ" by including just that in the dataset.

I'm happy to let you know that I was able to resolve the issue by following your approach.

Merging is suboptimal in many ways.

I was able to continue a previous training run successfully without merging the weights, just continuing from the previous LoRA. This works either with just a previous LoRA (weights only, no trainer state) or with saved checkpoints that also include the optimizer state (in which case it really continues/extends the training). Quite useful when you asked for too few epochs and want to push further.

I can share my current tweaks to the code if this is of help.

Could you please share your code for continuing training from an existing LoRA?

My running version is more heavily customized, but here are the minimal needed changes: https://github.com/tloen/alpaca-lora/pull/154
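The idea can be sketched as a choice between the two kinds of folders seen in this thread: a Trainer checkpoint-nnn folder (weights saved as pytorch_model.bin next to the optimizer state) and a bare LoRA folder such as the one downloaded from Hugging Face (adapter_model.bin only). This stdlib-only sketch is an assumption about that logic, not the PR's actual code; `pick_lora_weights` is a hypothetical helper.

```python
import os


def pick_lora_weights(checkpoint_dir):
    """Hypothetical helper: choose the weights file to load and whether the
    Trainer may also restore its optimizer/scheduler state.

    Returns (weights_path or None, resume_trainer_state).
    """
    full = os.path.join(checkpoint_dir, "pytorch_model.bin")
    if os.path.exists(full):
        # Trainer checkpoint-nnn folder: training can continue where it stopped.
        return full, True
    adapter = os.path.join(checkpoint_dir, "adapter_model.bin")
    if os.path.exists(adapter):
        # Bare LoRA: load the weights only; fresh optimizer, warmup included.
        return adapter, False
    return None, False
```

Only pass resume_from_checkpoint to trainer.train() when the second value is True; otherwise load the weights into the PeftModel (for example with torch.load and peft's set_peft_model_state_dict) and call trainer.train() without it.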

Thank you! After loading the checkpoint/LoRA, is your loss somewhere near where it was when training stopped last time?

The last time I tried to continue training (not with your code), it showed a much bigger loss, which then went down VERY slowly. It looks like it's starting from the beginning, or I just don't get it. For example, the last checkpoint loss was ~0.7917; after restoring training it became 8.7 (about 11 times bigger), and after 60 steps it still showed 5.72.

When using a full checkpoint (inc. optimizer state) the loss is continuous and continues to drop.

If you use a different dataset than Stanford's, beware that the number of steps changes, and check the warmup setting.

I save checkpoints by epoch instead of by steps myself, and tuned the warmup steps to match one epoch: the lr rises for one epoch to its target value, then goes down. When resuming from a full checkpoint, there is no more warmup and the lr continues to go down. (This assumes you kept the same dataset; otherwise you should not do a full resume with the optimizer state.)

If you change the dataset in between, for example to further tune an Alpaca LoRA on your own dataset, then load only the LoRA weights and no trainer state (it then starts a new training run, with warmup and a clean optimizer state).
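The warmup-then-decay behaviour described above can be sketched as follows, assuming the linear warmup / linear decay schedule that the Hugging Face Trainer uses by default (the same formula as transformers' get_linear_schedule_with_warmup) and a warmup of one epoch's worth of steps. The helper names and numbers are illustrative only.

```python
def steps_per_epoch(n_examples, batch_size):
    """Approximate optimizer steps per epoch: one step per `batch_size` examples
    (batch_size = micro-batch size x gradient accumulation steps)."""
    return max(1, n_examples // batch_size)


def linear_warmup_decay_lr(step, base_lr, warmup_steps, total_steps):
    """Linear ramp from 0 to base_lr over warmup_steps, then linear decay to 0."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Illustrative numbers: 1,024 examples, batch_size 128, 3 epochs, warmup = 1 epoch.
spe = steps_per_epoch(1024, 128)  # 8 steps per epoch
total = 3 * spe                   # 24 steps in total
lrs = [linear_warmup_decay_lr(s, 3e-4, spe, total) for s in range(total + 1)]

# The peak is reached at the end of epoch 1. A full resume after epoch 2
# (step 16) continues the decay from lrs[16] instead of warming up again.
```

A changed dataset changes `steps_per_epoch`, which is why the warmup setting needs revisiting in that case.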

I set CONTINUE_FROM_CHECKPOINT = "./alpaca-lora-7b", which I downloaded from Hugging Face, and got:

```
Restarting from ./alpaca-lora-7b/adapter_model.bin
Traceback (most recent call last):
  File "/DATA/lianjh/alpaca-lora/finetune.py", line 70, in <module>
    adapters_weights = torch.load(checkpoint_name)
  File "/home/lianjh/miniconda3/envs/alpaca/lib/python3.9/site-packages/torch/serialization.py", line 815, in load
    return _legacy_load(opened_file, map_location, pickle_module, **pickle_load_args)
  File "/home/lianjh/miniconda3/envs/alpaca/lib/python3.9/site-packages/torch/serialization.py", line 1033, in _legacy_load
    magic_number = pickle_module.load(f, **pickle_load_args)
_pickle.UnpicklingError: invalid load key, 'v'.
```

What should I do? #154

I tried the same to make sure: I got the weights from HF and continued training from them, with no issue.

```
Restarting from ./lora-alpaca/alpaca-lora-7b/adapter_model.bin
trainable params: 4194304 || all params: 6742609920 || trainable%: 0.06220594176090199
[...]
```

Your error seems to be a pickle error when loading the .bin, even before anything else happens. So I'd suspect a corrupted download, or maybe a version mismatch (torch, Python?). Does that very same file work for inference in the same env?

Double-check that you have the full LoRA file and not just a Git LFS pointer. Download it manually and check the size: it should be 16.8 MB.
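Why the error says invalid load key, 'v': a Git LFS pointer is a small text file that begins with `version https://git-lfs.github.com/spec/v1`, so torch.load (via pickle) fails on its first byte. The expected size also follows from the log above, assuming finetune.py's defaults of r = 8 on q_proj and v_proj across the 32 layers of LLaMA-7B (hidden size 4096) and fp32 weights: 32 × 2 × 8 × (4096 + 4096) = 4,194,304 parameters, × 4 bytes = 16,777,216 bytes ≈ 16.8 MB. A stdlib sketch of both checks; the helper names and the 5% size tolerance are assumptions.

```python
import os

LFS_HEADER = b"version https://git-lfs.github.com/spec/v1"


def expected_lora_bytes(n_layers=32, n_modules=2, r=8, d_in=4096, d_out=4096, bytes_per_param=4):
    """LoRA adds A (r x d_in) and B (d_out x r) to each adapted module."""
    params = n_layers * n_modules * r * (d_in + d_out)
    return params, params * bytes_per_param


def check_adapter_file(path, expected_bytes, tolerance=0.05):
    """Hypothetical helper: short diagnosis for a downloaded adapter_model.bin."""
    with open(path, "rb") as f:
        head = f.read(len(LFS_HEADER))
    if head == LFS_HEADER:
        return "git-lfs pointer, not the weights: download the real file"
    size = os.path.getsize(path)
    # Allow some slack for serialization overhead around the raw tensors.
    if abs(size - expected_bytes) > tolerance * expected_bytes:
        return f"unexpected size {size} bytes (expected about {expected_bytes})"
    return "ok"


params, nbytes = expected_lora_bytes()
print(params, nbytes)  # 4194304 16777216
```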

Thank you very much. I checked my files again and found that they were not downloaded correctly. My problem has been solved. Thanks for your contribution and patience.