OpenPrompt

An Open-Source Framework for Prompt-Learning.

According to your source code (https://github.com/thunlp/OpenPrompt/blob/0d7774c9bd537c96512a22ada1b3c9bf466df8f2/openprompt/prompts/prefix_tuning_template.py#L188), it seems that you only add key-value pairs for self-attention in the encoder and decoder but ignore the cross-attention in the decoder. If my understanding is...
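For readers unfamiliar with the mechanism this question refers to, here is a minimal pure-Python sketch of how prefix key-value pairs enter a single attention computation. It is not OpenPrompt's code: `attend` and `attend_with_prefix` are hypothetical names, and the sketch handles one query vector and one head only. Whether a given layer type (self-attention or cross-attention) receives a prefix is a separate choice that this sketch does not settle.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


def attend_with_prefix(query, keys, values, prefix_keys, prefix_values):
    """Prepend (hypothetical) learned prefix keys/values to the layer's own."""
    return attend(query, prefix_keys + keys, prefix_values + values)
```

With a prefix of length one, the query attends over one extra position, so the attention weights have one more entry while the output dimension is unchanged.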

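Hello everyone! I have finished working through 0_basic.py in the tutorial, but I don't know how to automatically search for and generate templates. Does the framework provide a function for this?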

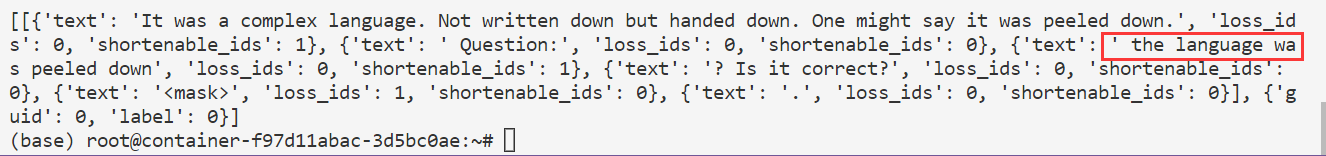

When I run the 0_basic.py code and print wrap_one_example, I get the following result. Please notice the empty entry in the red rectangle. Intuitively, I guess...

Using SoftTemplate, is the following code the hard (textual) template? `text='Text: {"placeholder":"text_a"} Summarization: {"mask"}'` Or is the text only used to initialize the soft template?
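To make the "hard (textual) template" reading concrete, the sketch below fills the template string from the question as plain text. It is an illustration only: `fill_template` and the `<mask>` token are hypothetical and are not OpenPrompt's parser, and the sketch says nothing about how SoftTemplate actually treats the text.

```python
import re

TEMPLATE = 'Text: {"placeholder":"text_a"} Summarization: {"mask"}'


def fill_template(template: str, example: dict, mask_token: str = "<mask>") -> str:
    """Hypothetical helper: replace each {...} slot with plain text."""

    def substitute(match: re.Match) -> str:
        body = match.group(1)
        if body == '"mask"':
            # The mask slot becomes the model's mask token (assumed here).
            return mask_token
        key_match = re.fullmatch(r'"placeholder"\s*:\s*"(\w+)"', body)
        if key_match is None:
            raise ValueError(f"unsupported slot: {{{body}}}")
        # A placeholder slot is replaced by the named field of the example.
        return example[key_match.group(1)]

    return re.sub(r"\{([^{}]*)\}", substitute, template)
```

For example, `fill_template(TEMPLATE, {"text_a": "A cat sat."})` yields the input text followed by the `Summarization:` cue and the mask token.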

Hello, thanks for the great program; it saves me a lot of time and energy. But I have a question about state_dict when using prefix_tuning_template in T5. I find that...

This tutorial uses the webnlg dataset, and the preprocessor is for webnlg. However, looking at the preprocessor code (conditional_generation_dataset.py), the e2e and bart preprocessors are also implemented. Could you provide these...

Could we get the encoder embedding of a sentence after prompt learning? Thank you very much!

When doing prompt-learning without any verbalizer, I used `forward_without_verbalize` and encountered an error:

```
Traceback (most recent call last):
  File "~/OpenPrompt/openprompt/pipeline_base.py", line 309, in forward_without_verbalize
    outputs = self.verbalizer.gather_outputs(outputs)
AttributeError: 'NoneType'...
```
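The traceback shows a method being called on `self.verbalizer` while it is `None`. The sketch below reproduces that pattern with a toy class and shows one possible guard. `ToyPromptModel` and both method names are hypothetical stand-ins, not OpenPrompt's classes, and returning the outputs unchanged when no verbalizer is set is an assumption about the desired behavior.

```python
class ToyPromptModel:
    """Hypothetical stand-in for a prompt model wrapper (not OpenPrompt's class)."""

    def __init__(self, verbalizer=None):
        self.verbalizer = verbalizer

    def forward_unguarded(self, outputs):
        # Mirrors the failing pattern: the verbalizer is used unconditionally,
        # so a None verbalizer raises AttributeError.
        return self.verbalizer.gather_outputs(outputs)

    def forward_guarded(self, outputs):
        # Assumed fix: only delegate when a verbalizer was supplied.
        if self.verbalizer is None:
            return outputs
        return self.verbalizer.gather_outputs(outputs)
```

With no verbalizer, `forward_unguarded` raises `AttributeError`, while `forward_guarded` passes the outputs through.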

When I was experimenting with prompt learning, I found that the performance of the model was very unstable, with performance fluctuating from high to low. Is there any way I...