thanos

Vertical dedup compaction results in erratic blocks

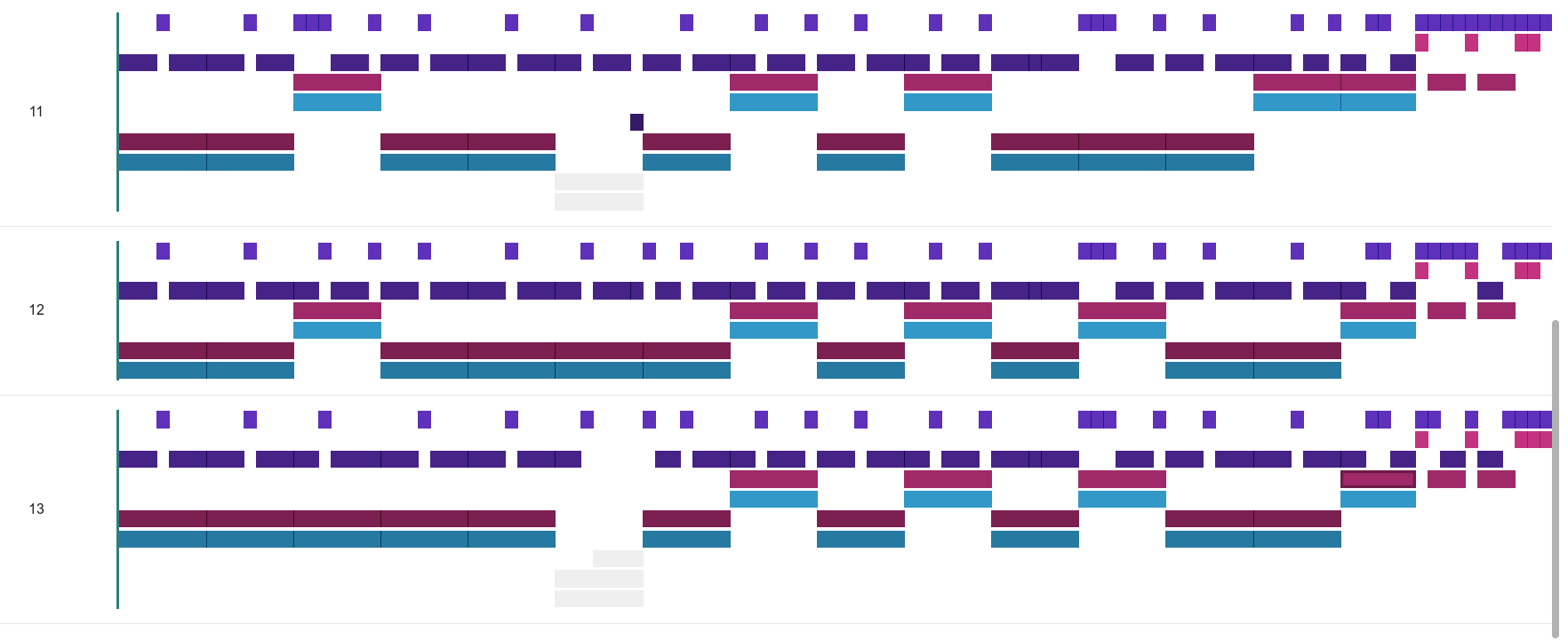

With vertical compaction (deduplication) enabled, here is what the blocks look like in the UI:

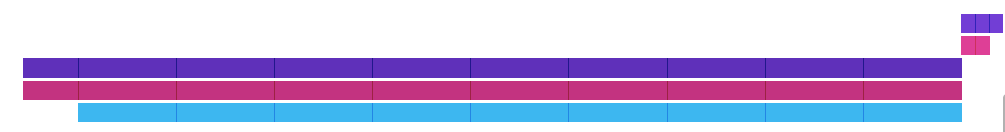

I would expect these to be of roughly equal size, like here:

I wonder what is happening here :thinking: At the very least, it seems to waste space unnecessarily.

Hello 👋 Looks like there was no activity on this issue for the last two months.

Do you mind updating us on the status? Is this still reproducible or needed? If yes, just comment on this PR or push a commit. Thanks! 🤗

If there will be no activity in the next two weeks, this issue will be closed (we can always reopen an issue if we need!). Alternatively, use remind command if you wish to be reminded at some point in future.

We have the same issue as @GiedriusS. Vertical compaction is enabled, but the two streams are not merged together. I thought this should happen when the correct --deduplication.replica-label is specified and --compact.enable-vertical-compaction is set. We also have --deduplication.func=penalty set. The Compactor logs show that vertical compaction is enabled but, as I wrote above, the streams are not merged together.

We use thanos v0.27.0.

Yeah, there's something wrong :( Perhaps @yeya24 knows something about this? I had to disable vertical compaction for now because it adds overhead for Thanos Store, which has to read the extra blocks.

Same situation here. I can see that vertical compaction is enabled:

caller=tls_config.go:277 level=info service=http/server component=compact msg="TLS is disabled." http2=false address=[::]:10902

caller=tls_config.go:274 level=info service=http/server component=compact msg="Listening on" address=[::]:10902

2024-03-27 09:24:34.308

caller=compact.go:1461 level=info msg="start sync of metas"

2024-03-27 09:24:34.308

caller=http.go:73 level=info service=http/server component=compact msg="listening for requests and metrics" address=0.0.0.0:10902

2024-03-27 09:24:34.308

caller=fetcher.go:407 level=debug component=block.BaseFetcher msg="fetching meta data" concurrency=60

2024-03-27 09:24:34.308

caller=intrumentation.go:75 level=info msg="changing probe status" status=healthy

2024-03-27 09:24:34.308

caller=intrumentation.go:56 level=info msg="changing probe status" status=ready

2024-03-27 09:24:34.307

caller=compact.go:679 level=info msg="starting compact node"

2024-03-27 09:24:34.307

caller=compact.go:405 level=info msg="retention policy of 5 min aggregated samples is enabled" duration=8760h0m0s

2024-03-27 09:24:34.307

caller=compact.go:398 level=info msg="retention policy of raw samples is enabled" duration=4320h0m0s

2024-03-27 09:24:34.306

caller=compact.go:256 level=info msg="vertical compaction is enabled" compact.enable-vertical-compaction=true

2024-03-27 09:24:34.306

caller=compact.go:251 level=info msg="deduplication.replica-label specified, enabling vertical compaction" dedupReplicaLabels=prometheus_replica

2024-03-27 09:24:34.306

caller=factory.go:53 level=info msg="loading bucket configuration"

2024-03-27 09:24:34.306

caller=main.go:67 level=debug msg="maxprocs: Updating GOMAXPROCS=[32]: determined from CPU quota"

But later, all I can see is:

caller=fetcher.go:820 level=debug msg="removed replica label" label=prometheus_replica count=29948

caller=fetcher.go:407 level=debug component=block.BaseFetcher msg="fetching meta data" concurrency=60

caller=fetcher.go:557 level=info component=block.BaseFetcher msg="successfully synchronized block metadata" duration=9m16.907085522s duration_ms=556907 cached=36212 returned=36212 partial=0

caller=blocks_cleaner.go:58 level=info msg="cleaning of blocks marked for deletion done"

and uploads of compacted blocks spanning 2 weeks and ~2 days.

@GiedriusS is this normal? The spikes appear when I restart the compactor,

and thanos_compact_iterations_total is always 0.

I'm running Thanos from HelmChart-13.3.0.

Sorry for the delay on my end. This was to be expected, because index files became larger as a result of vertical compaction. The relevant problem is described here: https://github.com/prometheus/prometheus/issues/5868. The blocks look like this because Thanos avoids compacting blocks together if it detects that the resulting index would become bigger than 64GiB. It does that by dropping blocks from compaction groups, starting with the ones that have the biggest index files. Since index sizes vary from block to block, you end up with this uneven pattern.
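
To make the behaviour described above more concrete, here is a rough Python sketch of the idea. It is not Thanos's actual planner code: the `Block` type, the `plan_group` function and the example sizes are all hypothetical, and only the 64GiB limit and the "drop the biggest index first" rule come from the explanation above.

```python
from dataclasses import dataclass

GIB = 1024 ** 3
# Limit taken from the explanation above; everything else here is illustrative.
MAX_TOTAL_INDEX_BYTES = 64 * GIB


@dataclass(frozen=True)
class Block:
    block_id: str     # hypothetical identifier (a ULID in a real bucket)
    index_bytes: int  # size of the block's index file in bytes


def plan_group(blocks, limit=MAX_TOTAL_INDEX_BYTES):
    """Split a compaction group into (to_compact, excluded).

    Blocks are excluded one at a time, largest index first, until the
    combined index size of the remaining blocks fits under the limit.
    """
    remaining = sorted(blocks, key=lambda b: b.index_bytes)
    excluded = []
    while remaining and sum(b.index_bytes for b in remaining) > limit:
        # The list is sorted ascending, so pop() removes the largest index.
        excluded.append(remaining.pop())
    return remaining, excluded


if __name__ == "__main__":
    group = [
        Block("a", 30 * GIB),
        Block("b", 25 * GIB),
        Block("c", 20 * GIB),
        Block("d", 5 * GIB),
    ]
    to_compact, excluded = plan_group(group)
    print("compact together:", [b.block_id for b in to_compact])
    print("left out:", [b.block_id for b in excluded])
```

In this made-up group the combined index size is 80GiB, so the block with the 30GiB index is left out and the other three are compacted together. Repeated across many groups with varying index sizes, this kind of exclusion would plausibly produce blocks of uneven length like the ones in the screenshot.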

Thanks. Do you know how to solve "Error executing query: sum and count timestamps not aligned"?