Capture profile on tensorboard profiler not capturing the profiling data

Describe the problem the feature is intended to solve

tensorboard profiler not working with latest tensorflow serving master commit

System information

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Linux Ubuntu 18.04

- TensorFlow Serving installed from (source or binary): built from source

- TensorFlow Serving version: master

Describe the problem

tensorboard profile not working with tensorflow serving built from master

Exact Steps to Reproduce

Steps:

(1) Build the tensorflow model server from master using the Dockerfile below, and make sure the tensorflow serving api package is saved so it can be installed in the client: https://github.com/tensorflow/serving/blob/master/tensorflow_serving/tools/docker/Dockerfile.devel

bazel build --color=yes --curses=yes \
    ${TF_SERVING_BAZEL_OPTIONS} \
    --verbose_failures \
    --output_filter=DONT_MATCH_ANYTHING \
    ${TF_SERVING_BUILD_OPTIONS} \
    tensorflow_serving/tools/pip_package:build_pip_package && \
bazel-bin/tensorflow_serving/tools/pip_package/build_pip_package \
    /tmp/pip

(2) Deploy the tensorflow model server using docker: https://github.com/tensorflow/serving/blob/master/tensorflow_serving/tools/docker/Dockerfile

(3) Create a client virtual environment and install the tensorflow-serving-api built in (1).

(4) Start tensorboard and go to the profile tab.

(5) From the client, run the deployed model.

(6) Click on capture profile while the model is running. Result: no profile data, only an empty profile in the tensorboard logs directory.

Same steps using r2.3 tensorflow serving works fine.

Describe alternatives you've considered

Tried installing tf-nightly / tb-nightly / tbp-nightly; it didn't help. Built the tensorflow serving api after updating https://github.com/tensorflow/serving/blob/master/tensorflow_serving/tools/pip_package/setup.py#L47 to _TF_REQ = ['tf-nightly']. This installs tf-nightly and tb-nightly, but the captured profile data is still empty.


@ganand1, can you please refer to this documentation on how to use the TensorBoard Profiler while performing inference with TF Serving? Thanks!

Thank you for the link. I did follow the link and try it. On clicking capture profile, it shows an empty profile; it doesn't capture the profile properly.

If I use 2.3 it works fine.

When I use master, the captured profile is empty.

@ganand1,

For TensorFlow Serving, since the latest stable version is 2.3.0, AFAIK it is recommended to use that version. Once internal testing is done on the master branch, a stable version will be cut later.

Thanks, but when we use the master code we are not able to capture a profile. Any suggestions on how to get profiling data when tensorboard is not capturing the profile?

Thank you

We fixed a bug on the profiler side today (https://github.com/tensorflow/tensorflow/commit/2baf9981d73fb3d12ba4331a3b3f5d82894e6aae). Can you try this again on TF master in ~1 day (once TF Serving catches up with the above TF commit)?

@yisitu do you know if https://github.com/tensorflow/tensorflow/commit/2baf9981d73fb3d12ba4331a3b3f5d82894e6aae will fix this issue (seen on master TF serving repo, but not on 2.3 release)?

Hi, I believe it will, but have not verified it.

I tried running on 2.4.0 (https://github.com/tensorflow/serving/tree/2.4.0) and couldn't capture a profile. I used that branch and built using https://github.com/tensorflow/serving/blob/master/tensorflow_serving/tools/docker/Dockerfile.devel

Please find the pip list attached (piplist.txt). I am wondering why the 2.4.0 release is pointing to tensorflow 2.4.0rc4.

installing from pypi seems fine. I do see the tagged version


Could you elaborate? Do you mean that with an installation from pypi you can capture a profile?

So I did pip install tensorflow-serving-api, and that has the versions in the attached pip list (piplist_pypi.txt). This looks fine to me.

Still, the profile didn't work; I got empty profiles.

I'm using the official tensorflow/serving:2.4.0-gpu image and I can't capture profiling data either. Tensorboard created events.out.tfevents.1608174858.faf5bf56d686.profile-empty in the logdir, displayed "Capture profile successfully, please refresh", and then showed "No profile data was found." after an automatic page refresh.

same problem here, but I am on tf serving 2.1.0, is it a known bug for older versions?

More info: with tensorflow/serving:2.1.0 and tensorboard 2.4.1, on clicking capture it says something like "Capture profile successfully", but generates the file events.out.tfevents.1611836545.VM-28-227-centos.profile-empty

It has been happening since 2.3.0 as far as I have tried. You might have to use tensorflow 2.1.0 with tensorboard 2.1.0. It is good to keep the pypi package versions the same.

Thanks for your suggestions! It did capture some useful information once I switched my tensorboard and tensorflow to previous versions, but then it failed to show anything on the screen, and all I got was a blank page under the profile tab. So I tried to load the trace data using tensorboard 2.4.1, and somehow it works. Kinda weird that I have to switch between versions back and forth to get something done. But still, I appreciate your advice!

Hi, could you reassign the case to me? Thanks!

Hi, sorry for the late reply. I think the missing step is to bind a host volume location where tensorboard expects the profile data to be written to.

(venv_TfModelServer) yisitu@a-yisitu-tensorflow-serving:~$ docker run -it --rm -p 8500:8500 -p 8501:8501 \
  --network=host \
+ -v /tmp/tensorboard:/tmp/tensorboard \
  -v /tmp/tfs:/tmp/tfs \
  -v "$TESTDATA/saved_model_half_plus_two_cpu:/models/half_plus_two" -e MODEL_NAME=half_plus_two yisitu/mybuild2

Then start tensorboard and point it to the mounted host directory.

(venv_tensorboard) yisitu@a-yisitu-tensorflow-serving:~$ tensorboard \
+ --logdir=/tmp/tensorboard \
  --bind_all --logtostderr --verbosity=9

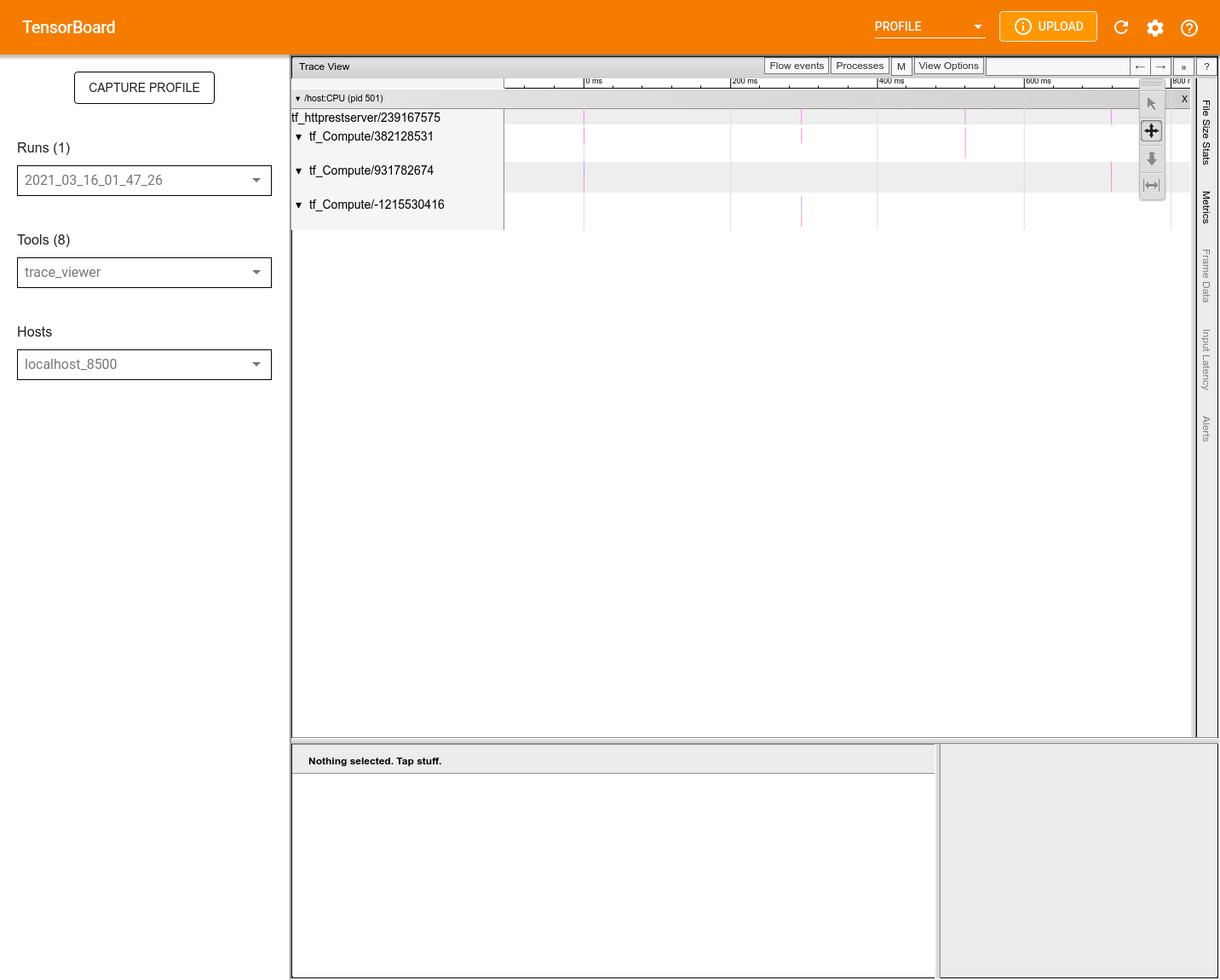

With that I was able to get get a profile (image below) with the setup described in comment 1:

tensorboard 2.4.1

tensorboard-plugin-wit 1.8.0

tensorflow 2.4.1

tensorflow-estimator 2.4.0

tensorflow-serving-api 0.0.0


I mounted /home via -v /home:/home when launching both containers listed below:

- a container (with the profiler installed via pip) from image tensorflow/tensorflow:2.4.1-gpu, for launching tensorboard

- a container from image tensorflow/serving:2.4.1-gpu, for launching the model server

and connected the two containers to the same network via docker network connect. Then I specified --logdir as some sub-path under /home, and the profile service url as <tensorflow-serving-container-name>:8500. The page prompts "please refresh", and no data was displayed after clicking capture. All I got is an events.out.tfevents.1616482451.d4794e6c1ed5.profile-empty file created by tensorboard. I've also tried using previous versions of tensorflow & serving without success.

Do I have to mount -v /tmp/tfs:/tmp/tfs for both containers besides connecting them to the same docker network?

> Do I have to mount -v /tmp/tfs:/tmp/tfs for both containers besides connecting them to the same docker network?

No, I don't think that is necessary.

Could you login to the docker, write a file to /tmp/tensorboard to see if that file is visible (to TensorBoard) outside of docker.

It also could be that your capture duration is too small.

Also check that you have these versions: tensorboard 2.4.1 tensorboard-plugin-wit 1.8.0 tensorflow 2.4.1 tensorflow-estimator 2.4.0 tensorflow-serving-api 0.0.0
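The visibility check suggested above can be sketched roughly as follows. This is only an illustration: the container name and both paths in the example call are placeholders, and `run_in_container` and `check_mount` are hypothetical helper names, not part of docker or TensorBoard.

```shell
#!/bin/sh
# Sketch: check whether a directory inside the serving container is backed by
# the same storage as the directory TensorBoard reads from.

# Run a command inside a container (redefine this to try the check without docker).
run_in_container() {
    container="$1"; shift
    docker exec "$container" "$@"
}

# check_mount CONTAINER CONTAINER_DIR HOST_DIR
# Prints "shared" if a marker file created inside the container shows up in
# HOST_DIR; otherwise prints "not shared" and returns 1.
check_mount() {
    marker="mount_check_$$"
    run_in_container "$1" touch "$2/$marker" || return 2
    if [ -e "$3/$marker" ]; then
        rm -f "$3/$marker"
        echo "shared"
    else
        # Clean up the marker left inside the container's own filesystem.
        run_in_container "$1" rm -f "$2/$marker"
        echo "not shared"
        return 1
    fi
}

# Example with assumed names:
# check_mount serving /tmp/tensorboard /tmp/tensorboard
```

If this prints "not shared", anything the model server writes under that path stays inside its container.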


> Could you login to the docker, write a file to /tmp/tensorboard to see if that file is visible (to TensorBoard) outside of docker

No, it isn't (since I didn't mount /tmp/tensorboard for both containers).

> It also could be that your capture duration is too small.

No, I set the duration to 5 seconds and the page refreshes as soon as I send the request. I've tested this multiple times already.

I would expect that if requests between the two containers (the one that launches tensorboard and the other running the model server) can be delivered to each other via the docker network configuration, then the profiling should work without sharing a specific volume (in this case /tmp/tensorboard). Please clarify whether the transmission of profiling data between the containers relies on writing/reading intermediate data to/from shared disk storage in addition to the network connection.

I have used the steps mentioned here for profiling TF Serving, but I am getting 'Failed to capture profile: empty trace result.' An 'events.out.tfevents.1617711931.**.profile-empty' file is created, but no events are written.

Tensorboard error logs for it:

```
2021-04-06 12:47:33.467885: I tensorflow/core/profiler/rpc/client/capture_profile.cc:198] Profiler delay_ms was 0, start_timestamp_ns set to 1617713253467873633 [2021-04-06T12:47:33.467873633+00:00]
Starting to trace for 1000 ms. Remaining attempt(s): 0
2021-04-06 12:47:33.467933: I tensorflow/core/profiler/rpc/client/remote_profiler_session_manager.cc:75] Deadline set to 2021-04-06T12:48:33.459801425+00:00 because max_session_duration_ms was 60000 and session_creation_timestamp_ns was 1617713253459801425 [2021-04-06T12:47:33.459801425+00:00]
2021-04-06 12:47:33.468318: I tensorflow/core/profiler/rpc/client/profiler_client.cc:113] Asynchronous gRPC Profile() to localhost:6006
2021-04-06 12:47:33.468386: I tensorflow/core/profiler/rpc/client/remote_profiler_session_manager.cc:96] Issued Profile gRPC to 1 clients
2021-04-06 12:47:33.468403: I tensorflow/core/profiler/rpc/client/profiler_client.cc:131] Waiting for completion.
E0406 12:47:33.469405 139666310461184 _internal.py:113] 127.0.0.1 - - [06/Apr/2021 12:47:33] code 505, message Invalid HTTP version (2.0)
2021-04-06 12:47:33.469757: E tensorflow/core/profiler/rpc/client/profiler_client.cc:154] Unknown: Stream removed
2021-04-06 12:47:33.469786: W tensorflow/core/profiler/rpc/client/capture_profile.cc:133] No trace event is collected from localhost:6006
2021-04-06 12:47:33.469795: W tensorflow/core/profiler/rpc/client/capture_profile.cc:145] localhost:6006 returned Unknown: Stream removed
No trace event is collected after 4 attempt(s). Perhaps, you want to try again (with more attempts?).
Tip: increase number of attempts with --num_tracing_attempts.
```
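One detail in this log may be worth checking: the Profile() RPC is addressed to localhost:6006, and the "code 505, message Invalid HTTP version (2.0)" line looks like an HTTP server rejecting HTTP/2 (gRPC) traffic. If 6006 is TensorBoard's own port in this setup (it is TensorBoard's default, but that is an assumption here), the profile service URL may have been pointed at TensorBoard itself rather than at the model server's gRPC port, which is 8500 elsewhere in this thread. A small sketch for pulling the RPC targets out of a saved log, where `profile_targets` is a hypothetical helper name:

```shell
#!/bin/sh
# profile_targets LOGFILE
# Prints each distinct host:port that a Profile() RPC was sent to, based on
# the "gRPC Profile() to <target>" lines in a TensorBoard log.
profile_targets() {
    sed -n 's/.*gRPC Profile() to \([^ ]*\).*/\1/p' "$1" | sort -u
}

# Example: profile_targets tensorboard.log
```

If the printed target is the TensorBoard port rather than the serving gRPC port, correcting the profile service URL would be the first thing to try.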

> No, it isn't (since I didn't mount /tmp/tensorboard for both containers).

The design does not work the way you have described. The serving container produces profile data, which is written to disk. TensorBoard needs to load this data from disk. Since your setup has TensorBoard running in another container, you'll need to make sure that the location the profile data is written to (by the serving container) is also readable by the TensorBoard container.

I suggest mounting the host's /tmp/tensorboard (as described above) to both serving and tensorboard containers and trying again.
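To see whether profile data actually reached the shared directory, a quick check along these lines may help. It is a sketch that assumes the profile plugin keeps its data under plugins/profile/ inside the logdir (verify this for your version) and relies on the *.profile-empty marker files reported earlier in this thread; `profile_status` is a hypothetical helper name.

```shell
#!/bin/sh
# profile_status LOGDIR
# Prints "data" if any file exists under LOGDIR/plugins/profile/,
# "empty" if only *.profile-empty marker files are found, and "none" otherwise.
profile_status() {
    logdir="$1"
    if [ -d "$logdir/plugins/profile" ] &&
       [ -n "$(find "$logdir/plugins/profile" -type f | head -n 1)" ]; then
        echo "data"
    elif [ -n "$(find "$logdir" -name '*.profile-empty' | head -n 1)" ]; then
        echo "empty"
    else
        echo "none"
    fi
}

# Example: profile_status /tmp/tensorboard
```

Running it on the host and inside each container should give the same answer if the mount is set up as suggested.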


This time I tried creating a docker volume and mounted it as /tmp/tensorboard for both containers (BTW, for the container that launches tensorboard I specified the read-only option when mounting /tmp/tensorboard). I have confirmed that the two containers now share /tmp/tensorboard by manually creating test files. However, I did not see anything written to /tmp/tensorboard after specifying tensorflow-serving:8500 and clicking "capture". (I assume that this is the correct url and port; if I specify any other port/url, tensorboard immediately prompts "empty trace result" instead of "capture profile successfully".) No profiling data was displayed in tensorboard, as described in previous posts.

It's a simple misunderstanding. TB expects an event within 1000 ms (the duration set when configuring the profile service url), but no event is triggered in that window. Try increasing it to 10000 ms; once you press capture, you need to make a prediction call to the model within the next 10 s. This should solve the error.
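One way to make sure a prediction call lands inside the capture window is to keep sending requests for the whole duration. The following is a rough sketch: `send_requests_for` is a hypothetical helper, and the example call assumes the half_plus_two model served on REST port 8501 as in the docker run command earlier in this thread.

```shell
#!/bin/sh
# send_requests_for SECONDS COMMAND [ARG...]
# Repeats COMMAND until SECONDS have elapsed and prints how many runs succeeded.
send_requests_for() {
    duration="$1"; shift
    end=$(( $(date +%s) + duration ))
    count=0
    while [ "$(date +%s)" -lt "$end" ]; do
        if "$@" >/dev/null 2>&1; then
            count=$((count + 1))
        fi
    done
    echo "$count"
}

# Example (assumed model name and port); start it, then press capture:
# send_requests_for 10 curl -sf -X POST \
#     -d '{"instances": [1.0, 2.0, 5.0]}' \
#     http://localhost:8501/v1/models/half_plus_two:predict
```

A printed count of 0 means no request succeeded during the window, so the capture would have had nothing to record.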

@BorisPolonsky @ganand1 Did you folks ever solve this? I'm having similar issues seeing any profiling data with tf serving > 2.3

Silly mistake in my case - in case anyone comes here in the future struggling, I found it useful to run a setup like this

#!/bin/bash

SCRIPT_DIR=$(dirname "$(readlink -f "$0")")
TENSORFLOW_SERVING_VERSION=2.7.4
TENSORFLOW_VERSION_FOR_PROFILER=2.7.4
DOCKER_TF_SERVING_HOSTNAME=serving
TF_SERVING_MODEL_NAME="<YOUR_MODEL_NAME>"
LOCAL_DIR_FOR_BIND_MOUNTS=tf_logs_dir
TMP_TF_LOGDIR=${SCRIPT_DIR}/${LOCAL_DIR_FOR_BIND_MOUNTS}

# Create directory to be mounted to profiler and tf serving container
echo "mkdir -p ${TMP_TF_LOGDIR}"
mkdir -p "${TMP_TF_LOGDIR}"

# Create dockerfile for profiler
# (a heredoc keeps the double quotes that the ENTRYPOINT exec form needs)
DOCKER_PROFILER_TAG=tensorboard_profiler:latest
cat > Dockerfile_tfprofile <<EOF
FROM tensorflow/tensorflow:${TENSORFLOW_VERSION_FOR_PROFILER}
RUN pip install -U tensorboard-plugin-profile
ENTRYPOINT ["tensorboard", "--logdir", "/tmp/tensorboard", "--bind_all"]
EOF

# Build the profiler image
docker build -t ${DOCKER_PROFILER_TAG} -f Dockerfile_tfprofile .

# Create docker compose file
cat > docker-compose.yaml <<EOF
version: "3.3"
services:
  ${DOCKER_TF_SERVING_HOSTNAME}:
    image: tensorflow/serving:${TENSORFLOW_SERVING_VERSION}
    ports:
      - '8510:8500' # Whatever you need for client
      - '8511:8501' # Whatever you need for client
    environment:
      - MODEL_NAME=${TF_SERVING_MODEL_NAME}
    hostname: '${DOCKER_TF_SERVING_HOSTNAME}'
    volumes:
      - '<LOCAL_MODEL_DIR>:/models/${TF_SERVING_MODEL_NAME}/1'
      - '${TMP_TF_LOGDIR}:/tmp/tensorboard'
  profiler:
    image: ${DOCKER_PROFILER_TAG}
    ports:
      - '6006:6006'
    volumes:
      - '${TMP_TF_LOGDIR}:/tmp/tensorboard'
EOF

docker-compose up

# Profile url = ${DOCKER_TF_SERVING_HOSTNAME}:8500
# Set profile duration
# Make request within duration

@ganand1,

Please refer to the above comment, which shows a working setup for getting profiling data from TF Serving into tensorboard. Please let us know if this works.

Thank you!

Closing this due to inactivity. Please take a look at the answers provided above, and feel free to reopen and post your comments if you still have queries on this. Thank you!