io

Added ArrowS3Dataset

I added an ArrowS3Dataset. This dataset can be used to read Parquet files on S3.

By the way, how do I become a contributor? I have submitted code before, but I am not included in the Contributors list.

@LinGeLin I can see you are a contributor to the repo though it is not showing up in Contributors' graph map yet. Maybe there is a lag in GitHub updating the graph map.

As long as your commit's email address is associated with your github handle I assume it will eventually show up. Otherwise I think we can create a support ticket to GitHub to check.

The lint check failed. It seems protobuf needs to be downgraded. The error message suggests two workarounds:

- Downgrade the protobuf package to 3.20.x or lower.

- Set PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION=python (but this will use pure-Python parsing and will be much slower).
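The two workarounds above can be sketched in Python. This is only an illustration: `protobuf_needs_downgrade` is a hypothetical helper, not part of protobuf or tensorflow-io, and the 3.20 threshold is taken from the message quoted above. The environment variable has to be set before any protobuf-generated module is imported.

```python
import os

# Workaround 2: fall back to the pure-Python protobuf implementation.
# This must run before protobuf (or anything that imports it) is loaded,
# and parsing will be much slower.
os.environ["PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION"] = "python"


def protobuf_needs_downgrade(version: str) -> bool:
    """Hypothetical helper: True if `version` is newer than the 3.20.x series.

    Assumes a plain dotted version string such as "4.21.1".
    """
    major, minor = (int(part) for part in version.split(".")[:2])
    return (major, minor) > (3, 20)
```

For workaround 1, the version string could come from `importlib.metadata.version("protobuf")` in the environment where the lint check runs.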

@LinGeLin It looks like some symbols are missing. Can you apply the following two commits, https://github.com/tensorflow/io/pull/1700/commits/01a671e723c6c6c4449672117ea06011705c8668 and https://github.com/tensorflow/io/pull/1700/commits/5ab9e2b66585a6252aea4afcba80bf3e675fc25c (or just copy the https://github.com/tensorflow/io/blob/5ab9e2b66585a6252aea4afcba80bf3e675fc25c/third_party/arrow.BUILD file)?

I think this will solve most of the linkage issues caused by missing symbols.

Originally, I wanted to read Parquet files on S3 through TFIO, and this PR is meant to solve that problem. The dataset can process the structured data used in some recommendation scenarios, which we have verified works. However, if Parquet is used to store binary data such as images, it seems that this dataset cannot meet the requirements. Do you have any suggestions, or other schemes for storing and reading unstructured data?

I see that there is a dataset called ParquetIODataset. Should I develop a dataset that reads S3 Parquet based on that dataset instead of Arrow? I am a bit embarrassed to ask.

In addition, thank you for helping me debug the checks.

@LinGeLin That will depend on how you want to use unstructured data in TF. If the unstructured data is simply an image in a known format (jpg/bmp/etc.), then tf.string is normally the way to go. If "unstructured" means the data is stored as unstructured but later transformed into structured form, then there are some other ways of achieving the goal.

Can you explain a little about the input data and the transformation you want to apply?

Also, maybe you can try to apply ff92b4b77a3311701d5c1794a0244e25fe691a41, c5716b9020edceb97d7ae6767a50d2d67e6fb011 and 6f6a780637d6df1f1367cca45aec1dd42edbd864, which will solve the Windows build failure (or you can just copy https://github.com/tensorflow/io/blob/6f6a780637d6df1f1367cca45aec1dd42edbd864/third_party/arrow.BUILD).
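As a rough illustration of the tf.string approach mentioned above, the sketch below keeps encoded image bytes as tf.string elements and decodes them inside the input pipeline. It does not use ArrowS3Dataset or Parquet at all. `make_image_dataset` is a hypothetical helper, and the in-memory PNG bytes stand in for whatever binary column would actually hold the images.

```python
import tensorflow as tf


def make_image_dataset(encoded_images):
    """Hypothetical helper: turn encoded image bytes into decoded uint8 tensors."""
    # Each element is a scalar tf.string holding one encoded image.
    ds = tf.data.Dataset.from_tensor_slices(encoded_images)
    # decode_image detects the format (jpg/png/bmp/gif) from the bytes;
    # expand_animations=False keeps every result 3-D (height, width, channels).
    return ds.map(
        lambda raw: tf.io.decode_image(raw, channels=3, expand_animations=False)
    )


# Stand-in data: two tiny black PNG images encoded in memory.
png_bytes = tf.io.encode_png(tf.zeros([4, 4, 3], dtype=tf.uint8)).numpy()
dataset = make_image_dataset([png_bytes, png_bytes])
```

Keeping the bytes encoded until the `map` step means the storage layer only has to deliver an opaque binary value per row.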

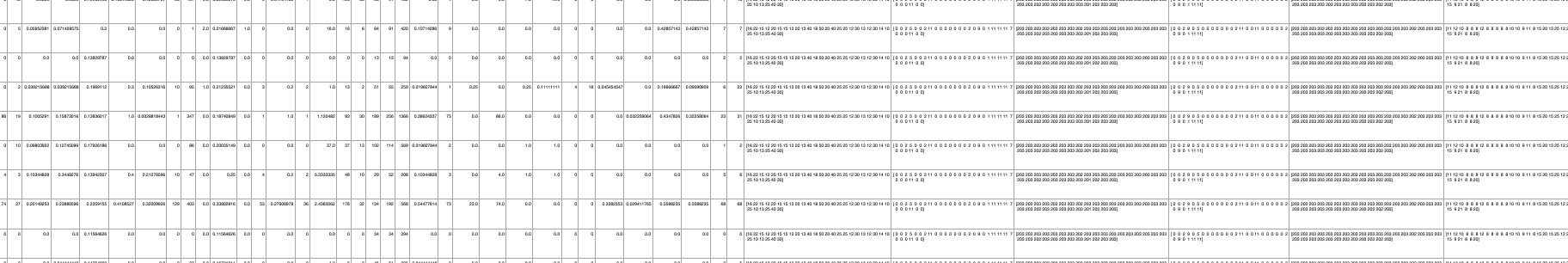

The unstructured data I'm talking about is images. There are other possibilities, such as audio and video, but only images are intended to be stored in Parquet.

The input of this dataset is Parquet files stored on S3, and the Parquet files hold the data required for training recommendation models. There are generally hundreds of feature dimensions, similar to the attached figure. We previously stored the features as TFRecord, but when the features are very large, the TFRecord approach has serious drawbacks. Our feature sets are at least a few TB, and the largest can reach 100 TB. In our system, data and code are kept separate, which means that for every training run the data has to be mounted onto the training machine. But mounting frequently goes wrong, and sharing data between teams is also difficult. Every algorithm engineer has their own particular needs. We want to solve these problems by reading Parquet from S3, which is how this PR came about.