Memory requirements for c4/webtextlike

What I need help with / What I was wondering

I am trying to build c4/webtextlike, which the documentation reports as 14MiB download size, 18GiB dataset size:

https://www.tensorflow.org/datasets/catalog/c4#c4webtextlike

I'm getting failures during download_and_prepare that I'm 99.9% sure are the result of memory capacity, and I'm wondering whether there's documentation of the memory required during the build process.

What I've tried so far

I ran download_and_prepare on a fairly gargantuan VM (512GB of RAM). That got further than when I tried it on my laptop, and further than it got on a VM with 32GB of RAM, but still not quite enough; it died after several hours ("Killed" was the entirety of the stderr content).
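A bare "Killed" with no traceback is consistent with the Linux out-of-memory killer, though it does not prove it. One way to check is to log the process's peak resident memory while the build runs. The snippet below is a minimal sketch using only the standard library; `peak_rss_mib` is a hypothetical helper name, and the `bytearray` allocation is a stand-in for the real workload. It assumes Linux, where `ru_maxrss` is reported in KiB, and it only covers the current process, not any worker subprocesses.

```python
import resource


def peak_rss_mib() -> float:
    """Return this process's peak resident set size in MiB.

    Assumes Linux, where ru_maxrss is reported in KiB
    (macOS reports bytes instead).
    """
    return resource.getrusage(resource.RUSAGE_SELF).ru_maxrss / 1024.0


# Stand-in workload: allocate roughly 50 MiB so the peak moves.
buf = bytearray(50 * 1024 * 1024)
print(f"peak RSS so far: {peak_rss_mib():.0f} MiB")
```

Calling the helper periodically (for example, from a background thread) would show how close the process gets to the machine's RAM before it dies.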

It would be nice if...

I'm about 90% sure I'm guilty of a fundamental misunderstanding of what "dataset size" means in this context; something isn't adding up.

Probably beyond the scope of this issue, but what I actually want is just a word frequency distribution for web-like text that includes a long tail (longer than the ~20k lemmas I can find in publicly available word distribution tables), and this dataset seemed perfect; 18GiB is practical for me to work with, and I don't need more precision or a longer tail than I would get from 18GiB of text (e.g., I don't need the full c4/en corpus). If someone reading this issue has suggestions for what I should be looking at instead of c4/webtextlike, I'm all ears... though I would also like to understand whether I'm fundamentally missing something in the concept of "dataset size", and whether there's any documentation of the build environment requirements when not using Dataflow.
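For reference, the word-frequency goal itself does not need the corpus held in memory. The following is a minimal sketch, assuming the documents can be read one at a time from some iterable of strings; `count_words`, the regex tokenizer, and `sample_docs` are illustrative placeholders, not part of any library, and no lemmatization is attempted.

```python
import re
from collections import Counter
from typing import Iterable

# Hypothetical tokenizer: runs of lowercase letters and apostrophes.
_WORD_RE = re.compile(r"[a-z']+")


def count_words(docs: Iterable[str]) -> Counter:
    """Accumulate word counts one document at a time."""
    counts = Counter()
    for text in docs:
        counts.update(_WORD_RE.findall(text.lower()))
    return counts


# Tiny stand-in corpus; a real run would stream documents from disk.
sample_docs = ["The cat sat on the mat.", "The dog sat too."]
freq = count_words(sample_docs)
print(freq.most_common(3))
```

Because only the counter is kept, memory use grows with the number of distinct words rather than with the total size of the text.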

Environment information

- Operating System: Ubuntu 18.04

- Python version: 3.7

--

Thanks!

I'm pretty sure dataset size means the size of the raw text.

Did you figure out what the problem was in the end? :)

Nope. Because I really only needed a distribution of individual words, I ended up using the Reuters corpus frequency list. It would have been nice to be able to build my own distribution, from a source corpus, but it wasn't critical for this project.

Gotcha, thanks for the quick update.

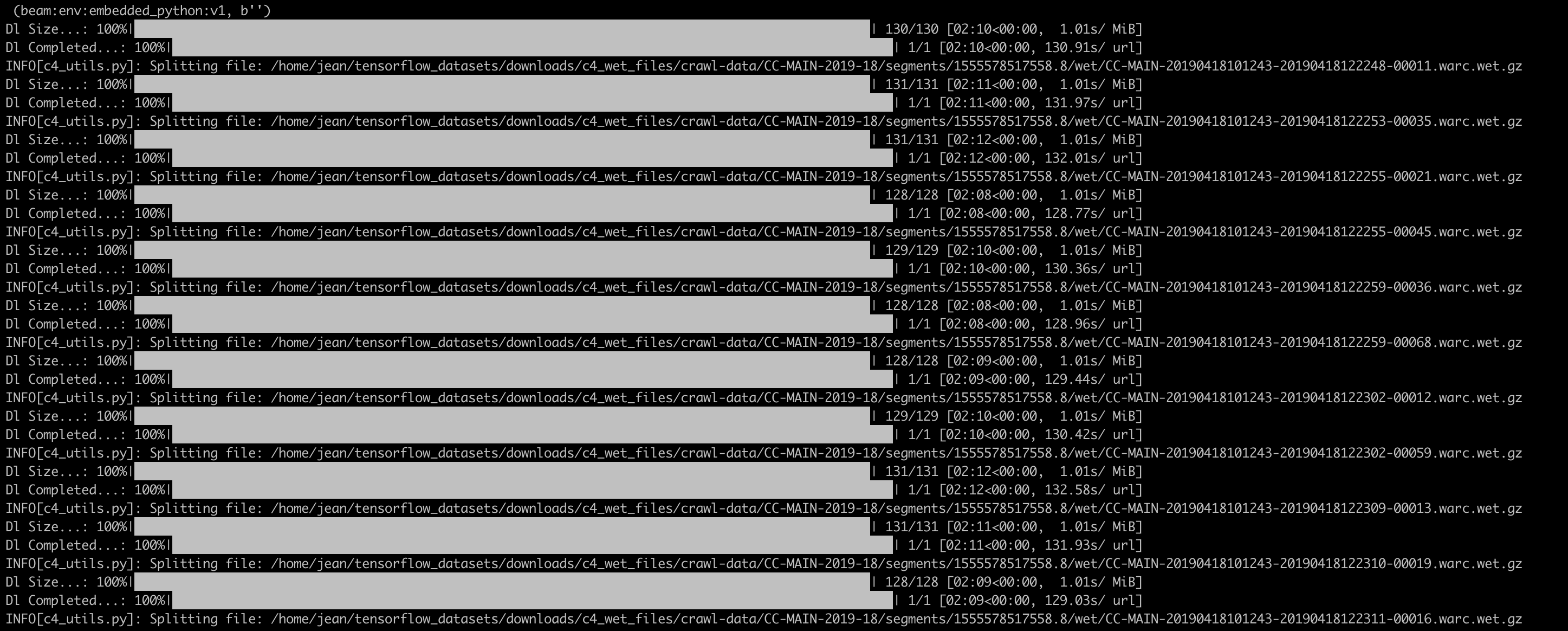

I'm running this now on my 128 GB RAM machine, and I have no clue how long it will take:

I'm not even sure if it will first download the complete C4 and then extract the website-like dataset or directly download the latter; do you happen to know what's going on? Judging by the filenames, I'd suspect it first downloads the full C4?

I started this about an hour ago, so it may still crash like it did when you tried. If so, I don't get why so much data has to be stored in RAM, though...