model.save() fails after model.fit() when using MultiOptimizer (tensorflow-addons 0.12.1, Python 3.8, TensorFlow 2.3.1)

System information

- Ubuntu 18.04

- TensorFlow version: tensorflow-gpu 2.3.1, installed from binary

- TensorFlow-Addons version: tensorflow-addons 0.12.1, installed from binary

- Python version: 3.8

- Is GPU used? : no

Describe the bug

I'm using MultiOptimizer as my model's optimizer. After training on my own data with model.fit(), calling model.save() to save the model locally raises TypeError: ('Not JSON Serializable:'...

Code to reproduce the issue

Below is the code I copied from discriminative_layer_training.py. I only added one line to save the model, and it raises the same error.

import tensorflow as tf
from tensorflow_addons.optimizers import MultiOptimizer
import numpy as np

def get_model():
    inputs = tf.keras.Input(shape=(4,))
    outputs = tf.keras.layers.Dense(1)(inputs)
    return tf.keras.Model(inputs, outputs)

model1 = get_model()
model2 = get_model()
model3 = get_model()

inputs = tf.keras.Input(shape=(4,))
y1 = model1(inputs)
y2 = model2(inputs)
y3 = model3(inputs)
outputs = tf.keras.layers.Average()([y1, y2, y3])
model = tf.keras.Model(inputs, outputs)

optimizers_and_layers = [
    (tf.keras.optimizers.SGD(), model1),
    (tf.keras.optimizers.SGD(learning_rate=0.0), model2),
    (tf.keras.optimizers.SGD(), model3),
]

model1_weights_before_train = [weight.numpy() for weight in model1.weights]
model2_weights_before_train = [weight.numpy() for weight in model2.weights]
model3_weights_before_train = [weight.numpy() for weight in model3.weights]

multi_optimizer = MultiOptimizer(optimizers_and_layers)

model.compile(multi_optimizer, loss="mse")

x = np.ones((128, 4)).astype(np.float32)
y = np.ones((128, 32)).astype(np.float32)
model.fit(x, y)
model.save("tf_model")

Other info / logs

raise TypeError('Not JSON Serializable:', obj)
TypeError: ('Not JSON Serializable:', <tf.Tensor 'gradient_tape/functional_7/functional_1/dense/MatMul:0' shape=(4, 1) dtype=float32>)

Can you test with Addons nightly?

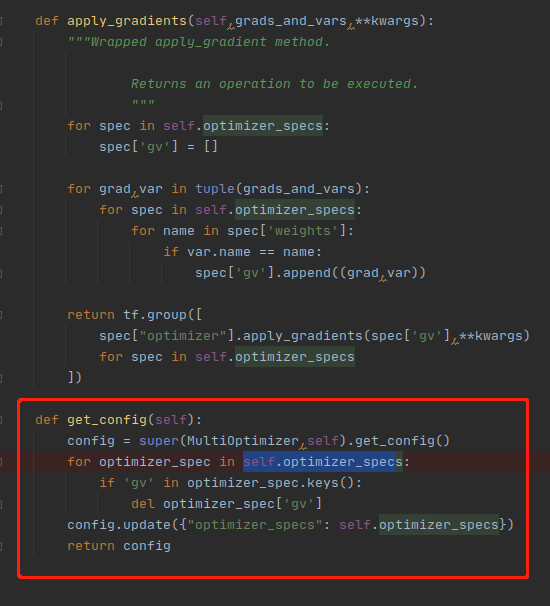

Yes, I tried it and got the same error. I debugged it myself and fixed it in my own way. self.optimizer_specs is a list of dicts, and when MultiOptimizer.apply_gradients() runs, the gradient tensors are stored in each dict as spec['gv']. When model.save() is called, MultiOptimizer.get_config() tries to serialize self.optimizer_specs. Because the tensors in self.optimizer_specs can't be serialized, it raises ('Not JSON Serializable:', <tf.Tensor 'training/MultiOptimizer/gradients/gradients/functional_1/dense/MatMul_grad/MatMul_1:0'

I modified MultiOptimizer.get_config() so that, before serializing, it deletes the 'gv' key from each entry in self.optimizer_specs. After that, calling tf.keras.models.save_model() works.
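A minimal sketch of that idea in plain Python, without TensorFlow. FakeTensor, serializable_specs, and the "optimizer" and "weights" keys are hypothetical stand-ins for illustration; only the 'gv' key comes from the description above. It is not the actual tensorflow-addons code.

```python
import json


class FakeTensor:
    """Stand-in for a tf.Tensor: an object the json module cannot encode."""


def serializable_specs(optimizer_specs, transient_keys=("gv",)):
    """Return copies of the spec dicts without the transient per-step keys."""
    return [
        {key: value for key, value in spec.items() if key not in transient_keys}
        for spec in optimizer_specs
    ]


# Hypothetical spec list, shaped like the one described above after a training step.
optimizer_specs = [
    {
        "optimizer": "SGD",
        "weights": ["dense/kernel:0"],
        "gv": [(FakeTensor(), "dense/kernel:0")],
    },
]

# Serializing the raw specs fails because of the tensor-like object under 'gv'.
try:
    json.dumps(optimizer_specs)
    raw_is_serializable = True
except TypeError:
    raw_is_serializable = False

# Serializing the filtered copies succeeds, and the originals are left untouched.
cleaned_json = json.dumps(serializable_specs(optimizer_specs))
```

Building filtered copies instead of deleting the key in place keeps the live specs intact, so training could continue after a save.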

I don't know whether this is the right way to solve the problem.

/cc @hyang0129

hmm I think this is my fault for using dictionaries to store tensors. I remember encountering similar issues with serializing tensors stored in dictionaries.

The easy fix is the one listed by @fanfanfeng, which is to remove the grads and vars element when grabbing the config. This makes the most sense because deserializing an optimizer to resume training generally never restores gradients and vars. However, it may fail to restore the optimizer's parameters (e.g. the velocity value of the weights when using nesterov). If this is a concern, I'd recommend testing with nesterov and comparing the velocity values of the weights before and after serialization.

The hard fix is to rewrite the code to allow serialization of the grads and vars. IMO this could be done, but may not be worth it. @fanfanfeng has a fix that solves the immediate problem.

I would recommend adding a new test to ensure optimizer parameters are being saved and restored correctly, something in the form of the pseudocode below. Then see if @fanfanfeng's solution passes the test. If so, we gucci.

model.fit(data)

velocity = model.optimizer.velocity

model.save(path, include_optimizer=True)

loaded_model = load_model(path)

assert velocity == loaded_model.optimizer.velocity

The laziest option is to simply not support model.save(include_optimizer=True) and only support model.save(include_optimizer=False). Add a note to the documentation and, in get_config, print a warning that this optimizer cannot be serialized using the normal process and that you should use include_optimizer=False.

I would prefer to avoid overpromising on the functionality, so I would recommend we do the super quick temporary fix of updating the docs to only support model.save(include_optimizer=False) and adding a warning when get_config is called. Once that fix is in, we can work on the solution that @fanfanfeng listed.
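A rough sketch of what the get_config warning could look like, in plain Python. SketchMultiOptimizer, the returned config, and the warning text are hypothetical and only illustrate the shape of the temporary fix; this is not the tensorflow-addons implementation.

```python
import warnings


class SketchMultiOptimizer:
    """Hypothetical stand-in, not the tensorflow-addons class."""

    def __init__(self, optimizer_specs):
        self.optimizer_specs = optimizer_specs

    def get_config(self):
        # Tell the user that saving with the optimizer is not supported.
        warnings.warn(
            "This optimizer cannot be serialized with the normal process; "
            "save the model with include_optimizer=False.",
            UserWarning,
        )
        # Return only a plain, JSON-safe config (assumed minimal for this sketch).
        return {"name": type(self).__name__}


# Capture the warning to show that get_config emits it exactly once per call.
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    config = SketchMultiOptimizer([]).get_config()
```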

I may not be available to work on this for the next two weeks, so if @fanfanfeng is not available to implement the fix either, then I would recommend we update the docs to note that we don't support saving the model with the optimizer. If @fanfanfeng is available and can make the changes within a few days, there's not much reason to slap on a temporary patch.