envd

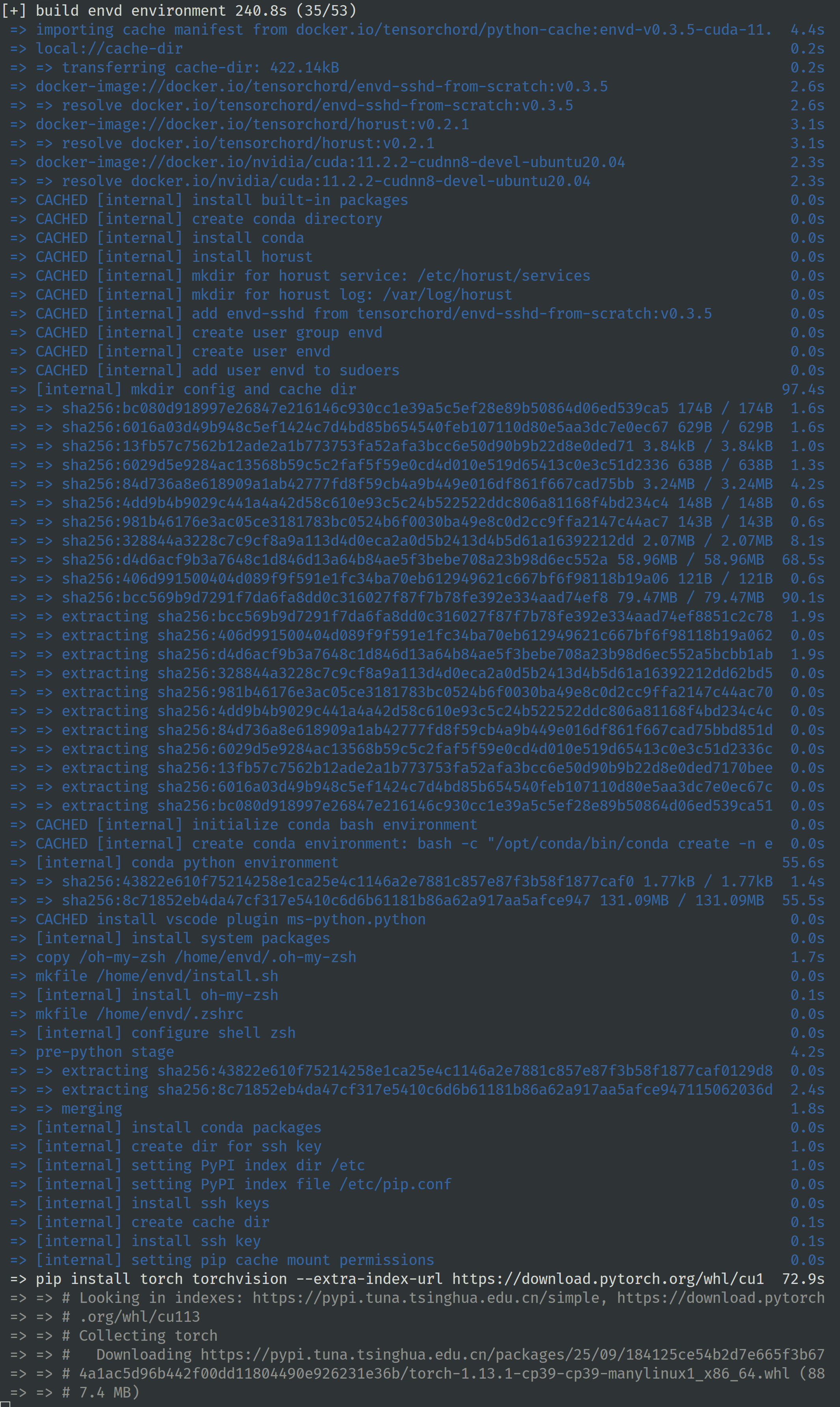

enhancement: First GPU build takes too long

https://hub.docker.com/r/tensorchord/python/tags

Our GPU image is 2.5GB, while the CPU image is 173MB.

Downloading the GPU image can take ~30 min, which hurts the user experience.
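As a rough check on these numbers, the sketch below derives how much larger the GPU image is than the CPU image, and the download rate implied by the ~30 min estimate. It assumes 1 GB = 1000 MB and that the 30 min covers the whole 2.5GB pull; both are approximations, not measurements.

```python
# Figures quoted in the issue. Assumptions: 1 GB = 1000 MB, and the ~30 min
# estimate covers pulling the entire 2.5GB GPU image.
GPU_IMAGE_MB = 2.5 * 1000
CPU_IMAGE_MB = 173
DOWNLOAD_SECONDS = 30 * 60

# How many times larger the GPU image is than the CPU image.
size_ratio = GPU_IMAGE_MB / CPU_IMAGE_MB
# Average throughput implied by the download-time estimate.
throughput_mb_s = GPU_IMAGE_MB / DOWNLOAD_SECONDS

print(f"GPU image is {size_ratio:.1f}x the CPU image")
print(f"implied download rate: {throughput_mb_s:.2f} MB/s")
```

With these inputs it prints about 14.5x and 1.39 MB/s, so the download time is driven by image size rather than by anything envd-specific.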

Perhaps we can trim it using docker-slim: https://github.com/docker-slim/docker-slim

Another option is to support the eStargz format with lazy loading. However, I'm not sure how to make this work with the build stage.

The MNIST CPU example takes 400s to come up.

Released a new version, v0.3.5, and ran:

envd up -p examples/mnist -f :build_gpu

The cache works.

Could you also try it on Gitpod? In the last version, we found that it repeatedly downloaded the CUDA images regardless of whether the build succeeded or failed.

Sure, will take a look.

A GPU MNIST build takes 3600s, even with the nvidia/cuda image cached and the remote cache enabled.

=> pip install torch torchvision --extra-index-url https://download.pytorch.org/whl/c 3250.2s

`pip install` accounts for most of that time.
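To put the log line in proportion, this sketch computes the share of the ~3600s build spent in the `pip install torch torchvision` step (3250.2s). Both figures are copied from the comment above; since 3600s is a rounded total, treat the result as approximate.

```python
# Timings from the GPU MNIST build comment; TOTAL_BUILD_SECONDS is a rounded figure.
TOTAL_BUILD_SECONDS = 3600
PIP_INSTALL_SECONDS = 3250.2

# Fraction of the build spent installing torch/torchvision with pip.
share = PIP_INSTALL_SECONDS / TOTAL_BUILD_SECONDS
# Time left over for every other build step.
remaining = TOTAL_BUILD_SECONDS - PIP_INSTALL_SECONDS

print(f"pip install: {share:.1%} of the build")
print(f"everything else: {remaining:.1f}s")
```

It prints roughly 90.3% for `pip install` and about 349.8s for all remaining steps combined, which is why whether that single step hits the cache decides most of the build time.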

The build cache also works after `envd destroy`:

envd destroy

envd up -f :build_gpu

[+] ⌚ parse build.envd and download/cache dependencies 0.0s ✅ (finished)

=> 💽 (cached) download oh-my-zsh 0.0s

=> 💽 (cached) download ms-python.python 0.0s

[+] build envd environment 46.0s (54/54) FINISHED

=> importing cache manifest from docker.io/tensorchord/python-cache:envd-v0.3.5-cuda-11. 3.7s

=> local://cache-dir 0.2s

=> => transferring cache-dir: 422.14kB 0.1s

=> docker-image://docker.io/tensorchord/envd-sshd-from-scratch:v0.3.5 2.1s

=> => resolve docker.io/tensorchord/envd-sshd-from-scratch:v0.3.5 2.1s

=> docker-image://docker.io/tensorchord/horust:v0.2.1 2.2s

=> => resolve docker.io/tensorchord/horust:v0.2.1 2.2s

=> docker-image://docker.io/nvidia/cuda:11.2.2-cudnn8-devel-ubuntu20.04 2.1s

=> => resolve docker.io/nvidia/cuda:11.2.2-cudnn8-devel-ubuntu20.04 2.1s

=> CACHED [internal] install built-in packages 0.0s

=> CACHED [internal] create conda directory 0.0s

=> CACHED [internal] install conda 0.0s

=> CACHED [internal] install horust 0.0s

=> CACHED [internal] mkdir for horust service: /etc/horust/services 0.0s

=> CACHED [internal] mkdir for horust log: /var/log/horust 0.0s

=> CACHED [internal] add envd-sshd from tensorchord/envd-sshd-from-scratch:v0.3.5 0.0s

=> CACHED [internal] create user group envd 0.0s

=> CACHED [internal] create user envd 0.0s

=> CACHED [internal] add user envd to sudoers 0.0s

=> CACHED [internal] mkdir config and cache dir 0.0s

=> CACHED [internal] install system packages 0.0s

=> CACHED [internal] initialize conda bash environment 0.0s

=> CACHED [internal] create conda environment: bash -c "/opt/conda/bin/conda create -n e 0.0s

=> CACHED [internal] conda python environment 0.0s

=> CACHED copy /oh-my-zsh /home/envd/.oh-my-zsh 0.0s

=> CACHED mkfile /home/envd/install.sh 0.0s

=> CACHED [internal] install oh-my-zsh 0.0s

=> CACHED mkfile /home/envd/.zshrc 0.0s

=> CACHED [internal] configure shell zsh 0.0s

=> CACHED pre-python stage 0.0s

=> CACHED [internal] install conda packages 0.0s

=> CACHED [internal] setting PyPI index dir /etc 0.0s

=> CACHED [internal] setting PyPI index file /etc/pip.conf 0.0s

=> CACHED [internal] create cache dir 0.0s

=> CACHED [internal] setting pip cache mount permissions 0.0s

=> CACHED pip install torch torchvision --extra-index-url https://download.pytorch.org/w 0.0s

=> CACHED pip install jupyter 0.0s

=> CACHED [internal] install PyPI packages 0.0s

=> CACHED [internal] create dir for ssh key 0.0s

=> CACHED [internal] install ssh keys 0.0s

=> CACHED [internal] install ssh key 0.0s

=> CACHED install vscode plugin ms-python.python 0.0s

=> CACHED [internal] generating the image 0.0s

=> CACHED [internal] update alternative python to envd 0.0s

=> CACHED [internal] update alternative python3 to envd 0.0s

=> CACHED [internal] update alternative pip to envd 0.0s

=> CACHED [internal] update alternative pip3 to envd 0.0s

=> CACHED [internal] init conda zsh env 0.0s

=> CACHED [internal] add conda environment to /home/envd/.zshrc 0.0s

=> CACHED [internal] creating config dir 0.0s

=> CACHED [internal] setting prompt starship config 0.0s

=> CACHED [internal] setting prompt bash config 0.0s

=> CACHED [internal] setting prompt zsh config 0.0s

=> CACHED [internal] configure user permissions for /opt/conda/envs/envd/conda-meta 0.0s

=> CACHED [internal] create work dir: /home/envd/mnist 0.0s

=> CACHED [internal] create file /etc/horust/services/sshd.toml 0.0s

=> CACHED [internal] create file /etc/horust/services/jupyter.toml 0.0s

=> exporting to oci image format 42.1s

=> => exporting layers 0.0s

=> => exporting manifest sha256:29457221a2c9fd407560f505f07430cf7b459eb2617f2c8c2c73b794 0.0s

=> => exporting config sha256:374d0701d53f36bc8f38be3e12cd44e2fc54fc4ff78b477331fe6fbeb3 0.0s

=> => sending tarball 42.0s

On Gitpod, the conda environment creation stage is not cached.

=> => transferring cache-dir: 76.62MB 3.7s

=> docker-image://docker.io/tensorchord/envd-sshd-from-scratch:v0.3.5 3.1s

=> => resolve docker.io/tensorchord/envd-sshd-from-scratch:v0.3.5 3.1s

=> docker-image://docker.io/tensorchord/horust:v0.2.1 2.9s

=> => resolve docker.io/tensorchord/horust:v0.2.1 2.9s

=> docker-image://docker.io/nvidia/cuda:11.2.2-cudnn8-devel-ubuntu20.04 3.3s

=> => resolve docker.io/nvidia/cuda:11.2.2-cudnn8-devel-ubuntu20.04 3.2s

=> install vscode plugin ms-python.python 1.3s

=> CACHED [internal] install built-in packages 0.0s

=> CACHED [internal] create conda directory 0.0s

=> CACHED [internal] install conda 0.0s

=> CACHED [internal] install horust 0.0s

=> CACHED [internal] mkdir for horust service: /etc/horust/services 0.0s

=> CACHED [internal] mkdir for horust log: /var/log/horust 0.0s

=> CACHED [internal] add envd-sshd from tensorchord/envd-sshd-from-scratch:v0.3.5 0.0s

=> [internal] create user group envd 20.6s

I think it is related to the UID: Gitpod runs as the `gitpod` user, whose UID is 33333.

BuildKit in Gitpod triggers garbage collection (GC) during the GPU build.

/cc @VoVAllen

/ # df -h

Filesystem Size Used Available Use% Mounted on

overlay 30.0G 28.8G 1.2G 96% /

tmpfs 31.4G 0 31.4G 0% /proc/acpi

tmpfs 64.0M 0 64.0M 0% /proc/kcore

tmpfs 64.0M 0 64.0M 0% /proc/keys

tmpfs 64.0M 0 64.0M 0% /proc/timer_list

tmpfs 31.4G 0 31.4G 0% /proc/scsi

tmpfs 64.0M 0 64.0M 0% /dev

tmpfs 31.4G 0 31.4G 0% /sys/firmware

shm 64.0M 0 64.0M 0% /dev/shm

/dev/mapper/lvm--disk-gitpod--workspaces

1.2T 134.2G 1.0T 11% /etc/resolv.conf

/dev/mapper/lvm--disk-gitpod--workspaces

1.2T 134.2G 1.0T 11% /etc/hostname

/dev/mapper/lvm--disk-gitpod--workspaces

1.2T 134.2G 1.0T 11% /etc/hosts

/dev/mapper/lvm--disk-gitpod--workspaces

30.0G 28.8G 1.2G 96% /var/lib/buildkit

tmpfs 64.0M 0 64.0M 0% /dev/null

tmpfs 64.0M 0 64.0M 0% /dev/random

tmpfs 64.0M 0 64.0M 0% /dev/full

tmpfs 64.0M 0 64.0M 0% /dev/tty

tmpfs 64.0M 0 64.0M 0% /dev/zero

tmpfs 64.0M 0 64.0M 0% /dev/urandom

overlay 30.0G 28.8G 1.2G 96% /tmp/containerd-mount3239759598

overlay 30.0G 28.8G 1.2G 96% /tmp/containerd-mount2280222997
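A quick headroom check using the `/var/lib/buildkit` row of the `df -h` output and the 2.5GB GPU image size mentioned at the top of the issue. This is a rough sketch under stated assumptions: it treats `df`'s G and Docker Hub's GB as comparable units, and it ignores layers already present in the cache and the difference between compressed and unpacked image sizes.

```python
# Values read from the `df -h` row for /var/lib/buildkit, plus the GPU image
# size quoted earlier. Assumption: G (df) and GB (Docker Hub) are close enough
# for a rough comparison.
BUILDKIT_SIZE_G = 30.0
BUILDKIT_USED_G = 28.8
BUILDKIT_AVAIL_G = 1.2
GPU_IMAGE_G = 2.5

# Recompute the Use% column from the raw sizes.
use_pct = BUILDKIT_USED_G / BUILDKIT_SIZE_G * 100
# Negative headroom means the image would not fit in the space currently free.
free_after_pull_g = BUILDKIT_AVAIL_G - GPU_IMAGE_G
fits = free_after_pull_g >= 0

print(f"/var/lib/buildkit usage: {use_pct:.0f}%")
print(f"headroom after pulling the GPU image: {free_after_pull_g:.1f}G (fits: {fits})")
```

The result (96% used, about -1.3G of headroom) is consistent with BuildKit running GC during the GPU build, though the check alone does not prove that this is the cause.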