temporal


Prevent incorrect service discovery with multiple Temporal clusters

Is your feature request related to a problem? Please describe.

I run multiple separate Temporal clusters within a single k8s cluster. Each Temporal cluster has its own separate set of frontend, history, and matching services as well as persistence. Let's say I am running two Temporal clusters called "A" and "B" in a single k8s cluster. Note that in my setup, there are no networking restrictions on pods within the k8s cluster -- any pod may connect to any other pod if the IP address is known.

I recently encountered a problem where a frontend service from Temporal cluster A appeared to be talking to a matching service from Temporal cluster B. This happened at a time when the pods in both Temporal clusters were being cycled a lot by some AZ balancing automation. This particular k8s cluster is also configured in a way that makes pod IP address reuse more likely than usual.

Both Temporal cluster A and B are running 3 matching nodes each. However, I saw this log line on Temporal cluster A's frontend service:

```
{"level":"info","ts":"2021-01-27T00:34:15.414Z","msg":"Current reachable members","service":"frontend","component":"service-resolver","service":"matching","addresses":"[100.123.207.80:7235 100.123.65.65:7235 100.123.120.28:7235 100.123.60.187:7235 100.123.17.255:7235 100.123.203.172:7235]","logging-call-at":"rpServiceResolver.go:266"}
```

This is saying that Temporal cluster A's frontend service is seeing 6 matching nodes, three from A and three from B. Yikes.

I believe what led to this is something like:

- A matching pod in cluster A gets replaced, releasing its IP address. This IP address remains in cluster A's `cluster_metadata` table.

- A matching pod is created in cluster B, reusing this IP address.

- An event occurs that causes a frontend in cluster A to re-read the node membership for its matching nodes. It finds the original matching node's IP address still in the table, and it can still connect to it even though that address now belongs to a matching node in cluster B.

- Through this matching node in cluster B, the other cluster B matching nodes are located.

My fix for this is to make sure that each Temporal cluster has its own set of membership ports for each service. This would have prevented the discovery process in cluster A from seeing the pods in cluster B since it would be trying to connect on a different port.
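For reference, a minimal sketch of what "its own set of membership ports" might look like in cluster B's Temporal server config. It assumes the `services.<service>.rpc.membershipPort` layout of the stock `development.yaml` and the default ports (membership 6933/6934/6935/6939, gRPC 7233/7234/7235/7239). Cluster A keeps the defaults; the 169xx values are an arbitrary illustrative choice, not a recommendation from the Temporal docs.

```yaml
# Hypothetical excerpt of cluster B's Temporal server config.
# Cluster A keeps the default membership ports (6933, 6934, 6935, 6939).
# In this sketch only the membership ports move; gRPC ports are left at defaults.
services:
  frontend:
    rpc:
      grpcPort: 7233
      membershipPort: 16933   # default 6933
  history:
    rpc:
      grpcPort: 7234
      membershipPort: 16934   # default 6934
  matching:
    rpc:
      grpcPort: 7235
      membershipPort: 16935   # default 6935
  worker:
    rpc:
      grpcPort: 7239
      membershipPort: 16939   # default 6939
```

With this split, a cluster A frontend that reads a stale IP now owned by a cluster B matching pod would probe that IP on 6935, where nothing in cluster B is listening, so the stale entry should fail to join instead of pulling cluster B's ring in. Any container port declarations or Services that reference these ports would presumably need the same change. This is the workaround reported in this thread, not a guarantee.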

Describe the solution you'd like

It may be possible to prevent this by including a check that a given node is indeed part of the correct cluster before adding it to the ring.

Describe alternatives you've considered

I don't believe our k8s environment has an easy way to prevent this using networking restrictions.

Also ran into this issue. My resolution was also to change the membership ports for the different clusters. Normally, I would not expect this to be an issue, but we had two deployments overlap for a time, which would've opened the door for IP reuse.

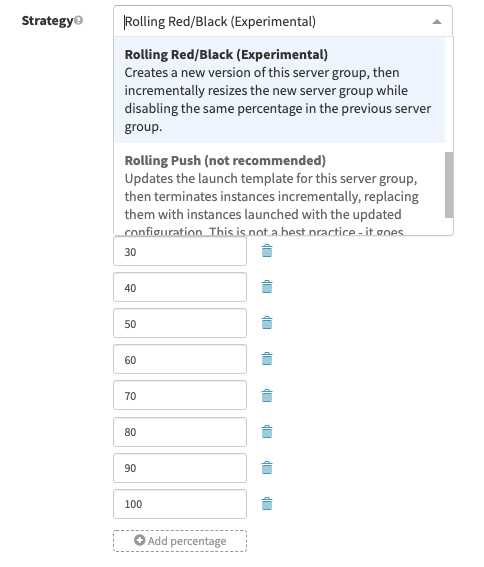

As requested: our Temporal deployment uses a "rolling red/black" strategy, which deploys X percent of the total fleet, then disables a matching X percent, stepping up until the deployment is 100% complete (as seen here).

Note: When Spinnaker disables a Temporal instance in service discovery, we have a signal that shuts down the Temporal process for that particular instance. So while the infrastructure is not destroyed, the Temporal process on that infrastructure is no longer running.

We also experienced exactly the same problem. We have different Temporal clusters set up in our lower environments, and we use k8s namespaces to mimic different DCs. We also don't have dedicated network pools in each namespace, i.e. any pod can connect to any pod belonging to a different namespace.

We observed a similar problem where namespace IDs were unknown to the frontend service, and we were very frequently seeing "namespace id not found" errors in the logs. This happened because the namespace ID was unknown to the pod, which belonged to a different cluster. Since we had a very limited IP range allocated for k8s pods, the probability of a pod in a different cluster being assigned the same IP was higher.

With help from @wxing1292, we resolved this issue by assigning different membership ports to each Temporal cluster.

It seems like others have faced this issue too. We have temporarily adopted the different-port approach, but it would be great if we could get a more reliable fix in Temporal. Attaching a cluster ID to membership would probably help: during pod discovery, we could consider pod IP, port, and cluster ID. Just a thought.

> During pod discovery, we can consider pod IP, port and cluster ID. Just a thought.

In such a solution, I'd expect the cluster ID to be an internal UUID or something, rather than use the cluster name from the configuration, as our environments re-use cluster names.

IMO this is a major issue. This brought down multiple production temporal clusters for me.

How does the service discovery work? In Cadence there were multiple strategies, and DNS-based discovery worked quite well in Kubernetes. This issue suggests that Temporal now automatically discovers Temporal nodes on the network (?), which causes clusters to collapse, yes, but also rings all my security-related bells.

I wouldn't say using mTLS in a Kubernetes cluster between services is an absolute must, but it would be the only protection if this is the case.

Unless I completely misunderstood what is happening.

For now, this might be mitigated by using network policies to limit connectivity to pods within the same k8s namespace: https://kubernetes.io/docs/concepts/services-networking/network-policies/ With services exposed via ingress, that may be a bit more problematic :D

Another option would be to use different certs/keys/CA per cluster and enforce TLS verification. This way it is possible to deny connections between pods from different clusters, because they would be aborted due to non-matching certs.
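A sketch of the per-cluster certificate idea, assuming the `global.tls.internode` block of the Temporal server config; every file path and the `serverName` value are hypothetical placeholders. Cluster B would mirror this with its own CA and certificates, so a cluster A node that reaches a cluster B pod through a reused IP should fail the mutual TLS handshake. This covers internode gRPC; it has not been verified here whether it also protects the membership (ringpop) port, so treat it as a complement to distinct membership ports rather than a replacement.

```yaml
# Hypothetical excerpt of cluster A's Temporal server config.
# All paths and names are placeholders; cluster B uses its own, separate CA.
global:
  tls:
    internode:
      server:
        certFile: /etc/temporal/tls/cluster-a/internode.pem
        keyFile: /etc/temporal/tls/cluster-a/internode.key
        requireClientAuth: true                  # reject peers without a valid client cert
        clientCaFiles:
          - /etc/temporal/tls/cluster-a/ca.pem   # trust only cluster A's CA
      client:
        serverName: internode.cluster-a.example  # hypothetical name on cluster A certs
        rootCaFiles:
          - /etc/temporal/tls/cluster-a/ca.pem   # verify peers against cluster A's CA
```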

FWIW, we ran into this issue twice in our clusters.

While we wait for this experience to be improved at the source, I've gone ahead and added some advice here: https://github.com/temporalio/documentation/pull/662. I'm wholly out of my depth on this one, so reviews and additions are welcome, as it seems to be a common pain point.

The documentation update is a welcome move, thanks. We were hit by this hard a couple of days ago. We have had two Temporal instances (staging and production) running in a single Kubernetes cluster for almost a year without any issues. An automated GKE upgrade changed something and triggered this issue. For some reason, pods from the other environment were still listed in the matching/frontend logs even after we changed the ports. NetworkPolicy wouldn't have made much sense for us because of how we interact with Temporal.

Has anybody added network policies as a stop-gap solution for the time being? I would appreciate it if someone could post the policies they added.

This really depends on your setup: you need to understand network policies and the interactions between services, and write your own rules.

We have a scenario where there are multiple k8s namespaces running Temporal within a cluster.

For example, ns1 and ns2 are the two namespaces.

Instead of defining different membership ports in each namespace, I want to restrict communication between the two namespaces so that their Temporal services don't match with each other.

The policy for ns1 looks something like this:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: temporal-network-policy
  namespace: ns1
spec:
  egress:
    - to:
        - namespaceSelector:
            matchLabels:
              name: ns1
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              name: ns1
  podSelector:
    matchLabels:
      networpolicy: temporal
  policyTypes:
    - Ingress
    - Egress
```

Here, ns1 already has a label defined:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  labels:
    name: namespace1
  name: namespace1
```

and the Temporal pods within ns1 have the label `networpolicy=temporal`.

This policy should restrict ingress/egress traffic for Temporal pods to the namespace ns1 itself. However, I still see Temporal pods in ns1 matching with services in ns2.

Is this not the right way to resolve this issue?

I'd appreciate your help.

First, you need to set a policy that drops all ingress/egress traffic per namespace and then allow only specific traffic. This is safer but needs more work.

Or you may add a policy to drop traffic from specific pods outside of the given namespace.
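A minimal sketch of the first suggestion (deny everything in the namespace by default, then allow only specific traffic). It assumes the cluster's CNI actually enforces NetworkPolicy and that namespaces carry the automatic `kubernetes.io/metadata.name` label (Kubernetes 1.21 and later); the policy names are hypothetical. The same set would be applied in ns2.

```yaml
# 1) Default deny: selects every pod in ns1 and allows no ingress or egress.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all          # hypothetical name
  namespace: ns1
spec:
  podSelector: {}
  policyTypes:
    - Ingress
    - Egress
---
# 2) Allow pods in ns1 to reach, and be reached by, other pods in ns1 only.
#    A bare podSelector inside from/to matches pods in the policy's own namespace.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-namespace      # hypothetical name
  namespace: ns1
spec:
  podSelector: {}
  policyTypes:
    - Ingress
    - Egress
  ingress:
    - from:
        - podSelector: {}
  egress:
    - to:
        - podSelector: {}
---
# 3) Default-deny egress also blocks DNS, so allow lookups against kube-system.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-dns-egress          # hypothetical name
  namespace: ns1
spec:
  podSelector: {}
  policyTypes:
    - Egress
  egress:
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
```

Traffic that legitimately crosses the namespace boundary, such as the persistence database, Elasticsearch, or workers and SDK clients calling the frontend from other namespaces, would need its own explicit allow rules on top of this.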


I also hit this issue while running multiple temporal clusters in single k8s cluster, as noted here on the community forums: https://community.temporal.io/t/not-found-namespace-id-xxxxxx-xxxxx-xxxxx-xxxxx-xxxxxxxxxxxx-not-found/3320/7

A documentation update would be highly appreciated for those who will try to adopt Temporal after us, and obviously we would love the fix as well.

@sw-yx, regarding the added doc "FAQ: Multiple deployments on a single cluster": its membership port change suggestion for Temporal2 uses port numbers based on the default grpcPorts (7233 to 8233, etc.). It would be clearer to base them on the default membershipPorts (e.g. 6933 to 16933, etc.).

The cluster ID has been added to the membership app name: https://github.com/temporalio/temporal/pull/3415.