Old resource deletion hangs when `alpha` flag is off

Expected Behavior

Users should be able to delete any taskrun object using kubectl delete tr --all no matter if alpha feature flag is enabled.

Actual Behavior

After a new pipeline controller is deployed with alpha feature flag off, kubectl delete tr --all command hangs on the taskruns that worked fine under previous controller with alpha feature flag enabled.

Steps to Reproduce the Problem

- Clone the pipeline repo. Change

stablefeature flag toalphain this file. - Run

ko apply -R -f config/ - After the new controller is running, run the example yaml. You can see the pipelinerun will be successfully executed.

- Back to step 1, change the flag back to

stable - Rerun step 2

- Run

kubectl delete tr --allto delete all taskruns that were previously created. You will see the deletion is hanging.

Additional Info

-

Kubernetes version:

Output of

kubectl version:Client Version: version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.1", GitCommit:"86ec240af8cbd1b60bcc4c03c20da9b98005b92e", GitTreeState:"clean", BuildDate:"2021-12-16T11:41:01Z", GoVersion:"go1.17.5", Compiler:"gc", Platform:"linux/amd64"} Server Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.12-gke.1500", GitCommit:"6c11aec6ce32cf0d66a2631eed2eb49dd65c89f8", GitTreeState:"clean", BuildDate:"2022-05-11T09:25:37Z", GoVersion:"go1.16.15b7", Compiler:"gc", Platform:"linux/amd64"} WARNING: version difference between client (1.23) and server (1.21) exceeds the supported minor version skew of +/-1 -

Tekton Pipeline version:

Output of

tkn versionorkubectl get pods -n tekton-pipelines -l app=tekton-pipelines-controller -o=jsonpath='{.items[0].metadata.labels.version}'latest main

@chuangw6 any additionnal elements you can see ? Can you share the logs of the controller and webhook ?

I can't reproduce this - deletion went through right away for me...

Hi @vdemeester ,

If you search the keyworkd alpha in the controller log, you can see the complaints about it. This alpha flag was added here.

I can't reproduce this - deletion went through right away for me...

Hi @abayer , Instead of following step 4 & 5 to turn off alpha flag, can you try to just apply the latest release to see if you can reproduce? Thanks

kubectl apply -f https://storage.googleapis.com/tekton-releases/pipeline/previous/v0.38.0/release.yaml

@chuangw6 Aaaaah - that's fixed in #5215.

@chuangw6 Aaaaah - that's fixed in #5215.

Thanks @abayer for the pointer. I pulled the latest main and followed the above steps. Now the controller doesn't have the complaints, but deleting the old taskruns still hangs.

Any idea?

I was looking at the webhook logs, which is where I saw the conversion errors. Could you post your new webhook log with latest main?

Aaaah, I see https://gist.github.com/chuangw6/c1907a1d1f10a2962e9718b0c21ca4fd#file-pipeline-webhook-log-L342 - that's a problem for sure, but it's fixed in #5210.

I was looking at the webhook logs, which is where I saw the conversion errors. Could you post your new webhook log with latest main?

sure!

Interesting - https://gist.github.com/chuangw6/7c68774399baaf689a7bc0aa189c5ded#file-new-webhook-log-L276 is there, with references to Chains, though I think that's just service account stuff?

Interesting - https://gist.github.com/chuangw6/7c68774399baaf689a7bc0aa189c5ded#file-new-webhook-log-L276 is there,

Interesting. Why can pipeline webhook pick up chains-related stuff? lol

This is chains Controller log. It complaints about alpha flag too.

with references to Chains, though I think that's just service account stuff?

But the line[system:serviceaccounts system:serviceaccounts:tekton-chains system:authenticated] says it's authenticated.

It's on an UPDATE event, so it does validation and fails there. Chains tries to update the object, but cannot because some fields are behind alpha and stable is set. I am not sure why it would block deletion, except maybe if it fails to sign, put the object into the queue to retry, making it somehow un-deletable ?

@chuangw6 can you try to disable chains and see if that happens ?

It's on an

UPDATEevent, so it does validation and fails there. Chains tries to update the object, but cannot because some fields are behind alpha and stable is set. I am not sure why it would block deletion, except maybe if it fails to sign, put the object into the queue to retry, making it somehow un-deletable ?@chuangw6 can you try to disable chains and see if that happens ?

Hi @vdemeester, I tried two things:

-

Had a new cluster with only pipeline installed. No resource deletion hanging problem at all under either

stableoralphaflag. -

Tried to disable chains in my old cluster where both chains and pipelines are installed. I tried to disable chains by two ways

- scale the controller 0.

- delete all the resources under chains namespace by running

ko delete -R -f config/.

Neither of them works. And things even get tricker: after chains is disabled, I cannot delete the taskruns after

alphais turn on again.

It's on an UPDATE event, so it does validation and fails there. Chains tries to update the object, but cannot because some fields are behind alpha and stable is set. I am not sure why it would block deletion, except maybe if it fails to sign, put the object into the queue to retry, making it somehow un-deletable ?

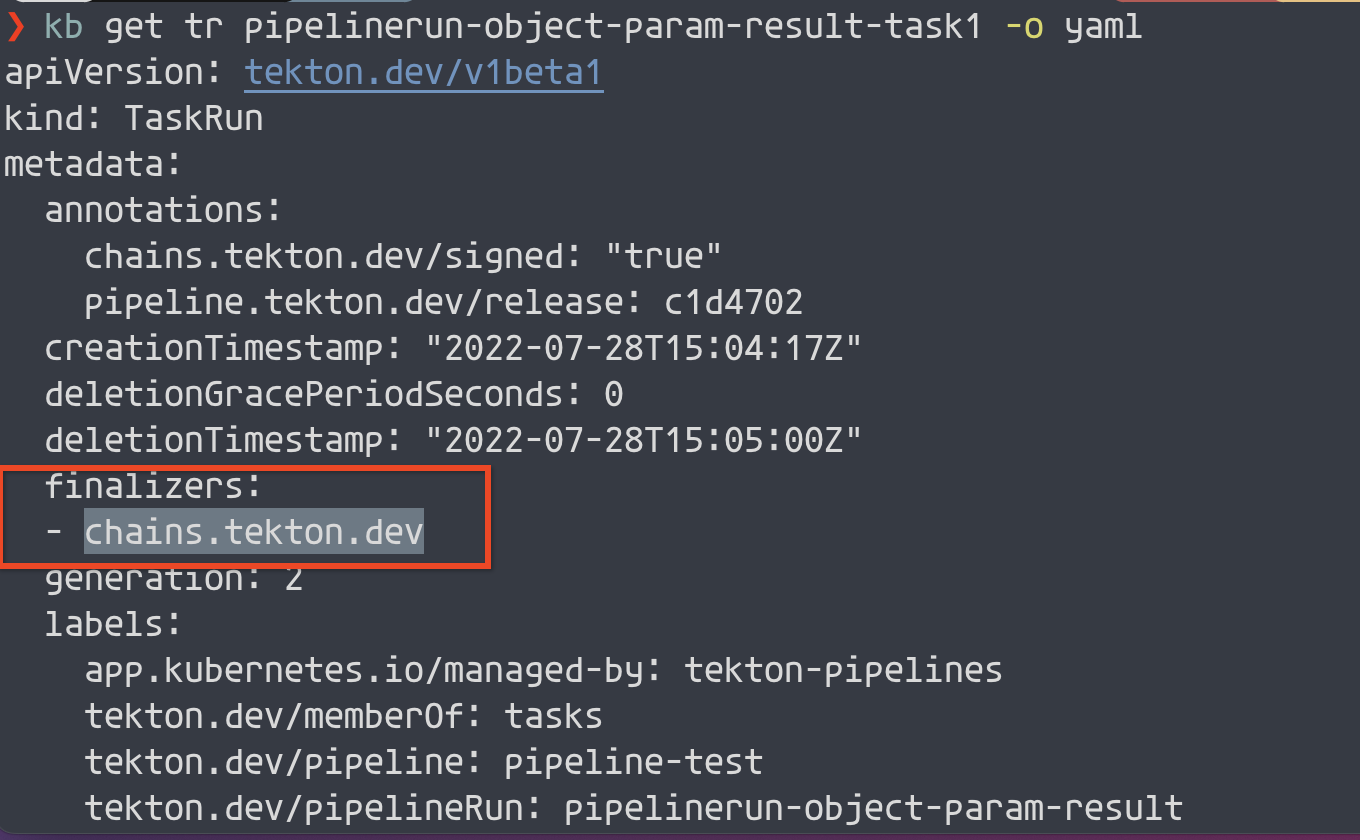

^ @vdemeester this is correct. Chains uses a finalizer to make sure that Run objects don't disappear before they can be attested. Normally, the finalizer is removed once chains has processed the object, but because the alpha flag was disabled, chains can't complete its reconcile (probably when trying to add the chains.tekton.dev/signed annotation) so the finalizer is never removed.

I don't think chains is unique here - any client that tries to update a beta object with alpha fields would fail in a similar way. This kind of unexpected behavior was what I was worried about in https://github.com/tektoncd/pipeline/pull/5005#issuecomment-1170484289 (cc @lbernick @afrittoli)

^ @vdemeester this is correct. Chains uses a finalizer to make sure that Run objects don't disappear before they can be attested. Normally, the finalizer is removed once chains has processed the object, but because the alpha flag was disabled, chains can't complete its reconcile (probably when trying to add the chains.tekton.dev/signed annotation) so the finalizer is never removed.

Thanks @wlynch . That explains why it's still not deletable after chains is disabled - because the taskruns have been added a finalizer.

Quick question: When a client like chains is trying to add a finalizer to the object, it's an UPDATE operation. And whenever update operation happens, pipeline's webhook will be triggered in which alpha flag is checked. Is that correct understanding?

FYI: For those who want to delete the taskruns that hang because of the finalizer, you can use the patch command to remove finalizer, which can help remove resources immediately.

kubectl patch tr/<NAME-OF-THE-TASKRUN-OBJECT> --type json --patch='[ { "op": "remove", "path": "/metadata/finalizers" } ]'

@wlynch this is solely on the v1beta1 object (with or without the api fields to alpha or not). Quick question, is chains using PATCH ? I think we "could" detect it's a PATCH on metadata and not run the validation.

In any case, this is one "potential" problem we have with enable-api-fields, if users enable alpha API to try something and switch back to stable with an object with alpha api fields, that are, thus, not valid anymore. Maybe the webhook could "annotate" objects depending on whether it adds enable-api-fields to alpha (or to any value), so that on later validation, we could act based on that ? 🤔

Quick question: When a client like chains is trying to add a finalizer to the object, it's an UPDATE operation. And whenever update operation happens, pipeline's webhook will be triggered in which alpha flag is checked. Is that correct understanding?

I think it really depends on when you switch back the enable-api-fields to stable. If it's during the execution of a TaskRun, then chains will pick it up once done and.. updating it will fail the validation. I think it also might depend when chains put the finalizer in.

Issues go stale after 90d of inactivity.

Mark the issue as fresh with /remove-lifecycle stale with a justification.

Stale issues rot after an additional 30d of inactivity and eventually close.

If this issue is safe to close now please do so with /close with a justification.

If this issue should be exempted, mark the issue as frozen with /lifecycle frozen with a justification.

/lifecycle stale

Send feedback to tektoncd/plumbing.

I think this should be fixed by https://github.com/tektoncd/pipeline/pull/5610; @chuangw6 are you able to check whether this is still happening?

Hey @lbernick ,

I just tried on the latest main. It seems the issue still persists because of the reason above mentioned by @wlynch.

^ @vdemeester this is correct. Chains uses a finalizer to make sure that Run objects don't disappear before they can be attested. Normally, the finalizer is removed once chains has processed the object, but because the alpha flag was disabled, chains can't complete its reconcile (probably when trying to add the chains.tekton.dev/signed annotation) so the finalizer is never removed. I don't think chains is unique here - any client that tries to update a beta object with alpha fields would fail in a similar way. This kind of unexpected behavior was what I was worried about in https://github.com/tektoncd/pipeline/pull/5005#issuecomment-1170484289 (cc @lbernick @afrittoli)

Stale issues rot after 30d of inactivity.

Mark the issue as fresh with /remove-lifecycle rotten with a justification.

Rotten issues close after an additional 30d of inactivity.

If this issue is safe to close now please do so with /close with a justification.

If this issue should be exempted, mark the issue as frozen with /lifecycle frozen with a justification.

/lifecycle rotten

Send feedback to tektoncd/plumbing.

Rotten issues close after 30d of inactivity.

Reopen the issue with /reopen with a justification.

Mark the issue as fresh with /remove-lifecycle rotten with a justification.

If this issue should be exempted, mark the issue as frozen with /lifecycle frozen with a justification.

/close

Send feedback to tektoncd/plumbing.

@tekton-robot: Closing this issue.

In response to this:

Rotten issues close after 30d of inactivity. Reopen the issue with

/reopenwith a justification. Mark the issue as fresh with/remove-lifecycle rottenwith a justification. If this issue should be exempted, mark the issue as frozen with/lifecycle frozenwith a justification./close

Send feedback to tektoncd/plumbing.

Instructions for interacting with me using PR comments are available here. If you have questions or suggestions related to my behavior, please file an issue against the kubernetes/test-infra repository.