Switch PipelineRun timeout -> TaskRun logic to instead signal the TaskRuns to stop

Changes

Fixes #5127

An alternative approach vs #5133, attempting to eliminate the problem entirely rather than just working around it.

As noted in #5127, the logic around calculating a timeout for a PipelineRun's TaskRun to make sure that the TaskRun's timeout is always going to end before the PipelineRun's timeout ends is problematic. It can result in race conditions where a TaskRun gets timed out, immediately followed by the PipelineRun being reconciled while not yet having hit the end of its own timeout. This change gets rid of that behavior, and instead sets the TaskRun.Spec.Status to a new value, TaskRunTimedOut, with the TaskRun reconciler handling that in the same way it does setting TaskRun.Spec.Status to TaskRunCancelled.

By doing this, we can unify the behavior for both TaskRuns and Runs upon PipelineRuns timing out, given that we already cancel Runs upon PipelineRun timeout, and we can kill off a bunch of flaky outcomes for PipelineRuns.

Also, rather than setting a 1s timeout on tasks which are created after the .timeouts.pipeline, .timeouts.tasks, or .timeouts.finally timeouts have been passed, this change will skip creation of those TaskRuns or Runs completely. However, it will still report those "tasks" as failed, not skipped, since attempted creation of tasks after the appropriate timeout has been reached is a failure case, and that's the existing expected behavior for PipelineTasks which try to start after timeouts have elapsed.

Huge thanks to @jerop for suggesting this approach!

/kind bug

Submitter Checklist

As the author of this PR, please check off the items in this checklist:

- [x] Has Docs included if any changes are user facing

- [x] Has Tests included if any functionality added or changed

- [x] Follows the commit message standard

- [x] Meets the Tekton contributor standards (including functionality, content, code)

- [x] Has a kind label. You can add one by adding a comment on this PR that contains

/kind <type>. Valid types are bug, cleanup, design, documentation, feature, flake, misc, question, tep - [x] Release notes block below has been updated with any user facing changes (API changes, bug fixes, changes requiring upgrade notices or deprecation warnings)

- [x] Release notes contains the string "action required" if the change requires additional action from users switching to the new release

Release Notes

Change PipelineRun timeout behavior for child TaskRuns and Runs to behave like cancellation rather than explicitly setting timeouts on the child tasks at runtime.

/wip /assign @jerop @pritidesai @dibyom @afrittoli @vdemeester

(marking this as WIP because I want to get a good amount of test runs in to be sure it actually fixes the TestPipelineRunTimeout flaky test)

This is a rather significant change to how we actually time out TaskRuns for a timed-out PipelineRun, but I think it's reasonable/safe - this is what we do for cancellation already, so it's not that new, and it's frankly significantly more logical than the current "subtract the elapsed time from the PR start time plus PR timeout, and tada, that's the TR timeout" behavior.

I cherry-picked this onto #5077, since I'm running the e2e tests over and over on there anyway to validate kind jobs, and in the 19 *-integration-tests jobs that have run to completion (1 failed fast to ghcr.io issues) since I did the cherry-pick, none have failed due to TestPipelineRunTimeout. In previous testing on that branch, I saw it fail 7 times out of 34 runs. So...I think this does the trick. =)

/assign @lbernick

since I did the cherry-pick, none have failed due to

TestPipelineRunTimeout. In previous testing on that branch, I saw it fail 7 times out of 34 runs. So...I think this does the trick. =)

@abayer glad to see these results, and thank you! 😀

SGTM 👍🏼

https://github.com/tektoncd/pipeline/pull/5134/commits/6275baa83e1406d2545094f87bb1c276a3ff0597 changes handling of Timeouts.Tasks and Timeouts.Finally to also be done via signaling - I think (or at least hope!) that this is the comprehensive solution. =)

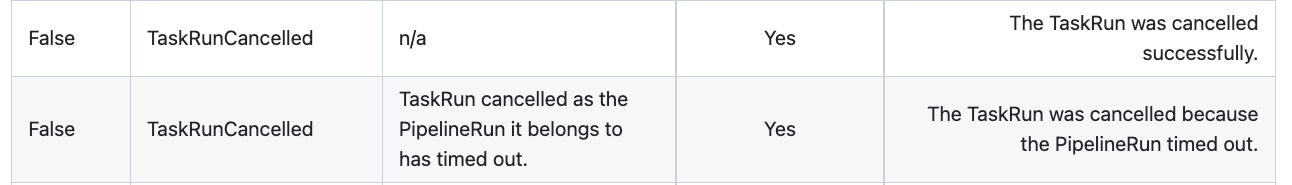

Ok, an annoying quirk of the "just use TaskRunSpecStatusCancelled" approach vs the distinct TaskRunSpecStatusTimedOut - depending on which of the TaskRun or PipelineRun gets reconciled first after the overall PipelineRun timeout is reached, you'll either get a reason of TaskRunCancelled if the PipelineRun gets timed out first, or TaskRunTimedOut if the TaskRun gets reconciled first. That inconsistency is not ideal.

I'm rolling back to having TaskRunSpecStatusTimedOut for now so that at least there's a consistent reason for the TaskRun being killed.

would it make sense to block on https://github.com/tektoncd/pipeline/issues/5150 being implemented first?

Ok, an annoying quirk of the "just use

TaskRunSpecStatusCancelled" approach vs the distinctTaskRunSpecStatusTimedOut- depending on which of theTaskRunorPipelineRungets reconciled first after the overallPipelineRuntimeout is reached, you'll either get a reason ofTaskRunCancelledif thePipelineRungets timed out first, orTaskRunTimedOutif theTaskRungets reconciled first. That inconsistency is not ideal.

If we swap the TaskRun timeout to be just based on the PipelineTask timeout, it seems like this would only happen if the PipelineTask timeout happened to expire at the same time one of the PipelineRun's timeouts expired. Am I missing something?

If we swap the TaskRun timeout to be just based on the PipelineTask timeout, it seems like this would only happen if the PipelineTask timeout happened to expire at the same time one of the PipelineRun's timeouts expired. Am I missing something?

Yup, that's exactly what I was seeing happen, hence rolling back to TaskRunSpecStatusTimedOut for the moment, at least.

And yeah, might make sense to hold this until #5150 lands. I felt like it could go in either order, but that was in part because I was planning on switching to TaskRunSpecStatusCancelled until discovering the timing issue. But #5150's approach should let us set the failure reason for the TaskRun to timed out for a cancellation with a timeout reason, maybe?

I've marked this as WIP until we nail down the ordering between this change and #5150.

chatted with Andrew-- will be easier to implement once #5150 is landed

cc @lbernick, @jerop, @afrittoli, @vdemeester - with #5224 merged, I've updated this PR accordingly, and I think it's ready to go.

Whoops - missed a couple things! Fixing.

/hold

ping @lbernick as a reminder that you said you'd take a look at my attempt to rework after-pipeline-timeout TaskRun/Run creation handling. =)

/hold cancel

Hey @abayer, I would like to understand this before we merge. I will take a look at this after I am done reviewing https://github.com/tektoncd/pipeline/pull/5039 which is not related but open since early this year. I am putting this on hold to make sure I get a chance to review. Please feel free to cancel the hold if you get sufficient reviews 🙏

/hold

Adding this to the v0.39 milestone, because I'd really like to get this done. cc @pritidesai @lbernick for updated reviews. Thanks!

This looks really good other than the one bug I mentioned! Just want to double check that this still fixes the race condition after your changes?

This looks really good other than the one bug I mentioned! Just want to double check that this still fixes the race condition after your changes?

As far as I can tell so far, yes. I haven't been able to reproduce the race, at least, and previously was able to after enough test runs.

EDIT: That is to say - I haven't been able to reproduce the e2e failures caused by the race condition in a whole bunch of runs.

ping @pritidesai - I'd really like to get this merged. =)

really appreciate all your hard work here @abayer, excited to put the timeout flakiness behind us 😁

/approve

Change PipelineRun timeout behavior for child TaskRuns and Runs to behave like cancellation rather than explicitly setting timeouts on the child tasks at runtime.

Hi @abayer, @lbernick, and @jerop, I would like to understand the status transition as part of this fix compared to what we have today.

Change PipelineRun timeout behavior for child TaskRuns and Runs to behave like cancellation rather than explicitly setting timeouts on the child tasks at runtime.

Hi @abayer, @lbernick, and @jerop, I would like to understand the

statustransition as part of this fix compared to what we have today.

I'm not sure what you mean? When the PipelineRunSpec.Timeouts.{Tasks,Finally,Pipeline} is reached, as appropriate, the TaskRun is cancelled. If an explicit PipelineTask.Timeout is specified, then the TaskRun is created with that as its timeout, and will timeout via the TaskRun reconciler if that timeout is reached, or be cancelled if the appropriate PipelineRun timeout is reached first.

Change PipelineRun timeout behavior for child TaskRuns and Runs to behave like cancellation rather than explicitly setting timeouts on the child tasks at runtime.

Hi @abayer, @lbernick, and @jerop, I would like to understand the

statustransition as part of this fix compared to what we have today.I'm not sure what you mean?

What I mean or rather my concern is - is there any change in the state transition which might impact existing use case.

For example, when a pipelineRun is cancelled - it's state transition looks like:

Unknown/Started (controller picked up this pipelineRun) -> Unknown/Running (validated and started running) -> Unknown/Cancelled (user requested cancellation) -> False/Cancelled (cancelled successfully)

Thanks for documenting new status/reason introduced in this PR.

TaskRunCancelled is now overloaded with taskrun cancellation and pipelinerun timing out. The distinguishing factor might be the message. Is it enough for the users to distinguish between cancellation and timeout?

Please know that I am not in favor of raising objection on any changes but I want to make sure we are not making our users unhappy by accidentally breaking/changing existing functionalities just like all of you 👼

What I mean or rather my concern is - is there any change in the state transition which might impact existing use case.

Aaaah - then no. The state transitions for the PipelineRun are the same - well, technically, they're more accurate to what we expect them to be than they were without this, since the race condition caused by slightly-off timing between calculated timeouts for TaskRuns and the timeout for the PipelineRun, sometimes resulting in the PipelineRun getting marked as cancelled when it should be marked as timed out. This change's whole point is to get rid of that inconsistency and always mark the PipelineRun as timed out when it has, in fact, timed out. =)

The change to not create TaskRuns/Runs if we've already passed the relevant PipelineRunSpec.Timeouts.{Tasks,Finally,Pipeline} and instead record the relevant PipelineTask as skipped very explicitly retains the previous state transition as well, in that the PipelineRun will be marked the same way it would now, where a TaskRun/Run that would get created after the relevant PipelineRun timeout had elapsed does get created, but with a 1s timeout on its spec. The old approach results in the "shouldn't really run" pipeline tasks showing up as instantly-failed TaskRuns/Runs, and the new approach specifically records the count of pipeline tasks which are skipped due to being past the relevant timeout, and for the overall PipelineRun status, those tasks are treated equivalently to the instantly-failed ones in the old approach.

Basically, the whole change is built around making sure that the state transitions and overall experience match before and after the change, even though the underlying implementation is radically different. If anything, I tend to err in the direction of avoiding API/status/etc changes too much. =)

TaskRunCancelledis now overloaded with taskrun cancellation and pipelinerun timing out. The distinguishing factor might be the message. Is it enough for the users to distinguish between cancellation and timeout?

So my original idea was to have a new spec status value specifically for "time out this TaskRun because its parent PipelineRun timed out", but the consensus was that it was better to just overload the existing cancellation spec status value with the additional message for clarity. I do think it's sufficient.

Please know that I am not in favor of raising objection on any changes but I want to make sure we are not making our users unhappy by accidentally breaking/changing existing functionalities just like all of you 👼

No worries! =)

Thanks @abayer (once again appreciate all your efforts on this 💯)

So my original idea was to have a new spec status value specifically for "time out this TaskRun because its parent PipelineRun timed out", but the consensus was that it was better to just overload the existing cancellation spec status value with the additional message for clarity. I do think it's sufficient.

Overloading existing cancellation spec status value will not be consistent with how we have added very explicit and specific status values such as "CancelledRunFinally" and "StoppedRunFinally". Those could have been distinguished with messages rather than creating a new status. But it's always easier (and common practice) to rely on status to identify the execution status. The other concern this overloading is, the systems watching this status TaskRunCancelled will start seeing cancelled events/status updates for timed out tasks too. Do you follow?

@pritidesai to share some context (@abayer feel free to respond as well 😁), there's a discussion thread from earlier in this PR - https://github.com/tektoncd/pipeline/pull/5134#discussion_r922197115 - where @afrittoli pointed out that:

I would argue that if it's the

PipelineRunthat times out, theTaskRunitself did not timeout, but it was cancelled because of some timeout logic that theTaskRunitself is not aware of. I think using cancellation might actually help users identity where the timeout occurred. We could add a place for the canceller to give an explanation or reason for the cancellation. Using cancellation might be even more helpful when doing pipeline in pipeline, where the chain of cancellations may go deeper.

I believe this is what influenced the decision to reuse cancellation

I'm honestly open to either using TaskRunCancelled as we are now, or my original approach of a new timeout-specific spec status, but I do think that TaskRunCancelled is more accurate. If a TaskRun times out due to the PipelineTask.Timeout, it'll show up as TaskRunTimedOut, but if it's cancelled due to the PipelineRun timeout settings, it'll show up as TaskRunCancelled. That's entirely accurate, and also matches TaskRun behavior with what Run behavior for PipelineRun timeouts has always been up to this point.

Thank you @jerop for the reference 🤗 thank you @abayer, @lbernick, @vdemeester, and @afrittoli for the feedback 🤗

If a TaskRun times out due to the PipelineTask.Timeout, it'll show up as TaskRunTimedOut, but if it's cancelled due to the PipelineRun timeout settings, it'll show up as TaskRunCancelled.

I am still having hard time settling on this but if most of you have voted for this, please go ahead with it. I still see this as inconsistent and mixing cancellation with timeout. Why is a taskRun cancelled with TaskRunCancelled when pipelineRun has timed out. Irrespective of whether it was my own timeout or my parent's (pipelineRun that I belong to) timeout, as a taskRun, I timed out.

Also, I treat runs a little different from taskRuns since runs are managed by their own controllers whereas taskRuns are managed by the taskRun controller which is part of the same pipelineRun controller.

BTW, I didn't find any mention of RunTimedOut other than the constant that we have defined - https://github.com/tektoncd/pipeline/blob/217b12de93b0211687cee930f703c5c5d4ad8f43/pkg/apis/pipeline/v1alpha1/run_types.go#L121

Also, I haven't got chance to review the code further, I will resume reviewing back next week if it's still open 🤣