AST struct transfer, serialization, deserialization performance enhancement

As mentioned in #2123 and #1392, parse() is currently quite slow.

SWC's Rust parser is of course extremely fast, but the bottleneck is passing ASTs back into JS.

I've done some experiments, and believe I may have the beginnings of a solution to speed it up by 5x or more, enough so it out-performs Babel at least.

https://github.com/overlookmotel/swc-parse-test

The problem

There are 2 bottlenecks involved in transferring AST from Rust to JS:

- Cost of passing AST from Rust to JS (as JSON string)

- Cost of parsing JSON in JS (using

JSON.parse())

Potential solution

I've attempted to alleviate these pinch points by outputting a buffer (JsBuffer) from Rust instead of a JSON string. The buffer is a binary serialization of the AST, and I've written a matching deserializer in JS.

This has 2 advantages:

JsBuffercan be created in main thread's memory so passing it from Rust to JS is zero cost.- AST can be encoded and deserialized more efficiently in binary form.

The results appear to be pretty good.

Provisos

The implementation is only a proof of concept. So far it can only deal with const x = 1; statements. I see no reason why it shouldn't maintain it's speed when support for all AST node types is included, but I could be wrong.

It may also be naive. Until this weekend, I've never written a line of Rust before, so I may be totally missing a reason why it's not possible to integrate this approach into SWC.

The Rust code I've written is, for the same reason, quite embarrassingly bad. The JS code is also hacked together very quickly. So potentially it could get significantly faster with some optimization.

Questions

-

Does this seem like a promising direction?

-

If

parse()(andprint()) can be made much faster, might this make it feasible to maintain support for plugins written in Javascript?

As mentioned above, I don't know Rust at all, so I have limited ability to help with an effort to flesh out these experiments into fully-fledged features. However, I would be very happy to help out on the JS side (the deserializer etc).

Yeah, if we can improve performance of passing ast, I expect the js plugin api to be really useful.

Maybe deserialize via https://github.com/rkyv/rkyv in the wasm and convert into JsObject will be more faster.

@Brooooooklyn rkyv looks great, in particular the zero-cost deserialization would be very efficient for getting an AST into print().

But I'm afraid I don't understand your point about WASM. Can you possibly elaborate?

From benchmark results before, the performance bottleneck in this scenario is Create JsObject in the non-JavaScript side.

And in my experience create JsObject in wasm is faster than in Native side.

Thanks for quick reply. Are you suggesting that SWC would run as WASM in NodeJS, rather than via N-API? Or that you'd have a layer of WASM sitting in between JS and N-API?

Please excuse me if I have the terminology wrong. As I mentioned above, this is my first experience of Rust or Node native module development, so I am really just feeling my way here...

And maybe this is a really stupid idea but...

Do we need to serialize at all? The AST already exists in Rust's memory in a binary format with a well-defined schema (the Rust types). Could that memory be wrapped in a JsBuffer (without copy), passed to JS, and a deserializer in JS would parse this buffer and convert it into JS objects?

The JS deserializer could be generated programmatically from the Rust type definitions.

For plugins, the deserializer could be lazy i.e. only deserialize sections of the buffer for AST nodes as and when they are accessed in the plugin's visitors. For plugins which only operate on a subset of AST nodes, the serialization + deserialization overhead could be avoided entirely for the majority of the AST.

This sounds too good to be true. Am I totally misunderstanding what you can / can't do with memory across the JS/Rust boundary?

For plugins, the deserializer could be lazy i.e. only deserialize sections of the buffer for AST nodes as and when they are accessed in the plugin's visitors.

simd_json has similar appraoch https://github.com/luizperes/simdjson_nodejs/issues/28

For plugins, the deserializer could be lazy i.e. only deserialize sections of the buffer for AST nodes as and when they are accessed in the plugin's visitors. For plugins which only operate on a subset of AST nodes, the serialization + deserialization overhead could be avoided entirely for the majority of the AST.

I expect this to slow down operations by a margin. Visitors in plugins are expected to visit all nodes.

I think we can use binary ser/de library like protobuf, which already has js-based deserializer.

Visitors in plugins are expected to visit all nodes.

Yes, there'd be no point in lazy-deserializing AST nodes if they're all going to be visited.

What I had in mind is to modify the implementation of the plugin system so it minimizes the number of nodes accessed.

For example, the ConsoleStripper plugin example in SWC docs only needs to visit a small fraction of nodes in an AST - CallExpressions and a fairly shallow subset of their child nodes.

I imagined it working something like this:

Plugins would be objects rather than functions (more like Babel I suppose):

const consoleStripperPlugin = {

visitor: {

CallExpression(e: CallExpression): Expression {

if (e.callee.type !== "MemberExpression") return e;

// ... etc ...

return e;

}

}

}

transform()examines the visitor and compiles an array of all the node types the visitor needs to visit initially (in this case just['CallExpression']).transform()calls parse with the source JS + this array of node types.parse()returns serialized AST (as buffer) + an array of pointers into that buffer for where allCallExpressionnodes are located.transform()deserializes all theCallExpressionnodes to JS objects.transform()callsvisitor.CallExpression()with each of these node objects.- When

CallExpression()accessese.callee, that AST node is lazily deserialized.

The plugin can complete its work without accessing the majority of the AST, therefore there's no need to deserialize most of it.

Whatever the serialization format, deserialization in JS is going to be the slowest link in the chain, so it seems worthwhile to avoid as much of that work as possible.

I don't know if you'd be open to changing how plugins work in this direction? Implementation in SWC is more complex, but I imagine could be much more performant and, from a consumer point of view, probably a bit more familiar from Babel.

@kdy1 I tried using serde to serialize to bincode instead of JSON, but was getting errors. I'll give protobuf a go.

Can anyone advise if my idea of using the binary representations of AST nodes in Rust's heap as the source for deserializing is (in theory at least) possible? Maybe it's a bad idea, but I'm curious as to whether it's in the realm of possibility, or whether it falls under "no, it doesn't work like that".

Here is a similar discussion happened in Deno: https://github.com/denoland/deno/issues/2121

deno used similar techniques to optimize in the past, but their structure is much simpler than the AST structure of SWC. The tree of SWC is very deep, which will bring challenges.

Thanks very much for this. Really interesting. I'll dig into it.

@overlookmotel Can you make a PR if it's good enough? I can add some patches to the PR if required. (e.g. rust code)

@kdy1 Yes I'd be happy to. And thanks for offer of help with the Rust code.

My plan was:

- Benchmark different serialization frameworks (protobuf, bincode, cap'n proto, rkyv) on a small set of JS syntax and see which works faster.

- Try to get the winner working for the entire set of AST nodes (I say "try" because I might need some help with that - the errors I was getting with serde/bincode were incomprehensible to me).

- Apply same to

print(). - Sketch out what new plugin system would look like to take advantage of lazy deserialization.

I'm very busy with day job, so this may all take me some weeks/months, but I'm really interested so will progress it whenever I get a chance.

One question:

In my mind, the ideal end point is:

- For SWC to have a Babel-compatible plugin system (i.e. SWC can use Babel plugins without modification).

- For those to run at minimum faster than in Babel.

- Hopefully they run fast enough that there isn't an order of magnitude difference between writing an SWC plugin in JS and in Rust.

i.e. aim of the game is compatibility with existing JS ecosystem, and for writing SWC plugins in JS to be a viable (and still fairly speedy) option rather than "if you don't know Rust, you can't do it".

However, I'm new to the project, so I'm not aware what your vision/priorities for SWC are. Is the above compatible with the direction you want to take?

Sorry, one other question: Are the Typescript type definitions in types.ts and Visitor class in Visitor.ts written by hand, or programmatically generated somehow from the Rust type definitions? If the latter, can you point me to the code which does this please?

However, I'm new to the project, so I'm not aware what your vision/priorities for SWC are. Is the above compatible with the direction you want to take?

Yes, I think compatibility with babel plugin is the most important.

But I don't expect js plugins to be speedy as rust plugins, because they can't be called in threads. It's enough I think, as I'm going to provide lots of port of babel plugins by myself.

Sorry, one other question: Are the Typescript type definitions in types.ts and Visitor class in Visitor.ts written by hand, or programmatically generated somehow from the Rust type definitions? If the latter, can you point me to the code which does this please?

It's written by hand. I actually tried generating it, but I stopped because rust proc macro api is limited.

Yes, I think compatibility with babel plugin is the most important.

That's great to hear. I think my direction is in line with yours then.

I don't expect js plugins to be speedy as rust plugins, because they can't be called in threads.

Ah of course, I hadn't thought of that.

I suppose if plugins were specified as paths (i.e. await transform( js, { plugins: [__dirname + '/myPlugin.js'] } )) then the plugin could be loaded in a worker thread and parallelized. But that's another level of complexity...

Anyway, I have more questions and comments, but I think best for now I just start with step 1 and benchmark the potential serialization methods. I'll let you know any findings. I hope you don't mind if I come back with questions if I'm having trouble with the Rust.

I absolutely do not mind :) Thanks!

There is another way, that is, we modify the fake AST in the node layer, and then generate the smallest instruction set and pass it to Rust.

This is also a basic strategy of cross thread communication in the past. Its core point is to generate the minimum instruction set, anyway.

@yisar I don't think it will work. Even if it's implemented, it will be very slow becuase such api means we have to cross language boundaries frequently.

@kdy1 In fact, there is another another way, that is, If we can find a way so that Rust can communicate directly with V8 and bypass JS, which is also very good.

I don't know if node can do it, but deno does it.

https://github.com/denoland/serde_v8

However, please note that the structure of deno is very simple. It is usually just a queue containing function name and arguments.

If using v8 api directly, we will lost ABI-stable which provided by Node-API

I read this again, and I think the performance will be bad again if we fully imlplement the parser.

JIT is very good for this kind of microbenchmarks.

Turns out that swc was serializing json from the js thread.

Need to revisit this after https://github.com/swc-project/swc/pull/3380 is published

> @overlookmotel/[email protected] bench

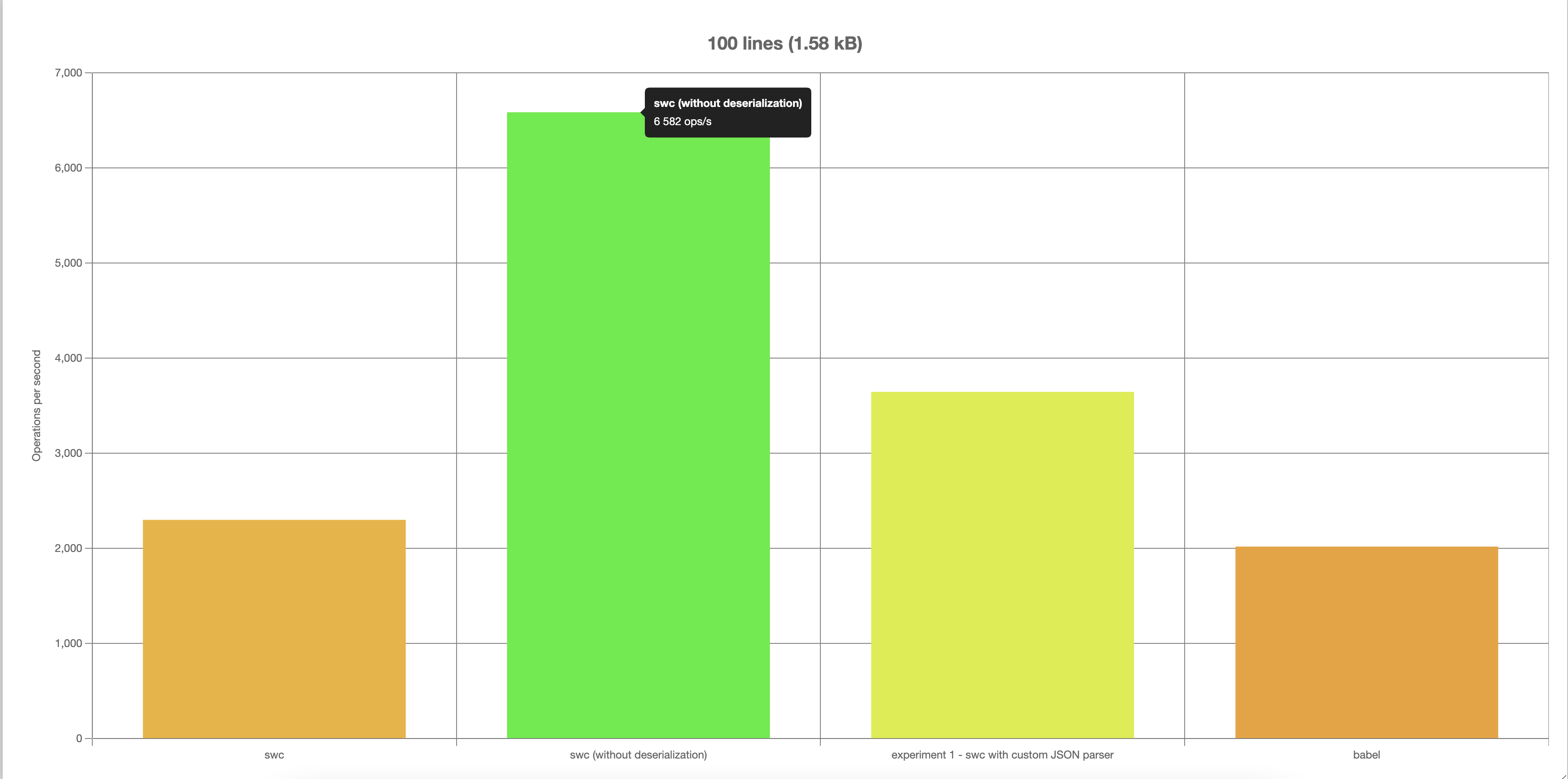

> node benchmark/bench.js

Running "100 lines (1.58 kB)" suite...

Progress: 100%

swc:

2 297 ops/s, ±0.52% | 65.1% slower

swc (without deserialization):

6 582 ops/s, ±0.31% | fastest

experiment 1 - swc with custom JSON parser:

3 642 ops/s, ±0.31% | 44.67% slower

babel:

2 015 ops/s, ±4.30% | slowest, 69.39% slower

Finished 4 cases!

Fastest: swc (without deserialization)

Slowest: babel

Saved to: /Users/kdy1/projects/lab/swc-parse-test/benchmark/100 lines.chart.html

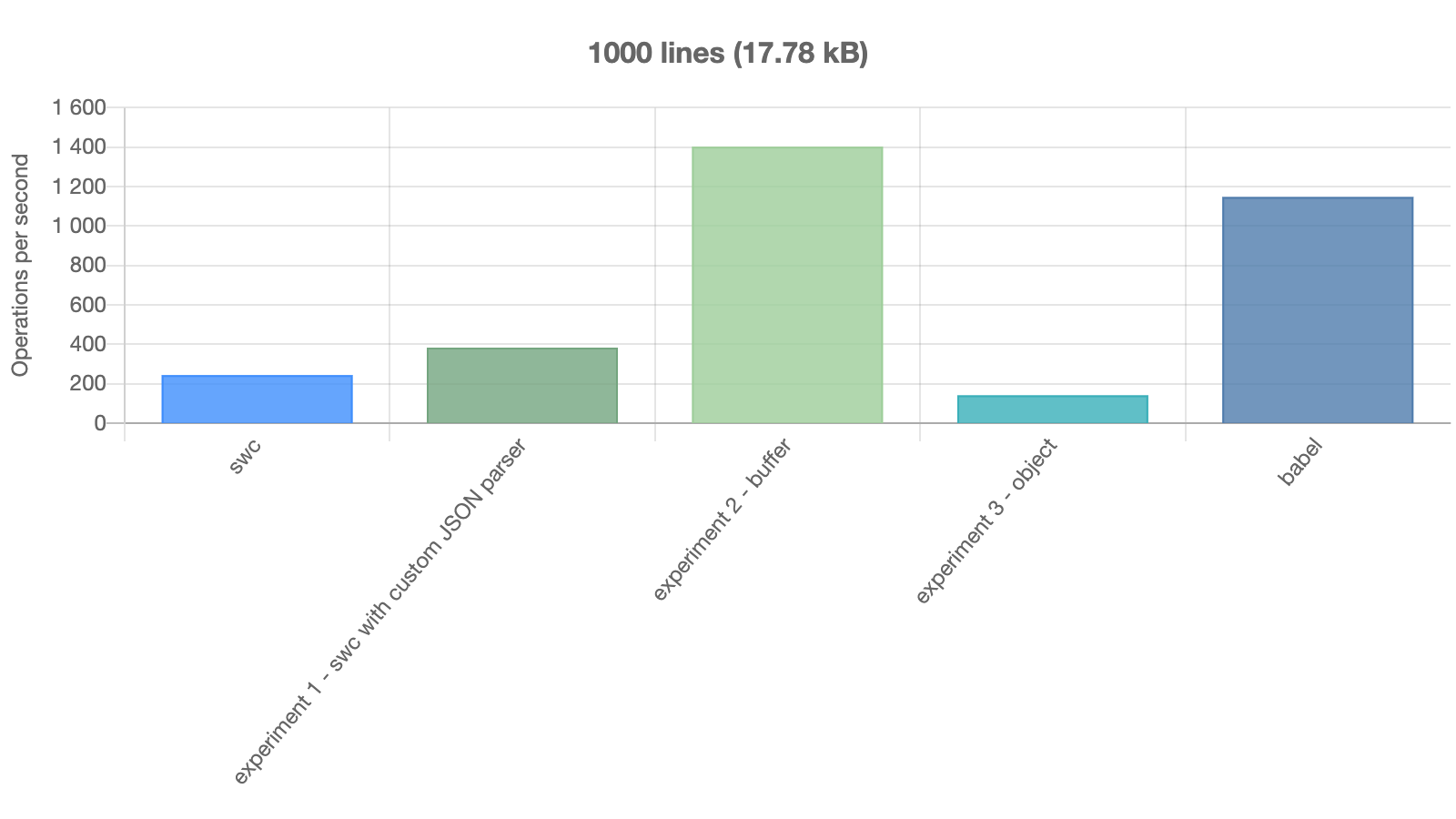

Running "1000 lines (17.78 kB)" suite...

Progress: 100%

swc:

224 ops/s, ±1.52% | 62.6% slower

swc (without deserialization):

599 ops/s, ±0.32% | fastest

experiment 1 - swc with custom JSON parser:

338 ops/s, ±0.45% | 43.57% slower

babel:

215 ops/s, ±3.27% | slowest, 64.11% slower

Finished 4 cases!

Fastest: swc (without deserialization)

Slowest: babel

Saved to: /Users/kdy1/projects/lab/swc-parse-test/benchmark/1000 lines.chart.html

Running "10000 lines (197.78 kB)" suite...

Progress: 100%

swc:

21 ops/s, ±1.64% | 58.82% slower

swc (without deserialization):

51 ops/s, ±2.23% | fastest

experiment 1 - swc with custom JSON parser:

31 ops/s, ±1.58% | 39.22% slower

babel:

5 ops/s, ±88.40% | slowest, 90.2% slower

Finished 4 cases!

Fastest: swc (without deserialization)

Slowest: babel

Saved to: /Users/kdy1/projects/lab/swc-parse-test/benchmark/10000 lines.chart.html

swc-parse-test on master [!?] is 📦 v0.0.1 via v17.4.0 via 🦀 v1.59.0-nightly took 1m8s

❯

Note: In real world, the perf of swc should be multipled by the number a bit smaller than number of cpu cores. So the swc parser is much faster in real world, and more importantly while swc is parsing javascript thread can do what they want to do.

does that mean a js plugin api could be fast enough?

I don't think so, unless we embed some custom runtime for js which allow running js code in parallel

I don't think so, unless we embed some custom runtime for js which allow running js code in parallel

@kdy1 would this be a reasonable thing to think about with QuckJS? Small footprint, supports most (if not all) of JavaScript language semantics, and has a really small footprint compared to trying to do something like embedding Node.

https://bellard.org/quickjs/

On the other hand, you could just embed Deno: https://deno.land/manual/embedding_deno