puffin

puffin copied to clipboard

puffin copied to clipboard

Serverless HTAP cloud data platform powered by Arrow × DuckDB × Iceberg

PuffinDB

Serverless HTAP cloud data platform powered by Arrow × DuckDB × Iceberg

Accelerate DuckDB with 10,000 AWS Lambda functions running on your own VPC

Note: This repository only contains preliminary design documents (Cf. Roadmap)

Kickoff meetup: Rovinj, Croatia, March 29-31, 2023

Introduction

If you are using DuckDB client-side with any client application, adding the PuffinDB extension will let you:

- Distribute queries across thousands of serverless functions and a Monostore

- Read from and write to hundreds of applications using any Airbyte connector

- Collaborate on the same Iceberg tables with other users

- Write back to an Iceberg table with ACID transactional integrity

- Execute cross-database joins (Cf. Edge-Driven Data Integration)

- Translate between 19 SQL dialects

- Invoke remote query generators

- Invoke curl commands

- Execute incremental and observable data pipelines

- Turn DuckDB into a next-generation vector database

- Support the Lance file format for 100× faster random access

- Accelerate and | or schedule the downloading of large tables to your client

- Cache tables and run computations at the edge (Amazon CloudFront × Lambda@Edge)

- Log queries on your data lake

PuffinDB is an initiative of STOIC, and not DuckDB Labs or the DuckDB Foundation.

DuckDB and the DuckDB logo are trademarks of the DuckDB Foundation.

PuffinDB and the PuffinDB logo are trademarks of STOIC (Sutoiku, Inc.).

STOIC is a member of the DuckDB Foundation.

Beliefs

- Nothing beats SQL because nothing can beat maths

- The public cloud is the only truly elastic platform

- Arrow × DuckDB × Iceberg are game changers

- Edge-Driven Data Integration is the way forward

- Clientless + Serverless = Goodness

Rationale

Many excellent distributed SQL engines are available today. Why do we need yet another one?

- True serverless architecture

- Future-proof architecture

- Designed for virtual private cloud deployment

- Designed for small to large datasets

- Designed for real-time analytics

- Designed for interactive analytics

- Designed for transformation and analytics

- Designed for analytics and transactions

- Designed for next-generation query engines

- Designed for next-generation file formats

- Designed for lakehouses

- Designed for data mesh integration

- Designed for all users

- Designed for extensibility

- Designed for embedability

- Optimized for machine-generated queries

- Scalable across large user bases

Outline

- True serverless architecture (run DuckDB on 10,000 Lambda functions)

- Supporting both read and write queries (HTAP)

- Implemented in Python, Rust, and TypeScript (using Bun)

- Powered by Arrow × DuckDB × Iceberg

- Powered by Redis (using Amazon ElastiCache for Redis) for state management

- Accelerated by NAT hole punching for superfast data shuffles

- Integrated with Apache Iceberg, Apache Hudi, and Delta Lake

- Deployed on AWS first, then Microsoft Azure and Google Cloud

- Deployed as two AWS Lambda functions and one Amazon EC2 instance

- Integrated with Amazon Athena (for write queries on lakehouse tables)

- Packaged as an AWS CloudFormation template (using Terraform)

- Released as a free AWS Marketplace product

- Running on your Amazon VPC

- Licensed under MIT License

Features

- Distributed SQL query planner powered by DuckDB

- Distributed SQL query engine powered by DuckDB

- Distributed SQL query execution coordinated by Redis (using Amazon ElastiCache for Redis)

- Distributed data shuffles enabled by direct Lambda-to-Lambda communication through NAT hole punching

- Read queries executed by DuckDB (on AWS Lambda)

- Write queries against Object Store objects executed by DuckDB

- Write queries against Lakehouse tables executed by Amazon Athena

- Built-in Malloy to SQL translator

- Built-in PRQL to SQL translator

- Built-in SQL dialect converter

- Built-in SQL parser | stringifier

- Sub-500ms table scanning API (fetch table partitions from filter predicates) running on standalone function

- Advanced table metadata managed by serverless Metastore

- Concurrent support for multiple table formats (Apache Iceberg, Apache Hudi, and Delta Lake)

- Concurrent suport for multiple Lakehouse instances

- Native support for all Lakehouse Catalogs (AWS Glue Data Catalog, Amazon DynamoDB, and Amazon RDS)

- Support for authentication and authorization

- Support for synchronous and asynchronous invocations

- Support for cascading remote invocations with

SELECT THROUGHsyntax - Joins across heterogenous tables using different table formats

- Joins across tables managed by different Lakehouse instances

- Small filtered partitions cached on AWS Lambda functions

- Query results returned as HTTP response, serialized on Object Store, or streamed through Apache Arrow

- Query results cached on Object Store (Amazon S3) and CDN (Amazon CloudFront)

- Query logs recorded as JSON values in Redis cluster or on data lake using Parquet file

- Transparent support for all file formats supported by DuckDB and the Lakehouse

- Transparent support for all table lifecycle features offered by the Lakehouse

- Planned support for deployment on AWS Fargate

Deployment

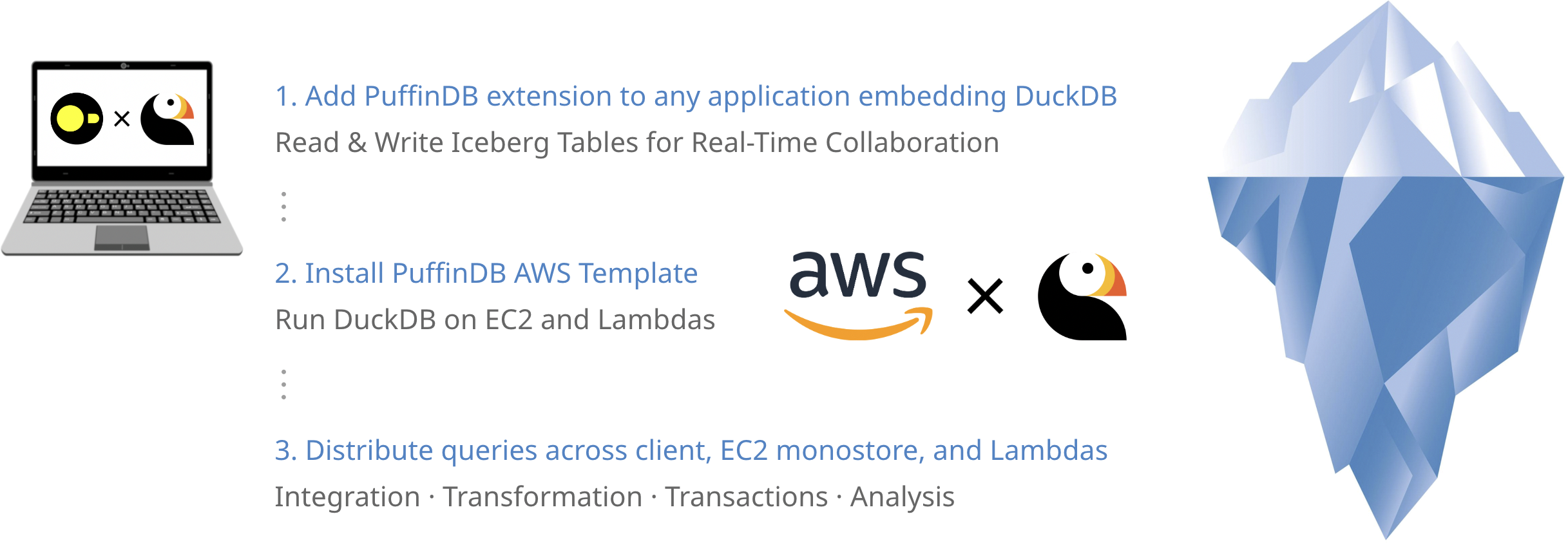

PuffinDB will support four incremental deployment options:

- Node.js and Python modules deeply integrated within your own tool or application

- AWS Lambda functions deployed within your own cloud platform

- AWS CloudFormation template deployed within your own VPC

- AWS Marketplace product added to your own cloud environment

Philosophy

- Developer-first — no non-sense, zero friction

- Lowest latency — every millisecond counts

- Elastic design — from kilobytes to petabytes

FAQ

Please check our Frequently Asked Questions.

Roadmap

Please check our Roadmap.

Sponsors

This project was initiated and is currently funded by STOIC.

Please check our sponsors page for sponsorship opportunities.

Credits

This project leverages several DuckDB features implemented by DuckDB Labs and funded by STOIC:

- Support for Apache Arrow streaming when using Node.js deployment (released)

- Support for user-defined functions when using Node.js deployment (released)

- Support for map-reduced queries with binary map results using new

COMBINEfunction (released) - Support for import of Hive partitions (released)

- Support for partitioned exports with

COPY ... TO ... PARTITION_BY(released) - Support for SQL query parsing | stringifying through standard query API (under development)

- Support for Azure Blob Storage (development starting soon)

We are also considering funding the following projects:

- Support for

SELECT * THROUGH 'https://myPuffinDB.com/' FROM remoteTablesyntax (Cf. EDDI) - Support for

FIXEDfixed-length character strings (Cf. #3) - Support for

CandStpch-dbgenoptions intpchextension

This project was initially inspired by this excellent article from Alon Agmon.

Discussions

Most discussions about this project are currently taking place on the @ghalimi Twitter account.

For a lower-frequency alternative, please follow @PuffinDB.

Notes

PuffinDB should not be confused with the Puffin file format.

Be stoic, be kind, be cool. Like a puffin...