spring-cloud-dataflow

spring-cloud-dataflow copied to clipboard

spring-cloud-dataflow copied to clipboard

CTR app props not resolving when using shell entry point

When Spring Cloud Data Flow launches a CTR with deployment properties for its child tasks it base-64 encodes a portion of the key that will contain the prefix and name of the property for the child deployment property.

For example:

When launching a CTR with a child task named task-a that has a deployment property of deployer.task-a.kubernetes.environment-variables=foo=bar using the default entrypoint, the command line are looks like this:

--composed-task-app-properties.base64_ZGVwbG95ZXIudGFzay1hLmt1YmVybmV0ZXMuZW52aXJvbm1lbnQtdmFyaWFibGVz=foo=bar

The above implementation works.

The problem occurs when a user specified the shell entrypoint. In this case the deployer will change properties such that they can be used as environment variables. It does this by capitalizing the key for the property. The side-effect is that it also capitalizes the base-64 encoded portion. Thus when CTR decodes the base-64 portion of the key it is incorrect. An example below shows an example in how the deployer property is translated for the environment (base-64 is in all caps, where above it is upper and lower case).

COMPOSED-TASK-APP-PROPERTIES_BASE64_ZGVWBG95ZXIUDGLTZXN0YW1WLMT1YMVYBMV0ZXMUZW52AXJVBM1LBNQTDMFYAWFIBGVZ: foo=bar

Users have reported a workaround by adding the composed task runner name to the key for example:

deployer.my-composed-task-name.task-a.deployer.timestamp.kubernetes.environment-variables=foo=bar. Thus CTR assumes the property is meant for it and it will set the child deployer as follows:

COMPOSED-TASK-PROPERTIES: deployer.task-a.deployer.timestamp.kubernetes.environment-variables=foo=bar.

Thanks a lot - also for the first investigation of the issue! Good that you spotted it by this side hint. 👍

Not sure the best way to resolve this. This is the reason that base64 encoding was added

https://github.com/markpollack/spring-cloud-dataflow/commit/ce2601f316fca391911dac170b463b42e1d3e74c

https://github.com/spring-cloud/spring-cloud-dataflow/issues/4566 and the comments in

https://github.com/jvalkeal/spring-cloud-dataflow/commit/f19391307fc0b2bbd096b103785829bed6135fea

are interesting to refresh ourselves on.

- Property key part is now base64 encoded with prefix base64_ because

boot with its binding will silenly remove special characters from

map keys and we could not use wildcards like deployer.*.local.working-directories-root

- Encoded values are then decoded on ctr side.

- One onnoying this is that these base64 encoded values are visible in a manifest but

never directly interact with a user.

Maybe even for the shell entrypoint option, we should be passing in the properties using a single environment variable, just like how it is done for the boot entry point with SPRING_APPLICATION_JSON. We could create a new entrypoint shell-json to reflect this change.

After researching this issue the correct solution is to use the boot entry point. We need to update the documentation so that will say, "I you are using CTR on Kubernetes and you want to pass values via the environment variables, you should use the boot entrypoint."

Hey @cppwfs - I am going to check if this is a possible solution for us, as we decided to use the entrypoint shell for some reasons. I will give an update here, soon.

@klopfdreh Looking forward to your response.

Hey @cppwfs,

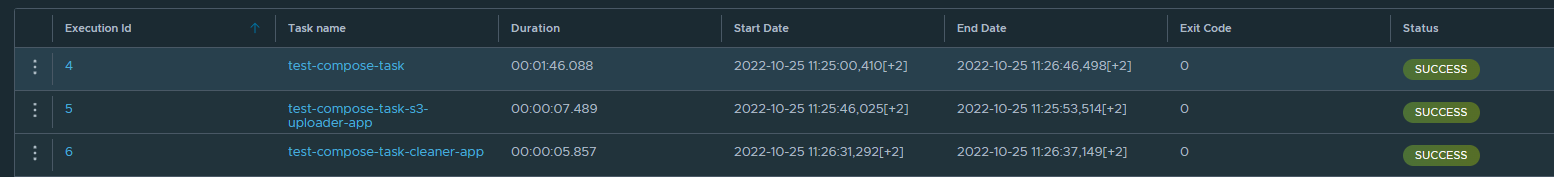

we have tested two scenarios.

-

With entryPointStyle

shelland withdeployer.test-compose-task.s3-uploader-app.kubernetes.environment-variables=...This one does run without any issue and the properties with * are stored in the compose task and the properties withtest-compose-appare stored intest-compose-task-s3-uploader-app-task

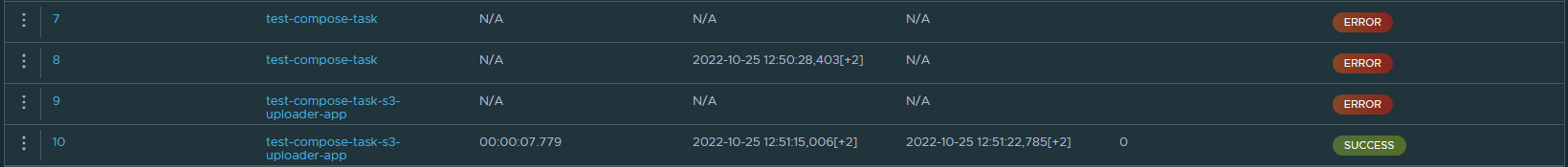

-

With entryPointStyle

bootand withdeployer.s3-uploader-app.kubernetes.environment-variables=...This one caused big issues. No properties have been stored at all. and the task execution shows this. Note: The compose task hasn't even stopped nor executed both of the tasks mentioned in the compose task. Only the first run and than it hangs. The properties are names like this now:

The properties are names like this now:

One thing to mention is that we use spring cloud config server for our task applications. So our task application are configured bootstrap.yml:

spring:

cloud:

config:

enabled: true

uri: ...

username: ...

password: ...

fail-fast: true

name: ...

label: ...

Using the boot entrypoint without using config server the application resolves the original issue. Now we need to discern if the configserver + boot entrypoint have an issue. So can you provide some details so we can replicate.

- Can you provide the logs from the child app as well as the composed task runner?

- Version of SCDF you are using?

- Are you using config server on the Composed Task Runner and its child apps or just the child apps?

Hey @cppwfs - we are working on this, but it will take some time, because we have to ensure that there are no security relevant data in the logs.

Hello @klopfdreh , Thanks for looking into it. Would you be able to answer the other questions?

Hey @cppwfs,

of course.

- We are going to prepare the logs as mentioned

- We are using the following versions

{

"versions": {

"implementation": {

"name": "spring-cloud-dataflow-server",

"version": "2.10.0-M2"

},

"core": {

"name": "Spring Cloud Data Flow Core",

"version": "2.10.0-M2"

},

"dashboard": {

"name": "Spring Cloud Dataflow UI",

"version": "3.3.0-M2"

},

"shell": {

"name": "Spring Cloud Data Flow Shell",

"version": "2.10.0-M2",

"url": "https://repo1.maven.org/maven2/org/springframework/cloud/spring-cloud-dataflow-shell/2.10.0-M2/spring-cloud-dataflow-shell-2.10.0-M2.jar"

}

},

"features": {

"streams": true,

"tasks": true,

"schedules": true,

"monitoringDashboardType": "NONE"

},

"runtimeEnvironment": {

"appDeployer": {

"deployerImplementationVersion": "2.9.0-M2",

"deployerName": "Spring Cloud Skipper Server",

"deployerSpiVersion": "2.9.0-M2",

"javaVersion": "11.0.16.1",

"platformApiVersion": "",

"platformClientVersion": "",

"platformHostVersion": "",

"platformSpecificInfo": {},

"platformType": "Skipper Managed",

"springBootVersion": "2.7.3",

"springVersion": "5.3.22"

},

"taskLaunchers": [

{

"deployerImplementationVersion": "2.8.0-M2",

"deployerName": "KubernetesTaskLauncher",

"deployerSpiVersion": "2.8.0-M2",

"javaVersion": "11.0.16.1",

"platformApiVersion": "v1",

"platformClientVersion": "unknown",

"platformHostVersion": "unknown",

"platformSpecificInfo": {

"namespace": ".......",

"master-url": "https://...../"

},

"platformType": "Kubernetes",

"springBootVersion": "2.7.3",

"springVersion": "5.3.22"

}

]

},

"monitoringDashboardInfo": {

"url": "",

"source": "default-scdf-source",

"refreshInterval": 15

},

"security": {

"isAuthentication": true,

"isAuthenticated": true,

"username": "........",

"roles": [

"ROLE_CREATE",

"ROLE_DEPLOY",

"ROLE_DESTROY",

"ROLE_MANAGE",

"ROLE_MODIFY",

"ROLE_SCHEDULE",

"ROLE_VIEW"

]

}

}

- We are using Spring Cloud Config server only on the child apps, but not on the CTR. The CTR is configured with the following environment variables: SPRING_CLOUD_TASK_BATCH_FAIL_ON_JOB_FAILURE (true) / OAUTH2_CLIENT_CREDENTIALS_SCOPES / OAUTH2_CLIENT_CREDENTIALS_TOKEN_URI / OAUTH2_CLIENT_CREDENTIALS_CLIENT_ID / OAUTH2_CLIENT_CREDENTIALS_CLIENT_SECRET / OAUTH2_CLIENT_CREDENTIALS_CLIENT_AUTHENTICATION_METHOD (CLIENT_SECRET_POST) / TRANSACTION_ISOLATION_LEVEL (ISOLATION_READ_COMMITTED)

Hi! We have prepared the (anonymized) logs for you to have a look at: ctr_runner_boot_entrypoint.log ctr_runner_child_task1_boot_entrypoint.log

Notably, we now also use Config Server for the CTR, though no difference in behavior as to not using it concerning this issue here was noticable.

So with the sample logs above.

We see that CTR launched and i started child_task1.

Did child_task1 finish successfully or did it hang?

The child_task1 finished successfully, but the CTR task did hang.

The second task, child_task2, did not start. Instead, CTR task hangs indefinitely.

Sorry for the noise on the last comment, I deleted immediately after I posted it (not fast enough though :-) ). I remembered from the log that CTR did not launch the second app.

I have seen this before but the circumstance was that the child app and CTR were pointing to 2 different databases. Thus CTR launched the task via SCDF, and monitored the task_execution table that dataflow was associated with. The child app updated the task_execution table in another database. Thus CTR appeared to be hung, because the task_execution entry was never updated in its DB.

I've not been able to replicate the behavior you have reported yet.

Is there another instance of your DB that the child app or CTR could be connecting to?

The tasks we use here all just use one database connection, which is dictated by an envvar passed by the SCDF server itself, which should definitely make it the same everywhere. That can't be it in our case.

We did some further investigations according to this issue, but couldn't solve it, yet. We are using only one database for the CTR and the child task applications.

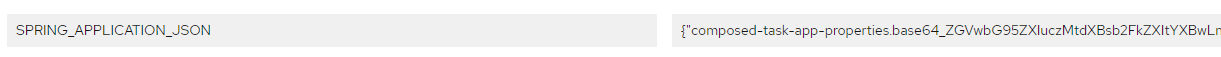

Still, when we set the entryPointStyle to boot the SPRING_APPLICATION_JSON is correctly handed over to the CTR, but after the first child task is completed the CTR gets stuck and does not execute the second child task.

Here is the JSON of the CTR:

{

"composed-task-app-properties.base64_ZGVwbG95ZXIuczMtdXBsb2FkZXItYXBwLmt1YmVybmV0ZXMuZW52aXJvbm1lbnRWYXJpYWJsZXM": "APPLICATION_NAME='s3-uploader-app',ENV='komo',RT_ENV='komo',DISABLE_SAFE_DELETE='true',TRANSACTION_LOG_MOUNT_PATH='/persistent/lf-batch-xa-transaction-logs',TRANSACTION_LOG_BASE_NAME='test-compose-task_20230315_111846-1',OTEL_SERVICE_NAME='s3-uploader-task',AWS_ACCOUNT_ENDPOINT='[https://xxxxxxx',AWS_BUCKET_NAME='xxxxxxx',DRY_RUN='true',S3_UPLOADER_RESOURCES_TO_UPLOAD_0_INPUT_RESOURCES_PATTERN_URI='file:/deployments/*.jar',S3_UPLOADER_RESOURCES_TO_UPLOAD_0_OUTPUT_FOLDER_RESOURCE_URI='s3://xxxxxxx/',SPRING_CLOUD_CONFIG_NAME_VALUE='s3-uploader-task',SPRING_CLOUD_CONFIG_LABEL_VALUE='komo',RUN_ID='test-compose-task_20230315_111846-1',TASK_IDENTIFIER='test-compose-task_20230315_111846'](https://xxxxxxx',aws_bucket_name='xxxxxxx',dry_run='true',s3_uploader_resources_to_upload_0_input_resources_pattern_uri='file/deployments/*.jar',S3_UPLOADER_RESOURCES_TO_UPLOAD_0_OUTPUT_FOLDER_RESOURCE_URI='s3://xxxxxxx/',SPRING_CLOUD_CONFIG_NAME_VALUE='s3-uploader-task',SPRING_CLOUD_CONFIG_LABEL_VALUE='komo',RUN_ID='test-compose-task_20230315_111846-1',TASK_IDENTIFIER='test-compose-task_20230315_111846')",

"management.metrics.export.prometheus.enabled": "true",

"spring.datasource.driverClassName": "oracle.jdbc.OracleDriver",

"dataflowServerUri": https://xxxxxxx:8443/lf-batch-spring-cloud-data-flow-server/,

"spring.cloud.task.name": "test-compose-task",

"composed-task-app-properties.base64_ZGVwbG95ZXIuY2xlYW5lci1hcHAua3ViZXJuZXRlcy52b2x1bWVNb3VudHM": "[{name: 'lf-efs-pvc-komo', mountPath: '/persistent'}]",

"management.metrics.tags.service": "task-application",

"composed-task-app-properties.base64_ZGVwbG95ZXIuY2xlYW5lci1hcHAua3ViZXJuZXRlcy5lbnZpcm9ubWVudFZhcmlhYmxlcw": "APPLICATION_NAME='cleaner-app',ENV='komo',RT_ENV='komo',TRANSACTION_LOG_MOUNT_PATH='/persistent/lf-batch-xa-transaction-logs',TRANSACTION_LOG_BASE_NAME='test-compose-task_20230315_111846-2',OTEL_SERVICE_NAME='cleaner-task',DRY_RUN='true',RESOURCE_URIS='file:/deployments/*',SPRING_CLOUD_CONFIG_NAME_VALUE='cleaner-task',SPRING_CLOUD_CONFIG_LABEL_VALUE='komo',DISABLE_SAFE_DELETE='false',RUN_ID='test-compose-task_20230315_111846-2',TASK_IDENTIFIER='test-compose-task_20230315_111846'",

"composed-task-app-properties.base64_ZGVwbG95ZXIuY2xlYW5lci1hcHAua3ViZXJuZXRlcy52b2x1bWVz": "[{name: 'lf-efs-pvc-komo', persistentVolumeClaim: { claimName: 'lf-efs-pvc-komo', readOnly: 'false' }}]",

"management.metrics.export.prometheus.rsocket.host": "xxxxxxx",

"composed-task-app-properties.base64_ZGVwbG95ZXIuczMtdXBsb2FkZXItYXBwLmt1YmVybmV0ZXMuZGVwbG95bWVudExhYmVscw": "xxxxxxx",

"composed-task-app-properties.base64_ZGVwbG95ZXIuczMtdXBsb2FkZXItYXBwLmt1YmVybmV0ZXMuc2VjcmV0S2V5UmVmcw": "[{envVarName: 'AWS_IAM_USER_ACCESS_KEY_ID', secretName: 'aws-s3-credentials-komo', dataKey: 'AWS_IAM_USER_ACCESS_KEY_ID'},{envVarName: 'AWS_IAM_USER_SECRET_KEY', secretName: 'aws-s3-credentials-komo', dataKey: 'AWS_IAM_USER_SECRET_KEY'}]",

"composed-task-app-properties.base64_ZGVwbG95ZXIuczMtdXBsb2FkZXItYXBwLmt1YmVybmV0ZXMudm9sdW1lcw": "[{name: 'lf-efs-pvc-komo', persistentVolumeClaim: { claimName: 'lf-efs-pvc-komo', readOnly: 'false' }}]",

"management.metrics.tags.application": "${spring.cloud.task.name:unknown}-${spring.cloud.task.executionid:unknown}",

"platform-name": "default",

"graph": "test-compose-task-s3-uploader-app && test-compose-task-cleaner-app",

"composed-task-app-properties.base64_ZGVwbG95ZXIuY2xlYW5lci1hcHAua3ViZXJuZXRlcy5kZXBsb3ltZW50TGFiZWxz": "xxxxxxx",

"spring.datasource.url": "jdbc:oracle:xxxxxxx",

"composed-task-app-properties.base64_ZGVwbG95ZXIuczMtdXBsb2FkZXItYXBwLmt1YmVybmV0ZXMudm9sdW1lTW91bnRz": "[{name: 'lf-efs-pvc-komo', mountPath: '/persistent'}]",

"composed-task-app-properties.base64_ZGVwbG95ZXIuczMtdXBsb2FkZXItYXBwLmt1YmVybmV0ZXMubGltaXRzLmNwdQ": "375m",

"composed-task-app-properties.base64_ZGVwbG95ZXIuczMtdXBsb2FkZXItYXBwLmt1YmVybmV0ZXMubGltaXRzLm1lbW9yeQ": "1000Mi",

"management.metrics.export.prometheus.rsocket.port": "7001",

"management.metrics.export.prometheus.rsocket.enabled": "true",

"composed-task-app-properties.base64_ZGVwbG95ZXIuY2xlYW5lci1hcHAua3ViZXJuZXRlcy5saW1pdHMubWVtb3J5": "1000Mi",

"composed-task-app-properties.base64_ZGVwbG95ZXIuY2xlYW5lci1hcHAua3ViZXJuZXRlcy5saW1pdHMuY3B1": "375m"

}

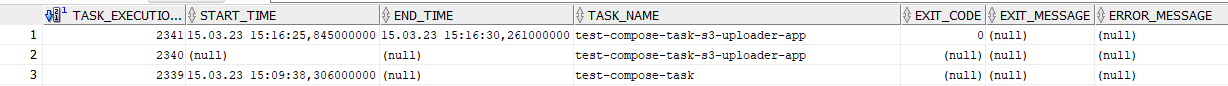

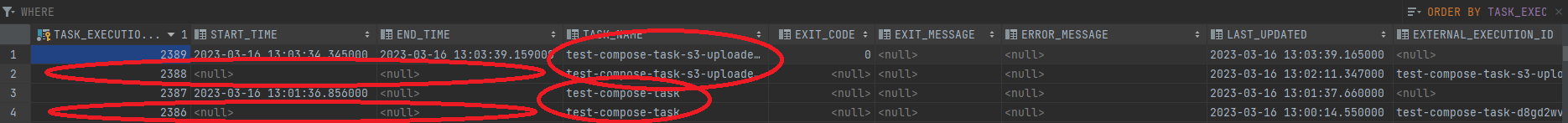

We noticed that there are two entries in the database for the first child task which has been started.

So what happens is that the first one 2340 is started and checked all the time, but the end time is written to 2341. Because the CTR only reads the task with the execution 2340 it gets stuck - the end time is not written here.

Because we are using Oracle we had to change the TRANSACTION_ISOLATION_LEVEL to ISOLATION_READ_COMMITED - can this be the issue?

That is possible with ISOLATION_READ_COMMITED in that a non-repeatable read is one in which data read twice inside the same transaction cannot be guaranteed to contain the same value.

Hey @cppwfs - because we use Oracle and it does not support ISOLATION_REPEATABLE_READ - what should I use for a ISOLATION_LEVEL? Any advice here?

Edit: The same CTR is not going to run more than once at a time.

Before we go down that path, is the spring.cloud.task.executionid set for the child application?

Yes that is what I meant with 2340 and 2341 in this comment: https://github.com/spring-cloud/spring-cloud-dataflow/issues/5070#issuecomment-1470108752

you can also see it in the screenshot at this comment.

Hi @klopfdreh ,

Let me ask a different way. SCDF creates an empty entry in the task_execution table when it launches a task, much like what we see in 2340. Then it passes the spring.cloud.task.executionid=2340 to the task. So when the task launches it will use task-execution-id of 2340. But if the task does not get the spring.cloud.task.executionid=2340 then it will create its own task_execution entry something like what we see in 2341.

So when CTR requests the launch for the task it will get the 2340 and use that to determine if the task is still running.

I'm curious if the child task is getting the spring.cloud.task.executionid=2340.

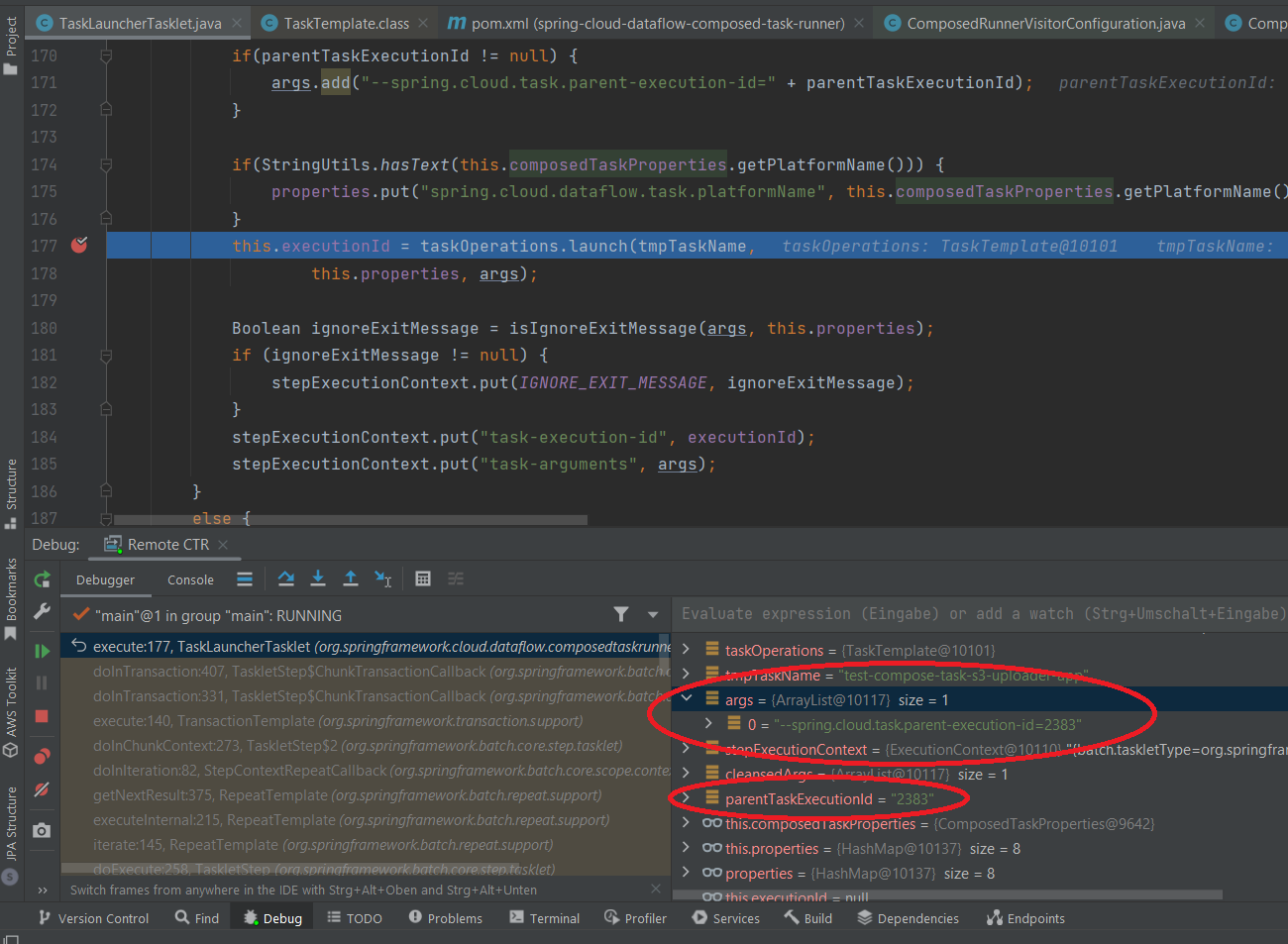

I had a look at the CTR when it launches the task and remote debugged it: https://github.com/spring-cloud/spring-cloud-dataflow/blob/main/spring-cloud-dataflow-composed-task-runner/src/main/java/org/springframework/cloud/dataflow/composedtaskrunner/TaskLauncherTasklet.java#L177

There is no --spring.cloud.task.executionid but only a --spring.cloud.task.parent-execution-id which is handed over to the launch call via the args parameter. This one is received before from the SCDF server with the following call result = this.taskExplorer.getTaskExecutionIdByJobExecutionId(stepContribution.getStepExecution().getJobExecutionId()); in public String getParentTaskExecutionId(StepContribution stepContribution)

When the launch call is made the CTR receives the child executionId from the SCDF server, because it is a rest operation. The resulting executionId is put into the stepExecutionContext. see https://github.com/spring-cloud/spring-cloud-dataflow/blob/main/spring-cloud-dataflow-composed-task-runner/src/main/java/org/springframework/cloud/dataflow/composedtaskrunner/TaskLauncherTasklet.java#L184

The TaskLauncherTasklet also preserves the executionId in a field and checks if it is set. If it is already set the child task has been started and the Thread.sleep(this.composedTaskProperties.getIntervalTimeBetweenChecks()); is called.

So the CTR is not using a given child executionId but creates it by itself.

So my issue still is: Why are two entries are created. By the way I changed the isolation level to ISOLATION_SERIALIZABLE which also doesn't fix the issue.

I had a look at the database again and the compose task is also written twice into the database when started.

This does not happen when the entryPointStyle is shell

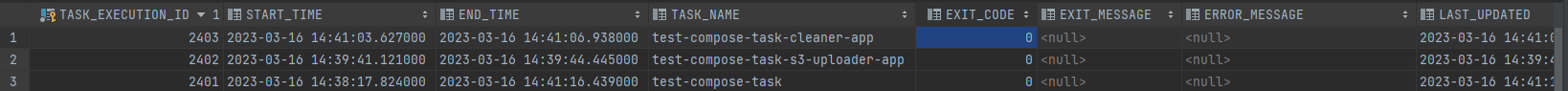

In comparison to the previous post. Here are the TASK_EXECUTION table entries with entryPointStyle shell and the deprecated way of applying the child task properties. deployer.test-compose-task.s3-uploader-app.kubernetes... and deployer.test-compose-task.cleaner-app.kubernetes...

As you can see: No duplicated entries and the START_TIME and END_TIME is applied correctly - also for the compose task

Cool this helps, we'll take a look.

May I ask if there are any updates, yet?

Sorry for the delay in response.

If you create a new definition with a new name does this still occur?

Yes when we switched the entry point we previously cleared the database and re-registered all task applications / ctr task definitions. The issue still occurs in this case. Later on when we noticed that there is no difference we continued testing without recreating the database.