model_optimization

Issue in model quantization

Issue Type

Bug

Source

source

MCT Version

1.4.0

OS Platform and Distribution

colab

Python version

Python 3.7.13

Describe the issue

When I tried to convert my own model, I got the error below. I trained the model on Colab and loaded the saved Keras model directly.

The error occurred while converting the model with the following script: https://colab.research.google.com/gist/reuvenperetz/0d2807c02295cd5eaad4fb78462ed52d/issue.ipynb

16 import operator

17

---> 18 import torch

19 from torch import add, flatten, reshape, split, unsqueeze, dropout, sigmoid, tanh, chunk

20 from torch.nn import Conv2d, ConvTranspose2d, Linear, BatchNorm2d

/usr/local/lib/python3.7/dist-packages/torch/__init__.py in <module>

200 if USE_GLOBAL_DEPS:

201 _load_global_deps()

--> 202 from torch._C import * # noqa: F403

203

204 # Appease the type checker; ordinarily this binding is inserted by the

RuntimeError: THPDtypeType.tp_dict == nullptr INTERNAL ASSERT FAILED at "../torch/csrc/Dtype.cpp":139, please report a
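The traceback above fails inside `import torch`, not inside any quantization step. A minimal diagnostic sketch for checking which frameworks are installed in the runtime without importing them, so that a broken PyTorch install is not triggered again. The helper name `framework_available` is hypothetical and not part of MCT. As an assumption not confirmed in this thread: this assertion is often reported when `import torch` is retried in the same interpreter session after an earlier failed import, so restarting the Colab runtime before re-running may be worth trying.

```python
import importlib.util


def framework_available(name: str) -> bool:
    """Return True if a top-level package can be located, without importing it."""
    try:
        return importlib.util.find_spec(name) is not None
    except (ImportError, ValueError):
        # find_spec can raise for half-initialized modules; treat as unavailable.
        return False


# Report which of the relevant packages the current runtime can see.
for pkg in ("tensorflow", "torch", "model_compression_toolkit"):
    status = "found" if framework_available(pkg) else "not found"
    print(f"{pkg}: {status}")
```

If `torch` is reported as found but is not needed for a Keras-only workflow, its installation state is the first thing to inspect.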

Expected behaviour

No response

Code to reproduce the issue

I used the same code but I loaded my Keras model.

https://colab.research.google.com/gist/reuvenperetz/0d2807c02295cd5eaad4fb78462ed52d/issue.ipynb

Log output

No response

Hi @dsnsabari, are you trying to quantize a Keras or a PyTorch model?

Hi @reuvenperetz ,

I am trying to quantize the tf.keras model.

@dsnsabari Can you please provide code to reproduce the error?

@reuvenperetz Can you send me the support email address?

@dsnsabari We don't have a support email. Could you please write the code here or create a gist?

@reuvenperetz ,

I loaded the model as shown below and used the same script you shared for model quantization. Please let me know once you have downloaded the model, and I will then remove public access.

import tensorflow as tf

model = tf.keras.models.load_model('/content/gdrive/model_original_pretrained.h5')

import model_compression_toolkit as mct

from tensorflow.keras.applications.mobilenet import MobileNet

import numpy as np

def representative_data_gen() -> list:
    # One random calibration batch: 1 image, 160x160 pixels, 3 channels.
    return [np.random.randn(1, 160, 160, 3)]

target_platform_cap = mct.get_target_platform_capabilities('tensorflow', 'default')

#model = MobileNet()

quantized_model, quantization_info = mct.keras_post_training_quantization(
    model,
    representative_data_gen,
    target_platform_capabilities=target_platform_cap,
    n_iter=1)

@dsnsabari Thanks! I can't load your model; I get the following error:

model = load_model('path')

File "lib/python3.8/site-packages/keras/utils/traceback_utils.py", line 67, in error_handler

raise e.with_traceback(filtered_tb) from None

File "lib/python3.8/site-packages/keras/utils/generic_utils.py", line 793, in func_load

code = marshal.loads(raw_code)

ValueError: bad marshal data (unknown type code)

Could the way you saved the model be causing this error?
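The traceback shows Keras calling `marshal.loads` in `func_load`, which is how it restores a Python function stored in the model file. The `marshal` format is specific to the Python version, and the two tracebacks in this thread come from Python 3.7 (Colab) and Python 3.8. One plausible explanation, not confirmed in the thread, is that the model contains a serialized function saved under one version and loaded under the other. A minimal sketch of the round trip, using hypothetical helper names:

```python
import marshal
import sys
import types


def dump_function_code(func) -> bytes:
    """Serialize a function's bytecode with marshal (version-specific format)."""
    return marshal.dumps(func.__code__)


def load_function_code(raw: bytes, name: str = "restored"):
    """Rebuild a function from marshalled bytecode.

    marshal.loads raises an error (for example ValueError: bad marshal data)
    when the bytes were written by an incompatible Python version.
    """
    code = marshal.loads(raw)
    return types.FunctionType(code, globals(), name)


double = lambda x: x * 2
raw = dump_function_code(double)
restored = load_function_code(raw)

# Works here because dump and load happen in the same interpreter.
print("Python", sys.version_info[:2], "->", restored(21))
```

Loading the model with the same Python minor version that saved it is a quick way to test this hypothesis.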

@reuvenperetz, I am able to load the model in Colab. Can you try it and let me know?

@dsnsabari I can't load it using this gist.

@reuvenperetz, I ran the code in the gist and it didn't download the model. Can you download the model file and read it from Google Drive?

You should pass the full model path, including the model file name.

model = tf.keras.models.load_model('/content/gdrive/model_original_pretrained.h5')

@reuvenperetz I am still getting the same error. Can you load the model directly and test it?

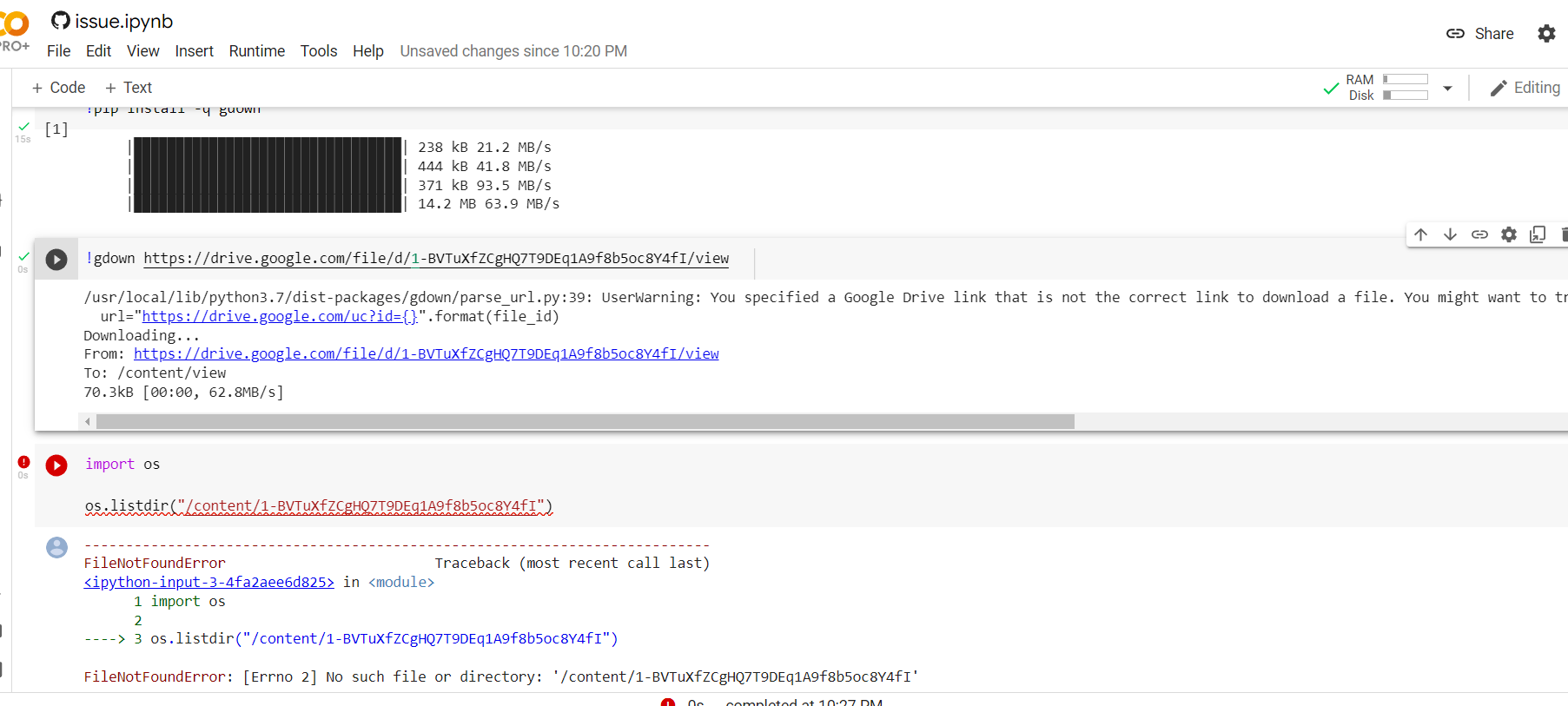

/usr/local/lib/python3.7/dist-packages/gdown/parse_url.py:39: UserWarning: You specified a Google Drive link that is not the correct link to download a file. You might want to try `--fuzzy` option or the following url: https://drive.google.com/uc?id=None

url="https://drive.google.com/uc?id={}".format(file_id)

Downloading...

From: https://drive.google.com/file/d/1-BVTuXfZCgHQ7T9DEq1A9f8b5oc8Y4fI

To: /content/1-BVTuXfZCgHQ7T9DEq1A9f8b5oc8Y4fI

70.3kB [00:00, 2.18MB/s]
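The gdown warning above says the `/file/d/<id>` sharing link is not a direct download link and suggests the `https://drive.google.com/uc?id=<id>` form. The 70.3kB download may therefore be the sharing page rather than the model file, though the log does not confirm this. A minimal sketch, with hypothetical helper names, that converts one link form into the other using only the URL patterns visible in the log:

```python
import re
from typing import Optional


def drive_file_id(url: str) -> Optional[str]:
    """Extract the file id from a Google Drive '/file/d/<id>' sharing link."""
    match = re.search(r"/file/d/([\w-]+)", url)
    return match.group(1) if match else None


def direct_download_url(url: str) -> Optional[str]:
    """Build the 'uc?id=<id>' form named in the gdown warning, or None."""
    file_id = drive_file_id(url)
    if file_id is None:
        return None
    return f"https://drive.google.com/uc?id={file_id}"


share_link = "https://drive.google.com/file/d/1-BVTuXfZCgHQ7T9DEq1A9f8b5oc8Y4fI"
print(direct_download_url(share_link))
```

The resulting URL can then be passed to gdown in place of the sharing link.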

@dsnsabari I can't use it, as you removed public access to the model.

@reuvenperetz I have not removed public access to the model. I asked you to download the model locally. Can you check whether you are able to download it locally? I only removed the model link from the thread.

@reuvenperetz I can still view the model from the shared link. Why are you not downloading and checking it?

If you are not able to solve the issue, please pass it on to another team member.

Dear @dsabarinathan, in order to understand the source of the issue we will need the full error stack trace. Also, a gist that reproduces the error could help us a lot, if this is possible.

Stale issue message

I have also encountered this problem. Have you resolved it?