gloo

gloo copied to clipboard

gloo copied to clipboard

Envoy will send requests to a closed connection

Gloo Edge Version

1.11.x (latest stable)

Kubernetes Version

1.22.x

Describe the bug

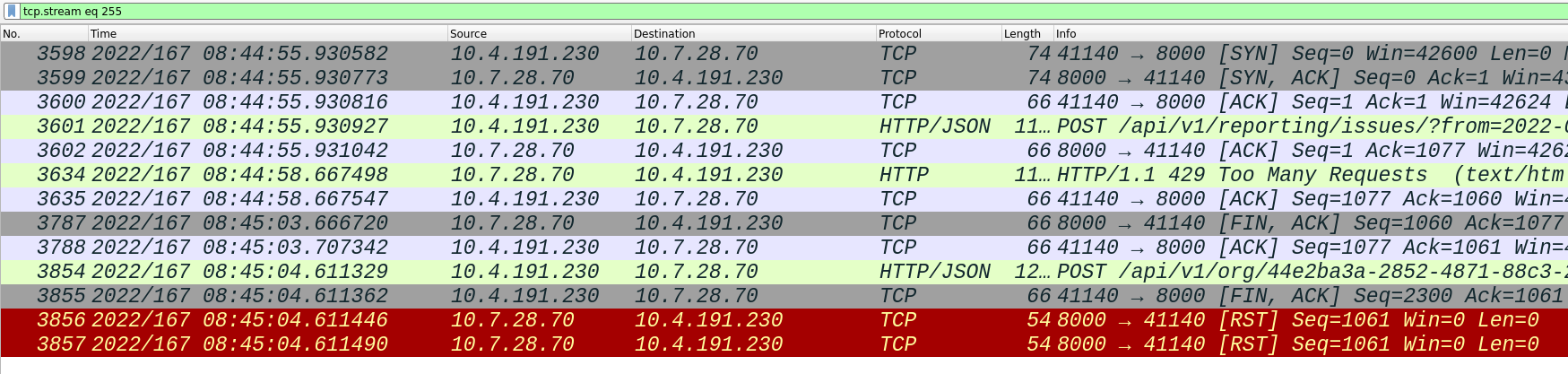

With an http1 upstream envoy will ACK a FIN, but send a request anyways shortly after.

Possible regression of: https://github.com/envoyproxy/envoy/issues/6190

Customer Details:

https://solo-io.slack.com/archives/G01MKQQ0Q3W/p1655824554856459 https://solo-io.slack.com/archives/G01MKQQ0Q3W/p1655824122208049

Steps to reproduce the bug

-

Install Gloo Edge

-

deploy test app

apiVersion: apps/v1

kind: Deployment

metadata:

name: expressapp

namespace: gloo-system

labels:

app: expressapp

spec:

replicas: 1

selector:

matchLabels:

app: expressapp

template:

metadata:

labels:

app: expressapp

spec:

containers:

- name: expressapp

image: kevinshelaga/expressapp:latest

ports:

- containerPort: 3000

---

apiVersion: v1

kind: Service

metadata:

name: expressapp-service

namespace: gloo-system

spec:

selector:

app: expressapp

ports:

- protocol: TCP

port: 3000

targetPort: 3000

type: ClusterIP

- create VS

apiVersion: gateway.solo.io/v1

kind: VirtualService

metadata:

name: default-http

namespace: gloo-system

spec:

virtualHost:

domains:

- "*"

routes:

- matchers:

- prefix: /

routeAction:

single:

upstream:

name: "gloo-system-expressapp-service-3000"

namespace: gloo-system

- run k6 test

import http from "k6/http";

import { sleep } from "k6";

import { check } from "k6";

export const options = {

vus: 100,

duration: "60s",

};

export default function () {

const res = http.get(

"http://YOUR-GWP-IP:80"

);

sleep(5);

check(res, {

"is status 503": (r) => r.status === 503,

});

if (res.status == 503) {

console.error(res.body);

}

}

Results: (going direct to service this does not happen)

ERRO[0010] upstream connect error or disconnect/reset before headers. reset reason: connection termination source=console

ERRO[0030] upstream connect error or disconnect/reset before headers. reset reason: connection termination source=console

ERRO[0055] upstream connect error or disconnect/reset before headers. reset reason: connection termination source=console

ERRO[0055] upstream connect error or disconnect/reset before headers. reset reason: connection termination source=console

running (1m00.7s), 000/100 VUs, 1200 complete and 0 interrupted iterations

default ✓ [======================================] 100 VUs 1m0s

✗ is status 503

↳ 0% — ✓ 4 / ✗ 1196

{"responseFlags":"UC","remoteAddress":"192.168.59.115:9988","modifiedPath":"/","xForwardedFor":null,"upstreamName":"gloo-system-expressapp-service-3000_gloo-system","systemTime":"2022-06-27T14:20:13.903Z","site":null,"httpMethod":"GET","apiKey":null,"userName":null,"path":"/","clientDuration":1,"filterState":null,"responseCode":503,"targetDuration":null,"protocol":"HTTP/1.1","requestId":"98efe19f-baba-450a-9080-87c739736474"}

Expected Behavior

Envoy should open a new connection before sending a request

Additional Context

Customer issue with express apps

Zendesk ticket #788 has been linked to this issue.

It appears this is an upstream bug that has existed for quite some time. All versions I tested from 1.24 back to 1.17 exhibited the errant behavior. The following is the reproduction I performed based on Kevin's steps above.

I have used the following yaml to create a testing environment which has demonstrated that this issue occurs under the following conditions:

- when using Edge and vanilla Envoy, but not nginx or direct requests

- when using an ExpressJS upstream, but not a Go upstream (see kevinshelaga/expressapp or bohanon/echo-server-express (source) for the Express applications used and bohanon/echo-server (source) for the Go application used)

- when using the testing tool k6 (but not hey)

Point 3 is probably irrelevant and possibly point 2 as well, but I found it interesting that it only was occurring with Express apps.

The testing yaml below can be applied to a fresh cluster with Gloo Edge installed to set up the test upstreams, a virtual service for Edge to route to them, and basic nginx/envoy proxies to route to them as well. The k6 tool can be installed via brew install k6 or see here for alternate methods.

6647-proxies.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: echo-server-express

namespace: gloo-system

labels:

app: echo-server-express

spec:

replicas: 1

selector:

matchLabels:

app: echo-server-express

template:

metadata:

labels:

app: echo-server-express

spec:

containers:

- name: echo-server-express

image: bohanon/echo-server-express:latest

ports:

- containerPort: 3000

---

apiVersion: v1

kind: Service

metadata:

name: echo-server-express-service

namespace: gloo-system

spec:

selector:

app: echo-server-express

ports:

- protocol: TCP

port: 3000

targetPort: 3000

type: ClusterIP

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: echo-server

namespace: gloo-system

labels:

app: echo-server

spec:

replicas: 1

selector:

matchLabels:

app: echo-server

template:

metadata:

labels:

app: echo-server

spec:

containers:

- name: echo-server

image: bohanon/echo-server:latest

ports:

- containerPort: 9999

---

apiVersion: v1

kind: Service

metadata:

name: echo-server-service

namespace: gloo-system

spec:

selector:

app: echo-server

ports:

- protocol: TCP

port: 9999

targetPort: 9999

type: ClusterIP

---

apiVersion: gateway.solo.io/v1

kind: VirtualService

metadata:

name: default-http

namespace: gloo-system

spec:

virtualHost:

domains:

- "*"

routes:

- matchers:

- prefix: /express

routeAction:

single:

upstream:

name: "gloo-system-echo-server-express-service-3000"

namespace: gloo-system

options:

prefixRewrite: '/'

- matchers:

- prefix: /go

routeAction:

single:

upstream:

name: "gloo-system-echo-server-service-9999"

namespace: gloo-system

options:

prefixRewrite: '/'

---

apiVersion: v1

kind: ConfigMap

metadata:

name: nginx-conf

namespace: gloo-system

data:

nginx.conf: |

user nginx;

worker_processes 3;

error_log /var/log/nginx/error.log;

events {

worker_connections 10240;

}

http {

log_format main

'remote_addr:$remote_addr\t'

'time_local:$time_local\t'

'method:$request_method\t'

'uri:$request_uri\t'

'host:$host\t'

'status:$status\t'

'bytes_sent:$body_bytes_sent\t'

'referer:$http_referer\t'

'useragent:$http_user_agent\t'

'forwardedfor:$http_x_forwarded_for\t'

'request_time:$request_time';

access_log /var/log/nginx/access.log main;

include /etc/nginx/virtualhost/virtualhost.conf;

}

virtualhost.conf: |

server {

resolver 10.96.0.10 valid=10s;

listen 8081 default_server;

root /usr/local/app;

access_log /var/log/nginx/app.access_log main;

error_log /var/log/nginx/app.error_log;

location /go {

set $gosvc "echo-server-service.gloo-system.svc.cluster.local:9999";

rewrite /go / break;

proxy_pass http://$gosvc;

proxy_http_version 1.1;

}

location /express {

set $expresssvc "echo-server-express-service.gloo-system.svc.cluster.local:3000";

rewrite /express / break;

proxy_pass http://$expresssvc;

proxy_http_version 1.1;

}

}

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx

namespace: gloo-system

labels:

app: nginx

spec:

replicas: 1

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx

ports:

- containerPort: 8081

volumeMounts:

- mountPath: /etc/nginx

readOnly: true

name: nginx-conf

volumes:

- name: nginx-conf

configMap:

name: nginx-conf

items:

- key: nginx.conf

path: nginx.conf

- key: virtualhost.conf

path: virtualhost/virtualhost.conf

---

apiVersion: v1

kind: Service

metadata:

name: nginx-service

namespace: gloo-system

spec:

selector:

app: nginx

ports:

- protocol: TCP

port: 8081

targetPort: 8081

type: ClusterIP

---

apiVersion: v1

kind: ConfigMap

metadata:

name: raw-envoy-config

namespace: gloo-system

data:

envoy.yaml: |

static_resources:

listeners:

- address:

socket_address:

address: 0.0.0.0

port_value: 19000

filter_chains:

- filters:

- name: envoy.filters.network.http_connection_manager

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager

codec_type: auto

stat_prefix: ingress_http

route_config:

name: local_route

virtual_hosts:

- name: backend

domains:

- "*"

routes:

- match:

prefix: "/"

route:

cluster: service

http_filters:

- name: envoy.filters.http.router

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

- address:

socket_address:

address: 0.0.0.0

port_value: 8082

filter_chains:

- filters:

- name: envoy.filters.network.http_connection_manager

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager

codec_type: auto

stat_prefix: ingress_http

route_config:

name: local_route

virtual_hosts:

- name: backend

domains:

- "*"

routes:

- match:

prefix: "/go"

route:

cluster: echo-server

prefix_rewrite: "/"

- match:

prefix: "/express"

route:

cluster: echo-server-express

prefix_rewrite: "/"

http_filters:

- name: envoy.filters.http.router

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

clusters:

- name: service

connect_timeout: 0.25s

type: strict_dns

lb_policy: round_robin

load_assignment:

cluster_name: service

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: 127.0.0.1

port_value: 19001

- name: echo-server-express

connect_timeout: 0.25s

type: strict_dns

lb_policy: round_robin

load_assignment:

cluster_name: echo-server-express

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: echo-server-express-service.gloo-system.svc.cluster.local

port_value: 3000

- name: echo-server

connect_timeout: 0.25s

type: strict_dns

lb_policy: round_robin

load_assignment:

cluster_name: echo-server

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: echo-server-service.gloo-system.svc.cluster.local

port_value: 9999

admin:

access_log_path: "/dev/null"

address:

socket_address:

address: 0.0.0.0

port_value: 19001

---

kind: Deployment

apiVersion: apps/v1

metadata:

name: vanilla-envoy

namespace: gloo-system

labels:

app: vanilla-envoy

spec:

replicas: 1

selector:

matchLabels:

app: vanilla-envoy

template:

metadata:

labels:

app: vanilla-envoy

spec:

containers:

- name: envoy

image: envoyproxy/envoy:v1.23.2

ports:

- containerPort: 8082

securityContext:

runAsUser: 1111

volumeMounts:

- readOnly: true

mountPath: /config

name: proxy-config

args:

- "envoy"

- "--config-path"

- "/config/envoy.yaml"

- "--log-level"

- "debug"

volumes:

- name: proxy-config

configMap:

name: raw-envoy-config

---

apiVersion: v1

kind: Service

metadata:

name: vanilla-envoy

namespace: gloo-system

spec:

selector:

app: vanilla-envoy

ports:

- protocol: TCP

port: 8082

targetPort: 8082

type: ClusterIP

Ports need to be forwarded with (in separate shells)

kubectl port-forward -n gloo-system deploy/vanilla-envoy 8082:8082

kubectl port-forward -n gloo-system deploy/nginx 8081:8081

kubectl port-forward -n gloo-system deploy/gateway-proxy 8080:8080

kubectl port-forward -n gloo-system deploy/nginx 8081:8081

kubectl port-forward -n gloo-system deploy/echo-server-express 3000:3000

kubectl port-forward -n gloo-system deploy/echo-server 9999:9999

The following are k6 scripts that can be run to count how many times a 503 is received over 1200 requests (100 virtual users each making a request every 5 seconds for 60 seconds).

k6 run path/to/test/script.js

k6 scripts

6647-k6-express-direct.js

import http from "k6/http";

import { sleep } from "k6";

import { check } from "k6";

export const options = {

vus: 100,

duration: "60s",

};

export default function () {

const res = http.get(

"http://localhost:3000"

);

sleep(5);

check(res, {

"is status 503": (r) => r.status === 503,

});

if (res.status == 503) {

console.error(res.body);

}

}

6647-k6-express-nginx.js

import http from "k6/http";

import { sleep } from "k6";

import { check } from "k6";

export const options = {

vus: 100,

duration: "60s",

};

export default function () {

const res = http.get(

"http://localhost:8081/express"

);

sleep(5);

check(res, {

"is status 503": (r) => r.status === 503,

});

if (res.status == 503) {

console.error(res.body);

}

}

6647-k6-express-vanilla.js

import http from "k6/http";

import { sleep } from "k6";

import { check } from "k6";

export const options = {

vus: 100,

duration: "60s",

};

export default function () {

const res = http.get(

"http://localhost:8082/express"

);

sleep(5);

check(res, {

"is status 503": (r) => r.status === 503,

});

if (res.status == 503) {

console.error(res.body);

}

}

6647-k6-express-edge.js

import http from "k6/http";

import { sleep } from "k6";

import { check } from "k6";

export const options = {

vus: 100,

duration: "60s",

};

export default function () {

const res = http.get(

"http://localhost:8080/express"

);

sleep(5);

check(res, {

"is status 503": (r) => r.status === 503,

});

if (res.status == 503) {

console.error(res.body);

}

}

6647-k6-go-direct.js

import http from "k6/http";

import { sleep } from "k6";

import { check } from "k6";

export const options = {

vus: 100,

duration: "60s",

};

export default function () {

const res = http.get(

"http://localhost:9999"

);

sleep(5);

check(res, {

"is status 503": (r) => r.status === 503,

});

if (res.status === 503) {

console.error(res.body);

}

}

6647-k6-go-edge.js

import http from "k6/http";

import { sleep } from "k6";

import { check } from "k6";

export const options = {

vus: 100,

duration: "60s",

};

export default function () {

const res = http.get(

"http://localhost:8080/go"

);

sleep(5);

check(res, {

"is status 503": (r) => r.status === 503,

});

if (res.status === 503) {

console.error(res.body);

}

}

@yuval-k Is there anything in here that rings any bells? @nfuden mentioned you had experience with similar bugs in upstream.

@kevin-shelaga I have created upstream bug-report issue envoyproxy/envoy#23625. Please let us know how to prioritize the further investigation into the upstream bug.

In October, we presented a resolution to this issue whereby we injected a Connection: Close header to requests to the upstream in hopes that this would help resolve the intermittent 503s. The customer is concerned about the performance implications of never re-using connections. As we have learned from investigation, this is not a bug with Envoy, rather this is caused by improperly behaving upstream servers which fail to send a Connection: Close header in their response to Envoy even when they terminate the connection immediately after. Because the server failed to inform Envoy it would be closing the connection, Envoy allows that connection to be reassigned. The request to which the connection was reassigned then sees the connection be reset when the server finally sends the FIN.

In short, the workaround that was proposed in October and the one I propose below are not workarounds for a bug in Envoy, rather these are workarounds for a poorly behaving upstream server. Envoy will not reassign the connection if the server responds with the Connection: Close header.

It is possible to attempt to mitigate this by configuring retries in Gloo Edge using a retryOn value of reset.

Here is a link to a comment about the race condition when reusing connections with a poorly behaving upstream

I have communicated that this is an issue with the underlying frameworks to the customers and unfortunately there is nothing envoy can do to fix the issue. The prescribed workaround to apply the connection close header is the best approach to resolve any 503s.