Faster-RCNN_TF

Feature proposal: Use multiple GPUs

Anyone interested in implementing this? Please let me know, I'd like to help.

This is odd. I ran

./experiments/scripts/faster_rcnn_end2end.sh gpu 0 VGG16 pascal_voc

and yet, according to nvidia-smi, the memory on both of my GPUs is filled to capacity. (I don't think GPU 1 is doing much processing, though; its temperature is 39 °C.)

I think the way the faster_rcnn_end2end.sh script is currently invoked follows what was implemented in the Caffe version. In the code, I didn't see anything that acts on the GPU configuration, so it will use all visible GPUs no matter what the configuration is.
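The symptom reported above (memory full on both cards while GPU 1 stays cool) is consistent with TensorFlow 1.x defaults: a session reserves most of the memory on every GPU it can see, but places ops on the first visible GPU unless told otherwise. A minimal sketch, assuming the goal is just to keep training on one chosen card, is to restrict visibility with CUDA_VISIBLE_DEVICES before the process starts. The helpers `env_with_visible_gpus` and `launch_training` are hypothetical, not part of this repo.

```python
import os
import subprocess


def env_with_visible_gpus(gpu_ids, base_env=None):
    """Return a copy of the environment in which CUDA can see only `gpu_ids`."""
    env = dict(os.environ if base_env is None else base_env)
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    return env


def launch_training(gpu_ids, dry_run=True):
    """Run the end-to-end training script on the given physical GPUs (hypothetical helper)."""
    # "gpu 0" now means the first *visible* device, i.e. gpu_ids[0].
    cmd = ["./experiments/scripts/faster_rcnn_end2end.sh", "gpu", "0", "VGG16", "pascal_voc"]
    env = env_with_visible_gpus(gpu_ids)
    if dry_run:
        # Only report what would be executed.
        return cmd, env["CUDA_VISIBLE_DEVICES"]
    return subprocess.run(cmd, env=env, check=True)


if __name__ == "__main__":
    print(launch_training([1]))
```

Inside the launched process CUDA renumbers the visible devices from 0, so with `CUDA_VISIBLE_DEVICES=1` the second physical card becomes `/gpu:0`. From a shell, the equivalent is prefixing the command: `CUDA_VISIBLE_DEVICES=1 ./experiments/scripts/faster_rcnn_end2end.sh gpu 0 VGG16 pascal_voc`.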

@qinglintian

What I observed from nvidia-smi is that it is only using one GPU. I have two Titan X and two 1080 Ti cards. How can I use all of them for training here?

@chakpongchung Did you modify the code? Check the environment variable CUDA_VISIBLE_DEVICES. Are you able to use multiple GPUs with TF for other computations? For me, a very simple TF program uses all available GPUs when no specific configuration is provided.
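To follow the suggestion of checking CUDA_VISIBLE_DEVICES, a small helper can report which physical GPUs a process is allowed to use. This is a sketch with a hypothetical name (`visible_gpu_indices`): it handles integer indices only (CUDA also accepts GPU UUIDs) and approximates the CUDA rule that parsing stops at the first invalid entry, which is why `-1` is a common way to hide every GPU. An unset variable means every GPU is visible, which matches a plain TF program seeing all GPUs.

```python
import os


def visible_gpu_indices(env=None):
    """Approximate how CUDA interprets CUDA_VISIBLE_DEVICES (integer form only).

    Returns None when the variable is unset (every GPU is visible),
    otherwise the list of physical GPU indices the process may use.
    """
    env = os.environ if env is None else env
    value = env.get("CUDA_VISIBLE_DEVICES")
    if value is None:
        return None
    indices = []
    for token in value.split(","):
        token = token.strip()
        # Stop at the first entry that is not a non-negative integer,
        # so "" and "-1" both leave no GPU visible.
        if not token.isdigit():
            break
        indices.append(int(token))
    return indices


if __name__ == "__main__":
    print(visible_gpu_indices())
```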

@qinglintian @lev-kusanagi I did not make any changes to the code.

./experiments/scripts/faster_rcnn_end2end.sh gpu 1 VGG16 pascal_voc

Only GPU 1 is running. If I use "gpu 0", only GPU 0 is running.

@chakpongchung That's strange. In the code, faster_rcnn_end2end.sh calls train_net.py with the device ID parameter, but train_net.py only prints what was passed in; the device ID is never passed on to train.py.
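If the device ID is never forwarded, one way to make it take effect is to have train_net.py translate it into CUDA_VISIBLE_DEVICES before TensorFlow initializes CUDA (simplest rule: before `import tensorflow`). In this sketch the flag names `--device` and `--device_id` and the helper `apply_device_choice` are assumptions for illustration; check train_net.py for the actual argument names.

```python
import argparse
import os


def parse_args(argv=None):
    parser = argparse.ArgumentParser(description="Train a Faster R-CNN network (sketch)")
    # Assumed flag names; the real script may differ.
    parser.add_argument("--device", default="gpu", choices=["cpu", "gpu"])
    parser.add_argument("--device_id", type=int, default=0)
    # Ignore any other training flags in this sketch.
    return parser.parse_known_args(argv)[0]


def apply_device_choice(args, env=None):
    """Expose only the requested device to CUDA (hypothetical helper).

    Must run before TensorFlow touches the GPU; afterwards the chosen
    card is addressed as '/gpu:0' inside this process.
    """
    env = os.environ if env is None else env
    if args.device == "gpu":
        env["CUDA_VISIBLE_DEVICES"] = str(args.device_id)
    else:
        env["CUDA_VISIBLE_DEVICES"] = ""  # hide all GPUs: CPU-only run
    return env["CUDA_VISIBLE_DEVICES"]


if __name__ == "__main__":
    apply_device_choice(parse_args())
    # import tensorflow as tf  # import only after the variable is set
```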

Does that mean this code doesn't support multiple GPUs?
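Using several GPUs at once would require synchronous data parallelism. Each GPU (a "tower") gets a slice of the batch and computes gradients on it, and the gradients are averaged before one shared update. That is the pattern behind `average_gradients` in TensorFlow's CIFAR-10 multi-GPU tutorial, and `tf.distribute.MirroredStrategy` automates it in TensorFlow 2.x. The sketch below is framework-free and uses a toy one-parameter model (y = w * x with mean squared error) in place of Faster R-CNN; all names are hypothetical.

```python
def split_batch(batch, num_towers):
    """Split a batch into `num_towers` equal shards, one per GPU tower."""
    if len(batch) % num_towers:
        raise ValueError("batch size must be divisible by the number of towers")
    size = len(batch) // num_towers
    return [batch[i * size:(i + 1) * size] for i in range(num_towers)]


def grad_mse(w, shard):
    """Gradient of the mean squared error of the toy model y = w * x over one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def average_gradients(tower_grads):
    """Average the per-tower gradients (synchronous data parallelism)."""
    return sum(tower_grads) / len(tower_grads)


def data_parallel_step(w, batch, num_towers, lr=0.01):
    """One SGD step in which each shard's gradient would run on its own GPU."""
    shards = split_batch(batch, num_towers)
    # Computed sequentially here; on real hardware the towers run concurrently.
    tower_grads = [grad_mse(w, shard) for shard in shards]
    return w - lr * average_gradients(tower_grads)


if __name__ == "__main__":
    batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
    print(data_parallel_step(0.5, batch, num_towers=2))
```

With equal-sized shards the averaged tower gradients equal the full-batch gradient, so each update matches single-GPU training on the whole batch. With mixed cards, every synchronous step waits for the slowest tower.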

@chakpongchung @qinglintian @lev-kusanagi Hey! For me, too, only one GPU is working. Is there something in the code that must be changed?

Thank you in advance :)

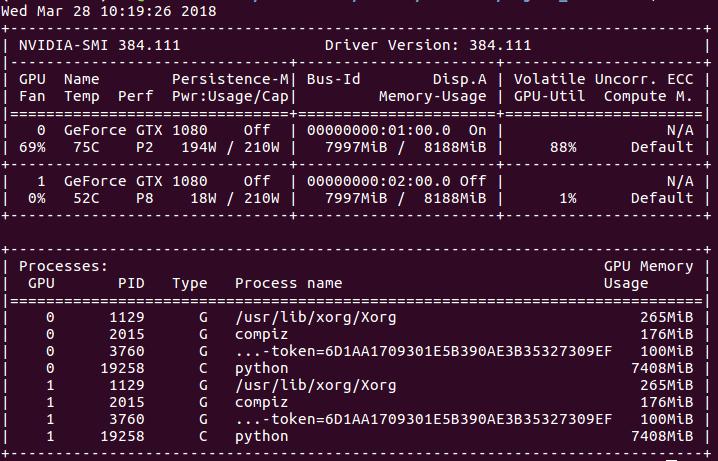

This was the output of nvidia-smi:

Could someone help me with this? I have two GPUs and only one seems to work during training. Thank you!

@qinglintian

What I observed from nvidia-smi is that it only uses one GPU. I have two Titan X and two 1080 Ti cards. How can I use all of them for training here?

Same question. I want to use multiple GPUs. Has anyone solved this?