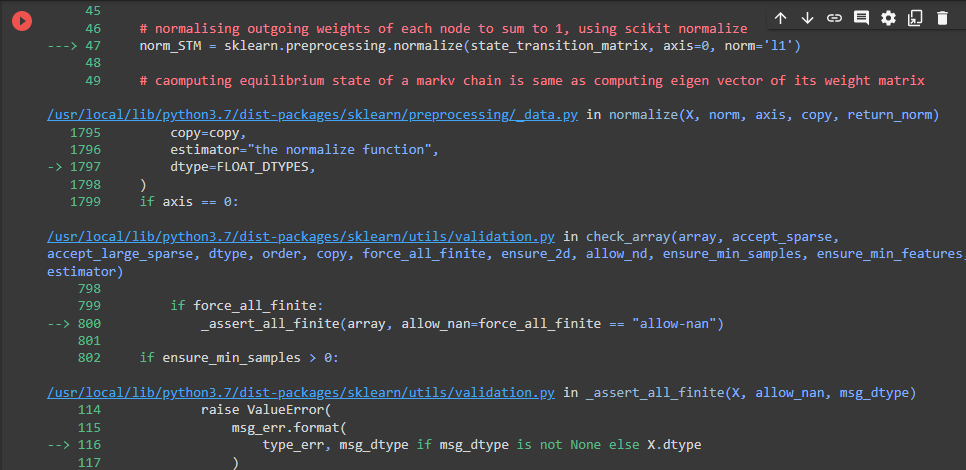

ValueError: Input contains NaN, infinity or a value too large for dtype('float64')

Hi,

The code works perfectly for most of the images in my dataset. However, it yields this error for a few samples. I double-checked whether there is a problem with the input images, and the error apparently occurs only when calling gbvs.compute_saliency; ittikochneibur.compute_saliency works just fine.

Looks like the error is coming from the graphBasedActivation.calculate(map, activation_sigma) function here, which eventually calls markovChain.solve(), and that call throws the error shown above.

Since the map variable is created from the image here, can you please check where the invalid values are coming from?

Apparently the problem comes from the sklearn normalize function. I checked whether the state_transition_matrix variable had any NaN or infinite values, and there seem to be none.
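For anyone wanting to repeat that check, here is a minimal sketch using NumPy only. The helper name `describe_matrix` and the small `demo` array are hypothetical stand-ins (not part of the gbvs code); in practice you would pass the real state_transition_matrix. Besides NaN and infinity, it also counts all-zero rows and columns, since those are a common edge case for any sum-based normalization.

```python
import numpy as np

def describe_matrix(m):
    """Summarize properties that commonly break sum-based normalization."""
    m = np.asarray(m, dtype=np.float64)
    return {
        "shape": m.shape,
        "has_nan": bool(np.isnan(m).any()),
        "has_inf": bool(np.isinf(m).any()),
        # Rows/columns whose absolute values sum to exactly zero.
        "zero_rows": int((np.abs(m).sum(axis=1) == 0).sum()),
        "zero_columns": int((np.abs(m).sum(axis=0) == 0).sum()),
    }

# Hypothetical stand-in for state_transition_matrix: one all-zero column.
demo = np.ones((4, 4))
demo[:, 2] = 0.0

report = describe_matrix(demo)
print(report)
```

A matrix can pass the NaN/infinity check and still have zero rows or columns, so a clean `has_nan`/`has_inf` result does not by itself rule out a later 0/0.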

What are the dimensions of the state_transition_matrix variable? Can you print it out here? We can run the normalize function on it in a Python shell and see whether it's an sklearn issue or some edge case.

Looks like (896, 896).

Looks like this will need some in-depth investigation. I am not currently working on this project, so I might be able to get back to you by the end of this week when I get some free time. Please feel free to post any other information that you feel might help the investigation (the dataset, or a link to the offending image).

Here's an update: the problem apparently comes from markovChain.solve(), specifically from v = np.divide(v, sum(v)), and is due to a division by 0. I think adding a small constant to sum(v) to avoid dividing by 0 would solve the problem.
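To illustrate the proposed fix, here is a rough sketch, not the actual markovChain.solve() code. The function name `normalize_with_epsilon` and the default `eps=1e-12` are assumptions made for this example, and it assumes `v` is non-negative. It first shows how an all-zero vector turns into NaN under the plain division, then the same division with a small constant added to the denominator.

```python
import numpy as np

def normalize_with_epsilon(v, eps=1e-12):
    """Divide v by its sum, with eps added so the denominator is never 0.

    Assumes v is non-negative. eps is an illustrative default, not a
    value taken from the gbvs code.
    """
    v = np.asarray(v, dtype=np.float64)
    return v / (v.sum() + eps)

zeros = np.zeros(3)

# Plain division: 0 / 0 produces NaN (warnings silenced for the demo).
with np.errstate(divide="ignore", invalid="ignore"):
    unguarded = np.divide(zeros, sum(zeros))

guarded = normalize_with_epsilon(zeros)          # stays all zeros, no NaN
regular = normalize_with_epsilon([1.0, 3.0])     # approximately [0.25, 0.75]

print(unguarded, guarded, regular)
```

Note that with this approach an all-zero vector stays all zero instead of summing to 1, so it removes the NaN but not the underlying degenerate case; falling back to a uniform vector when the sum is 0 would be another option.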