speedtest-go

speedtest-go copied to clipboard

speedtest-go copied to clipboard

Performance improvements for upload --saving-mode

On small memory devices we should reuse a block of memory instead of using strings.Repeat() when upload, although this still loss some bandwidth.

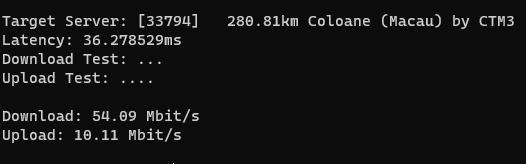

Speedtest running on:

[ 0.000000] Linux version 5.4.143 (builder@buildhost) (gcc version 8.4.0 (OpenWrt GCC 8.4.0 r16279-5cc0535800)) #0 Tue Aug 31 22:20:08 2021 [ 0.000000] SoC Type: MediaTek MT7628AN ver:1 eco:2 [ 0.000000] CPU0 revision is: 00019655 (MIPS 24KEc) [ 0.000000] Memory: 57444K/65536K available (5086K kernel code, 206K rwdata, 636K rodata, 1228K init, 205K bss, 8092K reserved, 0K cma-reserved) [ 0.000000] CPU Clock: 580MHz

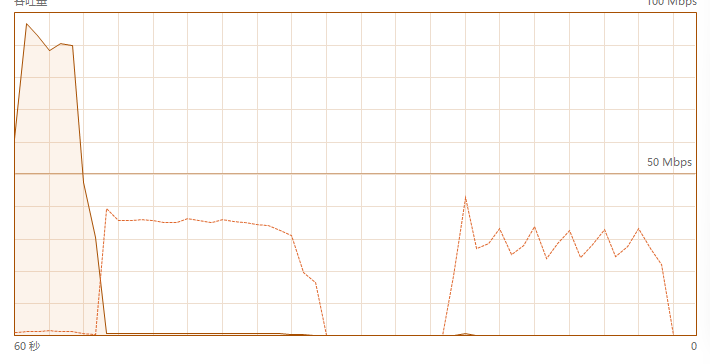

Legend1: Saving-Mode for the current version.

Legend2: Saving-Mode With Reusing a Block(only upload).

Legend3: Compared Normal Upload(left dashed line) and Saving-Mode With Reusing a Block(right dashed line) .

Conclusion: This will result in lower losses and a smaller memory footprint.

@r3inbowari Thank you for your proposal!

I'm not able to understand well the relationship between using less memory and bandwidth.

I welcome the way to reduce memory usage for --saving-mode option but I'd like to make the measurement method consistent within the implementation.

Since I don't deeply understand Golang, I cannot understand your proposal completely, but if your method can reduce much memory usage and if it turns out that there is sufficient value to use the method other than --saving-mode option, I'd like to replace current upload method with your idea.

To do so, I want to know

- How much does your idea reduce the memory usage, not only

--saving-modebut also normal mode? - Is the testing results of the new logic close enough to the current logic?

@showwin Thank you for your reply! The current version doesn't actually work well on devices with around 10MB of free space, even in saving mode.

We could implement a custom io.reader(reuse memory in Read method) instead of using strings.repeat to populate strings.NewReader(). So all we need to do is just replace this io.reader implementation.

Of course this also applies to normal mode.

like this:

func NewRepeatReader(count int) *RepeatReader {

s := make([]byte, 1000)

return &RepeatReader{s: s, count: count}

}

func (h *RepeatReader) Read(b []byte) (n int, err error) {

if h.count <= 0 {

return 0, io.EOF

}

n = copy(b, h.s)

h.count--

return

}

func uploadRequest(ctx context.Context, doer *http.Client, ulURL string, w int) error {

size := ulSizes[w]

reader:= NewRepeatReader(size)

// v := url.Values{}

// v.Add("content", strings.Repeat("0123456789", size*100-51))

// req, err := http.NewRequestWithContext(ctx, http.MethodPost, ulURL, strings.NewReader(v.Encode()))

req, err := http.NewRequestWithContext(ctx, http.MethodPost, ulURL, reader)

req.ContentLength = int64(size*100) * 10

if err != nil {

return err

}

req.Header.Set("Content-Type", "application/x-www-form-urlencoded")

resp, err := doer.Do(req)

if err != nil {

return err

}

defer resp.Body.Close()

_, err = io.Copy(ioutil.Discard, resp.Body)

return err

}

Further, could we copy the same memory block in all upload-worker goroutines to the remote server?

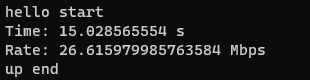

When balancing context switching and mem space, we can run normal mode on devices with almost equal go-runtime memory and measure higher upload speeds.

allocated memory for repeat

Old: (upSizes[i]*100 - 51) * 10 bytes New: only 32KB