Prompt is too long

Bug description

It appears to happen as the chat gets longer and longer. How should I address this?

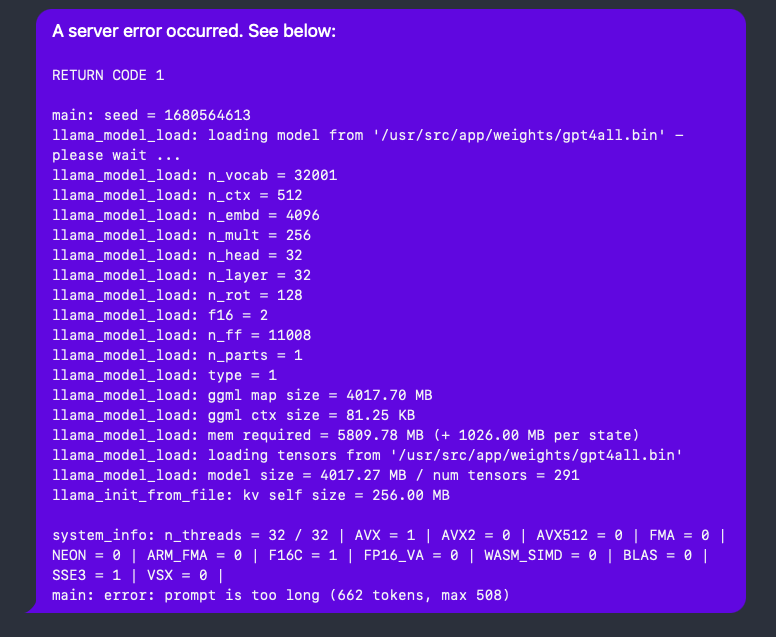

A server error occurred. See below:

RETURN CODE 1

```
main: seed = 1680564613
llama_model_load: loading model from '/usr/src/app/weights/gpt4all.bin'
main: error: prompt is too long (662 tokens, max 508)
```

I happen to be using gpt4all.

Steps to reproduce

Keep chatting in the same conversation. Eventually, it hits this error.

Environment Information

Docker version 20.10.17, build 100c701


Relevant log output

```
serge-1 | ERROR: serge.utils.generate main: seed = 1680564613
serge-1 | llama_model_load: loading model from '/usr/src/app/weights/gpt4all.bin' - please wait ...
serge-1 | llama_model_load: n_vocab = 32001
serge-1 | llama_model_load: n_ctx = 512
serge-1 | llama_model_load: n_embd = 4096
serge-1 | llama_model_load: n_mult = 256
serge-1 | llama_model_load: n_head = 32
serge-1 | llama_model_load: n_layer = 32
serge-1 | llama_model_load: n_rot = 128
serge-1 | llama_model_load: f16 = 2
serge-1 | llama_model_load: n_ff = 11008
serge-1 | llama_model_load: n_parts = 1
serge-1 | llama_model_load: type = 1
serge-1 | llama_model_load: ggml map size = 4017.70 MB
serge-1 | llama_model_load: ggml ctx size = 81.25 KB
serge-1 | llama_model_load: mem required = 5809.78 MB (+ 1026.00 MB per state)
serge-1 | llama_model_load: loading tensors from '/usr/src/app/weights/gpt4all.bin'
serge-1 | llama_model_load: model size = 4017.27 MB / num tensors = 291
serge-1 | llama_init_from_file: kv self size = 256.00 MB
serge-1 |
serge-1 | system_info: n_threads = 32 / 32 | AVX = 1 | AVX2 = 0 | AVX512 = 0 | FMA = 0 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 1 | VSX = 0 |
serge-1 | main: error: prompt is too long (662 tokens, max 508)
```

Confirmations

- [X] I'm running the latest version of the main branch.

- [X] I checked existing issues to see if this has already been described.

I've looked through the source code of both llama and Serge, and the problem appears to be that Serge does not crop the conversation once the combined prompt of all previous messages exceeds the maximum token count set as the Prompt Context Length. Serge also does not seem to store that maximum correctly: in every error message the log shows "llama_model_load: n_ctx = 512", even when the setting is made much lower. I haven't found the root cause of why Serge always sets n_ctx to 512. The "max 508" in the error output is explained by llama reducing the maximum token count by 4 (512 - 4 = 508).
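A minimal sketch of the cropping described above, assuming a `count_tokens` callable (hypothetical; in practice it would wrap the model's tokenizer) and the `n_ctx - 4` limit that llama applies. It drops the oldest messages until the combined prompt fits, so with `n_ctx = 512` the prompt would be cut to at most 508 tokens instead of failing at 662. This is an illustration, not Serge's actual code.

```python
from typing import Callable, List

# llama rejects a prompt longer than n_ctx - 4 tokens
RESERVED_TOKENS = 4


def max_prompt_tokens(n_ctx: int) -> int:
    """Largest prompt llama accepts for a given context size (512 -> 508)."""
    return n_ctx - RESERVED_TOKENS


def crop_history(
    messages: List[str],
    n_ctx: int,
    count_tokens: Callable[[str], int],  # hypothetical tokenizer wrapper
    separator: str = "\n",
) -> List[str]:
    """Drop the oldest messages until the joined prompt fits the budget.

    The newest message is always kept; if it alone is too long,
    the caller still has to handle it (e.g. by rejecting it).
    """
    budget = max_prompt_tokens(n_ctx)
    kept = list(messages)
    while len(kept) > 1 and count_tokens(separator.join(kept)) > budget:
        kept.pop(0)  # discard the oldest message first
    return kept
```

For a quick check, a whitespace word count can stand in for the tokenizer: `crop_history(history, 512, lambda s: len(s.split()))`. Dropping whole messages (rather than cutting mid-message) keeps each remaining turn intact, at the cost of sometimes leaving unused room in the context.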

I am also experiencing this after some prompts.

Thanks @DVSProductions. I opened PR #138 to fix the other problem you raised: the prompt context length not being passed through correctly.
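A minimal sketch of what passing the setting through could look like, assuming the prompt is sent to llama.cpp's `main` binary as command-line arguments. `build_llama_args` and the binary path in the usage line are hypothetical and not taken from PR #138; `-m`, `-c` (`--ctx_size`) and `-p` are `main`'s model, context-size and prompt options, and 512 is its default context size, which matches the `n_ctx = 512` in the log above.

```python
from typing import List


def build_llama_args(
    binary: str,
    model_path: str,
    prompt: str,
    context_length: int,
) -> List[str]:
    """Build an argv list for llama.cpp's `main` that forwards the context size.

    Hypothetical helper: the name and parameters are illustrative only.
    """
    return [
        binary,
        "-m", model_path,
        # without -c, main falls back to its default context size of 512
        "-c", str(context_length),
        "-p", prompt,
    ]
```

The list can be handed directly to `subprocess.run(...)`; building argv as a list instead of a shell string avoids quoting problems when the prompt contains spaces or quotes.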

I run into this issue a lot; a real party pooper.