serge


std::bad_alloc on stdout when sending a chat message on localhost with 7B-Native (return code -6)

Bug description

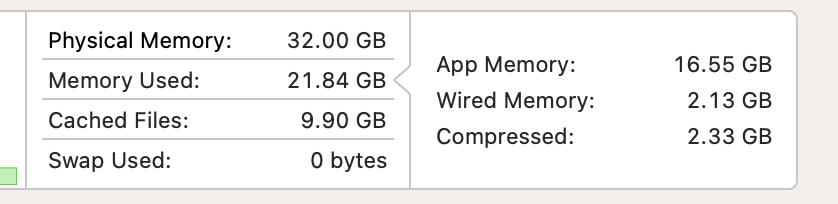

I get a std::bad_alloc error when sending a prompt, even though my host machine has plenty of memory and processing power relative to the stated requirements.

Steps to reproduce

- Ran `docker run -d -v weights:/usr/src/app/weights -v datadb:/data/db/ -p 8008:8008 ghcr.io/nsarrazin/serge:latest`

- Open http://localhost:8008/models

- Select second download (7B-Native)

- Go to Home -> Start Chat

- Type "why is the sky blue" in "Ask a question" and hit "Send"

- Get error

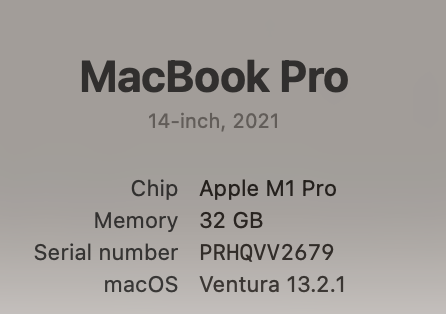

Environment Information

Client: Podman Engine

Version: 4.3.1

API Version: 4.3.1

Go Version: go1.18.8

Built: Wed Nov 9 12:43:58 2022

OS/Arch: darwin/arm64

Server: Podman Engine

Version: 4.4.2

API Version: 4.4.2

Go Version: go1.19.6

Built: Wed Mar 1 03:22:39 2023

Container

Screenshots

Relevant log output

A server error occurred. See below:

RETURN CODE -6

main: seed = 1679990016

llama_model_load: loading model from '/usr/src/app/weights/7B-native.bin' - please wait ...

llama_model_load: n_vocab = 32000

llama_model_load: n_ctx = 512

llama_model_load: n_embd = 4096

llama_model_load: n_mult = 256

llama_model_load: n_head = 32

llama_model_load: n_layer = 32

llama_model_load: n_rot = 128

llama_model_load: f16 = 2

llama_model_load: n_ff = 11008

llama_model_load: n_parts = 1

llama_model_load: type = 1

llama_model_load: ggml ctx size = 4273.34 MB

llama_model_load: mem required = 6065.34 MB (+ 1026.00 MB per state)

terminate called after throwing an instance of 'std::bad_alloc'

what(): std::bad_alloc
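
The `mem required` line in the log above can be turned into a single figure to compare against the memory actually available to the container. The snippet below is a minimal sketch, not part of serge: the `required_mb` helper and its regular expression are hypothetical and assume the log line keeps exactly the format shown above. On macOS, Podman runs containers inside a virtual machine whose memory allocation can be smaller than the host's, so the comparison that matters may be against that VM rather than the host; whether that is the cause here is an assumption.

```python
import re

# Hypothetical pattern for the "mem required" line, assuming the exact
# format seen in the log above.
MEM_LINE = re.compile(
    r"mem required\s*=\s*([\d.]+) MB \(\+\s*([\d.]+) MB per state\)"
)


def required_mb(log_text, states=1):
    """Return base model memory plus per-state memory in MB.

    Returns None when the log contains no matching line.
    """
    match = MEM_LINE.search(log_text)
    if match is None:
        return None
    base = float(match.group(1))
    per_state = float(match.group(2))
    return base + states * per_state


log = "llama_model_load: mem required = 6065.34 MB (+ 1026.00 MB per state)"
print(f"{required_mb(log):.2f} MB")  # prints "7091.34 MB"
```

With one state, the model in this report needs roughly 7 GB available inside the container, which is worth checking against the container engine's memory limit before concluding that the host has enough.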

### Confirmations

- [X] I'm running the latest version of the main branch.

- [X] I checked existing issues to see if this has already been described.

The same is happening to me.

Is this still happening with the latest tag, @sshadmand @zhukovgreen?

Tested. Everything works fine now. Thank you.