kafka-lag-exporter

Kafka Lag Exporter metric isn't showing correct values

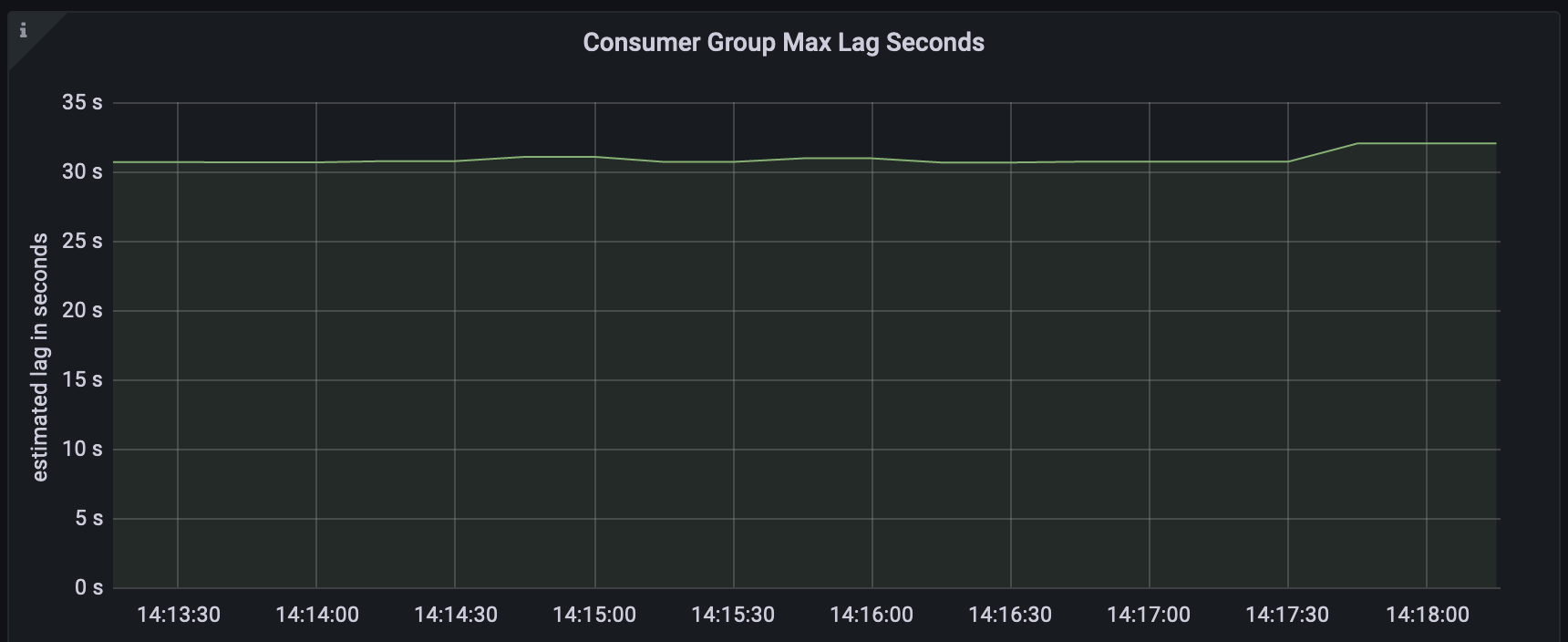

Kafka Lag Exporter is up and running against an MSK cluster, but the kafka_consumergroup_group_lag_seconds metric bounces between 0 and 30 seconds with very little load on the service. We also have our own custom metric, which calculates lag by polling each message and subtracting its timestamp from the current time.
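For reference, the custom metric described above amounts to something like the following. This is a minimal sketch, not our production code: the function name `consume_lag_ms` is hypothetical, timestamps are assumed to be epoch milliseconds, and the clamp at zero is an added assumption to guard against clock skew.

```python
import time


def consume_lag_ms(record_timestamp_ms, now_ms=None):
    """Lag of one polled record: current wall-clock time minus the record's timestamp."""
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    # Assumption: clamp at zero so clock skew between producer and consumer
    # never produces a negative lag.
    return max(0, now_ms - record_timestamp_ms)


# Example: a record stamped 230 ms before "now".
now_ms = 1_700_000_000_000
print(consume_lag_ms(now_ms - 230, now_ms))  # 230
```

Note that this measures lag only for records that have actually been consumed, so it is not directly comparable to an estimate derived from offsets.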

Kafka Lag Exporter reports a lag of up to 30 seconds, while the custom metric shows only 200-250 ms. Is this expected? Can someone help with this issue?

@seglo Can this be the result of any misconfiguration on our end?

I have added the Grafana dashboard screenshot below.

Let me know if you need more information to understand this.

Hi @panthdesai18. It's difficult to say without more information. Can you provide the details requested in the new bug issue template?

Sure

To reproduce:

- Values:
  - Clusters:
    - Admin Client Properties:
      - sasl.mechanism: SCRAM-SHA-512
      - security.protocol: SASL_SSL
    - Bootstrap Brokers: 3 broker
    - Consumer Properties:
      - sasl.mechanism: SCRAM-SHA-512
      - security.protocol: SASL_SSL
    - Labels:
      - Location: oregon
      - Zone: us-west-2
  - Metric Whitelist: .
  - Prometheus:
    - Service Monitor:
      - Enabled: true
  - Reporters:
    - Prometheus:
      - Enabled: true
      - Monitoring Namespace: monitoring
      - Port: 9308

I have also added a Kafka user to give the exporter access to the Kafka cluster. Here is the Kafka JAAS config: `KafkaClient { org.apache.kafka.common.security.scram.ScramLoginModule required serviceName="kafka" username="" password=""; };`

Environment:

- Kafka Lag Exporter version: 0.6.8

- Apache Kafka cluster version: 2.6.0

- Run with the Helm chart, with some modifications

Unfortunately, there's not much I can do without a reproducer test. The estimate depends entirely on lookup table size and throughput. Low throughput or a small lookup table can cause large swings in the predicted lag, typically because a value is extrapolated instead of interpolated.
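To illustrate the difference between interpolating and extrapolating, here is a simplified sketch. It is not Kafka Lag Exporter's actual code. It assumes a lookup table of (offset, timestamp in ms) points with strictly increasing offsets, and it assumes extrapolation uses the line through the oldest and newest points. The names `predict_timestamp` and `lag_seconds` are hypothetical.

```python
def _on_line(p1, p2, offset):
    """Timestamp at `offset` on the straight line through points p1 and p2."""
    (x1, y1), (x2, y2) = p1, p2
    return y1 + (offset - x1) * (y2 - y1) / (x2 - x1)


def predict_timestamp(table, offset):
    """Estimate when `offset` was produced, given (offset, timestamp_ms) points."""
    # Interpolate when the offset falls between two adjacent points.
    for p1, p2 in zip(table, table[1:]):
        if p1[0] <= offset <= p2[0]:
            return _on_line(p1, p2, offset)
    # Otherwise extrapolate along the line through the oldest and newest points.
    return _on_line(table[0], table[-1], offset)


def lag_seconds(table, committed_offset):
    """Newest timestamp in the table minus the predicted timestamp of the committed offset."""
    latest_offset, latest_ts = table[-1]
    if committed_offset >= latest_offset:
        return 0.0  # the group has caught up with the newest known offset
    return max(0.0, (latest_ts - predict_timestamp(table, committed_offset)) / 1000.0)


# Three points, 10 offsets and 10 seconds apart.
table = [(100, 0), (110, 10_000), (120, 20_000)]
print(lag_seconds(table, 115))  # 5.0  (interpolated, inside the table)
print(lag_seconds(table, 95))   # 25.0 (extrapolated, below the oldest point)
```

With few points or a slow-moving topic, the slope of that line is dominated by a handful of samples, so a committed offset just outside the table can produce an estimate far from what a per-message measurement would show.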