LAVIS


CPU Memory boom in BLIP2 pretraining stage

Thanks for sharing the great repository! I'm attempting to replicate the BLIP2 stage2 results by running pretrain_stage2.sh, but I've been encountering frequent memory overflows. It seems that the memory usage increases after each iteration, and I suspect that the issue might be related to the dataloader implementation, which is quite complex. Would you be able to help me fix this bug or offer some suggestions?

https://github.com/salesforce/LAVIS/blob/0e9a3ecfa5dc668761ce6c0e3cf827578e8593c4/lavis/tasks/base_task.py#L201

I'm having a similar issue when loading the huge LAION115m JSON from BLIP-1. It works OK with `CUDA_VISIBLE_DEVICES=0` or `CUDA_VISIBLE_DEVICES=0,1,2,3`, but fails if I use 8 GPUs (CPU memory usage keeps increasing, then the process freezes and is killed). I guess the issue comes from multiprocessing or DDP. I also tried a smaller `num_workers`, but it does not help.

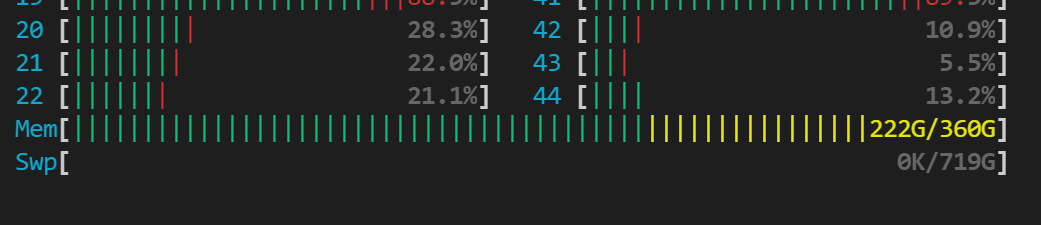

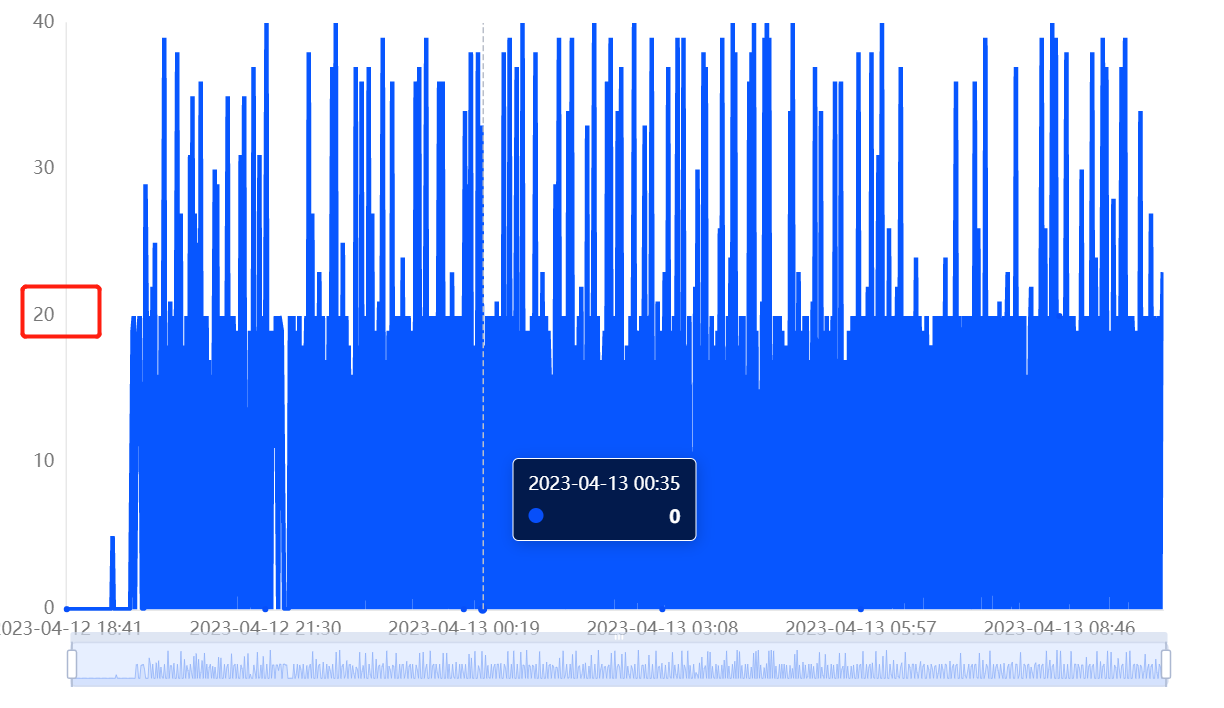
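A commonly reported cause of this pattern with PyTorch `DataLoader` workers is that a large Python list of annotation dicts gets touched by reference counting in every forked worker, so copy-on-write pages are gradually duplicated per process. That has not been confirmed for LAVIS in this thread. A minimal sketch, assuming that is the cause, packs all annotations into one contiguous buffer so each worker only ever touches two objects. `PackedAnnotations` is a hypothetical helper, not part of LAVIS:

```python
import json
from array import array


class PackedAnnotations:
    """Hypothetical container: stores records as one bytes blob plus offsets.

    A list of dicts holds millions of small Python objects; this holds two.
    """

    def __init__(self, records):
        blobs = [json.dumps(r).encode("utf-8") for r in records]
        # offsets[i] .. offsets[i + 1] delimit record i inside the blob.
        self._offsets = array("Q", [0])
        for blob in blobs:
            self._offsets.append(self._offsets[-1] + len(blob))
        self._data = b"".join(blobs)

    def __len__(self):
        return len(self._offsets) - 1

    def __getitem__(self, index):
        if not 0 <= index < len(self):
            raise IndexError(index)
        start, end = self._offsets[index], self._offsets[index + 1]
        # Decode a single record on demand instead of keeping dicts alive.
        return json.loads(self._data[start:end])
```

A dataset's `__getitem__` could then read `annotations[index]` from such a container instead of from a plain list. Whether this removes the growth seen with 8 GPUs would need to be measured.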

If you set `num_workers=0`, the CPU memory won't increase. However, GPU utilization becomes very low: on my 8×V100 machine it averages only about 20%.

https://github.com/salesforce/LAVIS/blob/480a9f382f0f4426fbb767b1803be924453fad0d/lavis/datasets/datasets/dataloader_utils.py#L78

We need to delete `self.batch` first:

    try:
        if hasattr(self, "batch"):
            del self.batch
        self.batch = next(it)
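For context, here is a minimal, self-contained sketch of a one-batch-ahead prefetcher that applies this idea. It is not the LAVIS class. The `Prefetcher` name and the `StopIteration` handling are assumptions made so the example runs on its own:

```python
class Prefetcher:
    """Hypothetical one-batch-ahead prefetcher (illustration only)."""

    def __init__(self, loader):
        self._it = iter(loader)
        self.preload()

    def preload(self):
        # Drop the reference to the previous batch before fetching the next
        # one, so the old batch can be freed while the new one is built.
        if hasattr(self, "batch"):
            del self.batch
        try:
            self.batch = next(self._it)
        except StopIteration:
            # Assumption: None marks the end of the underlying iterator.
            self.batch = None

    def __iter__(self):
        return self

    def __next__(self):
        batch = self.batch
        if batch is None:
            raise StopIteration
        self.preload()
        return batch
```

Iterating with `for batch in Prefetcher(loader): ...` yields the same batches as iterating `loader` directly, while holding at most the current and the prefetched batch.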

Same. I tested the evaluation on BLIP for the image retrieval task; the dataset has more than 10k image-to-text pairs. The memory keeps increasing and then ends with an out-of-memory error. Any suggestions?

Hi @TinaLiuArcher

> The memory keeps increasing and then ends with an out-of-memory error

Are you referring to CPU memory or GPU memory?

I have the same issue: CPU memory increases and stays at `MAX` without being freed.

When running, CPU memory utilization increases and is then freed (possibly thanks to https://github.com/salesforce/LAVIS/issues/247#issuecomment-1508112896 ).

What is the cause?

Maybe CPU memory should be freed after transferring the `model` to the GPUs?

Update

I found that setting `num_workers` to 0 keeps CPU memory from exploding. Other values may help too; I had 32 before.

It is the CPU memory. I also changed the number of workers, and it doesn't help.

In my case, I have more than 10k test samples for retrieval, which may keep a lot of data in CPU memory during the similarity matrix calculation. I ended up saving the embedding features locally and loading them when needed.
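A rough sketch of that workaround, assuming the embeddings fit in a NumPy array on disk: save them once, memory-map them, and compute the similarity matrix one chunk of queries at a time so the full matrix never sits in RAM. The function names, file layout, and `chunk_size` are hypothetical and not taken from LAVIS:

```python
import os
import tempfile

import numpy as np


def save_embeddings(path, embeddings):
    """Write embeddings to a .npy file as float32."""
    np.save(path, np.asarray(embeddings, dtype=np.float32))


def topk_in_chunks(query_path, gallery_path, k=5, chunk_size=1024):
    """Return the indices of the k most similar gallery rows for each query."""
    # mmap_mode="r" reads pages from disk on demand instead of loading all.
    queries = np.load(query_path, mmap_mode="r")
    gallery = np.load(gallery_path, mmap_mode="r")
    k = min(k, gallery.shape[0])
    results = []
    for start in range(0, queries.shape[0], chunk_size):
        # Only a (chunk_size x num_gallery) block of similarities is alive.
        sims = queries[start:start + chunk_size] @ gallery.T
        results.append(np.argsort(-sims, axis=1)[:, :k])
    if not results:
        return np.empty((0, k), dtype=np.int64)
    return np.concatenate(results, axis=0)


if __name__ == "__main__":
    folder = tempfile.mkdtemp()
    q_path = os.path.join(folder, "queries.npy")
    g_path = os.path.join(folder, "gallery.npy")
    save_embeddings(q_path, np.random.rand(10, 8))
    save_embeddings(g_path, np.random.rand(20, 8))
    print(topk_in_chunks(q_path, g_path, k=3, chunk_size=4).shape)
```

Only the top-k indices are kept per chunk, which is usually enough for recall@k metrics. If the raw similarity scores are needed, they could be written to disk per chunk in the same way.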

@TinaLiuArcher Have you resolved this memory issue?

> I have the same issue, CPU memory increases and stays `MAX` without freeing. When running, CPU memory utilization increases and frees (possibly thanks to #247 (comment) ). What is the cause? maybe free CPU memory after transferring the `model` to GPUs?
>
> Update
>
> I found that `num_workers 0` avoid CPU memory to explode. Maybe other values help too, I had 32 before.

this works