How to use a custom prompt template?

Hi, I am trying to use a custom prompt to ask questions, but the answer is always in English.

I can think of two possible reasons:

- My prompt is not being applied

- Internally cached history

If it is the first, can you tell me what I am doing wrong? If it is the second, how do I clear the history?

Below is my code:

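    from langchain.chat_models import ChatOpenAI
    from llama_index import GPTSimpleVectorIndex, LLMPredictor, QuestionAnswerPrompt

    index = GPTSimpleVectorIndex.load_from_disk('index.json')

    # Prompt text (in Chinese): "Answer the question based on the context. If you
    # don't know, reply: Sorry, I can't answer this question for now."
    # 上下文 = context, 问题 = question.
    QUESTION_ANSWER_PROMPT_TMPL = (
        "请根据上下文回答问题,如果你不知道请直接回答:对不起,我暂时无法解答该问题\n"
        "------------------\n"
        "上下文: {context_str}"
        "\n-------------------\n"
        "问题: {query_str}\n"
    )
    QUESTION_ANSWER_PROMPT = QuestionAnswerPrompt(QUESTION_ANSWER_PROMPT_TMPL)

    q = "MY QUESTION"
    llm_predictor = LLMPredictor(llm=ChatOpenAI(temperature=0, model_name="gpt-3.5-turbo"))
    response = index.query(
        q,
        llm_predictor=llm_predictor,
        similarity_top_k=3,
        # refine_template=CHAT_REFINE_PROMPT,
        text_qa_template=QUESTION_ANSWER_PROMPT
    )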

index = GPTSimpleVectorIndex(documents,text_qa_template=DEFAULT_TEXT_QA_PROMPT)

Hi, I tried this, but it does not work. I ask questions in Chinese and always get answers in English, which is why I want to use a custom prompt.

I have tried it and found that it doesn't support Chinese, and I don't know why.

By the way, do you know how to solve the problem in #796?

I found that both methods work normally:

index = GPTSimpleVectorIndex(documents, text_qa_template=DEFAULT_TEXT_QA_PROMPT) or index.query(q, llm_predictor=llm_predictor, text_qa_template=DEFAULT_TEXT_QA_PROMPT)

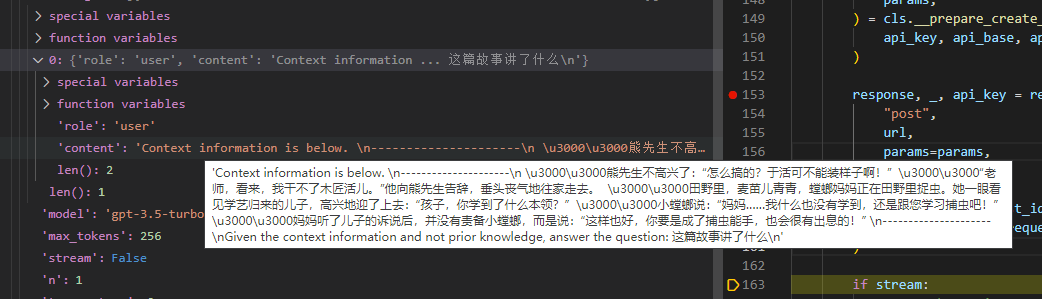

{"response":"这篇故事讲了一个小螳螂学习木匠技能失败后回到田野里,向妈妈学习捕虫技能的故事。妈妈并没有责备小螳螂,而是鼓励他成为捕虫能手。","source_nodes":[{"source_text":" 熊先生不高兴了:“怎么搞的?于活可不能装样子啊!” “老师,看来,我干不了木匠活儿。”他向熊先生告辞,垂头丧气地往家走去。 田野里,麦苗儿青青,螳螂妈妈正在田野里捉虫。她一眼看见学艺归来的儿子,高兴地迎了上去:“孩子,你学到了什么本领?” 小螳螂说:“妈妈……我什么也没有学到,还是跟您学习捕虫吧!” 妈妈听了儿子的诉说后,并没有责备小螳螂,而是说:“这样也好,你要是成了捕虫能手,也会很有出息的!” ","doc_id":"8d41dc1a-bd19-44b7-a46d-6d43f394a1c7","extra_info":null,"node_info":{"start":433,"end":649},"similarity":0.8230393207796218,"image":null}],"extra_info":null}

I suspect internally cached history. It was fine at the beginning, but after I asked a lot of questions in English, I could not get it to switch back.

Hi, if you're looking for a Chinese response, have you tried customizing the refine template as well?

The QuestionAnswer prompt is used for the first chunk of context, but if the context is larger, we call the "refine prompt" over the subsequent context chunks.

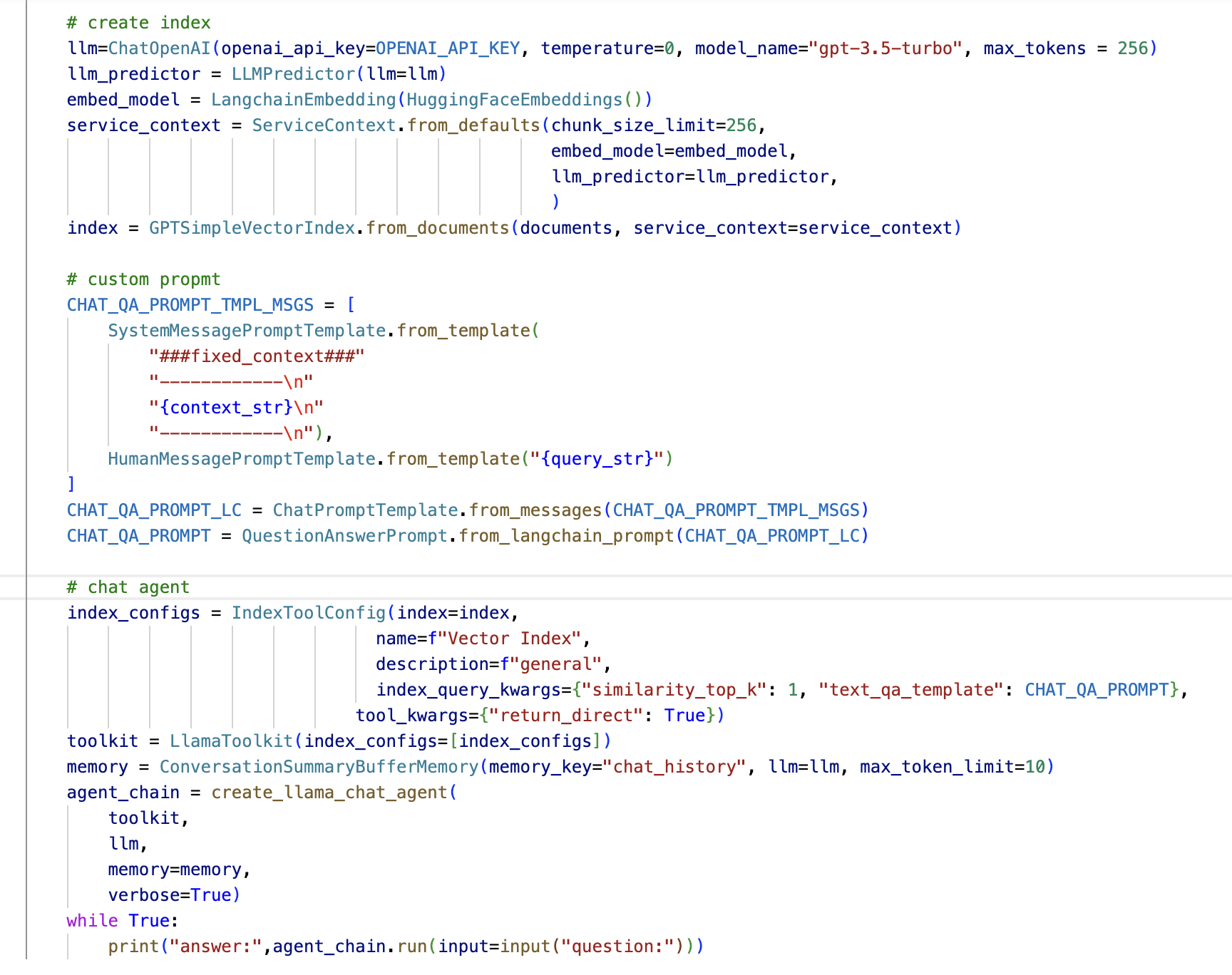
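The two-template setup described above can be sketched as follows. This is a minimal sketch using plain Python strings only, so it runs without the library. The English wording of both templates is illustrative, and the refine placeholders `{existing_answer}` and `{context_msg}` are assumed to match the library's default refine prompt. In the setup from this thread, the strings would then be wrapped in `QuestionAnswerPrompt` and a refine prompt class (assumed to be `RefinePrompt`) and passed as `text_qa_template` and `refine_template`.

```python
from string import Formatter

# Illustrative wording. Both templates repeat the language instruction,
# because the refine prompt is used on its own for later context chunks.
QA_TMPL = (
    "Answer the question using only the context below. "
    "Always answer in Chinese.\n"
    "------------------\n"
    "Context: {context_str}\n"
    "------------------\n"
    "Question: {query_str}\n"
)

REFINE_TMPL = (
    "The original question is: {query_str}\n"
    "The existing answer is: {existing_answer}\n"
    "------------------\n"
    "New context: {context_msg}\n"
    "------------------\n"
    "Refine the existing answer with the new context if it helps; "
    "otherwise repeat the existing answer. Always answer in Chinese.\n"
)


def placeholders(template: str) -> set:
    """Return the set of {name} fields used in a format string."""
    return {name for _, name, _, _ in Formatter().parse(template) if name}


# Sanity check to catch a mistyped placeholder early.
assert placeholders(QA_TMPL) == {"context_str", "query_str"}
assert placeholders(REFINE_TMPL) == {"query_str", "existing_answer", "context_msg"}

print(QA_TMPL.format(context_str="<retrieved chunk>", query_str="<question>"))
```

If only the question-answer template carries the language instruction, any answer that goes through the refine step may fall back to the default prompt's language, which would be consistent with the behavior reported here.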

@jerryjliu How do I use QuestionAnswerPrompt with agent_chain.run(input='')?

https://gpt-index.readthedocs.io/en/latest/how_to/integrations/using_with_langchain.html

When you initialize the agent with tools (e.g. the LlamaIndexTool), do:

    tool_config = IndexToolConfig(
        index=index,
        name="Vector Index",
        description="useful for when you want to answer queries about X",
        index_query_kwargs={"similarity_top_k": 3, "text_qa_template": question_answer_prompt},
        tool_kwargs={"return_direct": True}
    )

Note the entry in index_query_kwargs.
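Building on the snippet above, the refine prompt could be passed through the same dictionary. This is a minimal sketch, assuming `index.query` accepts `refine_template` alongside `text_qa_template` (as the commented-out line in the original post suggests). `build_index_query_kwargs` is a hypothetical helper, and the two prompt arguments stand for prompt objects created elsewhere.

```python
from typing import Any, Dict


def build_index_query_kwargs(
    qa_prompt: Any, refine_prompt: Any, top_k: int = 3
) -> Dict[str, Any]:
    """Collect the query-time settings that the tool forwards to the index.

    Hypothetical helper: qa_prompt and refine_prompt are whatever prompt
    objects the index expects; they are passed through untouched.
    """
    return {
        "similarity_top_k": top_k,
        "text_qa_template": qa_prompt,
        "refine_template": refine_prompt,
    }


# Placeholder strings stand in for real prompt objects in this sketch.
kwargs = build_index_query_kwargs("<qa prompt>", "<refine prompt>")
print(sorted(kwargs))
```

The resulting dictionary would then be given as the `index_query_kwargs` argument of `IndexToolConfig`.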

Going to close issue for now, for more questions join our discord! https://discord.gg/dGcwcsnxhU

Why is there a limit on the number of characters returned in the query results? Have you encountered this problem?

Hi, I am trying to use a custom prompt with an agent, but it still seems to be using the default prompt. Here is my code:

Did you figure it out @NiharikaKatte?

As @jerryjliu said, you should add two custom prompts, the question-answer prompt and the refine prompt, and then it works.

I think the input to your model also spans more than one node.

- https://github.com/jerryjliu/llama_index/blob/9fc87565cbcdf2ef8b52f6cbe88281f4860d17dc/docs/how_to/output_parsing.md?plain=1#L22

@mintisan is correct.

Going to close this issue for now!

For reference as well, if your response is getting cut off, see this page for setting max_tokens/num_output: https://gpt-index.readthedocs.io/en/latest/how_to/customization/custom_llms.html#example-explicitly-configure-context-window-and-num-output
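To see how the output reservation trades off against room for context, here is a rough sketch of the token arithmetic. The numbers (a 4096-token context window, 256 and 1024 output tokens) are illustrative assumptions, and `prompt_budget` is a hypothetical helper, not a library function; the linked page describes the actual settings.

```python
def prompt_budget(context_window: int, num_output: int) -> int:
    """Tokens left for the prompt (template + context + question)
    after reserving num_output tokens for the model's answer."""
    if not 0 < num_output < context_window:
        raise ValueError("num_output must be between 0 and context_window")
    return context_window - num_output


# A larger output reservation leaves less room for retrieved context.
small = prompt_budget(context_window=4096, num_output=256)
large = prompt_budget(context_window=4096, num_output=1024)
print(small, large)  # 3840 3072
```

Raising the output limit allows longer answers but shrinks the space available for context chunks, so the two values need to be chosen together.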