torchdiffeq

ode_demo.py slower on A100 than P100

Dear all,

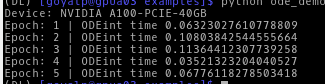

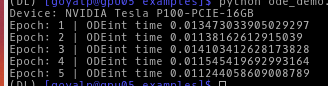

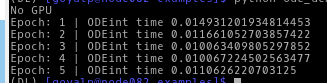

Thank you for providing a nice package for neural ODEs; it is highly appreciated. I ran ode_demo.py on different hardware (a P100, an A100, and a CPU machine) and observed very different computation times. The code is significantly slower on the A100 than on the P100 (by a factor of roughly 10 to 20). In fact, the A100 performs worse than the CPU. The only change I made to the provided demo file was to add timing around the odeint call:

current_time = time.time()

pred_y = odeint(func, batch_y0, batch_t).to(device)

print(f"Epoch: {itr} | ODEint time {time.time()-current_time}")
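Since CUDA kernels are launched asynchronously, a plain wall-clock measurement like the one above may not reflect only the `odeint` call, and the first iterations can include one-off startup cost. Below is a minimal sketch of a timing helper that warms up and synchronizes before reading the clock. The name `timed` and its parameters are hypothetical and not part of torchdiffeq; on a GPU one would pass `torch.cuda.synchronize` as `sync`.

```python
import time


def timed(fn, *args, sync=None, warmup=1, repeats=5, **kwargs):
    """Return (last result, mean seconds per call) for fn(*args, **kwargs).

    sync is an optional zero-argument callable that blocks until queued
    device work has finished (assumption: torch.cuda.synchronize on a GPU).
    """
    if repeats < 1:
        raise ValueError("repeats must be at least 1")

    # Warm-up calls are excluded from the measurement.
    for _ in range(warmup):
        fn(*args, **kwargs)

    # Make sure no earlier work is still pending when the clock starts.
    if sync is not None:
        sync()
    start = time.perf_counter()

    for _ in range(repeats):
        result = fn(*args, **kwargs)

    # Wait for the timed work to actually finish before stopping the clock.
    if sync is not None:
        sync()
    elapsed = (time.perf_counter() - start) / repeats
    return result, elapsed


# Possible usage inside the demo loop (assumes torch and odeint are imported):
# pred_y, secs = timed(odeint, func, batch_y0, batch_t, sync=torch.cuda.synchronize)
# print(f"Epoch: {itr} | ODEint time {secs}")
```

With this helper the reported number is a mean over several calls, so it is not directly comparable to the single-call times in the screenshots.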

Can you please help me understand this behavior? I have attached screenshots of the computation times from running the demo on the different machines.