Not able to see latest messages on kowl?

I am using kowl to read messages, and are currently unable to read the latest messages I've tried increasing the ram, but it does not seem like it helps.

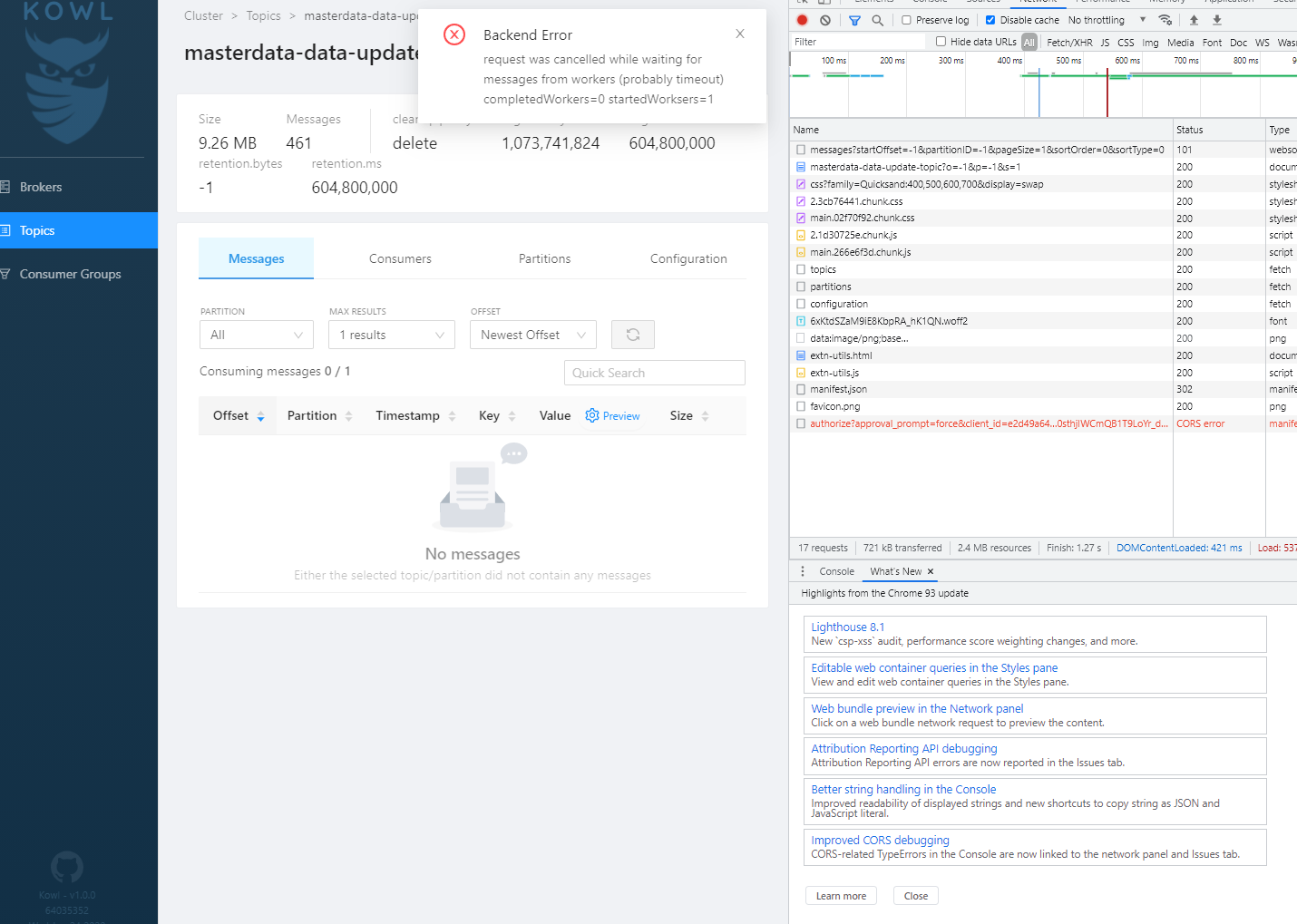

I keep running into this backend error message? what is going on?

Hello @Keerthikan , this looks like a very old Kowl version. Please try the latest version and see if the issue persists.

I tried with a newer version 1.4.0

It does not say backend issue, but I never retrieve my messages.

@Keerthikan Is it the same if you choose more results and does that also happen with other topics? I've seen such an issue only once and we haven't yet figured out what's causing it. Our assumption is that these topics may have special records (such as aborted commits or so). Some more context would be nice

It seems like it is not able to retrieve that latest one. It is able to retrieve all the one before that, if I say Newest-100 for instance, I can get around 89.

What I have send in, is a big message, like 5000 rows, and I can see it has been received due to the incremented value of received messages, but cannot read it in kowl.

@Keerthikan Do you happen to know how big that specific record is? I'm wondering if it acceeds our Kafka client's max config in regards to the size

Just above 1 mb

@weeco is there something I can do? change a config somewhere and try it out?

Hey @Keerthikan , I'm unsure why this happens. I have checked the logic multiple times and couldn't find an issue with that (but it's rather complex admittedly). It proved multiple times to work just fine.

Ideally I'd be able to reproduce this - if given access to a topic/cluster where this can be reproduced is possible, I'd be very happy to have a look. Otherwise I could offer to add more debug log messages around this, but that could mean quite some effort for both of us until we figured out what's going on.

I'm facing the same issue running latest version of kowl + kafka on kubernetes. Interesting fact is that a similar version of the same setup in docker compose works fine.

I was able to fix the issue specific happening in kubernetes.

The problem was that the messages are loaded using web sockets (wss) and I was using kowl behind an ingress controller that didn't enable it by default. I'm using contour, so the way to fix it was to add enableWebsockets: true ingress yaml.

@Keerthikan Looking at your provided screenshot I wonder whether this is indeed a Kowl issue or not.

So on your first screenshot you are using start offset: Newest . Which means it will only return messages that will arrive after you started the request.

The second screenshot shows that no request has been sent to fetch messages (you can see that in the network tab). Can you provide an update whether you still have this issue?

I do still have this issue, it does however sometime resolve when I use incognito, remove cache stuff, restart browser stuff and etc.

It seems like its stuck in some state it cannot get out of, I am not sure whether me using incognito helps?

Hmm @Keerthikan , tough to debug remotely. Maybe it is interfering with a browser plugin? I've seen wild cases where uBlock blocked some requests because topic names contained URLs that looked like ads. E.g. "analytics" etc.?

Further screenshots that show the requests / websocket messages etc. would help I think

This is a bug introduced with version 1.5. I tried with version 1.4 of Kowl and it works properly.

Hi, I have simmilar behaviour Kowl sometimes can't read any messages, sometimes read them partially only(i.e - there are 10 messages in topic but only 2 or 3 are displayed), some logs from the docker containers running when it happens.

kowl_1 | {"level":"info","ts":"2022-03-17T09:30:58.326Z","msg":"metadata update triggered","source":"kafka_client","why":"client initialization"}

kowl_1 | {"level":"info","ts":"2022-03-17T09:30:58.334Z","msg":"assigning partitions","source":"kafka_client","why":"new assignments from direct consumer","how":"assigning everything new, keeping current assignment","input":{"kafka_connect_studies.kafka_connect_studies.users":{"0":{"At":0,"Epoch":-1,"CurrentEpoch":0}}}}

schema-registry | [2022-03-17 10:30:58,350] INFO 172.18.0.5 - - [17/Mar/2022:09:30:58 +0000] "GET /schemas/ids/5 HTTP/1.1" 200 139 "-" "Kowl" GETsT (io.confluent.rest-utils.requests)

schema-registry | [2022-03-17 10:30:58,359] INFO 172.18.0.5 - - [17/Mar/2022:09:30:58 +0000] "GET /schemas/ids/6 HTTP/1.1" 200 556 "-" "Kowl" GETsT (io.confluent.rest-utils.requests)

kowl_1 | panic: runtime error: index out of range [0] with length 0

kowl_1 |

kowl_1 | goroutine 139 [running]:

kowl_1 | github.com/cloudhut/kowl/backend/pkg/kafka.(*deserializer).deserializePayload(0xc000500fd0, {0xc0002dd636, 0x6, 0xc000555d1c}, {0xc000188000, 0x31}, 0xc0000a9320)

kowl_1 | /app/pkg/kafka/deserializer.go:143 +0xe25

kowl_1 | github.com/cloudhut/kowl/backend/pkg/kafka.(*deserializer).DeserializeRecord(0xc000555de0, 0xc0005aa630)

kowl_1 | /app/pkg/kafka/deserializer.go:103 +0xce

kowl_1 | github.com/cloudhut/kowl/backend/pkg/kafka.(*Service).startMessageWorker(0xc000500c00, {0x12efb90, 0xc0005a74c0}, 0xc0004ccf48, 0x11a3140, 0x6e90c6, 0xc0000a9320)

kowl_1 | /app/pkg/kafka/consumer_worker.go:38 +0x272

kowl_1 | created by github.com/cloudhut/kowl/backend/pkg/kafka.(*Service).FetchMessages

kowl_1 | /app/pkg/kafka/consumer.go:119 +0x38b

kafka-debezium-playground_kowl_1 exited with code 2

kowl_1 | {"level":"info","ts":"2022-03-17T09:30:59.149Z","msg":"successfully connected to kafka cluster","advertised_broker_count":1,"topic_count":9,"controller_id":1,"kafka_version":"v3.0"}

kowl_1 | {"level":"info","ts":"2022-03-17T09:30:59.149Z","msg":"creating schema registry client and testing connectivity"}

schema-registry | [2022-03-17 10:30:59,153] INFO 172.18.0.5 - - [17/Mar/2022:09:30:59 +0000] "GET /subjects HTTP/1.1" 200 83 "-" "Kowl" GETsT (io.confluent.rest-utils.requests)

kowl_1 | {"level":"info","ts":"2022-03-17T09:30:59.152Z","msg":"successfully tested schema registry connectivity"}

kowl_1 | {"level":"info","ts":"2022-03-17T09:30:59.152Z","msg":"creating Kafka connect HTTP clients and testing connectivity to all clusters"}

kowl_1 | {"level":"info","ts":"2022-03-17T09:30:59.155Z","msg":"tested Kafka connect cluster connectivity","successful_clusters":1,"failed_clusters":0}

kowl_1 | {"level":"info","ts":"2022-03-17T09:30:59.155Z","msg":"successfully create Kafka connect service"}

kowl_1 | {"level":"info","ts":"2022-03-17T09:30:59.229Z","msg":"Server listening on address","address":"[::]:8080","port":8080}

Any updates on this issue?

I'm facing it as well running on the latest image (master-8fcce39), but I did experience it also with older kowl versions (starting with version 1.5)

To help you locate the issue, I did some experiments with different serializer setups. I can reproduce it when both message key and value are serialized with Avro (backed by a schema registry). It seems like the issue is somehow related to key deserialization, messages without key schema are shown correctly.

Here is the container output:

{"level":"info","ts":"2022-07-18T15:34:05.604Z","msg":"Server listening on address","address":"[::]:9001","port":9001}

{"level":"info","ts":"2022-07-18T15:35:20.362Z","msg":"immediate metadata update triggered","source":"kafka_client","why":"client initialization"}

{"level":"info","ts":"2022-07-18T15:35:20.365Z","msg":"assigning partitions","source":"kafka_client","why":"new assignments from direct consumer","how":"assigning everything new, keeping current assignment","input":"local-demotopic-v1[3{0 e-1 ce0} 4{0 e-1 ce0} 2{0 e-1 ce0} 1{0 e-1 ce0}]"}

panic: runtime error: index out of range [0] with length 0

goroutine 157 [running]:

github.com/redpanda-data/console/backend/pkg/kafka.(*deserializer).deserializePayload(0xc00007ffc0, {0xc0000f2c8e, 0x6, 0x6}, {0xc00003e390, 0x12}, 0xc00002f140?)

/go/pkg/mod/github.com/redpanda-data/console/[email protected]/pkg/kafka/deserializer.go:152 +0xe25

github.com/redpanda-data/console/backend/pkg/kafka.(*deserializer).DeserializeRecord(0xc0016cdde0?, 0xc0002fc240)

/go/pkg/mod/github.com/redpanda-data/console/[email protected]/pkg/kafka/deserializer.go:112 +0xce

github.com/redpanda-data/console/backend/pkg/kafka.(*Service).startMessageWorker(0xc00007fc00, {0x6e5aff8, 0xc0004952c0}, 0x0?, 0x6ca5ef8, 0x0?, 0xc00002f140)

/go/pkg/mod/github.com/redpanda-data/console/[email protected]/pkg/kafka/consumer_worker.go:47 +0x26b

created by github.com/redpanda-data/console/backend/pkg/kafka.(*Service).FetchMessages

/go/pkg/mod/github.com/redpanda-data/console/[email protected]/pkg/kafka/consumer.go:128 +0x3d3

@gscher Thanks for the additional input. I'm willed to debug and sort this out. If I'm able to reproduce the issue, it would be a big enabler to get this fixed.

Do you have a chance to provide the avro schema + a serialized payload which would fail? Or even write a Go test that would fail to deserialize the record? I'm sorry that I don't have an immediate idea what the issue is and that we require your additional input on this - I know this requires time and might be annoying to get a solution for this.

I think I have identified the issue. It has to do with Avro's variable length zig-zag coding and its resulting byte values.

My Avro key schema is just a simple long:

{

"type": "long"

}

Assume I want to serialize long 5 as my key, my schema is associated with ID 3, then I would produce a byte array of the following form:

byte[6] = [ 0, 0, 0, 0, 3, 10]

Trying to deserialize that in the RedPanda Console brings me to the deserializePayload method in deserializer.go to the if-block starting at line 149. The condition matches as I have the magic Avro byte. Also the schema ID is correctly extracted.

However, the TrimLeft operation would remove certain whitespaces, line feeds, etc. In my case, byte 10 corresponds to Ascii Line Feed and therefore, my actual payload value is removed.

This leads to the index out of range we see in the error logs.

I'm not sure, whether it is safe to completely get rid of the TrimLeft operation, but it definitely needs to deal with such cases when actual payload values end up being encoded as whitespace characters.

@gscher This analysis is amazing, thanks so much for investigating and sharing! I think it's safe to remove the whitespace trim. We still have to remove the first 5 bytes, but we shouldn't trim whitespaces when deserializing the Avro payload. Do you want to file a PR for that so that you can take all the credits for the fix? :).

Happy to do that as well. Thanks again for figuring this out, amazing community work!

Edit: I had a look again and I missed that line 149 is the check for the JSON check. I guess we should add an condition there whether trimmed[0] is within the bounds of the array. But the issue is clear now thanks to your analysis.

@weeco Yes, I saw that as well while testing. The thing is that Avro payloads also run through this code block, but skip it based on the startsWithJSON variable. We can either avoid entering the code block for Avro payloads (the question is how, as the conditions are basically the same?), or avoid using TrimLeft here, but this might have consequences for real JSON payloads.

@gscher Based on your suggestion I committed https://github.com/redpanda-data/console/commit/044a0b37afaeadc4f920f3fe5c83d757e3af5453 . Maybe you can give that a run. If you use a docker image it will take some time until it's available due to the cross-platform builds with QEMU.

Looks good to me, that's exactly what I did when I was debugging it. Thanks for fixing it :)