Implement a "data flow" screen

Hello, and thanks for Kowl! First of all, it looks great and works fast. Thanks for the experience.

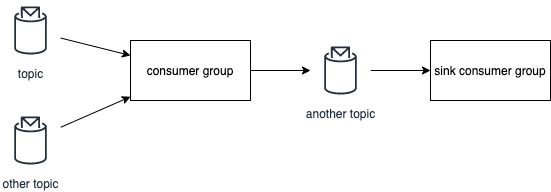

I am missing a screen that would combine all known consumer groups and topics and form visual chains of information.

Something like this (but much nicer):

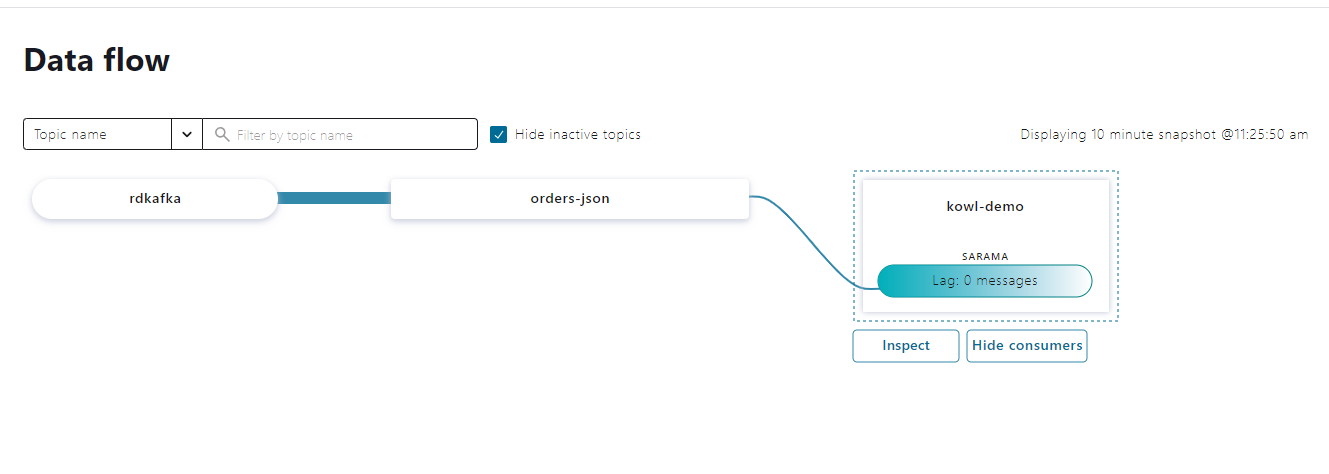

A similar feature called "Data flow" is available on confluent.cloud.
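A rough sketch of how such chains could be formed once the edges are known. It assumes we already have directed edges like producer → topic, topic → consumer group, and consumer group → topic (the last one only exists if we somehow know what a group's application writes to, which is exactly the open question below). The node names are hypothetical; the function simply enumerates every path that starts at a node with no incoming edge.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Edge = Tuple[str, str]


def build_chains(edges: Iterable[Edge]) -> List[List[str]]:
    """Enumerate every path from a source node (no incoming edge) until
    a node has no further unvisited successors."""
    graph: Dict[str, List[str]] = defaultdict(list)
    nodes, has_incoming = set(), set()
    for src, dst in edges:
        graph[src].append(dst)
        nodes.update((src, dst))
        has_incoming.add(dst)

    chains: List[List[str]] = []

    def walk(node: str, path: List[str]) -> None:
        # Skip successors already on the path so a cycle cannot recurse forever.
        successors = [n for n in graph.get(node, []) if n not in path]
        if not successors:
            chains.append(path)
            return
        for nxt in successors:
            walk(nxt, path + [nxt])

    for source in sorted(nodes - has_incoming):
        walk(source, [source])
    return chains
```

A topic read by two groups yields two chains that share a prefix, which is the branching a diagram would need to draw.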

Hi @Zaijo , I'm glad you like Kowl.

I like your feature request, however I'm not entirely sure whether this is possible for us. By default, Kafka doesn't store anything like the producer's client ID alongside the messages in a topic. I've seen producers that store metadata (such as the producer's service name) in the message headers, but that's entirely up to the producer and probably varies a lot across Kowl users.
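To illustrate the header approach: if producers opted into a convention, sampled records could be mapped to producer names per topic. This is only a sketch; the header name `producer-service` is hypothetical, and records are modelled as `(topic, headers)` pairs where headers are a list of `(key, value-bytes)` tuples rather than objects from any particular client library.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Optional, Sequence, Set, Tuple

Header = Tuple[str, Optional[bytes]]

# Hypothetical convention: producers that opt in set this header on every message.
PRODUCER_HEADER = "producer-service"


def producer_from_headers(headers: Optional[Sequence[Header]]) -> Optional[str]:
    """Return the producer name from the agreed header, or None if absent."""
    if not headers:
        return None
    for key, value in headers:
        if key == PRODUCER_HEADER and value is not None:
            return value.decode("utf-8", errors="replace")
    return None


def producers_per_topic(
    records: Iterable[Tuple[str, Optional[List[Header]]]]
) -> Dict[str, Set[str]]:
    """Collect producer names per topic from (topic, headers) samples."""
    result: Dict[str, Set[str]] = defaultdict(set)
    for topic, headers in records:
        name = producer_from_headers(headers)
        if name is not None:
            result[topic].add(name)
    return dict(result)
```

Messages without the header are silently ignored, which is exactly the weakness mentioned above: it only works for producers that follow the convention.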

Do you happen to know where Confluent gets the producers' information for each topic from or do you have an idea how to solve this?

Hi @weeco, I don't know. I just saw that Confluent made it work. I was hoping that information about which producers are actively writing to a topic is stored somewhere.

Just tested this on my own and I'm still wondering how it works in the backend. Definitely a nice feature!

If individual processors published their topology descriptions via Kafka Streams' `Topology#describe()`, we could diagram the complete flow. But that would require more configuration and access to information outside the broker itself.
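As a sketch of what could be extracted from such a description: the function below pulls input and output topics out of the text form of a `TopologyDescription`. It assumes the string format lines up like `Source: NAME (topics: [a, b])` and `Sink: NAME (topic: c)`; that format is not a stable contract and may differ between Kafka Streams versions. Topics that are both written and read inside the same topology (such as repartition topics) are treated as internal and dropped.

```python
import re
from typing import Set, Tuple

# Assumed text format of TopologyDescription#toString(); may vary by version.
SOURCE_RE = re.compile(r"Source:\s+\S+\s+\(topics:\s*\[([^\]]*)\]\)")
SINK_RE = re.compile(r"Sink:\s+\S+\s+\(topic:\s*([^)]+)\)")


def topics_from_description(text: str) -> Tuple[Set[str], Set[str]]:
    """Return (external input topics, external output topics)."""
    sources: Set[str] = set()
    sinks: Set[str] = set()
    for m in SOURCE_RE.finditer(text):
        sources.update(t.strip() for t in m.group(1).split(",") if t.strip())
    for m in SINK_RE.finditer(text):
        sinks.add(m.group(1).strip())
    # A topic both written and read in one topology is internal plumbing.
    return sources - sinks, sinks - sources


SAMPLE = """Topologies:
   Sub-topology: 0
    Source: KSTREAM-SOURCE-0000000000 (topics: [orders-json])
      --> KSTREAM-SINK-0000000001
    Sink: KSTREAM-SINK-0000000001 (topic: app-repartition)
      <-- KSTREAM-SOURCE-0000000000
   Sub-topology: 1
    Source: KSTREAM-SOURCE-0000000002 (topics: [app-repartition])
      --> KSTREAM-SINK-0000000003
    Sink: KSTREAM-SINK-0000000003 (topic: orders-enriched)
      <-- KSTREAM-SOURCE-0000000002
"""
```

Pattern-based sources (`topicPattern`) and sinks with a dynamic topic extractor are not matched by these regexes and would need extra handling.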

I made a fork of kafka-streams-viz (https://zaijo.github.io/kafka-streams-viz/) that simplifies the topology diagram. Moreover, if each active processor publishes its topology description via HTTP GET, we can combine the diagrams into a single "flow diagram" (experiment on this branch: https://github.com/Zaijo/kafka-streams-viz/tree/feature/combine-diagrams).
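A minimal sketch of the combining step, assuming each application's input and output topics have already been extracted from its description. The `/topology` path in `fetch_description` is hypothetical; the point is that per-application diagrams join wherever they share a topic name.

```python
from typing import Dict, List, Set, Tuple
from urllib.request import urlopen


def fetch_description(base_url: str, timeout: float = 5.0) -> str:
    """Fetch one app's topology description (hypothetical /topology endpoint)."""
    with urlopen(f"{base_url}/topology", timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def combine_flows(apps: Dict[str, Tuple[Set[str], Set[str]]]) -> List[Tuple[str, str]]:
    """Merge {app: (input_topics, output_topics)} into one edge list.

    Topic nodes are keyed by name only, so two apps that share a topic
    end up connected through the same node.
    """
    edges = set()
    for app, (inputs, outputs) in apps.items():
        for topic in inputs:
            edges.add((f"topic:{topic}", f"app:{app}"))
        for topic in outputs:
            edges.add((f"app:{app}", f"topic:{topic}"))
    return sorted(edges)
```

Using a set before sorting keeps the result stable and free of duplicates when several instances of the same app report identical descriptions.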

> If individual processors published their topology descriptions via KStreams Topology#describe, we could diagram the complete flow information. But that would require some more configuration and access to information outside of the broker itself.

Right, my screenshot above did not require any of this additional information. The only missing piece is how Confluent figured out that the client ID "rdkafka" produces to the topic "orders-json". I'll try to figure it out soonish :)

I closed the duplicate issue I created yesterday in favor of this one. I didn't find this issue at first because it doesn't mention data lineage.

This would be an excellent feature to include in Kowl. There are a few key data governance features that Kowl could provide on top of event-driven systems that would make it even more useful. Organizations are looking for ways to apply data governance to event streaming, and there are not many options. Maybe after investigating these features further, I'll create a separate ticket for each, if that makes sense.

As for data lineage, maybe there is a way to track this using only metadata that producers and consumers already send, such as these properties:

- `client.id`
- `consumer.id`
- `group.id`

Having some or all of these would be enough to draw a simple "data flow" diagram.
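For example, given observed `(client.id, topic)` pairs for producers and `(group.id, topic)` pairs for consumers, a simple diagram can be emitted as Graphviz DOT. This sketch assumes those pairs have already been collected somehow; where the producer pairs would come from is still the open question in this thread.

```python
from typing import Iterable, Tuple


def _quote(s: str) -> str:
    """Quote a string as a DOT ID, escaping backslashes and double quotes."""
    return '"' + s.replace("\\", "\\\\").replace('"', '\\"') + '"'


def to_dot(
    produces: Iterable[Tuple[str, str]],
    consumes: Iterable[Tuple[str, str]],
) -> str:
    """produces: (client_id, topic) pairs; consumes: (group_id, topic) pairs."""
    produces, consumes = set(produces), set(consumes)
    lines = ["digraph dataflow {", "  rankdir=LR;"]
    topics = sorted({t for _, t in produces} | {t for _, t in consumes})
    for t in topics:
        lines.append(f"  {_quote('topic:' + t)} [shape=box, label={_quote(t)}];")
    for client, t in sorted(produces):
        lines.append(f"  {_quote('client:' + client)} -> {_quote('topic:' + t)};")
    for group, t in sorted(consumes):
        lines.append(f"  {_quote('topic:' + t)} -> {_quote('group:' + group)};")
    lines.append("}")
    return "\n".join(lines)
```

The output can be rendered with `dot -Tsvg`; prefixing node IDs with `client:`, `topic:` and `group:` keeps a client ID and a topic that happen to share a name from collapsing into one node.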

Kafka's JMX interface also exposes details on consumers and producers (though it seems a quota must be set up first for some reason). This link is the only thing I've found so far that shows how to get details for connected producers: https://stackoverflow.com/a/48997761/10619940
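If the broker registers per-client quota MBeans, their object names alone reveal which client IDs are producing. The sketch below assumes names shaped like `kafka.server:type=Produce,client-id=<id>` (optionally with a `user=` key); the exact MBeans depend on the Kafka version and quota configuration, and they identify producers only, not which topics they write to.

```python
from typing import Dict, Iterable, Set, Tuple


def parse_object_name(name: str) -> Tuple[str, Dict[str, str]]:
    """Split a JMX ObjectName string 'domain:k1=v1,k2=v2' into its parts.

    Naive: does not handle quoted values that contain commas.
    """
    domain, _, props = name.partition(":")
    pairs: Dict[str, str] = {}
    for part in props.split(","):
        key, sep, value = part.partition("=")
        if sep:
            pairs[key.strip()] = value.strip()
    return domain, pairs


def producer_client_ids(object_names: Iterable[str]) -> Set[str]:
    """Collect client IDs from assumed kafka.server:type=Produce quota MBeans."""
    ids: Set[str] = set()
    for name in object_names:
        domain, props = parse_object_name(name)
        if domain == "kafka.server" and props.get("type") == "Produce":
            client = props.get("client-id")
            if client:  # skip entries with an empty client-id
                ids.add(client)
    return ids
```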

With Redpanda, this information could be more easily accessible.