ioredis

Dockerized Redis cluster is failing with ElastiCache configuration

Hi team, I would love some help.

Versions:

- ioredis: 4.27.6

- node: v14.17.0

- Docker Desktop: 3.5.0 (66024)

- OS: macOS Catalina, v10.15.7

I have an AWS ElastiCache cluster configured with TLS encryption, and I was able to set up the Redis client and use it successfully by following the README instructions:

import { Cluster } from 'ioredis';

const redisClient = new Cluster([{ host: process.env.REDIS_HOST, port: process.env.REDIS_PORT || 6379 }], {
  slotsRefreshTimeout: 20000,
  // Pass the hostname through unresolved, as the ioredis README suggests for ElastiCache with TLS
  dnsLookup: (hostname: string, callback) => callback(null, hostname),
  redisOptions: {
    showFriendlyErrorStack: true,
    tls: {},
    keyPrefix: 'some-prefix-',
  },
});

However, when I run locally against a dockerized Redis cluster for testing or development, ioredis consistently throws `Error: Connection is closed`, regardless of the Docker configuration:

err: ClusterAllFailedError: Failed to refresh slots cache.

at tryNode (/Users/or/code/device-care-api/node_modules/ioredis/built/cluster/index.js:396:31)

at /Users/or/code/device-care-api/node_modules/ioredis/built/cluster/index.js:413:21

at /Users/or/code/device-care-api/node_modules/ioredis/built/cluster/index.js:671:24

at run (/Users/or/code/device-care-api/node_modules/ioredis/built/utils/index.js:156:22)

at tryCatcher (/Users/or/code/device-care-api/node_modules/standard-as-callback/built/utils.js:12:23)

at /Users/or/code/device-care-api/node_modules/standard-as-callback/built/index.js:33:51

at processTicksAndRejections (internal/process/task_queues.js:95:5) {

lastNodeError: Error: Connection is closed.

at processTicksAndRejections (internal/process/task_queues.js:95:5)

After turning on debug mode for ioredis, I could see that the underlying failure is an ETIMEDOUT error on connect.

I'm adding the complete details in the comments and would love some help understanding what I am missing here.

Using grokzen/redis-cluster:

versions

- ioredis: 4.27.6

- Docker Desktop: 3.5.0 (66024)

- OS: macOS Catalina, v10.15.7

- node: v14.17.0

- redis (docker): v6.2.0, v6.0.11, v5.0.11

error

error on redis client {

err: ClusterAllFailedError: Failed to refresh slots cache.

at tryNode (/Users/or/code/device-care-api/node_modules/ioredis/built/cluster/index.js:396:31)

at /Users/or/code/device-care-api/node_modules/ioredis/built/cluster/index.js:413:21

at /Users/or/code/device-care-api/node_modules/ioredis/built/cluster/index.js:671:24

at run (/Users/or/code/device-care-api/node_modules/ioredis/built/utils/index.js:156:22)

at tryCatcher (/Users/or/code/device-care-api/node_modules/standard-as-callback/built/utils.js:12:23)

at /Users/or/code/device-care-api/node_modules/standard-as-callback/built/index.js:33:51

at processTicksAndRejections (internal/process/task_queues.js:95:5) {

lastNodeError: Error: Connection is closed.

at processTicksAndRejections (internal/process/task_queues.js:95:5)

},

host: 'localhost',

port: 7000,

defaultOptions: {

enableReadyCheck: true,

slotsRefreshTimeout: 20000,

dnsLookup: [Function: dnsLookup],

redisOptions: {

showFriendlyErrorStack: true,

tls: {},

keyPrefix: 'some-prefix-'

}

}

}

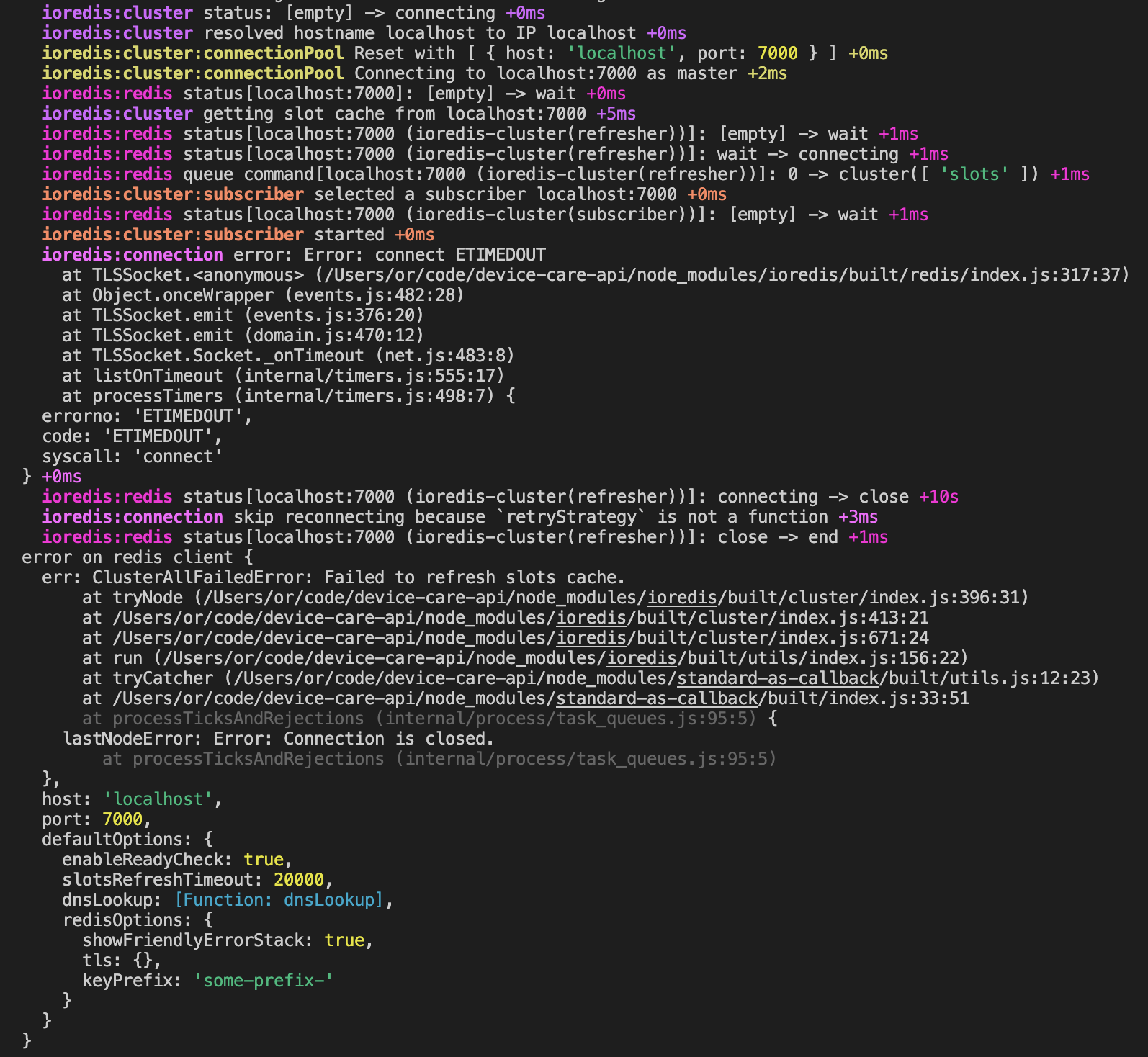

DEBUG=ioredis:*

ioredis:cluster status: [empty] -> connecting +0ms

ioredis:cluster resolved hostname localhost to IP localhost +1ms

ioredis:cluster:connectionPool Reset with [ { host: 'localhost', port: 7000 } ] +0ms

ioredis:cluster:connectionPool Connecting to localhost:7000 as master +1ms

ioredis:redis status[localhost:7000]: [empty] -> wait +0ms

ioredis:cluster getting slot cache from localhost:7000 +5ms

ioredis:redis status[localhost:7000 (ioredis-cluster(refresher))]: [empty] -> wait +1ms

ioredis:redis status[localhost:7000 (ioredis-cluster(refresher))]: wait -> connecting +1ms

ioredis:redis queue command[localhost:7000 (ioredis-cluster(refresher))]: 0 -> cluster([ 'slots' ]) +0ms

ioredis:cluster:subscriber selected a subscriber localhost:7000 +0ms

ioredis:redis status[localhost:7000 (ioredis-cluster(subscriber))]: [empty] -> wait +0ms

ioredis:cluster:subscriber started +0ms

ioredis:connection error: Error: connect ETIMEDOUT

at TLSSocket.<anonymous> (/Users/or/code/device-care-api/node_modules/ioredis/built/redis/index.js:317:37)

at Object.onceWrapper (events.js:482:28)

at TLSSocket.emit (events.js:376:20)

at TLSSocket.emit (domain.js:470:12)

at TLSSocket.Socket._onTimeout (net.js:483:8)

at listOnTimeout (internal/timers.js:555:17)

at processTimers (internal/timers.js:498:7) {

errorno: 'ETIMEDOUT',

code: 'ETIMEDOUT',

syscall: 'connect'

} +0ms

ioredis:redis status[localhost:7000 (ioredis-cluster(refresher))]: connecting -> close +10s

ioredis:connection skip reconnecting because `retryStrategy` is not a function +2ms

ioredis:redis status[localhost:7000 (ioredis-cluster(refresher))]: close -> end +0ms
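One possible lead from the trace above: the ETIMEDOUT is raised from a `TLSSocket`, so the client is attempting a TLS handshake because `tls: {}` is still set in `redisOptions`. The compose files in this thread do not set any TLS options, so if the local nodes speak plain TCP (an assumption, not something the logs confirm), the handshake would never complete and the connect timeout would fire. A minimal sketch of making TLS conditional is below. `buildClusterOptions` and the `REDIS_TLS` variable are hypothetical names used only for illustration, and the `dnsLookup` override is left out because it is only needed for the ElastiCache setup.

```typescript
// Local stand-ins for the option shapes used in this thread (not the ioredis types).
interface RedisOptionsSketch {
  showFriendlyErrorStack: boolean;
  keyPrefix: string;
  tls?: Record<string, unknown>;
}

interface ClusterOptionsSketch {
  slotsRefreshTimeout: number;
  redisOptions: RedisOptionsSketch;
}

// Hypothetical helper: same options as the ElastiCache client,
// but `tls` is only present when the caller asks for it.
function buildClusterOptions(useTls: boolean): ClusterOptionsSketch {
  const redisOptions: RedisOptionsSketch = {
    showFriendlyErrorStack: true,
    keyPrefix: 'some-prefix-',
  };
  if (useTls) {
    // An empty object is enough to switch ioredis to a TLS socket.
    redisOptions.tls = {};
  }
  return { slotsRefreshTimeout: 20000, redisOptions };
}

// Possible usage (REDIS_TLS is an assumed environment variable):
// const client = new Cluster(nodes, buildClusterOptions(process.env.REDIS_TLS === 'true'));
```

With this shape, the ElastiCache deployment would set the flag and the local Docker cluster would leave it unset, so the same code path serves both.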

ioredis screenshot

docker-compose.yml file

version: '3'
services:
  redis-cluster:
    image: grokzen/redis-cluster:6.2.0
    ports:
      - 7000-7005:7000-7005
    environment:
      REDIS_CLUSTER_IP: '0.0.0.0'

docker logs

-- IP Before trim: '192.168.0.2 '

-- IP Before split: '192.168.0.2'

-- IP After trim: '192.168.0.2'

Using redis-cli to create the cluster

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 192.168.0.2:7004 to 192.168.0.2:7000

Adding replica 192.168.0.2:7005 to 192.168.0.2:7001

Adding replica 192.168.0.2:7003 to 192.168.0.2:7002

>>> Trying to optimize slaves allocation for anti-affinity

[WARNING] Some slaves are in the same host as their master

M: b8f2f767063f70f379d9aa9911fe69e270cad21c 192.168.0.2:7000

slots:[0-5460] (5461 slots) master

M: 84cb51e51e94bc7483858f65b08f8675325d82f3 192.168.0.2:7001

slots:[5461-10922] (5462 slots) master

M: ff025bc5dd9bcdbbc6c14772a5fcdd962f67ce8e 192.168.0.2:7002

slots:[10923-16383] (5461 slots) master

S: 5cfdbf9a3584b75694176ab8c893510ffd0076ce 192.168.0.2:7003

replicates ff025bc5dd9bcdbbc6c14772a5fcdd962f67ce8e

S: 32b1f50ddd7b7aa0c96b161c6e9252db300b1536 192.168.0.2:7004

replicates b8f2f767063f70f379d9aa9911fe69e270cad21c

S: a68623916a74e34020235d5acdca5885c1151559 192.168.0.2:7005

replicates 84cb51e51e94bc7483858f65b08f8675325d82f3

Can I set the above configuration? (type 'yes' to accept): >>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

bash: warning: setlocale: LC_ALL: cannot change locale (en_US.UTF-8)

>>> Performing Cluster Check (using node 192.168.0.2:7000)

M: b8f2f767063f70f379d9aa9911fe69e270cad21c 192.168.0.2:7000

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: a68623916a74e34020235d5acdca5885c1151559 192.168.0.2:7005

slots: (0 slots) slave

replicates 84cb51e51e94bc7483858f65b08f8675325d82f3

M: ff025bc5dd9bcdbbc6c14772a5fcdd962f67ce8e 192.168.0.2:7002

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 5cfdbf9a3584b75694176ab8c893510ffd0076ce 192.168.0.2:7003

slots: (0 slots) slave

replicates ff025bc5dd9bcdbbc6c14772a5fcdd962f67ce8e

S: 32b1f50ddd7b7aa0c96b161c6e9252db300b1536 192.168.0.2:7004

slots: (0 slots) slave

replicates b8f2f767063f70f379d9aa9911fe69e270cad21c

M: 84cb51e51e94bc7483858f65b08f8675325d82f3 192.168.0.2:7001

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

==> /var/log/supervisor/redis-1.log <==

45:M 15 Jul 2021 02:38:34.243 * Ready to accept connections

45:M 15 Jul 2021 02:38:36.262 # configEpoch set to 1 via CLUSTER SET-CONFIG-EPOCH

45:M 15 Jul 2021 02:38:36.339 # IP address for this node updated to 192.168.0.2

45:M 15 Jul 2021 02:38:37.271 * Replica 192.168.0.2:7004 asks for synchronization

45:M 15 Jul 2021 02:38:37.271 * Partial resynchronization not accepted: Replication ID mismatch (Replica asked for '0e3efd8423f2d7ffd021e7bf8d0e043561516db8', my replication IDs are '65c1bd113358a60525c9e2346e64f331c2ef70f7' and '0000000000000000000000000000000000000000')

45:M 15 Jul 2021 02:38:37.271 * Replication backlog created, my new replication IDs are '92209a72fc1fde0ab2b87e0c143c67f4f0d3c6a7' and '0000000000000000000000000000000000000000'

45:M 15 Jul 2021 02:38:37.271 * Starting BGSAVE for SYNC with target: disk

45:M 15 Jul 2021 02:38:37.271 * Background saving started by pid 78

78:C 15 Jul 2021 02:38:37.273 * DB saved on disk

78:C 15 Jul 2021 02:38:37.273 * RDB: 0 MB of memory used by copy-on-write

==> /var/log/supervisor/redis-2.log <==

47:M 15 Jul 2021 02:38:34.243 * Ready to accept connections

47:M 15 Jul 2021 02:38:36.262 # configEpoch set to 2 via CLUSTER SET-CONFIG-EPOCH

47:M 15 Jul 2021 02:38:36.449 # IP address for this node updated to 192.168.0.2

47:M 15 Jul 2021 02:38:37.270 * Replica 192.168.0.2:7005 asks for synchronization

47:M 15 Jul 2021 02:38:37.270 * Partial resynchronization not accepted: Replication ID mismatch (Replica asked for '261f6a002e431bf87cd6f5771ffb75f569e4e728', my replication IDs are '4be2f4fa4c4a21f1abd4eb379f155fd627572f0a' and '0000000000000000000000000000000000000000')

47:M 15 Jul 2021 02:38:37.270 * Replication backlog created, my new replication IDs are 'b80e1f22920b68b05c8e6c44fcc63975492e307d' and '0000000000000000000000000000000000000000'

47:M 15 Jul 2021 02:38:37.270 * Starting BGSAVE for SYNC with target: disk

47:M 15 Jul 2021 02:38:37.270 * Background saving started by pid 77

77:C 15 Jul 2021 02:38:37.273 * DB saved on disk

77:C 15 Jul 2021 02:38:37.273 * RDB: 0 MB of memory used by copy-on-write

==> /var/log/supervisor/redis-3.log <==

46:M 15 Jul 2021 02:38:34.248 * Ready to accept connections

46:M 15 Jul 2021 02:38:36.262 # configEpoch set to 3 via CLUSTER SET-CONFIG-EPOCH

46:M 15 Jul 2021 02:38:36.449 # IP address for this node updated to 192.168.0.2

46:M 15 Jul 2021 02:38:37.272 * Replica 192.168.0.2:7003 asks for synchronization

46:M 15 Jul 2021 02:38:37.273 * Partial resynchronization not accepted: Replication ID mismatch (Replica asked for '48975c9c4ee1575bff9f07d7c617788d017adee6', my replication IDs are 'f0eb5017ae3ecb8133ea4fe1b03dd6755449b75c' and '0000000000000000000000000000000000000000')

46:M 15 Jul 2021 02:38:37.273 * Replication backlog created, my new replication IDs are '8c579b92fe117cdd188bd46877af13905172661a' and '0000000000000000000000000000000000000000'

46:M 15 Jul 2021 02:38:37.274 * Starting BGSAVE for SYNC with target: disk

46:M 15 Jul 2021 02:38:37.274 * Background saving started by pid 79

79:C 15 Jul 2021 02:38:37.278 * DB saved on disk

79:C 15 Jul 2021 02:38:37.279 * RDB: 0 MB of memory used by copy-on-write

==> /var/log/supervisor/redis-4.log <==

43:M 15 Jul 2021 02:38:36.349 # IP address for this node updated to 192.168.0.2

43:S 15 Jul 2021 02:38:37.268 * Before turning into a replica, using my own master parameters to synthesize a cached master: I may be able to synchronize with the new master with just a partial transfer.

43:S 15 Jul 2021 02:38:37.268 * Connecting to MASTER 192.168.0.2:7002

43:S 15 Jul 2021 02:38:37.268 * MASTER <-> REPLICA sync started

43:S 15 Jul 2021 02:38:37.268 # Cluster state changed: ok

43:S 15 Jul 2021 02:38:37.268 * Non blocking connect for SYNC fired the event.

43:S 15 Jul 2021 02:38:37.270 * Master replied to PING, replication can continue...

43:S 15 Jul 2021 02:38:37.272 * Trying a partial resynchronization (request 48975c9c4ee1575bff9f07d7c617788d017adee6:1).

43:S 15 Jul 2021 02:38:37.276 * Full resync from master: 8c579b92fe117cdd188bd46877af13905172661a:0

43:S 15 Jul 2021 02:38:37.276 * Discarding previously cached master state.

==> /var/log/supervisor/redis-5.log <==

42:M 15 Jul 2021 02:38:36.348 # IP address for this node updated to 192.168.0.2

42:S 15 Jul 2021 02:38:37.268 * Before turning into a replica, using my own master parameters to synthesize a cached master: I may be able to synchronize with the new master with just a partial transfer.

42:S 15 Jul 2021 02:38:37.268 * Connecting to MASTER 192.168.0.2:7000

42:S 15 Jul 2021 02:38:37.268 * MASTER <-> REPLICA sync started

42:S 15 Jul 2021 02:38:37.268 # Cluster state changed: ok

42:S 15 Jul 2021 02:38:37.268 * Non blocking connect for SYNC fired the event.

42:S 15 Jul 2021 02:38:37.269 * Master replied to PING, replication can continue...

42:S 15 Jul 2021 02:38:37.270 * Trying a partial resynchronization (request 0e3efd8423f2d7ffd021e7bf8d0e043561516db8:1).

42:S 15 Jul 2021 02:38:37.272 * Full resync from master: 92209a72fc1fde0ab2b87e0c143c67f4f0d3c6a7:0

42:S 15 Jul 2021 02:38:37.272 * Discarding previously cached master state.

==> /var/log/supervisor/redis-6.log <==

44:M 15 Jul 2021 02:38:36.449 # IP address for this node updated to 192.168.0.2

44:S 15 Jul 2021 02:38:37.268 * Before turning into a replica, using my own master parameters to synthesize a cached master: I may be able to synchronize with the new master with just a partial transfer.

44:S 15 Jul 2021 02:38:37.268 * Connecting to MASTER 192.168.0.2:7001

44:S 15 Jul 2021 02:38:37.269 * MASTER <-> REPLICA sync started

44:S 15 Jul 2021 02:38:37.269 # Cluster state changed: ok

44:S 15 Jul 2021 02:38:37.269 * Non blocking connect for SYNC fired the event.

44:S 15 Jul 2021 02:38:37.269 * Master replied to PING, replication can continue...

44:S 15 Jul 2021 02:38:37.270 * Trying a partial resynchronization (request 261f6a002e431bf87cd6f5771ffb75f569e4e728:1).

44:S 15 Jul 2021 02:38:37.271 * Full resync from master: b80e1f22920b68b05c8e6c44fcc63975492e307d:0

44:S 15 Jul 2021 02:38:37.271 * Discarding previously cached master state.

==> /var/log/supervisor/redis-1.log <==

45:M 15 Jul 2021 02:38:37.353 * Background saving terminated with success

45:M 15 Jul 2021 02:38:37.354 * Synchronization with replica 192.168.0.2:7004 succeeded

==> /var/log/supervisor/redis-2.log <==

47:M 15 Jul 2021 02:38:37.351 * Background saving terminated with success

47:M 15 Jul 2021 02:38:37.352 * Synchronization with replica 192.168.0.2:7005 succeeded

==> /var/log/supervisor/redis-3.log <==

46:M 15 Jul 2021 02:38:37.356 * Background saving terminated with success

46:M 15 Jul 2021 02:38:37.357 * Synchronization with replica 192.168.0.2:7003 succeeded

==> /var/log/supervisor/redis-4.log <==

43:S 15 Jul 2021 02:38:37.357 * MASTER <-> REPLICA sync: receiving 175 bytes from master to disk

43:S 15 Jul 2021 02:38:37.357 * MASTER <-> REPLICA sync: Flushing old data

43:S 15 Jul 2021 02:38:37.357 * MASTER <-> REPLICA sync: Loading DB in memory

43:S 15 Jul 2021 02:38:37.358 * Loading RDB produced by version 6.2.0

43:S 15 Jul 2021 02:38:37.358 * RDB age 0 seconds

43:S 15 Jul 2021 02:38:37.358 * RDB memory usage when created 2.53 Mb

43:S 15 Jul 2021 02:38:37.358 * MASTER <-> REPLICA sync: Finished with success

43:S 15 Jul 2021 02:38:37.359 * Background append only file rewriting started by pid 83

43:S 15 Jul 2021 02:38:37.384 * AOF rewrite child asks to stop sending diffs.

83:C 15 Jul 2021 02:38:37.384 * Parent agreed to stop sending diffs. Finalizing AOF...

83:C 15 Jul 2021 02:38:37.384 * Concatenating 0.00 MB of AOF diff received from parent.

83:C 15 Jul 2021 02:38:37.384 * SYNC append only file rewrite performed

83:C 15 Jul 2021 02:38:37.384 * AOF rewrite: 0 MB of memory used by copy-on-write

43:S 15 Jul 2021 02:38:37.444 * Background AOF rewrite terminated with success

43:S 15 Jul 2021 02:38:37.444 * Residual parent diff successfully flushed to the rewritten AOF (0.00 MB)

43:S 15 Jul 2021 02:38:37.444 * Background AOF rewrite finished successfully

==> /var/log/supervisor/redis-5.log <==

42:S 15 Jul 2021 02:38:37.354 * MASTER <-> REPLICA sync: receiving 175 bytes from master to disk

42:S 15 Jul 2021 02:38:37.354 * MASTER <-> REPLICA sync: Flushing old data

42:S 15 Jul 2021 02:38:37.354 * MASTER <-> REPLICA sync: Loading DB in memory

42:S 15 Jul 2021 02:38:37.355 * Loading RDB produced by version 6.2.0

42:S 15 Jul 2021 02:38:37.355 * RDB age 0 seconds

42:S 15 Jul 2021 02:38:37.355 * RDB memory usage when created 2.59 Mb

42:S 15 Jul 2021 02:38:37.355 * MASTER <-> REPLICA sync: Finished with success

42:S 15 Jul 2021 02:38:37.356 * Background append only file rewriting started by pid 82

42:S 15 Jul 2021 02:38:37.381 * AOF rewrite child asks to stop sending diffs.

82:C 15 Jul 2021 02:38:37.381 * Parent agreed to stop sending diffs. Finalizing AOF...

82:C 15 Jul 2021 02:38:37.381 * Concatenating 0.00 MB of AOF diff received from parent.

82:C 15 Jul 2021 02:38:37.381 * SYNC append only file rewrite performed

82:C 15 Jul 2021 02:38:37.382 * AOF rewrite: 0 MB of memory used by copy-on-write

42:S 15 Jul 2021 02:38:37.442 * Background AOF rewrite terminated with success

42:S 15 Jul 2021 02:38:37.442 * Residual parent diff successfully flushed to the rewritten AOF (0.00 MB)

42:S 15 Jul 2021 02:38:37.442 * Background AOF rewrite finished successfully

==> /var/log/supervisor/redis-6.log <==

44:S 15 Jul 2021 02:38:37.352 * MASTER <-> REPLICA sync: receiving 175 bytes from master to disk

44:S 15 Jul 2021 02:38:37.352 * MASTER <-> REPLICA sync: Flushing old data

44:S 15 Jul 2021 02:38:37.352 * MASTER <-> REPLICA sync: Loading DB in memory

44:S 15 Jul 2021 02:38:37.354 * Loading RDB produced by version 6.2.0

44:S 15 Jul 2021 02:38:37.354 * RDB age 0 seconds

44:S 15 Jul 2021 02:38:37.354 * RDB memory usage when created 2.54 Mb

44:S 15 Jul 2021 02:38:37.354 * MASTER <-> REPLICA sync: Finished with success

44:S 15 Jul 2021 02:38:37.354 * Background append only file rewriting started by pid 81

44:S 15 Jul 2021 02:38:37.380 * AOF rewrite child asks to stop sending diffs.

81:C 15 Jul 2021 02:38:37.380 * Parent agreed to stop sending diffs. Finalizing AOF...

81:C 15 Jul 2021 02:38:37.380 * Concatenating 0.00 MB of AOF diff received from parent.

81:C 15 Jul 2021 02:38:37.380 * SYNC append only file rewrite performed

81:C 15 Jul 2021 02:38:37.380 * AOF rewrite: 0 MB of memory used by copy-on-write

44:S 15 Jul 2021 02:38:37.455 * Background AOF rewrite terminated with success

44:S 15 Jul 2021 02:38:37.455 * Residual parent diff successfully flushed to the rewritten AOF (0.00 MB)

44:S 15 Jul 2021 02:38:37.455 * Background AOF rewrite finished successfully

==> /var/log/supervisor/redis-1.log <==

45:M 15 Jul 2021 02:38:41.264 # Cluster state changed: ok

==> /var/log/supervisor/redis-2.log <==

47:M 15 Jul 2021 02:38:41.260 # Cluster state changed: ok

==> /var/log/supervisor/redis-3.log <==

46:M 15 Jul 2021 02:38:41.266 # Cluster state changed: ok
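A second thing worth checking, separate from TLS: the logs above show every node announcing itself as `192.168.0.2`, which is a container-internal address. Once ioredis receives the slot map it connects to the announced addresses, and on Docker Desktop for Mac container IPs are generally not routable from the host. ioredis has a `natMap` cluster option that translates announced `ip:port` pairs to reachable ones. The sketch below builds such a map for the published ports 7000-7005. `buildNatMap` is a hypothetical helper, and whether this applies to the failure in this thread is an assumption.

```typescript
// Shape of an ioredis natMap: announced "ip:port" -> address reachable from the client.
type NatMap = Record<string, { host: string; port: number }>;

// Hypothetical helper: maps each announced address to the same port on a reachable host.
function buildNatMap(announcedIp: string, ports: number[], reachableHost: string): NatMap {
  const natMap: NatMap = {};
  for (const port of ports) {
    natMap[`${announcedIp}:${port}`] = { host: reachableHost, port };
  }
  return natMap;
}

// Announced IP taken from the docker logs above; ports from the compose file.
const natMap = buildNatMap('192.168.0.2', [7000, 7001, 7002, 7003, 7004, 7005], '127.0.0.1');

// Would be passed alongside the other cluster options, for example:
// new Cluster([{ host: '127.0.0.1', port: 7000 }], { natMap, slotsRefreshTimeout: 20000 });
```

The announced IP can change between container restarts, so a hard-coded value like the one here would need to be kept in sync with the logs.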

Using bitnami/redis-cluster:

versions

- ioredis: 4.27.6

- Docker Desktop: 3.5.0 (66024)

- OS: macOS Catalina, v10.15.7

- node: v14.17.0

- redis (docker): v6.2

error

error on redis client {

err: ClusterAllFailedError: Failed to refresh slots cache.

at tryNode (/Users/or/code/device-care-api/node_modules/ioredis/built/cluster/index.js:396:31)

at /Users/or/code/device-care-api/node_modules/ioredis/built/cluster/index.js:413:21

at /Users/or/code/device-care-api/node_modules/ioredis/built/cluster/index.js:671:24

at run (/Users/or/code/device-care-api/node_modules/ioredis/built/utils/index.js:156:22)

at tryCatcher (/Users/or/code/device-care-api/node_modules/standard-as-callback/built/utils.js:12:23)

at /Users/or/code/device-care-api/node_modules/standard-as-callback/built/index.js:33:51

at processTicksAndRejections (internal/process/task_queues.js:95:5) {

lastNodeError: Error: Connection is closed.

at processTicksAndRejections (internal/process/task_queues.js:95:5)

},

host: 'localhost',

port: 9123,

defaultOptions: {

enableReadyCheck: true,

slotsRefreshTimeout: 20000,

dnsLookup: [Function: dnsLookup],

redisOptions: {

showFriendlyErrorStack: true,

tls: {},

keyPrefix: 'some-prefix-'

}

}

}

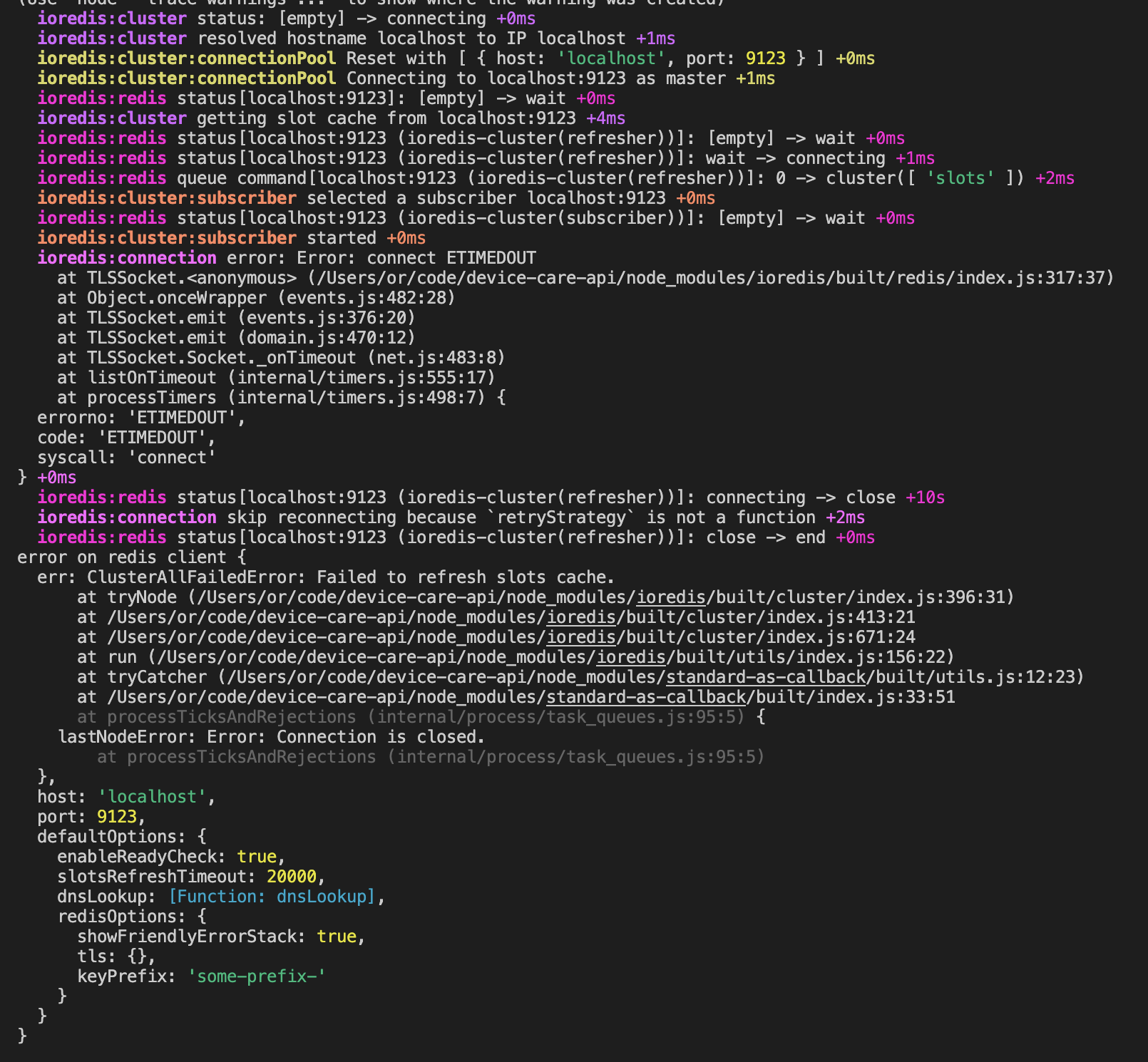

DEBUG=ioredis:*

ioredis:cluster status: [empty] -> connecting +0ms

ioredis:cluster resolved hostname localhost to IP localhost +1ms

ioredis:cluster:connectionPool Reset with [ { host: 'localhost', port: 9123 } ] +0ms

ioredis:cluster:connectionPool Connecting to localhost:9123 as master +1ms

ioredis:redis status[localhost:9123]: [empty] -> wait +0ms

ioredis:cluster getting slot cache from localhost:9123 +4ms

ioredis:redis status[localhost:9123 (ioredis-cluster(refresher))]: [empty] -> wait +0ms

ioredis:redis status[localhost:9123 (ioredis-cluster(refresher))]: wait -> connecting +1ms

ioredis:redis queue command[localhost:9123 (ioredis-cluster(refresher))]: 0 -> cluster([ 'slots' ]) +2ms

ioredis:cluster:subscriber selected a subscriber localhost:9123 +0ms

ioredis:redis status[localhost:9123 (ioredis-cluster(subscriber))]: [empty] -> wait +0ms

ioredis:cluster:subscriber started +0ms

ioredis:connection error: Error: connect ETIMEDOUT

at TLSSocket.<anonymous> (/Users/or/code/device-care-api/node_modules/ioredis/built/redis/index.js:317:37)

at Object.onceWrapper (events.js:482:28)

at TLSSocket.emit (events.js:376:20)

at TLSSocket.emit (domain.js:470:12)

at TLSSocket.Socket._onTimeout (net.js:483:8)

at listOnTimeout (internal/timers.js:555:17)

at processTimers (internal/timers.js:498:7) {

errorno: 'ETIMEDOUT',

code: 'ETIMEDOUT',

syscall: 'connect'

} +0ms

ioredis:redis status[localhost:9123 (ioredis-cluster(refresher))]: connecting -> close +10s

ioredis:connection skip reconnecting because `retryStrategy` is not a function +2ms

ioredis:redis status[localhost:9123 (ioredis-cluster(refresher))]: close -> end +0ms

ioredis screenshot

docker-compose.yml file

version: '3'
services:
  redis-cluster:
    image: bitnami/redis-cluster:6.2
    depends_on:
      - redis-node-0
      - redis-node-1
      - redis-node-2
      - redis-node-3
      - redis-node-4
      - redis-node-5
    environment:
      REDIS_NODES: 'redis-node-0 redis-node-1 redis-node-2 redis-node-3 redis-node-4 redis-node-5'
      ALLOW_EMPTY_PASSWORD: 'yes'
      REDIS_CLUSTER_CREATOR: 'yes'
      REDIS_CLUSTER_REPLICAS: '1'
  redis-node-0:
    image: bitnami/redis-cluster:6.2
    environment:
      REDIS_NODES: 'redis-node-0 redis-node-1 redis-node-2 redis-node-3 redis-node-4 redis-node-5'
      ALLOW_EMPTY_PASSWORD: 'yes'
    ports:
      - 9123:6379
  redis-node-1:
    image: bitnami/redis-cluster:6.2
    environment:
      REDIS_NODES: 'redis-node-0 redis-node-1 redis-node-2 redis-node-3 redis-node-4 redis-node-5'
      ALLOW_EMPTY_PASSWORD: 'yes'
    ports:
      - 9124:6379
  redis-node-2:
    image: bitnami/redis-cluster:6.2
    environment:
      REDIS_NODES: 'redis-node-0 redis-node-1 redis-node-2 redis-node-3 redis-node-4 redis-node-5'
      ALLOW_EMPTY_PASSWORD: 'yes'
    ports:
      - 9125:6379
  redis-node-3:
    image: bitnami/redis-cluster:6.2
    environment:
      REDIS_NODES: 'redis-node-0 redis-node-1 redis-node-2 redis-node-3 redis-node-4 redis-node-5'
      ALLOW_EMPTY_PASSWORD: 'yes'
    ports:
      - 9126:6379
  redis-node-4:
    image: bitnami/redis-cluster:6.2
    environment:
      REDIS_NODES: 'redis-node-0 redis-node-1 redis-node-2 redis-node-3 redis-node-4 redis-node-5'
      ALLOW_EMPTY_PASSWORD: 'yes'
    ports:
      - 9127:6379
  redis-node-5:
    image: bitnami/redis-cluster:6.2
    environment:
      REDIS_NODES: 'redis-node-0 redis-node-1 redis-node-2 redis-node-3 redis-node-4 redis-node-5'
      ALLOW_EMPTY_PASSWORD: 'yes'
    ports:
      - 9128:6379

docker logs

redis-cluster 03:57:58.05

redis-cluster 03:57:58.05 Welcome to the Bitnami redis-cluster container

redis-cluster 03:57:58.06 Subscribe to project updates by watching https://github.com/bitnami/bitnami-docker-redis-cluster

redis-cluster 03:57:58.06 Submit issues and feature requests at https://github.com/bitnami/bitnami-docker-redis-cluster/issues

redis-cluster 03:57:58.06

redis-cluster 03:57:58.06 INFO ==> ** Starting Redis setup **

redis-cluster 03:57:58.10 WARN ==> You set the environment variable ALLOW_EMPTY_PASSWORD=yes. For safety reasons, do not use this flag in a production environment.

redis-cluster 03:57:58.11 INFO ==> Initializing Redis

redis-cluster 03:57:58.14 INFO ==> Setting Redis config file

redis-cluster 03:57:58.27 INFO ==> ** Redis setup finished! **

Storing map with hostnames and IPs

96:C 15 Jul 2021 03:57:58.313 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

96:C 15 Jul 2021 03:57:58.313 # Redis version=6.2.4, bits=64, commit=00000000, modified=0, pid=96, just started

96:C 15 Jul 2021 03:57:58.313 # Configuration loaded

96:M 15 Jul 2021 03:57:58.315 * monotonic clock: POSIX clock_gettime

96:M 15 Jul 2021 03:57:58.315 * No cluster configuration found, I'm 19ba95aa92d380869cb0ee7f75556e97a819d3a7

96:M 15 Jul 2021 03:57:58.318 * Running mode=cluster, port=6379.

96:M 15 Jul 2021 03:57:58.318 # Server initialized

96:M 15 Jul 2021 03:57:58.319 * Ready to accept connections

Waiting 0s before querying node ip addresses

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 172.26.0.5:6379 to 172.26.0.3:6379

Adding replica 172.26.0.2:6379 to 172.26.0.4:6379

Adding replica 172.26.0.6:6379 to 172.26.0.7:6379

M: 2801a20a76db3b15a9ab67c32f447925a3d3d118 172.26.0.3:6379

slots:[0-5460] (5461 slots) master

M: 6e56b93f548bcffc92e2151971f5d1a903730b2b 172.26.0.4:6379

slots:[5461-10922] (5462 slots) master

M: 94a57a9b457e7973d9cf828d8a1fa07139dee7cf 172.26.0.7:6379

slots:[10923-16383] (5461 slots) master

S: 92069a1011705e57552acc9ba05b705d7853a580 172.26.0.6:6379

replicates 94a57a9b457e7973d9cf828d8a1fa07139dee7cf

S: f4c37297fce3bc95731a03611d790bce1fb3015b 172.26.0.5:6379

replicates 2801a20a76db3b15a9ab67c32f447925a3d3d118

S: 77495b84614baae43a742b65da96ecc3efd35568 172.26.0.2:6379

replicates 6e56b93f548bcffc92e2151971f5d1a903730b2b

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

...

>>> Performing Cluster Check (using node 172.26.0.3:6379)

M: 2801a20a76db3b15a9ab67c32f447925a3d3d118 172.26.0.3:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: f4c37297fce3bc95731a03611d790bce1fb3015b 172.26.0.5:6379

slots: (0 slots) slave

replicates 2801a20a76db3b15a9ab67c32f447925a3d3d118

M: 94a57a9b457e7973d9cf828d8a1fa07139dee7cf 172.26.0.7:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 6e56b93f548bcffc92e2151971f5d1a903730b2b 172.26.0.4:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 92069a1011705e57552acc9ba05b705d7853a580 172.26.0.6:6379

slots: (0 slots) slave

replicates 94a57a9b457e7973d9cf828d8a1fa07139dee7cf

S: 77495b84614baae43a742b65da96ecc3efd35568 172.26.0.2:6379

slots: (0 slots) slave

replicates 6e56b93f548bcffc92e2151971f5d1a903730b2b

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

Cluster correctly created

redis-server "${ARGS[@]}"
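In this Bitnami setup each node's port 6379 is published on a different host port (9123-9128), while the cluster check above shows the nodes announcing `172.26.0.x:6379`. A `natMap` for this layout would need one entry per node, and the logs do not show which container holds which IP, so no mapping is guessed here. As a first step, the sketch below only extracts the announced addresses from `redis-cli --cluster` output so they can be compared with what the client can actually reach. `parseAnnouncedNodes` is a hypothetical helper, and the line format it expects is inferred from the logs in this thread.

```typescript
type AnnouncedNode = { role: 'master' | 'replica'; address: string };

// Hypothetical helper: pulls "ip:port" out of node lines such as
// "M: <40-hex node id> 172.26.0.3:6379" or "S: <40-hex node id> 172.26.0.6:6379".
function parseAnnouncedNodes(log: string): AnnouncedNode[] {
  const nodeLine = /^\s*([MS]): [0-9a-f]{40} (\S+:\d+)\s*$/;
  const seen = new Set<string>();
  const nodes: AnnouncedNode[] = [];
  for (const line of log.split('\n')) {
    const match = nodeLine.exec(line);
    if (match === null) continue;
    const address = match[2];
    // The allocation and check phases both list every node; keep the first mention only.
    if (seen.has(address)) continue;
    seen.add(address);
    nodes.push({ role: match[1] === 'M' ? 'master' : 'replica', address });
  }
  return nodes;
}
```

Feeding it the `docker logs` output of the cluster-creator container should give the six addresses that ioredis will be told to connect to after its first `CLUSTER SLOTS` call.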

I have the exact same issue.

Any update? I have the same issue with redis 3.2 and ioredis 4.27.9

My fault, I mixed codis and cluster.