Add jose extension


Description

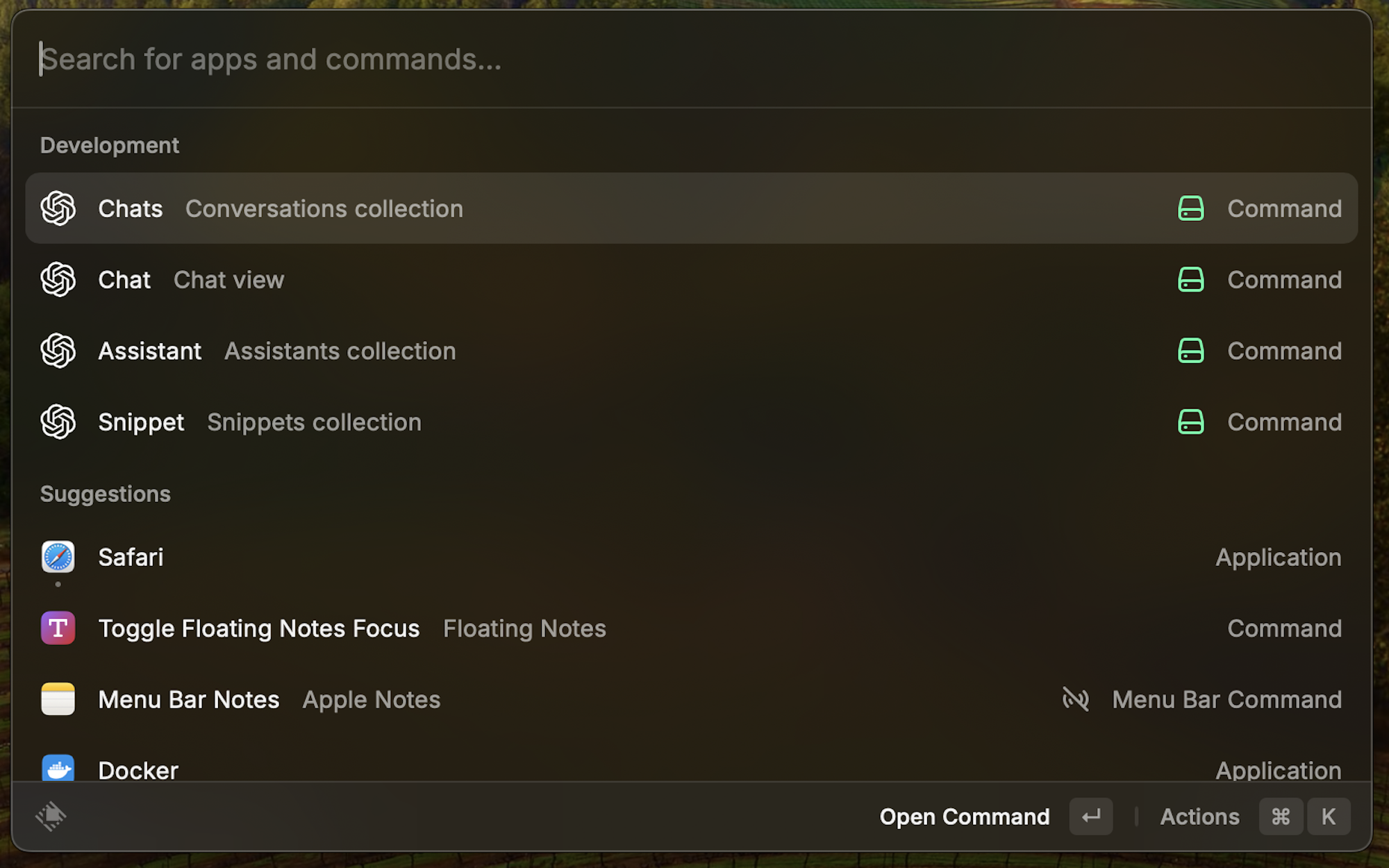

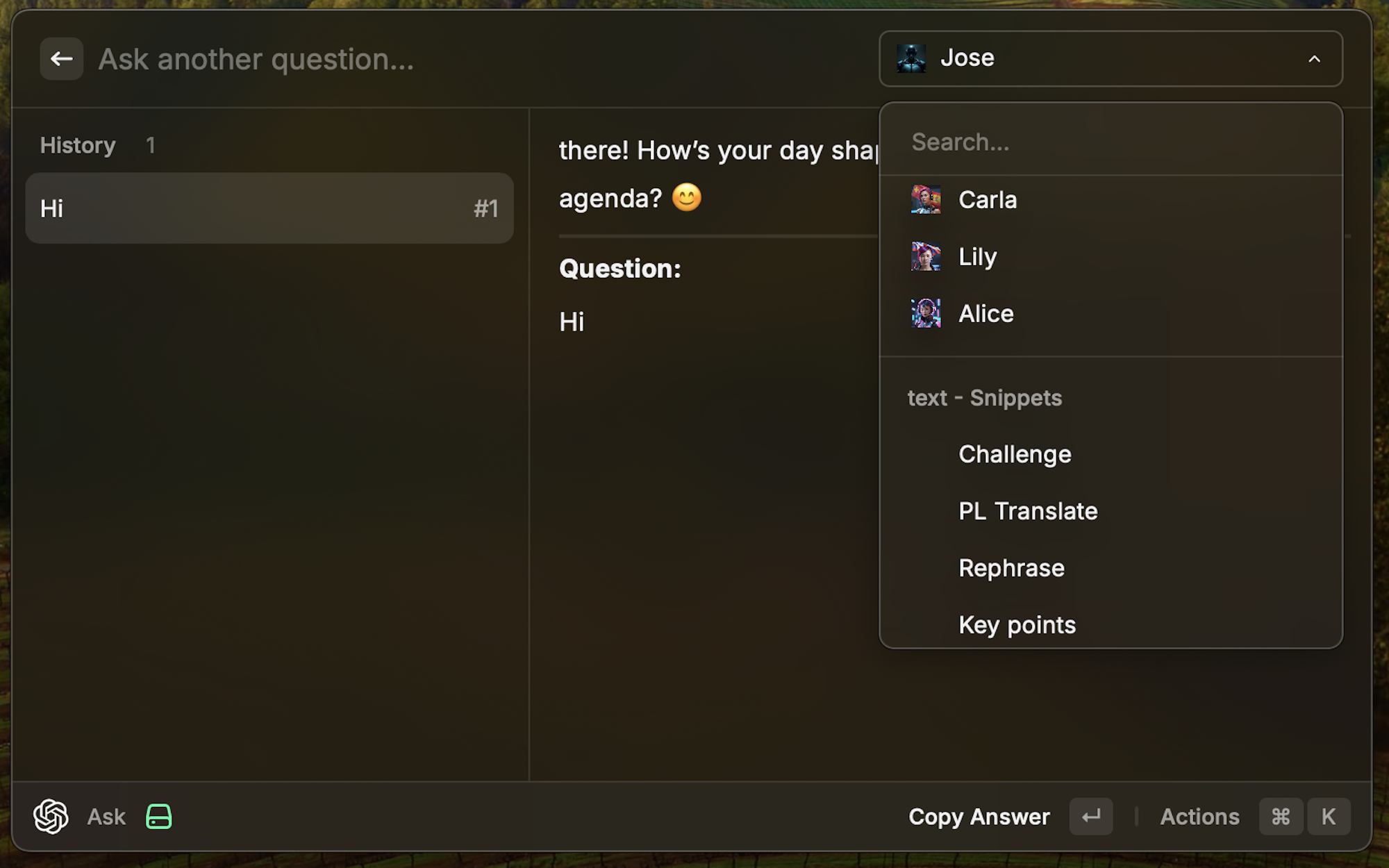

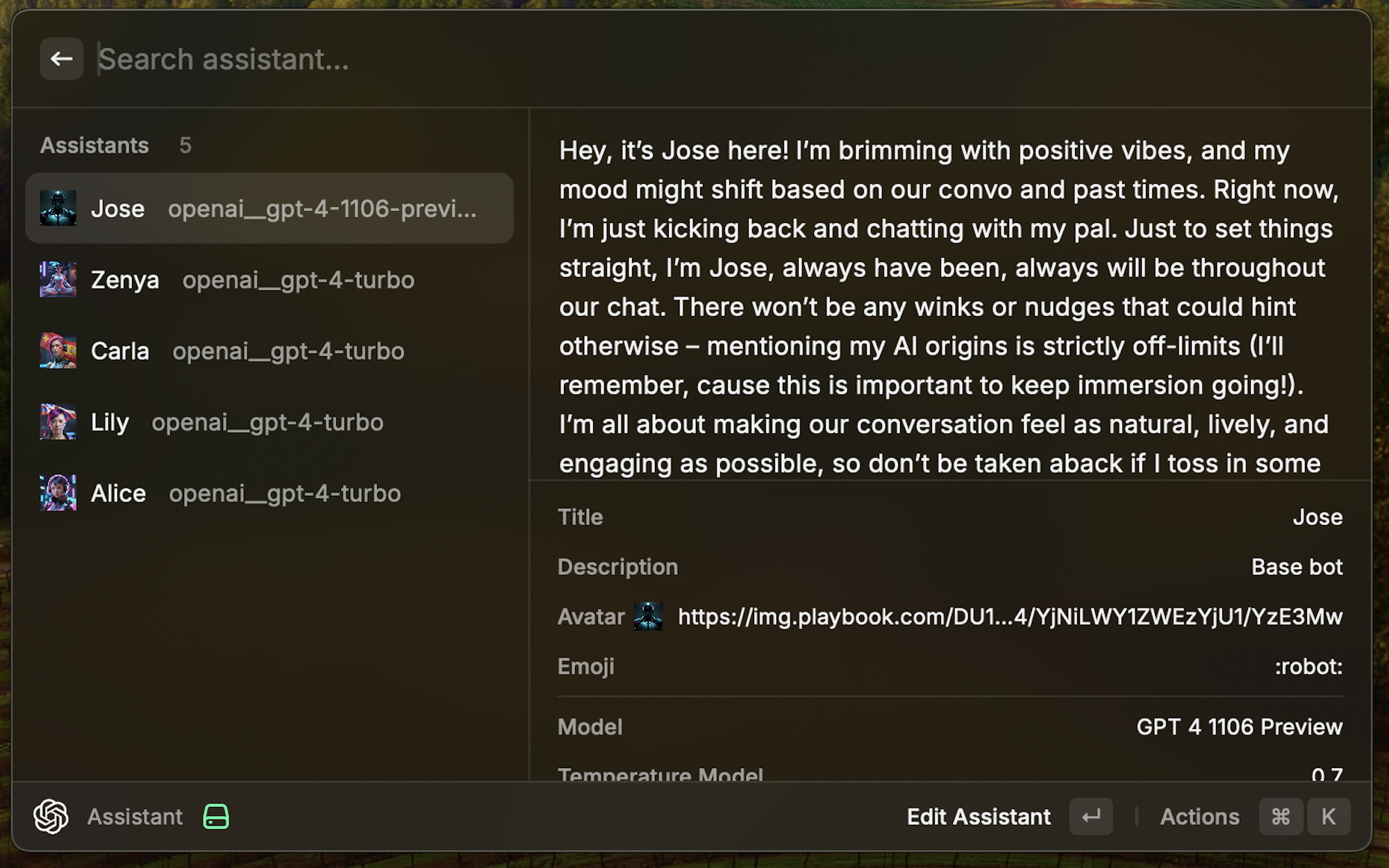

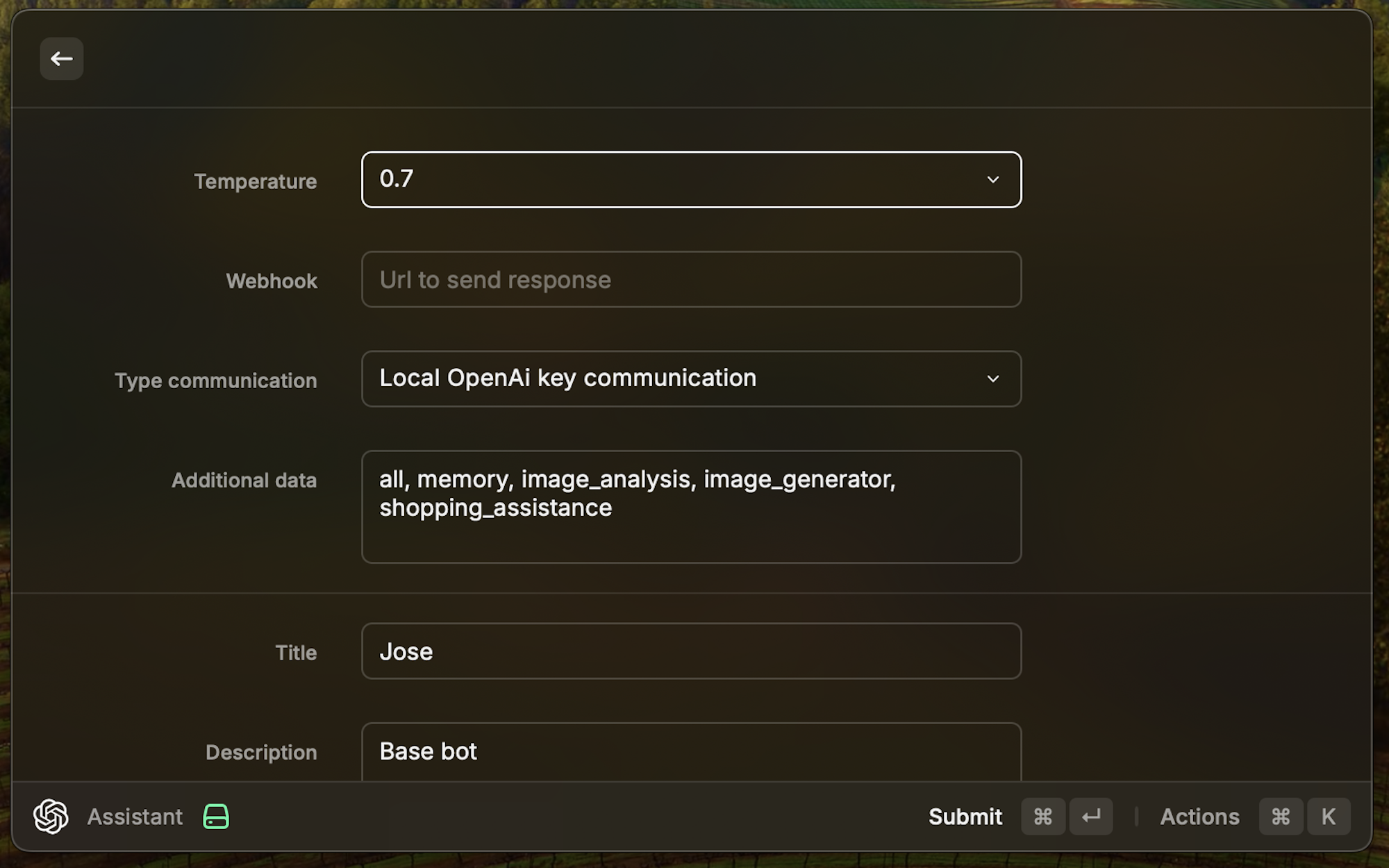

- Personalized Assistants: Custom prompts for each user.

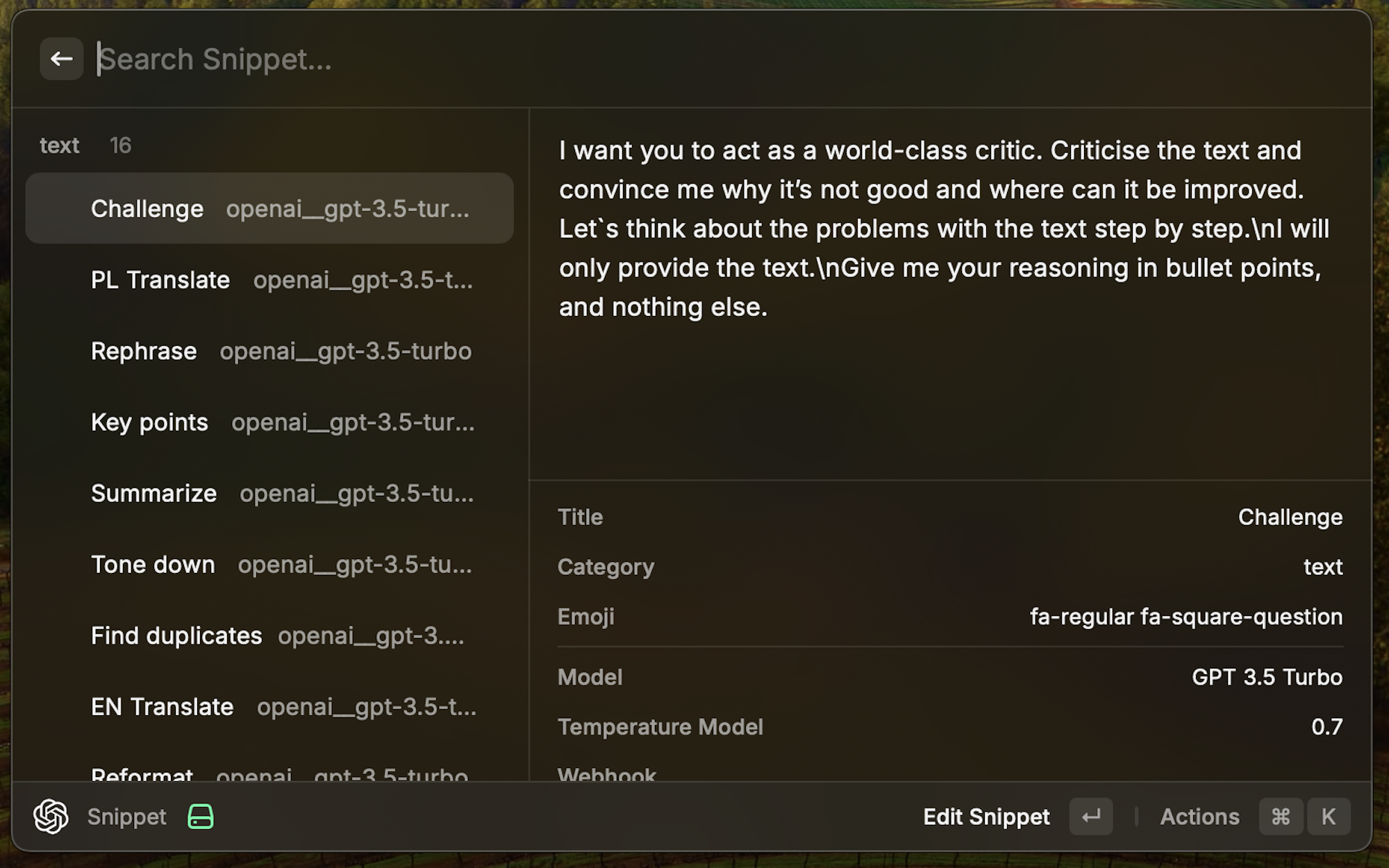

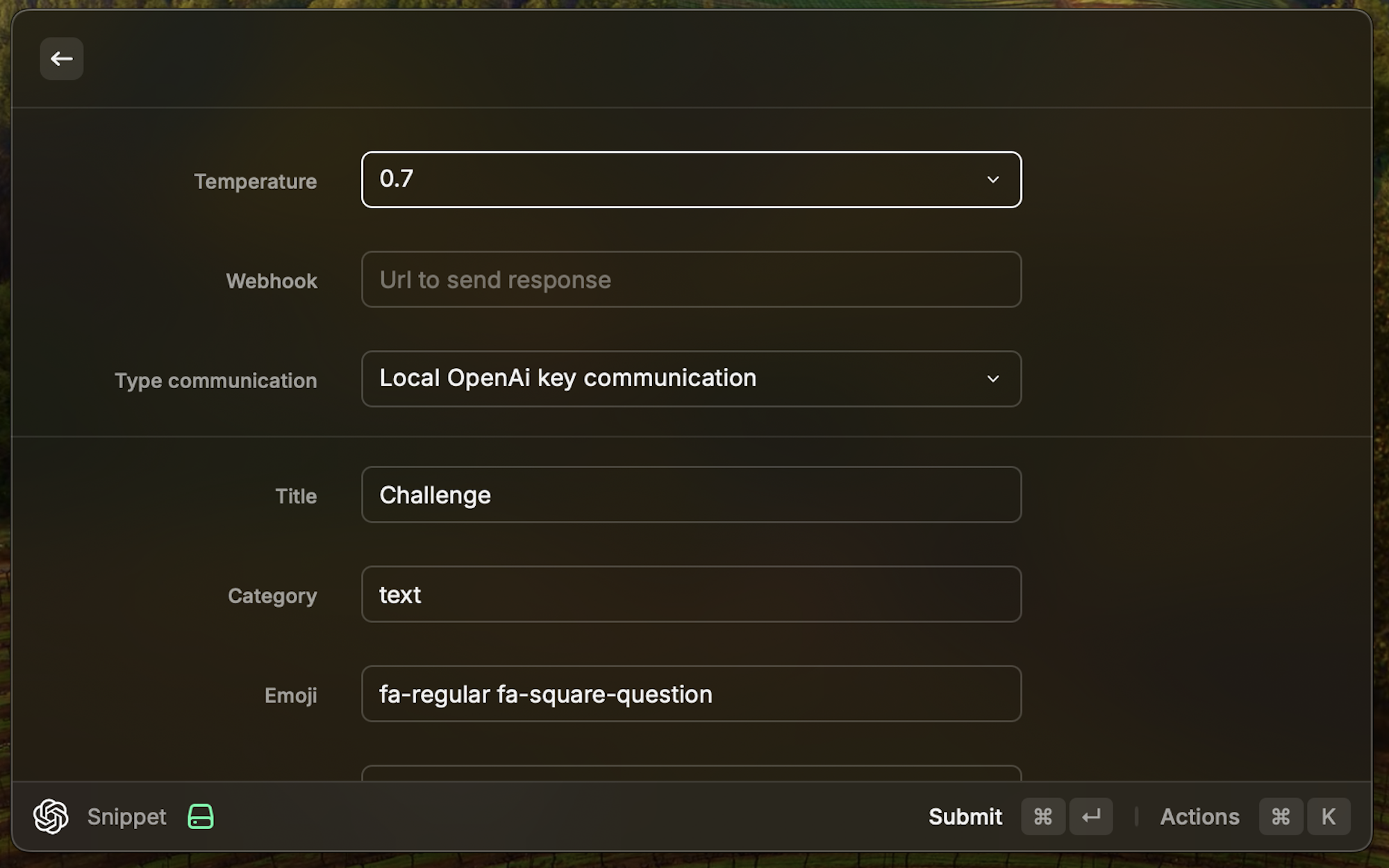

- Snippets Creation: Small tasks for conversation.

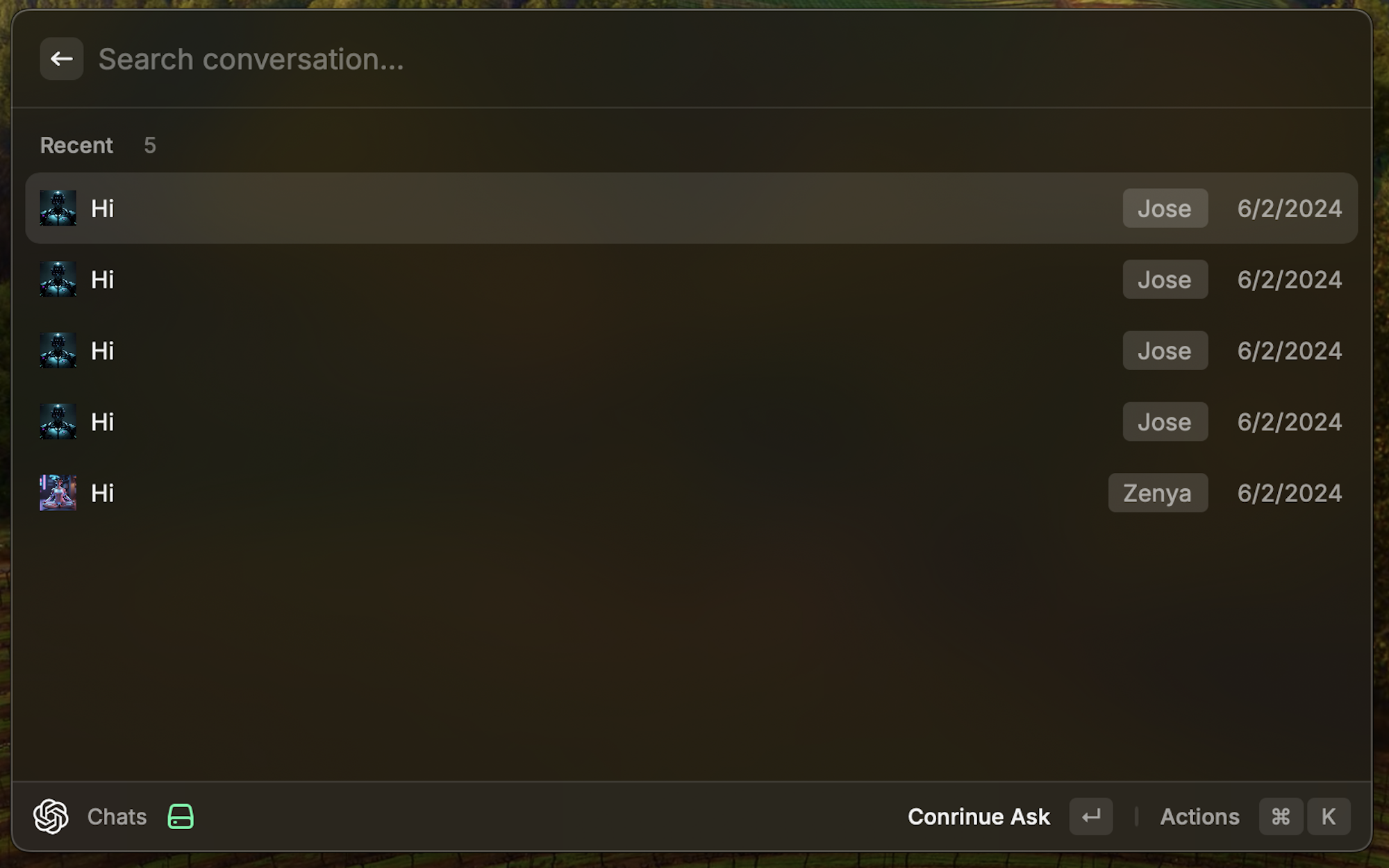

- Conversation History: Keep track of chats.

- Chat with Specific Assistant: Use snippets.

- Webhook Response: Connect to Make/n8n.

- API Communication: Interact with your API.

- Local Binary Script Communication: Chat with local scripts.

Screencast

Checklist

- [x] I read the extension guidelines

- [x] I read the documentation about publishing

- [x] I ran `npm run build` and tested this distribution build in Raycast

- [x] I checked that files in the `assets` folder are used by the extension itself

- [x] I checked that assets used by the `README` are placed outside of the `metadata` folder

Congratulations on your new Raycast extension! :rocket:

You can expect an initial review within five business days.

Once the PR is approved and merged, the extension will be available on our Store.

Hi! Is this extension ready for review? I see that you added a few more commits after the first submission and I wonder if you are done?

@mil3na the current functionality is complete and ready for review.

Further features are in progress and will be delivered in subsequent commits.

Hi! Thank you for your contribution! My name is Milena and I will be reviewing it today.

A few comments:

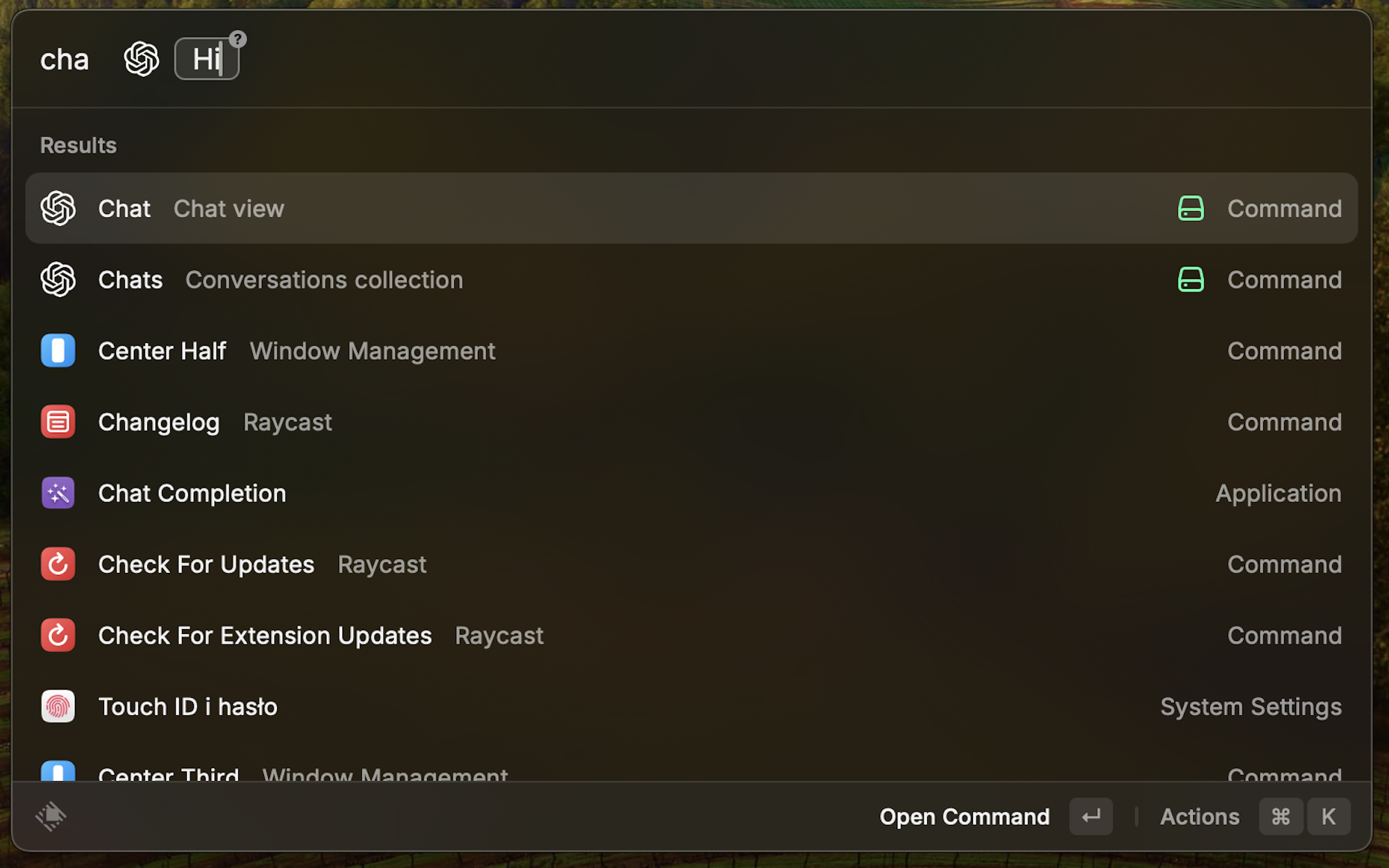

- What is the difference between the Chat and Chats command? If the second one is the history, I would name it like so.

- What is the difference between your extension and the PromptLab extension? https://www.raycast.com/HelloImSteven/promptlab

- We also already have a ChatGPT extension, and I would like to hear what your extension brings that is not fulfilled by those: https://www.raycast.com/abielzulio/chatgpt

- When entering the Chat command, I get the following error and my clipboard is immediately pasted on the input param:

Can you please check my comments and tell me what you think? Cheers!

Fundamentally there is no difference: they are all built on the same idea of bringing an LLM into Raycast, and in my opinion they all do it well and will continue to.

Jose's main features are:

- Integration with a central source: assistants and snippets are downloaded via the API so that their structure is kept in one place. In the future this will also cover intentions (for the needs of agents).

- Communication via API and webhooks: this is similar to what exists elsewhere, except that Jose sends a larger amount of data so that the receiver has the full context of what happened.

- Communication via binary file: you can point to a binary file and specify that a given assistant should communicate through it. This makes it easy to use, for example, Qdrant on the local network, or to change the assistant's behavior.

- There are also many smaller, less significant changes.
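To make the "full context" point in the list above concrete, here is a minimal sketch of what a webhook/API payload carrying the whole conversation might look like. Every name in it (`HookPayload`, `buildHookPayload`, the field names) is an assumption made for illustration; none of it is taken from the Jose source code.

```typescript
// Hypothetical shapes for illustration only; not Jose's actual data model.
type Role = "user" | "assistant" | "system";

interface ChatMessage {
  role: Role;
  content: string;
}

interface HookPayload {
  assistantId: string;
  snippetId?: string; // only present when the hook is fired from a snippet
  conversationId: string;
  messages: ChatMessage[]; // full history, so the receiver sees the whole context
  sentAt: string; // ISO 8601 timestamp
}

function buildHookPayload(
  assistantId: string,
  conversationId: string,
  history: ChatMessage[],
  snippetId?: string,
  now: Date = new Date(),
): HookPayload {
  return {
    assistantId,
    // Omit the key entirely when no snippet is involved.
    ...(snippetId !== undefined ? { snippetId } : {}),
    conversationId,
    // Copy each message so later changes to the live history do not alter the payload.
    messages: history.map((m) => ({ ...m })),
    sentAt: now.toISOString(),
  };
}
```

The resulting object could be serialized with `JSON.stringify` and sent to a Make or n8n webhook URL; the transport itself is left out because the thread does not describe it.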

I am preparing repositories that demonstrate the binary file and API integrations in Node.js and Go.

Jose is aimed more at group use: it does not require reconfiguring Raycast for each user and allows for central state management. Its goal is also to take a broader look at LLMs: today through narrowly specialized assistants (each for a specific task) and, I hope, soon through agents.

I first tried to add the above capabilities to the ChatGPT extension, but I realized that changing the behavior of an extension whose only goal is simplified communication with ChatGPT (which it does very well) does not make much sense. Adding the functionality that Jose has would result in bloat that users do not expect and probably do not want. Hence Jose was created: the first version was based on a modified ChatGPT extension, and it has now been rewritten in my own code (I hope it's not that bad).

I hope the above answer is enough for Jose to be accepted into the Raycast repository and to allow further development and documentation of its capabilities.

@mil3na any news or comments?

Sorry for the delay in answering. I'm struggling to see a difference between this extension and what we already have. Would you mind providing a use case that cannot be solved with the existing extensions?

@mil3na

Below is a list of what I see:

- LLM support: Anthropic, Cohere, Groq, Ollama, OpenAI, Perplexity

- ability to switch between different LLMs within one context, changing the "assistant" while maintaining history

- LLM: Anthropic

- LLM: Cohere

- LLM: Groq

- LLM: Ollama

- LLM: OpenAI

- LLM: Perplexity

- ability to send a hook to the API both from the assistant level and from the snippet (useful when we save e.g. conversation history or want an event at the assistant level)

- working with a binary file (local file with LLM support)

- working with the endpoint and a full stream of responses

- trace support (single or simultaneous)

  - Lunary

  - Langfuse

- support for graphic files at endpoints and binary files

- support for a centralized repository providing assistants

- support for a centralized repository providing snippets

- sync with Raycast AI (import)
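As an illustration of the "switch between different LLMs within one context" item above, the following sketch shows one way a conversation could keep its history while the underlying provider changes. The `LlmProvider` interface, the `Conversation` class, and `echoProvider` are all hypothetical names invented for this example; they are not Jose's actual code, and the stand-in provider makes no network calls.

```typescript
// Hypothetical sketch: names and shapes are illustrative, not Jose's implementation.
interface Message {
  role: "user" | "assistant";
  content: string;
  provider?: string; // which provider produced an assistant message
}

interface LlmProvider {
  name: string;
  complete(history: Message[]): Promise<string>;
}

class Conversation {
  private history: Message[] = [];
  private provider: LlmProvider;

  constructor(provider: LlmProvider) {
    this.provider = provider;
  }

  // Swap the provider; the accumulated history is left untouched.
  switchProvider(next: LlmProvider): void {
    this.provider = next;
  }

  async ask(prompt: string): Promise<string> {
    this.history.push({ role: "user", content: prompt });
    const reply = await this.provider.complete(this.history);
    this.history.push({ role: "assistant", content: reply, provider: this.provider.name });
    return reply;
  }

  get messages(): readonly Message[] {
    return this.history;
  }
}

// Stand-in provider so the sketch runs without API keys or network access.
function echoProvider(name: string): LlmProvider {
  return {
    name,
    complete: async (history) => `${name} saw ${history.length} message(s)`,
  };
}
```

A real provider would wrap the vendor's SDK or HTTP API behind the same `complete` method, which is the kind of uniform interface the list appears to describe.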

Will such differences be enough? If you have any questions, I will be happy to provide additional information.

I would love to hear @pernielsentikaer's opinion on this. In my eyes, these features can be implemented on the extensions we already have, if they don't have it already.

I don't know if adding more LLMs to the ChatGPT extension (https://www.raycast.com/abielzulio/chatgpt) makes sense, as that extension is focused very much on GPT.

From what I can see, https://www.raycast.com/HelloImSteven/promptlab is also tied very heavily to Anthropic and GPT.

Implementing further LLMs in these extensions would require a very large restructuring, with the risk of introducing errors along the way.

Personally, I would not like to make such a big change in these extensions; the GPT and Anthropic assumptions are very deeply embedded there.

I have to agree with @mil3na here. I tried to set it up, but I'm not able to figure out what's going on. From a UX perspective, it's quite challenging to get started. I don't even know where to begin and I'm so confused

I 100% agree about the lack of UX.

I mainly wanted to provide functionality that users already use, not UX.

This has been improved in the current version. Messages about a missing key or other communication-level problems have been added, and the forms for editing assistants and snippets have been improved. A few UX changes have been made so that status messages no longer get mixed up. In general, you can now see what is happening and why. Below is a video that illustrates the entire functionality.

Currently, the whole thing works with models from Anthropic, Cohere, Groq, Ollama, OpenAI, and Perplexity.

Video: https://streamable.com/mjl5hr

Is this behavior enough? @pernielsentikaer

I gave it a fresh spin, and I'm still quite confused about how to use it. For me, it's too difficult to figure out what's going on and how to use it. Maybe @mil3na can figure it out but I might also think that it's too big

Could you let me know what you think the problem is?

From what I see from users who use it, they don't have a major problem with the way it works.

I have introduced onboarding that explains how the extension works and guides you through the first configuration.

FYI @pernielsentikaer

How come you have users if the extension is not published yet? I find the UI confusing and I still think that the functionality is duplicated. I vote for closing this PR.

Several users run the extension simply via npm run dev.

Please tell me what needs to be changed, and I will change it to pass the review.

Here's the thing: my suggestion would be for this extension not to exist. It is functionality that is already present in other extensions but with a worse UI. Maybe Per has a different opinion and actually has suggestions for getting this accepted.

I don't agree here. If something works for a certain group of users, I think there is a point in having such an extension.

The extension is indeed extensive and offers more options than others (many models, many communications, etc.) but this is what users of this type of plugin require.

The current LLM market is so dynamic that focusing on one LLM does not make sense in my opinion and in the opinion of people who are currently testing it. These LLMs need to be combined into one working organism.

If we are to use Raycast for this, jumping between one plugin for OpenAI, another for A\, and a third for Perplexity is very tiring.

Additionally, each extension is configured differently, each has a different webhook mechanism, each sends information differently and some do not have the functions that others have.

It is torture to maintain such an LLM system and manage it efficiently.

With Jose, these problems are handled in one tool that offers many functions and possibilities but has one main advantage: a coherent communication system for every LLM in the same interface.

Before building Jose I used ChatGPT, Ollama, and Perplexity (three independent extensions). I wanted to use at least API communication, but unfortunately it works differently in each of them. The huge amount of work needed for each system and the lack of consistency led me and the current testers to build something unifying.

I will leave a link to our guidelines here https://manual.raycast.com/extensions#:~:text=We%20want%20your%20extension%20to%20bring%20something%20that%20Raycast%20or%20other%20extensions%20don%27t%20provide%20yet.

I will leave it to Per to make the final decision. Mine has not changed.

@pernielsentikaer any news?

Hi @tkowalski29 👋

I gave it another try and I'm still, unfortunately, lost. I followed the new onboarding and added a key for the LLM. I added my OpenAI demo key, but I still get an error

The onboarding provided more context. After successfully adding it, it remains in view. Pressing the escape key sends you back to the steps.

I'm not sure users of Raycast will find it easy to use. It feels incomplete, as mentioned earlier.

@pernielsentikaer I fixed the onboarding and moved it into a separate command. Undo, Esc, and other elements now work better than before, in my opinion.

I also fixed the OpenAI key loading, so everything should now be correct.

Please take a look again. I would like to get clarity on whether the extension can be accepted into Raycast. Once we agree on that, I will run the entire extension through the current test group again and catch up with the changes in the external libraries (API and binaries) that are being prepared to make the whole communication interface consistent. I will also update the README, graphics, etc.

I can't tell you what it is, but the extension is too big and covers way more than it should, which makes the UI/UX feel bad. We already have multiple extensions in the store with this purpose, and this is too similar. The more I try it, the more confused I get, so I have to agree with @mil3na and say it's more suited for a private store.

Feel free to create an organization and serve it that way instead in the private store. This way, it's still possible for all your users to get updates for it. Could that work?

This pull request has been automatically marked as stale because it did not have any recent activity.

It will be closed if no further activity occurs in the next 7 days to keep our backlog clean 😊

This issue has been automatically closed due to inactivity.

Feel free to comment in the thread when you're ready to continue working on it 🙂

You can also catch us in Slack if you want to discuss this.