[Serve] [Docs] Update metrics that Ray Serve offers in documentation

Description

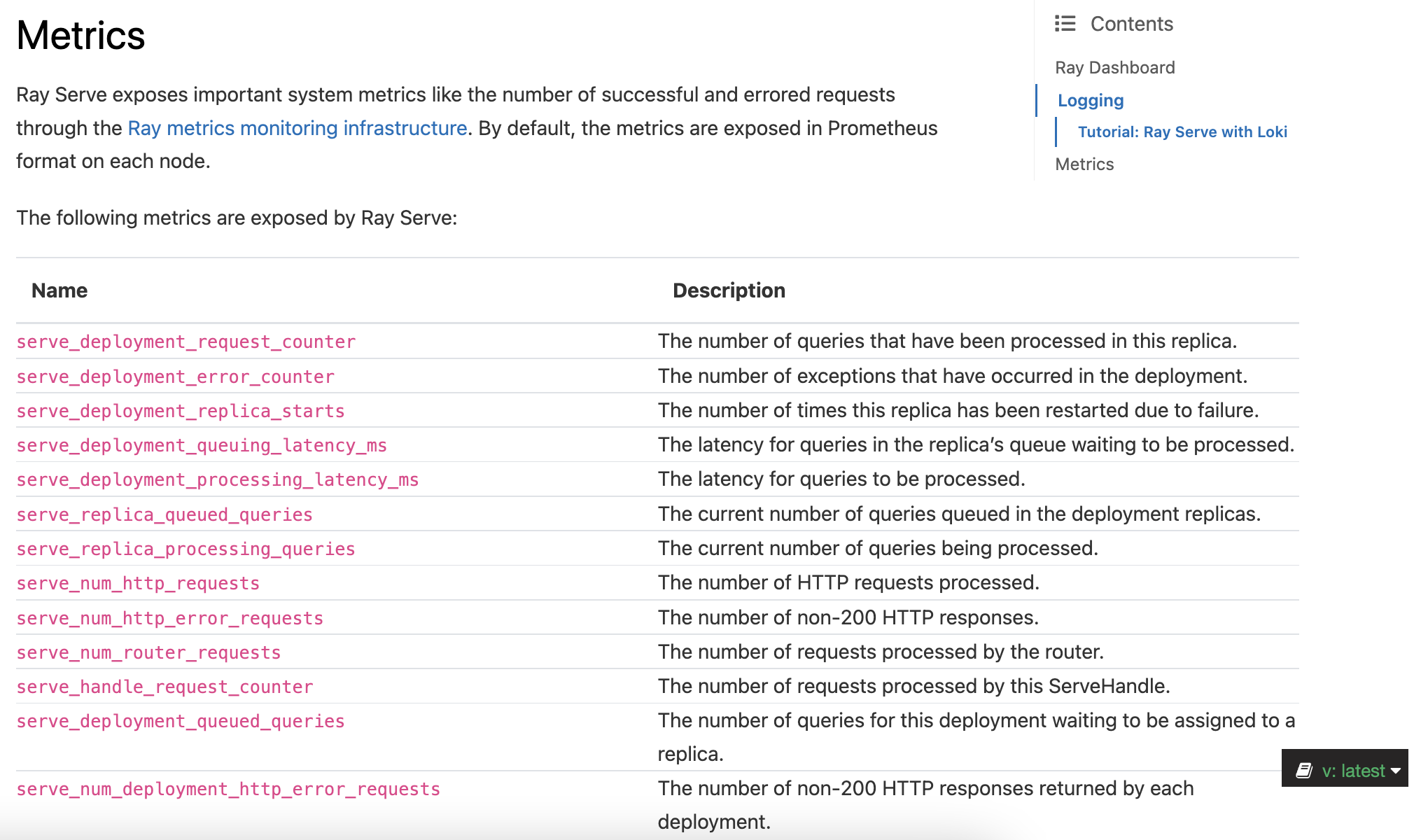

Ray Serve surfaces system metrics for debugging. The metrics documentation is outdated and contains metrics that no longer exist.

For example, the metrics serve_backend_queuing_latency_ms and serve_replica_queued_queries seem to have been removed in #15065. However, they're still documented.

Link

Hi @shrekris-anyscale, @edoakes,

I don't believe this is fully fixed, or something might be wrong somewhere. The PR only removed the two example metrics (`serve_backend_queuing_latency_ms` and `serve_replica_queued_queries`) that I mentioned in Slack (on which this issue was based), but it did not clean up all the metrics that I am not able to see on the worker nodes, such as `serve_num_deployment_http_error_requests` or `serve_deployment_queued_queries`.

The documentation says "By default, the metrics are exposed in Prometheus format **on each node**." at https://github.com/ray-project/ray/pull/27777/files#diff-2485229d5cf21684fbe379b5d27ce58010dd5dbb5fa88aa5f239fba1bb07c18fR212

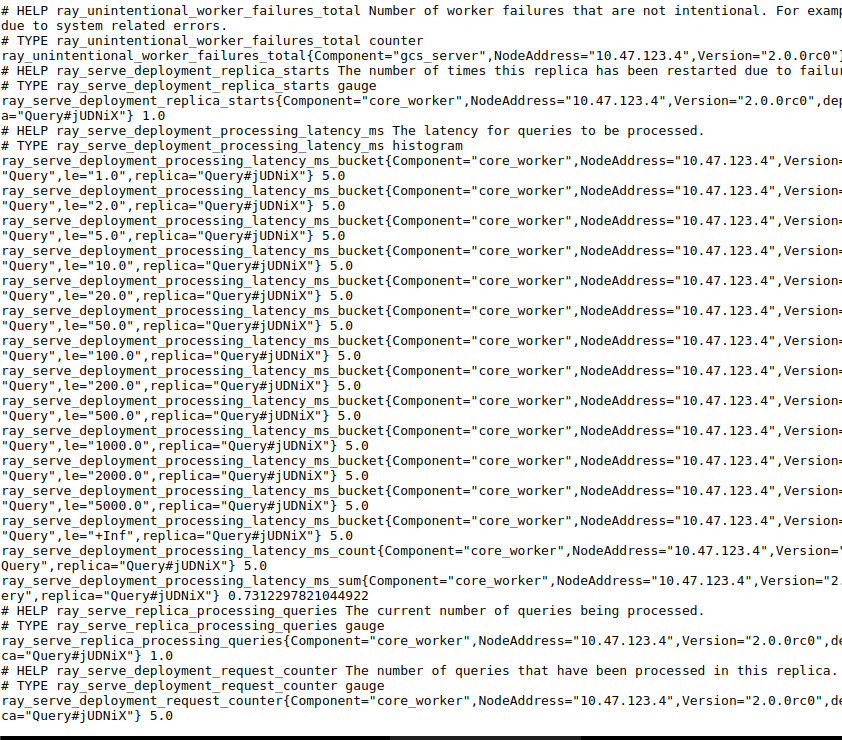

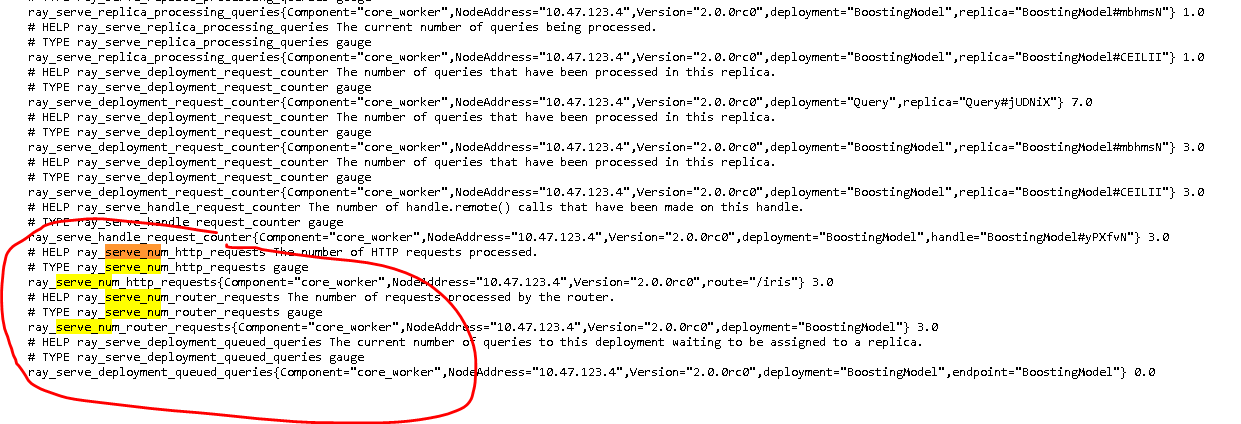

But based on my tests on v2.0.0dev0, several of these metrics are not available on each node. See below a screenshot taken from the worker's metric endpoint showing the limited set that is actually available on the worker node:

Where are the rest of the metrics exposed, then, if not on each node? The documentation should probably address that.

Maybe the issue is that some of these metrics are only available for the ReplicaSet, while others are only available for the Replica?

The metric exposition is lazy. This means that a metric will show up in the endpoint only once its counter/gauge has actually been updated on that node. Can you check the placement of your model's ServeReplica on each node?

Would you be interested in helping us craft a reproducible example so we can debug this?
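To make the "lazy exposition" point concrete, here is a toy sketch. It is not Ray's implementation; the `LazyRegistry` class and the metric names `requests_total` and `errors_total` are hypothetical and exist only to illustrate why a declared metric can be absent from an endpoint until it is first updated.

```python
class LazyRegistry:
    """Toy registry (hypothetical): a metric is exposed only after its first update."""

    def __init__(self, declared):
        # Names the code *could* emit. Declaring a name does not expose it.
        self.declared = set(declared)
        self.values = {}

    def inc(self, name, amount=1.0):
        if name not in self.declared:
            raise KeyError(f"undeclared metric: {name}")
        self.values[name] = self.values.get(name, 0.0) + amount

    def expose(self):
        # Only metrics that were updated at least once appear in the output.
        return "\n".join(
            f"{name} {value}" for name, value in sorted(self.values.items())
        )


registry = LazyRegistry(["requests_total", "errors_total"])
registry.inc("requests_total")
print(registry.expose())  # errors_total is declared but never updated, so it is absent
```

Under this model, a metric that is only updated on one code path would never appear on a node where that path is not exercised.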

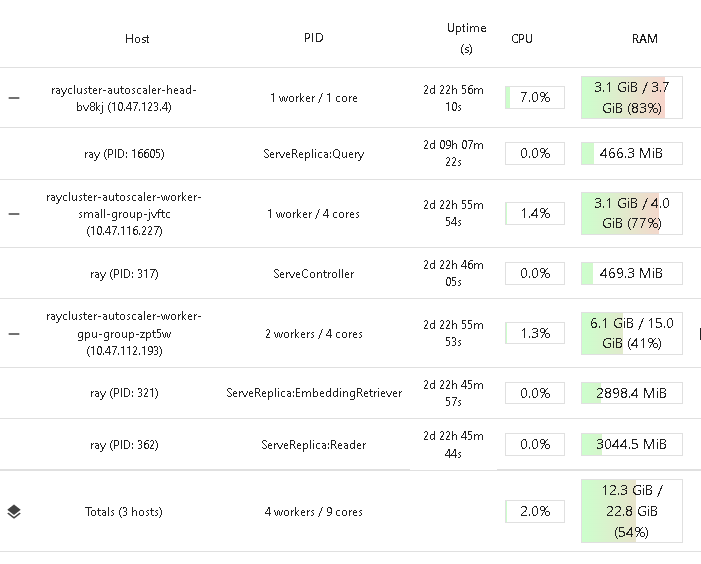

This is the node placement of my test cluster. I have connected to all nodes manually and verified that none of them contain the missing metrics.

It is hard to create a reproducible example when I have never managed to see this work the way it is supposed to, so I can't tell how what I am doing differs from what I should be doing. These deployments were deployed via Haystack using the Ray Serve autoscaling option. The autoscaling option is the only thing I can think of that might be causing this: maybe it does not use ReplicaSet, and the missing metrics seem to be created by the ReplicaSet.

Also, I am running Ray Serve detached, although I would think that is unlikely to cause the issue.

Oh, and Ray Serve is started with `http_options={"location": "NoServer"}`.

Okay, so here is a small reproducible example. As a matter of fact, I couldn't get those metrics to show up at all.

- Deploy Ray cluster v2.0.0rc0 (Py39) (in our case it is running on Kubernetes)

- Install matching Ray Python client

- Run the following code:

```python
import requests

import ray
from ray import serve

ray.init(address="ray://localhost:10001")
serve.start()


@serve.deployment()
class BoostingModel:
    def __init__(self):
        pass

    async def __call__(self, request):
        payload = request["vector"]
        print(f"Received http request with data {payload}")
        human_name = "whatev"
        return {"result": human_name}


BoostingModel.deploy()

sample_request_input = {"vector": [1.2, 1.0, 1.1, 0.9]}

# query directly with Python handlers
reader_handle = serve.get_deployment('BoostingModel').get_handle()
resp = ray.get(reader_handle.remote(request=sample_request_input))
print(resp)
```

- This is what it might look like once deployed:

- Port-forward to port 8080 of all workers and the head node to check the metrics endpoint. You will find that none of them contains metrics like `serve_num_deployment_http_error_requests` or `serve_deployment_queued_queries`; you will only find the following:

```text
# HELP ray_unintentional_worker_failures_total Number of worker failures that are not intentional. For example, worker failures due to system related errors.
# TYPE ray_unintentional_worker_failures_total counter
ray_unintentional_worker_failures_total{Component="gcs_server",NodeAddress="10.47.123.4",Version="2.0.0rc0"} 246.0
# HELP ray_serve_deployment_replica_starts The number of times this replica has been restarted due to failure.
# TYPE ray_serve_deployment_replica_starts gauge
ray_serve_deployment_replica_starts{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",replica="Query#jUDNiX"} 1.0
# HELP ray_serve_deployment_replica_starts The number of times this replica has been restarted due to failure.
# TYPE ray_serve_deployment_replica_starts gauge
ray_serve_deployment_replica_starts{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",replica="Query#XaWcuo"} 1.0
# HELP ray_serve_deployment_replica_starts The number of times this replica has been restarted due to failure.
# TYPE ray_serve_deployment_replica_starts gauge
ray_serve_deployment_replica_starts{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",replica="BoostingModel#mbhmsN"} 1.0
# HELP ray_serve_deployment_replica_starts The number of times this replica has been restarted due to failure.
# TYPE ray_serve_deployment_replica_starts gauge
ray_serve_deployment_replica_starts{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",replica="BoostingModel#CEILII"} 1.0
# HELP ray_serve_deployment_processing_latency_ms The latency for queries to be processed.
# TYPE ray_serve_deployment_processing_latency_ms histogram
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",le="1.0",replica="Query#jUDNiX"} 7.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",le="2.0",replica="Query#jUDNiX"} 7.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",le="5.0",replica="Query#jUDNiX"} 7.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",le="10.0",replica="Query#jUDNiX"} 7.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",le="20.0",replica="Query#jUDNiX"} 7.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",le="50.0",replica="Query#jUDNiX"} 7.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",le="100.0",replica="Query#jUDNiX"} 7.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",le="200.0",replica="Query#jUDNiX"} 7.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",le="500.0",replica="Query#jUDNiX"} 7.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",le="1000.0",replica="Query#jUDNiX"} 7.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",le="2000.0",replica="Query#jUDNiX"} 7.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",le="5000.0",replica="Query#jUDNiX"} 7.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",le="+Inf",replica="Query#jUDNiX"} 7.0
ray_serve_deployment_processing_latency_ms_count{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",replica="Query#jUDNiX"} 7.0
ray_serve_deployment_processing_latency_ms_sum{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",replica="Query#jUDNiX"} 1.0204315185546875
# HELP ray_serve_deployment_processing_latency_ms The latency for queries to be processed.
# TYPE ray_serve_deployment_processing_latency_ms histogram
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="1.0",replica="BoostingModel#mbhmsN"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="2.0",replica="BoostingModel#mbhmsN"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="5.0",replica="BoostingModel#mbhmsN"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="10.0",replica="BoostingModel#mbhmsN"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="20.0",replica="BoostingModel#mbhmsN"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="50.0",replica="BoostingModel#mbhmsN"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="100.0",replica="BoostingModel#mbhmsN"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="200.0",replica="BoostingModel#mbhmsN"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="500.0",replica="BoostingModel#mbhmsN"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="1000.0",replica="BoostingModel#mbhmsN"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="2000.0",replica="BoostingModel#mbhmsN"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="5000.0",replica="BoostingModel#mbhmsN"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="+Inf",replica="BoostingModel#mbhmsN"} 3.0
ray_serve_deployment_processing_latency_ms_count{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",replica="BoostingModel#mbhmsN"} 3.0
ray_serve_deployment_processing_latency_ms_sum{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",replica="BoostingModel#mbhmsN"} 0.36978721618652344
# HELP ray_serve_deployment_processing_latency_ms The latency for queries to be processed.
# TYPE ray_serve_deployment_processing_latency_ms histogram
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="1.0",replica="BoostingModel#CEILII"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="2.0",replica="BoostingModel#CEILII"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="5.0",replica="BoostingModel#CEILII"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="10.0",replica="BoostingModel#CEILII"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="20.0",replica="BoostingModel#CEILII"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="50.0",replica="BoostingModel#CEILII"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="100.0",replica="BoostingModel#CEILII"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="200.0",replica="BoostingModel#CEILII"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="500.0",replica="BoostingModel#CEILII"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="1000.0",replica="BoostingModel#CEILII"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="2000.0",replica="BoostingModel#CEILII"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="5000.0",replica="BoostingModel#CEILII"} 3.0
ray_serve_deployment_processing_latency_ms_bucket{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",le="+Inf",replica="BoostingModel#CEILII"} 3.0
ray_serve_deployment_processing_latency_ms_count{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",replica="BoostingModel#CEILII"} 3.0
ray_serve_deployment_processing_latency_ms_sum{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",replica="BoostingModel#CEILII"} 0.37789344787597656
# HELP ray_serve_replica_processing_queries The current number of queries being processed.
# TYPE ray_serve_replica_processing_queries gauge
ray_serve_replica_processing_queries{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",replica="Query#jUDNiX"} 1.0
# HELP ray_serve_replica_processing_queries The current number of queries being processed.
# TYPE ray_serve_replica_processing_queries gauge
ray_serve_replica_processing_queries{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",replica="BoostingModel#mbhmsN"} 1.0
# HELP ray_serve_replica_processing_queries The current number of queries being processed.
# TYPE ray_serve_replica_processing_queries gauge
ray_serve_replica_processing_queries{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",replica="BoostingModel#CEILII"} 1.0
# HELP ray_serve_deployment_request_counter The number of queries that have been processed in this replica.
# TYPE ray_serve_deployment_request_counter gauge
ray_serve_deployment_request_counter{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="Query",replica="Query#jUDNiX"} 7.0
# HELP ray_serve_deployment_request_counter The number of queries that have been processed in this replica.
# TYPE ray_serve_deployment_request_counter gauge
ray_serve_deployment_request_counter{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",replica="BoostingModel#mbhmsN"} 3.0
# HELP ray_serve_deployment_request_counter The number of queries that have been processed in this replica.
# TYPE ray_serve_deployment_request_counter gauge
ray_serve_deployment_request_counter{Component="core_worker",NodeAddress="10.47.123.4",Version="2.0.0rc0",deployment="BoostingModel",replica="BoostingModel#CEILII"} 3.0
```
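Checking a dump like the one above by eye is error-prone, so here is a rough sketch of how the comparison could be scripted. It assumes the endpoint text has already been saved to a string (the `SAMPLE` below is a shortened excerpt of the output above), and it only reads the `# TYPE` lines of the Prometheus text format. The helper names `metric_families` and `missing_metrics` are made up for this example.

```python
def metric_families(text):
    """Map each metric family name to its type, based on '# TYPE' lines."""
    families = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 4 and parts[0] == "#" and parts[1] == "TYPE":
            families[parts[2]] = parts[3]
    return families


def missing_metrics(text, expected):
    """Return the expected metric names that do not appear in the scrape."""
    present = metric_families(text)
    return sorted(name for name in expected if name not in present)


# Shortened excerpt of the worker output above.
SAMPLE = """\
# HELP ray_serve_deployment_replica_starts The number of times this replica has been restarted due to failure.
# TYPE ray_serve_deployment_replica_starts gauge
ray_serve_deployment_replica_starts{deployment="Query",replica="Query#jUDNiX"} 1.0
# HELP ray_serve_replica_processing_queries The current number of queries being processed.
# TYPE ray_serve_replica_processing_queries gauge
ray_serve_replica_processing_queries{deployment="Query",replica="Query#jUDNiX"} 1.0
"""

expected = [
    "ray_serve_deployment_replica_starts",
    "ray_serve_deployment_queued_queries",
]
print(missing_metrics(SAMPLE, expected))
```

Note that the names in the endpoint carry a `ray_` prefix, so the expected list has to use the prefixed form. Running this against the text from every node would show which expected metrics are missing everywhere rather than just on one worker.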

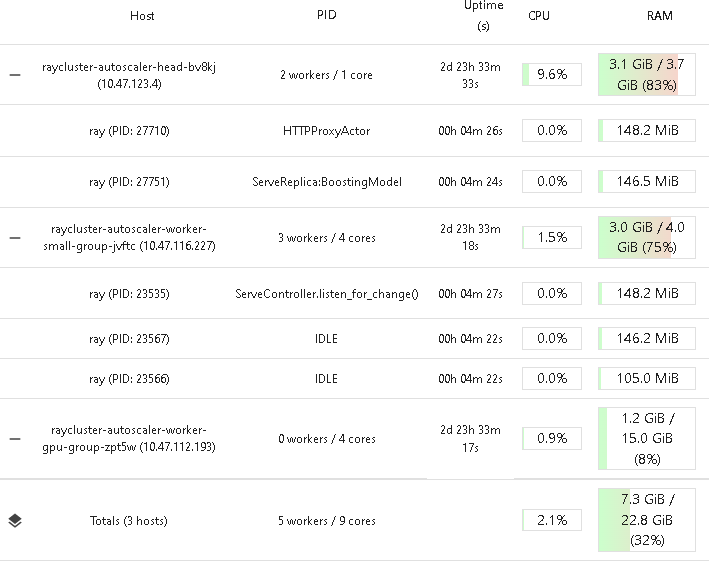

Ok, I have figured out what is happening.

A handful of the Ray Serve metrics are ONLY collected when the HTTP request is used to query and not when queried directly from Python:

ray_serve_deployment_queued_queries, ray_serve_num_router_requests, ray_serve_handle_request_counter, ray_serve_num_http_requests

See below the additional metrics when deploying with the HTTP server and calling from the HTTP server.

Shouldn't the documentation cover which metrics are expected to show up and when? Maybe the "Calling Deployments via HTTP and Python" section should also explain the difference, namely that when calling from Python you get very few metrics.

And we might need to raise a separate ticket to get more metrics added to the Python calling scenario - to match the HTTP calling one.
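As a starting point for such documentation, the findings so far could be written down as a small lookup. This is only a sketch of what was observed in this thread on v2.0.0rc0, not an official list; the names `SEEN_WITH_HANDLE`, `HTTP_ONLY`, and `expected_metrics` are made up here, and it assumes that HTTP calls also produce the replica-level metrics seen with the Python handle.

```python
# Metric names as reported in this thread for Ray v2.0.0rc0 (not an official list).
SEEN_WITH_HANDLE = frozenset({
    "ray_serve_deployment_replica_starts",
    "ray_serve_deployment_processing_latency_ms",
    "ray_serve_replica_processing_queries",
    "ray_serve_deployment_request_counter",
})

HTTP_ONLY = frozenset({
    "ray_serve_deployment_queued_queries",
    "ray_serve_num_router_requests",
    "ray_serve_handle_request_counter",
    "ray_serve_num_http_requests",
})


def expected_metrics(via_http):
    """Return the metric names one might expect for the given call path."""
    if via_http:
        # Assumption: HTTP calls also update the replica-level metrics.
        return SEEN_WITH_HANDLE | HTTP_ONLY
    return SEEN_WITH_HANDLE


for name in sorted(HTTP_ONLY):
    print(f"{name}: reported only when queried over HTTP")
```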

Hi @zoltan-fedor, thanks for digging into this issue! I agree that it would be helpful for the docs to discuss the metrics that show up via HTTP requests vs via Python handle. Do you mind filing two tickets, so we can track this:

- Serve should document which metrics show up due to HTTP and which ones show up due to a Python handle.

- Serve should add more Python handle metrics to mirror HTTP requests.

If you have the bandwidth, it would be helpful if you could submit a PR addressing the first one. If you're interested in that, please let us know, and we can walk you through that process. Thanks again for looking into this!

Hi @shrekris-anyscale, sure, I am happy to raise the two tickets and also make a PR for #1. My only concern with the PR is that I haven't yet found where the Python handle goes, so I have not yet seen where in the code its metrics are being (or might be) collected. As a result, whatever documentation PR I make would be based mostly on trial and error on the Python handle side.

> Sure, I am happy to raise the two tickets and also make a PR for #1.

Thanks, that would be great! We really appreciate the help.

> My only concern with the PR is that I haven't yet found where the Python handle goes, so I have not yet seen where in the code its metrics are being (or might be) collected. As a result, whatever documentation PR I make would be based mostly on trial and error on the Python handle side.

Ah, that's a fair concern. For now, how about we add two short notes to the docs:

- To the monitoring page, listing which metrics are collected only for HTTP.

- As you proposed, to the Python `ServeHandle` section, mentioning that certain metrics won't be collected with this approach.