No provision to pass labels to machine pools and nodes

Hello,

We have a requirement to run multiple machine pools and deploy a different kind of workload to each pool.

I was thinking of applying labels to these pools and giving each deployment a nodeSelector, so that it is scheduled only onto nodes with the matching label.
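For illustration, the deployment side of that plan could look roughly like the sketch below. It assumes the hashicorp/kubernetes Terraform provider is already configured against the downstream cluster; the resource name, image, and the `workload-type` label are hypothetical and not taken from this thread.

```hcl
# Sketch only: a workload pinned to nodes that carry an assumed label.
# Assumes a configured "kubernetes" provider pointing at the downstream cluster.
resource "kubernetes_deployment" "batch_demo" {
  metadata {
    name = "batch-demo" # hypothetical name
  }

  spec {
    replicas = 2

    selector {
      match_labels = {
        app = "batch-demo"
      }
    }

    template {
      metadata {
        labels = {
          app = "batch-demo"
        }
      }

      spec {
        # Pods are only scheduled onto nodes that carry this label.
        node_selector = {
          "workload-type" = "batch" # assumed node label
        }

        container {
          name  = "web"
          image = "nginx:1.25" # placeholder image
        }
      }
    }
  }
}
```

This only works if the nodes of the target pool actually carry the label, which is the part the rest of this issue is about.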

I can't see any option for this in the Terraform resource `rancher2_cluster_v2`: https://registry.terraform.io/providers/rancher/rancher2/latest/docs/resources/cluster_v2#machine_pools

Does anyone have a bright idea how I can achieve that?

Thanks

I can see that an option to add labels is available in the GUI (in the advanced options). Not sure if I am missing anything in the Terraform module.

Hi,

We have also tried setting the labels on machine pools, which seems to be supported: https://github.com/rancher/terraform-provider-rancher2/blob/master/rancher2/schema_cluster_v2_rke_config_machine_pool.go#L143

This populates the metadata on the MachineDeployment, but the nodes are not created with the labels, because the spec under the template is not set correctly.

Are we missing something?

Thanks

Hi,

We ran into this as well and were able to figure it out.

Flow

The flow (backwards) to get labels passed to the eventual nodes:

- (A) The MachineDeployment needs to have it in `spec.template.metadata.labels`
- (B) A controller in Rancher takes the RKEMachinePool object and creates the MachineDeployments. (link to relevant file). It is looking at data from `provisioning.cattle.io/v1/Cluster`, specifically the field `spec.rkeConfig.machinePools`
- (C) The `provisioning.cattle.io/v1/Cluster` with machinePools inside is sent from this provider to Rancher. And this is the file where the flattening/expanding between the RKEMachinePool and schema is happening.
- (D) And last but not least: The schema in this provider

Where is the Problem

Following through all of this we see:

- that (B) does create those labels we want: Link. It takes it from `machinePool.Labels` and assigns it to `machineDeployment.Spec.Template.Labels` -> all good
- (C) This provider does NOT set the `machinePool.Labels`: Link -> so there is no way to set this at the moment through the provider.

But we can set some labels?

But at the moment, as pointed out by @azafairon, labels can be set in the Machine Pool through the common labels/annotations mechanism.

However, these labels get assigned to `RKEMachinePool.MachineDeploymentLabels`, which, as the name suggests, end up as labels of the MachineDeployment (i.e. labels of the resource itself, not of the spec). (Link)

How to fix/make it possible

What I don't know is whether the above behaviour is intended.

Are the (undocumented) labels of the machine_pool resource meant to:

- be applied to the resource of the MachineDeployment. (current behaviour)

- be applied to the resulting "machine"/node (behaviour we want in this issue)

Depending on the above the fix would be to either:

- (solution A) change the labels to assign to the correct place in machinePool struct (and then it will just work)

- (solution B) or create a new variable in the schema (maybe `machine_labels`?) that will make it to the correct place?

Both are a pretty minimal change. I tried out (solution B), and it worked as intended. (see linked PR)
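A minimal sketch of how (solution B) would look from the Terraform side, assuming the `machine_labels` field name from the linked PR. The resource name, variables, and label keys are hypothetical; the comments restate where each map is described as ending up in this thread.

```hcl
# Sketch only: field placement follows the description in this thread.
resource "rancher2_cluster_v2" "example" {
  name               = "example" # hypothetical
  kubernetes_version = "v1.24.7+rke2r1"

  rke_config {
    machine_pools {
      name                         = "workers"
      cloud_credential_secret_name = var.cloud_credential_id # hypothetical variable
      worker_role                  = true
      quantity                     = 1

      # Existing field: ends up in metadata.labels of the MachineDeployment resource.
      labels = { "team" = "platform" }

      # Field proposed in the linked PR: ends up in the machine template,
      # and therefore on the resulting machines.
      machine_labels = { "workload-type" = "batch" }

      machine_config {
        kind = var.machine_config_kind # hypothetical variables
        name = var.machine_config_name
      }
    }
  }
}

variable "cloud_credential_id" {}
variable "machine_config_kind" {}
variable "machine_config_name" {}
```

Keeping `labels` and `machine_labels` as separate maps leaves the current behaviour of `labels` untouched, which is the main difference from (solution A).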

Kind regards, Marc

We are getting the same behavior :cry:

Not sure what the labels that end up as `spec.rkeConfig.machinePools.machineDeploymentLabels` in the cluster CRD are for, but we definitely need to be able to define `spec.rkeConfig.machinePools.labels` through the provider...

Hi, this is a big gap in the ability to provision clusters in the new provisioning framework. For example, there is no way to set up dedicated Ingress nodes. Any chance we will see some traction on #951?

As far as I can tell from https://registry.terraform.io/providers/rancher/rancher2/latest/docs/resources/machine_config_v2 (Note: labels and node_taints will be applied to nodes deployed using the Machine Config V2), you are supposed to set node labels via `machine_config_v2`. But there seems to be a bug preventing you from doing so, see issue https://github.com/rancher/terraform-provider-rancher2/issues/976.

#951 was merged yesterday, so this issue can be tested with the latest commit of the provider.

Test steps:

1. Create a new `.tf` file for an RKE2 cluster on any cloud provider
2. When defining the `machine_pools` block, set a value for both the `labels` and `machine_labels` fields; the exact value is not important
3. Bring the cluster up, and import it into Rancher
   a. This is just done to make inspecting the YAML easier; this verification can also be done with `kubectl`
4. Using the Rancher UI, navigate to the provisioned machine and view its YAML.
5. Check the `metadata.labels` field and ensure the `machine_labels` value is attached to the machine YAML
6. On the left-hand side of the UI, under `Advanced`, find the `MachineDeployment` for your newly created cluster.
7. Ensure that the `MachineDeployment` `metadata.labels` field has the value specified in the `labels` field within the `.tf` file.

Ticket #949 - Test Results - ✅

With Docker on a single-node instance, using terraform rancher2 provider v1.25.0:

Verified on rancher v2.7.0:

1. Fresh install of rancher `v2.7.0`
2. Using `main.tf` below, provision requested RKE2 infrastructure w/ both `labels` and `machine_labels` defined in `machine_pools` resource block

```hcl
terraform {
  required_providers {
    rancher2 = {
      source  = "rancher/rancher2"
      version = "1.25.0"
    }
  }
}

provider "rancher2" {
  api_url   = var.rancher_api_url
  token_key = var.rancher_admin_bearer_token
  insecure  = true
}

data "rancher2_cloud_credential" "rancher2_cloud_credential" {
  name = var.cloud_credential_name
}

resource "rancher2_machine_config_v2" "rancher2_machine_config_v2" {
  generate_name = var.machine_config_name
  amazonec2_config {
    ami            = var.aws_ami
    region         = var.aws_region
    security_group = [var.aws_security_group_name]
    subnet_id      = var.aws_subnet_id
    vpc_id         = var.aws_vpc_id
    zone           = var.aws_zone_letter
  }
}

resource "rancher2_cluster_v2" "rancher2_cluster_v2" {
  name                                     = var.cluster_name
  kubernetes_version                       = "v1.24.7+rke2r1"
  enable_network_policy                    = var.enable_network_policy
  default_cluster_role_for_project_members = var.default_cluster_role_for_project_members
  rke_config {
    # etcd-only pool
    machine_pools {
      name                         = "pool1"
      labels                       = { "jkeslar1" = "true", "remy1" = "false" }
      machine_labels               = { "jkeslarML1" = "true", "remyML1" = "false" }
      cloud_credential_secret_name = data.rancher2_cloud_credential.rancher2_cloud_credential.id
      control_plane_role           = false
      etcd_role                    = true
      worker_role                  = false
      quantity                     = 1
      machine_config {
        kind = rancher2_machine_config_v2.rancher2_machine_config_v2.kind
        name = rancher2_machine_config_v2.rancher2_machine_config_v2.name
      }
    }
    # control-plane-only pool
    machine_pools {
      name                         = "pool2"
      labels                       = { "jkeslar2" = "true", "remy2" = "false" }
      machine_labels               = { "jkeslarML2" = "true", "remyML2" = "false" }
      cloud_credential_secret_name = data.rancher2_cloud_credential.rancher2_cloud_credential.id
      control_plane_role           = true
      etcd_role                    = false
      worker_role                  = false
      quantity                     = 1
      machine_config {
        kind = rancher2_machine_config_v2.rancher2_machine_config_v2.kind
        name = rancher2_machine_config_v2.rancher2_machine_config_v2.name
      }
    }
    # worker-only pool
    machine_pools {
      name                         = "pool3"
      labels                       = { "jkeslar3" = "true", "remy3" = "false" }
      machine_labels               = { "jkeslarML3" = "true", "remyML3" = "false" }
      cloud_credential_secret_name = data.rancher2_cloud_credential.rancher2_cloud_credential.id
      control_plane_role           = false
      etcd_role                    = false
      worker_role                  = true
      quantity                     = 1
      machine_config {
        kind = rancher2_machine_config_v2.rancher2_machine_config_v2.kind
        name = rancher2_machine_config_v2.rancher2_machine_config_v2.name
      }
    }
  }
}

# Rancher specific variable section.
variable "rancher_api_url" {}
variable "rancher_admin_bearer_token" {}
variable "cloud_credential_name" {}

# AWS specific variables.
variable "aws_access_key" {}
variable "aws_secret_key" {}
variable "aws_ami" {}
variable "aws_region" {}
variable "aws_security_group_name" {}
variable "aws_subnet_id" {}
variable "aws_vpc_id" {}
variable "aws_zone_letter" {}

# RKE2/k3s specific variables.
variable "machine_config_name" {}
variable "cluster_name" {}
variable "enable_network_policy" {}
variable "default_cluster_role_for_project_members" {}
```

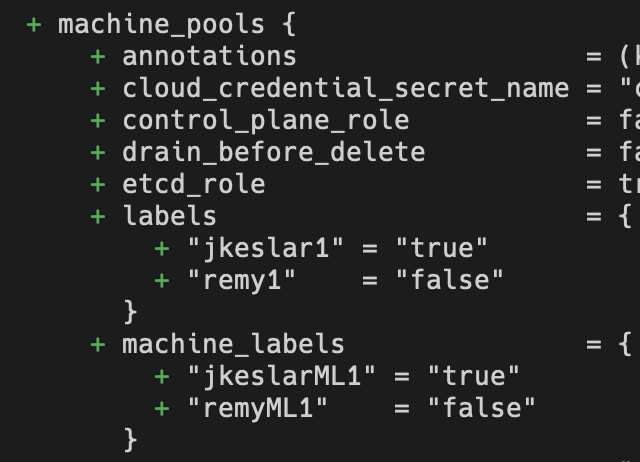

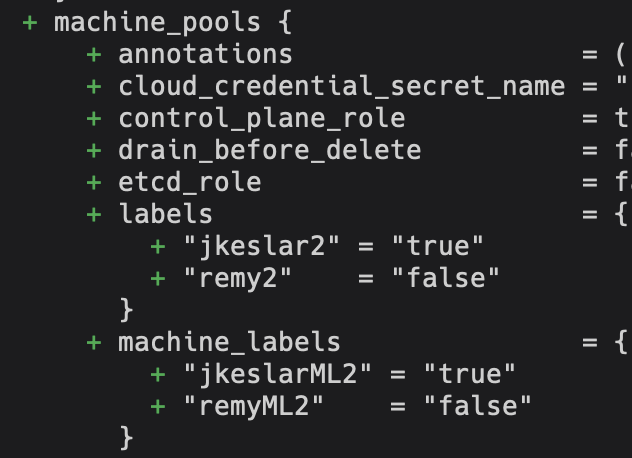

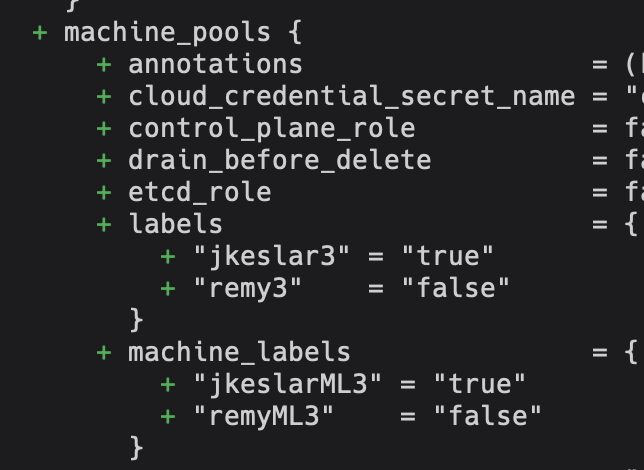

- Verified - TF plan accurately reflects requested

labelsandmachine_labelsonmachine_poolsresource block - Verified - Cluster successfully provisions

- Verified - Accurate

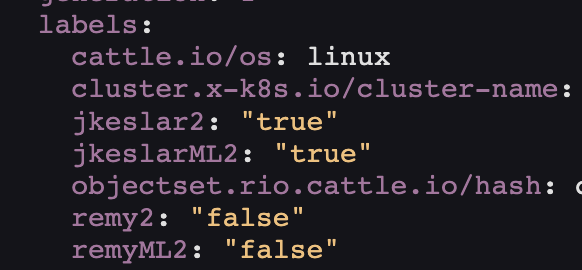

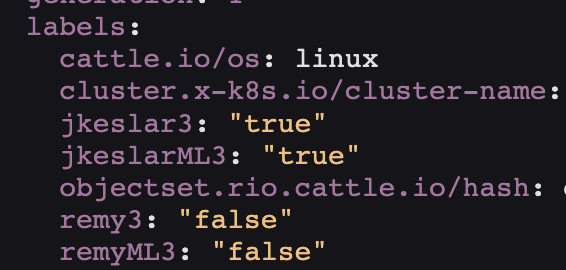

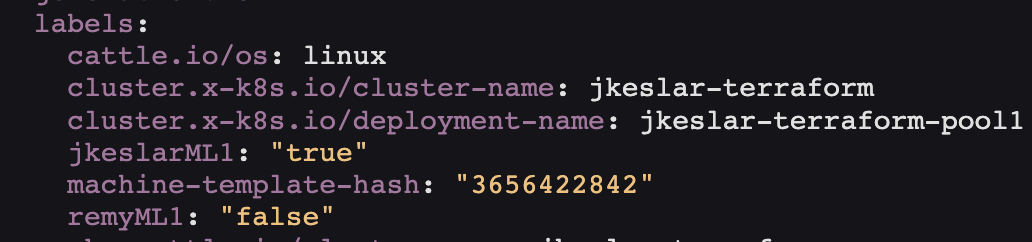

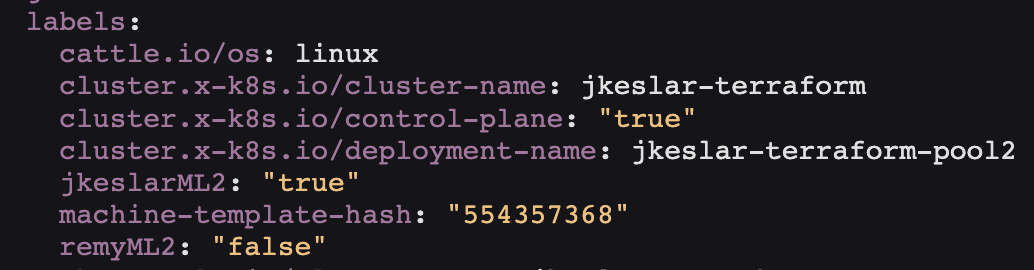

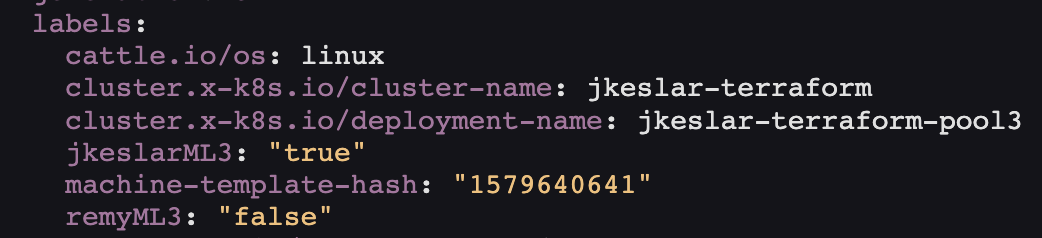

labelsandmachine_labelsobserved in YAML via RancherUI

Screenshots:

Step 3 - TF Plan

Step 4

Step 5 - Machine YAML

Step 5 - MachineDeployment YAML