rails

rails copied to clipboard

rails copied to clipboard

Allows associations to be automatically preloadable.

Summary

Before we load an association for the first time, we can preload the association. This will eliminate most standard N+1 queries in Rails apps.

We do this by capturing the group of records returned by a query in an AutomaticPreloader and having each record have a reference to it.

Once a record has been flagged to allow automatic preloading, subsequent associations are also flagged the same so this will allow deeply nested associations to all be dynamically preloaded

This is off by default and can be opted in with each relation. There is also a global option to enable it by default

For example: This code snippet:

developers = Developer.order(:id).limit(2).to_a

developers.each do |developer|

developer.contracts.to_a.first.company

end

would typically result in a few N+1 queries. The queries would look something like:

SELECT "developers".* FROM "developers" ORDER BY "developers"."id" ASC LIMIT 2

SELECT "contracts".* FROM "contracts" WHERE "contracts"."developer_id" = 1

SELECT "companies".* FROM "companies" WHERE "companies"."id" = 1 LIMIT 1

SELECT "contracts".* FROM "contracts" WHERE "contracts"."developer_id" = 2

SELECT "companies".* FROM "companies" WHERE "companies"."id" = 2 LIMIT 1

With automatic_preloading added (Developer.order(:id).automatic_preloading.limit(2).to_a), the same code would generate queries like:

SELECT "developers".* FROM "developers" ORDER BY "developers"."id" ASC LIMIT 2

SELECT "contracts".* FROM "contracts" WHERE "contracts"."developer_id" IN (1, 2)

SELECT "companies".* FROM "companies" WHERE "companies"."id" IN (1, 2)

I'm really curious about this feature!

Correct me if I'm wrong, but I assume currently we would prevent n+1 queries by explicitly preloading associations like

developers = Developer.includes(contracts: :company).order(:id).limit(2).to_a

And with the proposed change we will basically defer db queries to a later time whenever the association get's accessed without forcing developer to know in advance what kind of data they need later in the code. I can definitely see how it would prevent unintentional n+1 issues, however, and it may be my personal and a bit conservative point of view but I can see benefits in defining preloads explicitly and well in advance. Let's consider an example like:

# developers_controller.rb

def index

@developers = Developer.order(:id).automatic_preloading.limit(2).to_a

end

# developers/index.html.erb

<h1>Developers list</h1>

<% @developers.each do |developer| %>

<div id=<%= developer.id %>>

<h2>Contracts for developer <%developer.name%></h2>

<ul>

<% developer.contracts.each do |contract| %>

<li>Contract <%=contract.title%> with company <%= contract.company.name %></li>

<% end %>

</ul>

</div>

<% end %>

Which, as per my understanding, will basically cause the view layer to execute db queries, which doesn't generally sound like a good practice.

We could probably avoid making queries in the view layer by having some kind of a presenter that explicitly loads objects, but having a presenter is not always necessary and it would be an overkill to have a separate object that explicitly loads records. Kind of goes against the whole idea of automatic preloads.

So what I'm trying to say, I'm just worrying that such feature will make more appealing to build such implicit designs and perhaps complicate query debugging. I personally prefer to design classes in such way to load data from datastores as early as possible and make every other part of the system almost query-less which makes it really easy to write fast unit tests that don't require to communicate with any datastore.

Again, I may be just too conservative in regards to this particular feature, but it feels like I'd still prefer explicit preloads and some separate tooling to prevent n+1 queries in the system, for example https://github.com/palkan/n_plus_one_control

@nvasilevski

You are correct that currently how to prevent an N+1 query would be to explicitly use the includes and that it would cause the view layer to execute db queries. This could be a big change from how N+1 queries have been handled in the past.

That being said, there are few points here that I'd like to make

- This feature doesn't interfere with the ability to add

includes/preload/eager_loadon the query ahead of time. That can still happen. - If somehow you missed an

includes, the view layer would still be the place where db queries are being executed. - When using

includesas part of the query, a developer needs to know both where the query is being used, and where it is being setup. In some cases like the example you outline, if could be really straightforward (the view's controller), but if it was a partial, or if the query came from some kind of service object, suddenly this is no longer clear. - For simple examples it is often easy, but consider something complicated like a GraphQL API where users can specify what kind of responses they want back. Preloading that could require a lot of work (pulling apart the user's request, and figuring out the associations required). With something like this, Rails would take care of the heavy lifting and only preload associations as needed.

I think, with this feature, organizations can experiment with it being enabled by default, and can view for themselves what kind of performance improvement they would see. Anecdotally, I've set this up in two organizations at scale, that the performance improvement has been extremely noticeable

At a glance this seems to have some strong overlap with #45071, which is perhaps a good sign that it's an idea whose time has come.

Can you (cc @gwincr11) explore the similarities and differences in the proposed APIs and implementations? Don't focus on ending up with a single unified approach -- I imagine we'll have some strong opinions on the best fit for the framework -- but anything you can do to help ensure we're thinking about and considering all the right things would be great!

I can see some similarities between the two approaches. maybe @gwincr11 can correct anything that I have incorrect after trying to get up to speed with that PR.

General approachs:

| This branch | #45071 | |

|---|---|---|

| Enabling point | Uses either a global flag or a method called on an ActiveRecord relation to enable it | Wraps queries in a block to enable it |

| Record storage | Stores the array of records in ActiveRecord::Base#automatic_preloader |

Stores the array of records in ActiveRecord::Base#_load_tree |

Some things I notice:

- The block style approach does require constantly adjusting global state in order to determine if it is active. This approach can set a value in the global state initially, but otherwise only interacts with records pulled from a relation.

- With the block style approach, it would mean that query execution, and association access need to be located close to each other. (Consider the above example where the initial query is executed in a controller, but associations are accessed in the view). The approach in this PR will allow those queries to not be tied to each other.

- The block approach does give a way to explicitly disable it, whereas with this PR, once it is on for a set of records, it stays on

- The

_load_treeconcept is more generic and could theoretically be applied in other places. Knowing things like the object parent could be useful for further tooling. However, it does appear that the_load_treeis always created (even if it isn't in a dynamic preloading block) - The globally enabled aspect of this branch doesn't appear to be present with the other approach yet. I think this is really important. Turning it on globally has been a major performance improvement in the past. It also reduces developer overwhere where they no longer need to think about when to utilize it, Rails will handle it for them.

👋 Hi All,

Excited to see another idea in this area. Kudos! This actually looks remarkably similar to where I started with work on my branch. I iterated a few times and ended up in a slightly different spot but spiritually the same. I am happy to see some one else hacking in this area! ❤️ I am not sure what kind of feedback cycles you have gone through with your approach, below I am going to outline some interesting things I have learned along my path of talking to people about my implementation perhaps we can find some ideal api based on our approaches.

The block style approach does require constantly adjusting global state in order to determine if it is active. This approach can set a value in the global state initially, but otherwise only interacts with records pulled from a relation.

The first implementation I put together was turned on globally, much like yours. When I showed this to other developers I encountered a lot of push back around, the thought being it is pretty dangerous to have this just work everywhere and that people did not trust the magic. The preference expressed seemed to be allowing for a more slow opt-in roll out when working in complex applications.

One other thing I learned that suprised me, there are some scenarios where developers do not want dynamic include behavior and instead being able to turn it on and off at will is desired for performance reasons. Mainly this happens when there is a a need to pull an array of models into memory select only certain things out of the array and then only load a relation just for the selected items.

All that being said it is possible to turn on my implementation globally https://github.com/rails/rails/pull/45071/files#diff-31163cd511366615dd55b9ccefa35413065237f0d3b5d7bd8a60b9adb3d6e602R8-R10

ActiveRecord.dynamic_includes_enabled = true

I think having both is important.

With the block style approach, it would mean that query execution, and association access need to be located close to each other. (Consider the https://github.com/rails/rails/pull/45231#issuecomment-1143991277 where the initial query is executed in a controller, but associations are accessed in the view). The approach in this PR will allow those queries to not be tied to each other.

So this one is interesting, if the user wraps the render call from the controller in the block the render call happens with the setting in scope:

class User

def show

ActiveRecord.enable_dynamic_includes do

@user = User.find(params[:id])

@microposts = @user.microposts.paginate(page: params[:page])

render "users/show"

end

end

end

If doing implicit rendering then the scope is incorrect.

class User

def show

ActiveRecord.enable_dynamic_includes do

@user = User.find(params[:id])

@microposts = @user.microposts.paginate(page: params[:page])

end

# render actually happens here

end

end

However if you use an around action filter, it will again work.

class User

around_action :dynamic_includes

def show

@user = User.find(params[:id])

@microposts = @user.microposts.paginate(page: params[:page])

end

end

private

def dynamic_includes

ActiveRecord.enable_dynamic_includes do

yield

end

end

end

I did play around with passing state between objects like you have, but it became confusing when a developer wanted to turn it off somewhere in the method chaining and then some one else wanted it on else where. Since we have complex depth of calls, setting it at the call site was the most understandable pattern we could find as it allows developers to set the performance need at the place of need, and once that place of need is exited the performance tuning goes back to what it was prior. For example if I have:

class User

def some_slow_method

ActiveRecord.disable_dynamic_includes do

posts.select(:user).each do |user|

# this is some slow db query that we only want to happen when really neccesary

user.slow_association.update

end

end

end

ActiveRecord.enable_dynamic_includes do

User.all.each do |u|

u.some_slow_method # N+1 by design

u.someother_relation # dynamic include fixes n+1

end

end

We will not incur the full cost of loading all the slow associations, only the ones that are selected.

The block approach does give a way to explicitly disable it, whereas with this PR, once it is on for a set of records, it stays on

I also tried this approach and it was put us in a position of sometimes over fetching records that were not used on the page, allowing developers to turn it off can lead to more performant usage in some scenarios as mentioned above. Also interestingly the developer manually adding the includes can sometimes help the performance because there is more context available during preloading ahead of time, leading to more optimizations. Dynamic includes should be viewed as a sane default, that may at this time not be as performant as using the includes option has been my finding.

The _load_tree concept is more generic and could theoretically be applied in other places. Knowing things like the object parent could be useful for further tooling. However, it does appear that the _load_tree is always created (even if it isn't in a dynamic preloading block)

In order for dynamic includes to work it must be present. However, I have been splitting up that larger pr and have a new version that allows the developer to turn it on and off. As for the load tree implementation, we would prefer to have a more open API so that we can tie together other services and gems such as Prelude and our Twirp service layer.

The globally enabled aspect of this branch doesn't appear to be present with the other approach yet. I think this is really important. Turning it on globally has been a major performance improvement in the past. It also reduces developer overwhere where they no longer need to think about when to utilize it, Rails will handle it for them.

As I mentioned above my proposed approach allow for both a global approach and a block based approach.

Thanks again for working in this area ❤️

I am also excited that you have ran this on other code bases and seen big improvements. In my tests I have seen really large N+1 queries get fixed I added some logging to my pr to output when it happens and it's a bit shocking in practice how frequently you see the fixes occur and also where.

I've encountered something similar with developer resistance because of the magic. However, once people been using it while it is enabled globally, they typically don't want to go back to manually handling it. But it is good to allow people to make their own choices.

One other thing I learned that suprised me, there are some scenarios where developers do not want dynamic include behavior and instead being able to turn it on and off at will is desired for performance reasons. Mainly this happens when there is a a need to pull an array of models into memory select only certain things out of the array and then only load a relation just for the selected items.

If they are wanting to load all of the records into memory and select certain ones out, I think it would still be advantageous to preload the association. Though this is different if they are querying the relation for a subset of data. I can imagine everyones setup is different.

Having a way to disable it for a records or an association could be pretty useful and I think that could be pretty straightforward to add if we wanted to.

Also I don't think I noted it earlier, but my work has stemmed from this gem that I made several years ago.

If they are wanting to load all of the records into memory and select certain ones out, I think it would still be advantageous to preload the association. Though this is different if they are querying the relation for a subset of data. I can imagine everyones setup is different.

I also felt this way, I was suprised to learn that people where so strongly opposed to this.

Having a way to disable it for a records or an association could be pretty useful and I think that could be pretty straightforward to add if we wanted to.

I agree it is easy to do, I think getting the api right is hard. Some things I think are worth considering:

- The association may need to be used differently in different contexts.

- Developers should be able to define a method that sets up preferred performance characteristics for all callers.

- As a method developer I should be able to define how I want my loading behavior to work, but when it interacts with a method I do not know the performance characteristics of that method should work in the optimum way and then revert back to the performance characteristic my code needs.

Also I don't think I noted it earlier, but my work has stemmed from this gem that I made several years ago.

Nice I did not know this existed. I wonder if we could use this to get some more metrics around how this affects memory usage and performance in some real world applications?

So currently in this PR we have the automatic_preloading method that can be used both to enable or disable (very similar to how strict_loading works)

So someone could do Developer.order(:id).automatic_preloading(false) to disable it (if we was already enabled on a scope or it was globally enabled)

The only thing around disabling that is missing is for individual objects after the initial query has been made. (Theoretically they could disable it by modifying Record#automatic_preloader in this PR, but we could make it much more explicit.)

Re: Memory usage.

I don't have any information on memory usage. My argument that it wouldn't really matter that much is that most of the time, we would have loaded the same amount of objects anyways. However, there are situations, like @gwincr11 outlined, where there may be a really slow associations that we would only load on one object that would now be loaded on multiple records. Anecdotally, we have noticed no significant memory impact.

Re: Performance.

I've measured the performance of globally enabling this in two organization. Here is the changes in general response times across the latest codebase from before/after globally enabling the N+1 automatic loading. (this codebase was roughly getting about 1M requests per hour at the time)

| Before | After | |

|---|---|---|

| P50 | 55.56ms | 57.61 |

| P75 | 101.66ms | 93.26ms |

| P90 | 215.75ms | 166.81ms |

| P95 | 349.52ms | 239.83ms |

| P99 | 1.08s | 669.54ms |

This data was also consistent with the previous code base I set it up on though I no longer have access to the raw numbers. The requests per hour were also well into the millions)

This to me has been really strong signal that N+1 constantly sneak through and into production, and the benefits of being able to globally remove them is really huge.

@matthewd Any additional thoughts you have here?

Hey folks, I just wanted to share an opinion that may sound a little defensive in regards to this feature. Luckily I'm not making decisions so it's just an opinion and I'm definitely open for changing my mind :)

First of all I wanted to mention that I consider this feature useful and no doubt there are a lot of application that can immediately benefit from enabling/utilizing automatic preloading by not producing unnecessary queries. However, I feel like it's not completely suitable to be a "core" Rails feature. Let me quote something from the gem that, as far as I understood, was used as a foundation for the feature (https://github.com/clio/jit_preloader/):

When doing the preload, you have to understand what the code does in order to properly load the associations. When this is a brand new method or a simple method this may be simple, but sometimes it can be difficult or time-consuming to figure this out.

I find this argument reasonable to justify the usage of the new feature or the gem, however In my opinion automatic preloading won't solve the root cause: It's still hard to understand the code, hard to maintain it, hard to make assumptions about it and data loading is spread across multiple layers. The n+1 as a concept is still hidden somewhere deep in the code, even if it's not performing any sql queries. To me that's still a tech debt that needs to be addressed by refactoring the code in a meaningful way.

In other words, I don't believe automatic_preloading is something that can be recommended or required for a new application yet to be built, thus it's not suitable to be a part of the core capabilities.

For a new application what I would recommend instead is:

- Load data at a proper layer which is easy to extend and easy to make assumptions on what queries are going to be performed in order to fetch data required for a particular unit of work

- Write code that is easy to read and not time-consuming to extend

- Use tooling in development & test environments to prevent n+1 queries

- Use monitoring in production to react on any performance regressions

However, as I mentioned, this is still a great feature to work as a temporary (in my opinion) solution for applications that are struggling with n+1 queries. Which makes me thinking, is there anything in the Rails framework that could be changed in a way so the feature could stay as a separate library, but be "pluggable" into Rails as a preloader strategy (or some other abstraction) so the library itself shouldn't require any monkeypatches to be compatible with Rails and only rely on public API instead. At first glance it seems like that jit_preloader gem has some patches which, I assume, makes it harder to maintain especially to keep compatibility between Active Record versions

*This opinion is based on the assumption that all cases that produce n+1 could be refactored in a way that completely eliminates n+1 using existing Rails capabilities

@nvasilevski It's not always easy to voice (or to hear) comments in opposition to feature, but in the spirit of building the best thing, I think it is really important. Thanks for your opinion!

I have a few comments/thoughts from your post

The n+1 as a concept is still hidden somewhere deep in the code, even if it's not performing any sql queries

I would argue that if the code is not making any SQL queries or degrading performance, then it isn't an N+1 query. Thus there is no further need to dig into the root cause. The root cause (Data isn't preloaded), and its symptoms (Lots of queries + performance impact) are solved.

- Re: your recommendation flow I think in a vacuum this is ideal. However I don't think it really captures what is the case for non-trivial apps. Making assumptions about what code further down stream does with the queries is often not an easy activity. This is especially troublesome if some behaviour is conditional.

Already as well, additional non-rails tools are required to prevent N+1 queries, and doing this requires a lot of work. I actually think this is still something that we'd want to encourage because even if all of the current tools were used perfectly, there will still be N+1 queries (more in the next section)

In my experience with large Rails codebases, monitoring is something that developers are actively trying to do, but there are so many priorities. They will put lots of effort in, and still N+1 queries sneak through, and when they try to fix it, it does require a fair amount of time to investigate and fix. If it fix was provided by the framework so that this work didn't need to be done, that would be huge win for everyone.

Rather than require developers to be perfect in monitoring this subtle but impactful issue, we can give them the tools to focus on behaviours that are really important.

- Future thoughts

This opinion is based on the assumption that all cases that produce n+1 could be refactored in a way that completely eliminates n+1 using existing Rails capabilities

Not all N+1 queries currently can be solved with existing Rails capabilities. There are a handful that I can think of such as:

developers = Developer.order(:id).limit(2).to_a

developers.each do |developer|

developer.contacts.maximum(:created_at) # any kind of aggregation (sum, min)

developer.contacts.exists? # Existence checks

developer.contacts.where(company_id: 1) # Any kind of further query

developer.contacts.reload # Any explicit reloads of the association

end

I don't believe there is any current Built in way to handle this. With the addition of some object that collects records from queries (the automatic_preloader object in this PR, it was the _load_tree in https://github.com/rails/rails/pull/45071) these start to also be solvable as well. They wouldn't be automatically solvable in the way the standard query associations are, but tools can still be there so developers can opt into using them (with the aid of tooling in development & production)

By taking this first step, we are also laying some groundwork for how Rails could also provide solutions. And then utilizing the flow you outlined, the remaining N+1 queries can also be resolved.

If they are wanting to load all of the records into memory and select certain ones out, I think it would still be advantageous to preload the association. Though this is different if they are querying the relation for a subset of data. I can imagine everyones setup is different.

I also felt this way, I was suprised to learn that people where so strongly opposed to this.

@gwincr11 Per my experience, this "smart" handling of DB queries is almost impossible to be done in 100% correct way for all use-cases and that's maybe the reason. Anyone since this new feature is opt-in, I don't see any reason to be against it.

So currently in this PR we have the automatic_preloading method that can be used both to enable or disable (very similar to how strict_loading works)

@doliveirakn is there any particular reason to not make it similar to strict_loading (global flip - already present, relation method - already present, and per relation setup - missing) and call it smart_loading?

@simi Naming wise, I'm not against smart_loading. I do like how automatic does imply a little of what is does whereas smart may be a little unclear. That said, my opinion on the naming is not strong.

re: Per relation setup, I see no reason that couldn't be there. I initially didn't think of use cases where you'd want to enable it when setting up a particular association, but I'm open to adding that as required.

This is a great conversation, I am glad it is happening.

I started building the alternate proposed implementation at GitHub because we could not keep up with the number of performance issues N+1 queries we were experiencing, this was exacerbated by the introduction of GraphQL and the fact that Active Record is not the only data source we use. We have some parts of our code base that are over 400 lines of preload code that is tying together different datasources data or is loading data based on state machines. It seemed reasonable to me that Rails default solution should be the fastest possible data fetch and not the worst possible scenario, which it is today.

When I started building automatic preloading(dynamic preloading, smart preloading, fancy loading, magic loading) I thought our application would be in pretty all right shape as we have a lot of developers who have been dedicating a lot of time to performance recently and lots of tooling. When I turned it on the first time and ran just a portion of our controller tests I was shocked to see it fix thousands of N+1 queries that we missed, many in odd places most people do not look, some common places included:

- Deletion flows

- ActiveRecord callbacks

- When reaching across areas of responsibility into code that a developer maybe less likely to know about their is an increased chance of performance problems, for example our billing pages show data from all areas of the application and many times the developer does not know how to properly load data.

- Pages that do not get much attention.

These sorts of places do not benefit from the ideal performance work outlined above, many times people also make alterations to these pages unknowingly because of overlapping models leading to unintentional performance regressions.

We use a number of N+1 checking tools and I was also surprised that these are frequently incorrect or missing N+1's that the autoload tool I built and this proposal fixed. We had over 200 tests that listed out the number of queries that a developer set as ideal, in most cases the tool cut them in half if not more.

GitHub does have some completely different data needs than most Rails applications which increases the complexity of loading this data, especially since we jump between RPC calls, API's, Files systems and Active Record tying all this data together as if it where an association, using tools like Prelude and Platform Loaders. My implementation deals with this by offering an api that can be shared between any data loading tooling, I feel it is important to Active Record and GitHub that the ActiveRecord data used for this preloading methodology be extendable to allow for other gems to work with it and add their own needs.

Here is the original PR I opened which @eileencodes asked me to break up: https://github.com/rails/rails/pull/45071

And the subsequent 3 pr's I created from her request:

- Load Tree, the abstraction that makes dynamic includes and the debugging view possible https://github.com/rails/rails/pull/45161

- Dynamic Includes: https://github.com/rails/rails/pull/45413

- A new Active Record Debugging tool based on Load Tree: https://github.com/rails/rails/pull/45565

I would be very excited to see this land in Rails in some form.

There are couple of cases that I think need to be carefully considered when designing auto-preloading behavior.

First - when performing queries in batches - we should be careful that we do not contain references to the full batch of records after we have finished processing the batch.

For example, in

rare_models = SomeModel.find_each.select(&:some_rare_condition?)

rare_models.first.some_association.load

If each record in rare_models contains a reference to an AutomaticPreloader that has a reference to 1000 other records - then we are going to see some memory bloat.

If loading an association on such a model loads it on the full batch - then we are starting to look at very large numbers of unexpected ActiveRecord instances.

I would suggest that we should clear out the AutomaticPreloader for each record when we complete running a batch so that we don't see memory bloat or start loading excessive numbers of records. It would still be useful to be able to auto preload while processing the batch.

The second case is when using multifetch caches. When we use a multifetch cache in the view - then the records that hit the cache no longer need to have the associations loaded as they are no longer likely to be referenced from the view. Depending on the hit rate - it may be more performant to load the individual associations instead of loading for all the associations for all of the records. Ideally - we should be able to set a new AutomaticPreloader for the records that missed the cache so that they can preload together - but we would also need to consider how we handle nested cache checks where only a subset of the records are being checked in the cache. There is currently a behavior to only preload associations for records that miss the cache (implemented in #31250 - and having this auto preloading behavior would make it possible to make a much more elegant solution for this - as long as we expose APIs to manipulate the AutomaticPreloader.

I don't think that this should be an issue for implementing automatically preloading that is opt in at the point of call - but I think that it would be good to have robust solutions for these cases before we start enabling it by default (which I really would love to have at some point).

Just pinging more here as it has been a few months with no further activity. Is there anything I can do to help move this forward more?

cc: @eileencodes I figured I'd ping you on this as you were also looking at https://github.com/rails/rails/pull/45071 which was aiming to solve a similar problem and I'd be interested in your feedback and getting this PR moving again.

Can you provide a benchmark of what a smart loaded and manually preloaded association looks like? What are the performance implications of smart preloading, and why wouldn't we just use it by default?

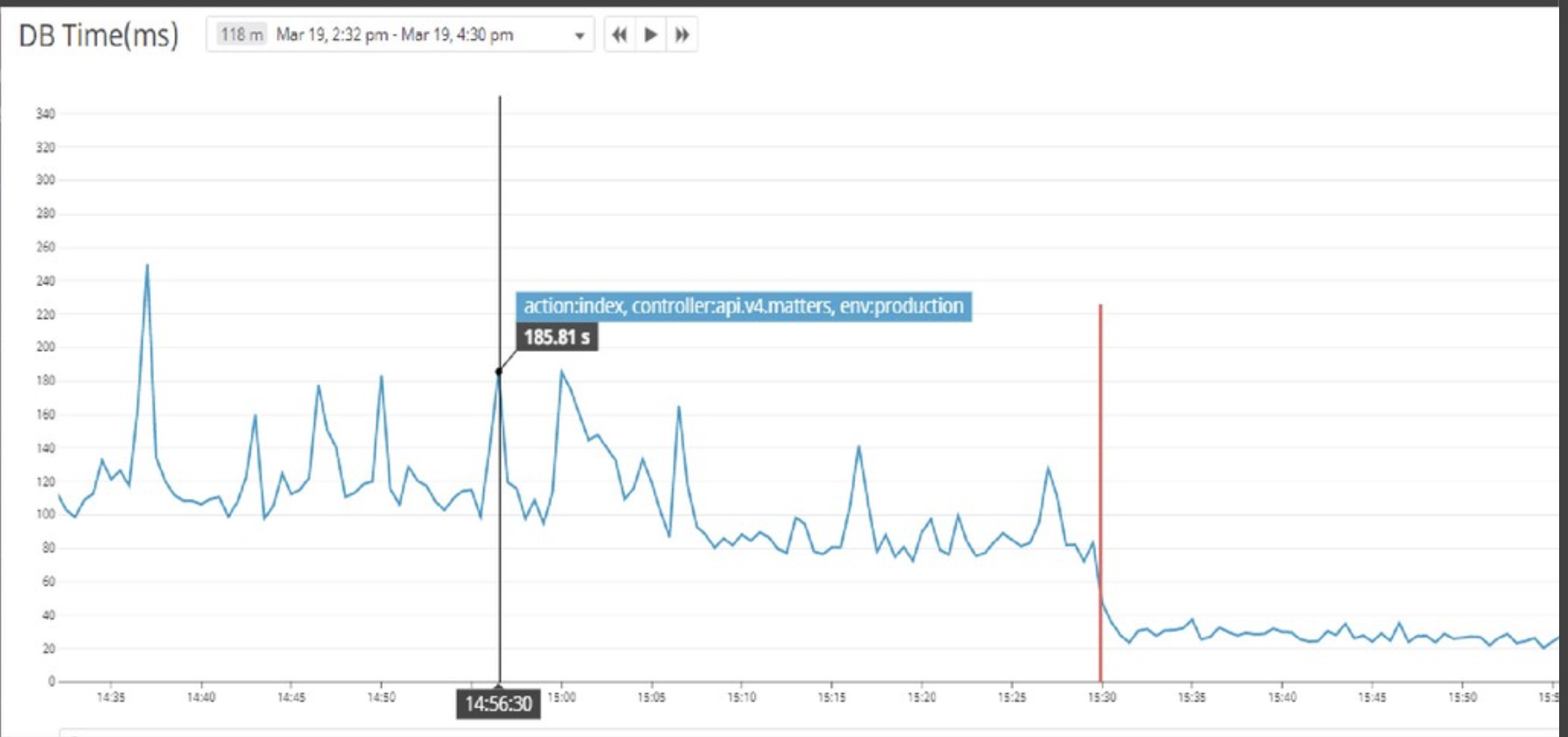

So here is a performance graph from a single endpoint that I used this smart/automatic preloading on.

The red line is when I turned it on. The graph shows aggregate time used by the request. You can see that controller action was taking between 80-240s worth of time per increment. This is because the usage of the data varied wildly. Sometimes there would be few records and few associations, and other times, there would be many of each. There was still N+1 queries even though there was efforts put in to do preloading. The N+1 queries is why the time usage is all over the map.

After this was turned on, it dropped very significantly to the 35-40s area and was fairly consistent.

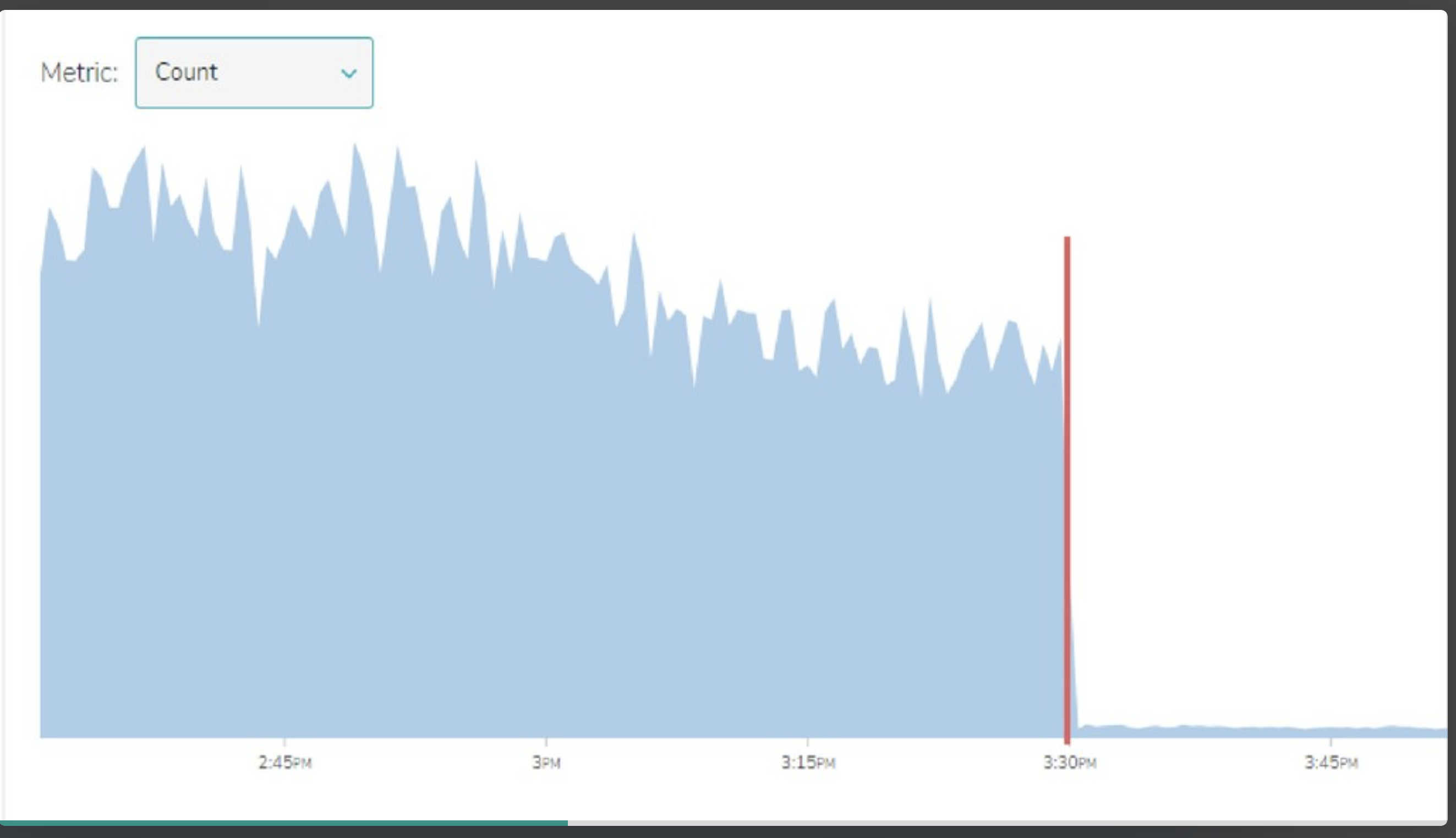

This image is of the total number of N+1 queries in happening in the app. Unfortunately the y-axis is cutoff and I no longer have access to the original data source, but you can see that at the time it was turned off, the number of N+1 queries dropped off very significantly.

(If you are curious why the number isn't 0, there are examples back in this comment that explains how N+1 could still exist)

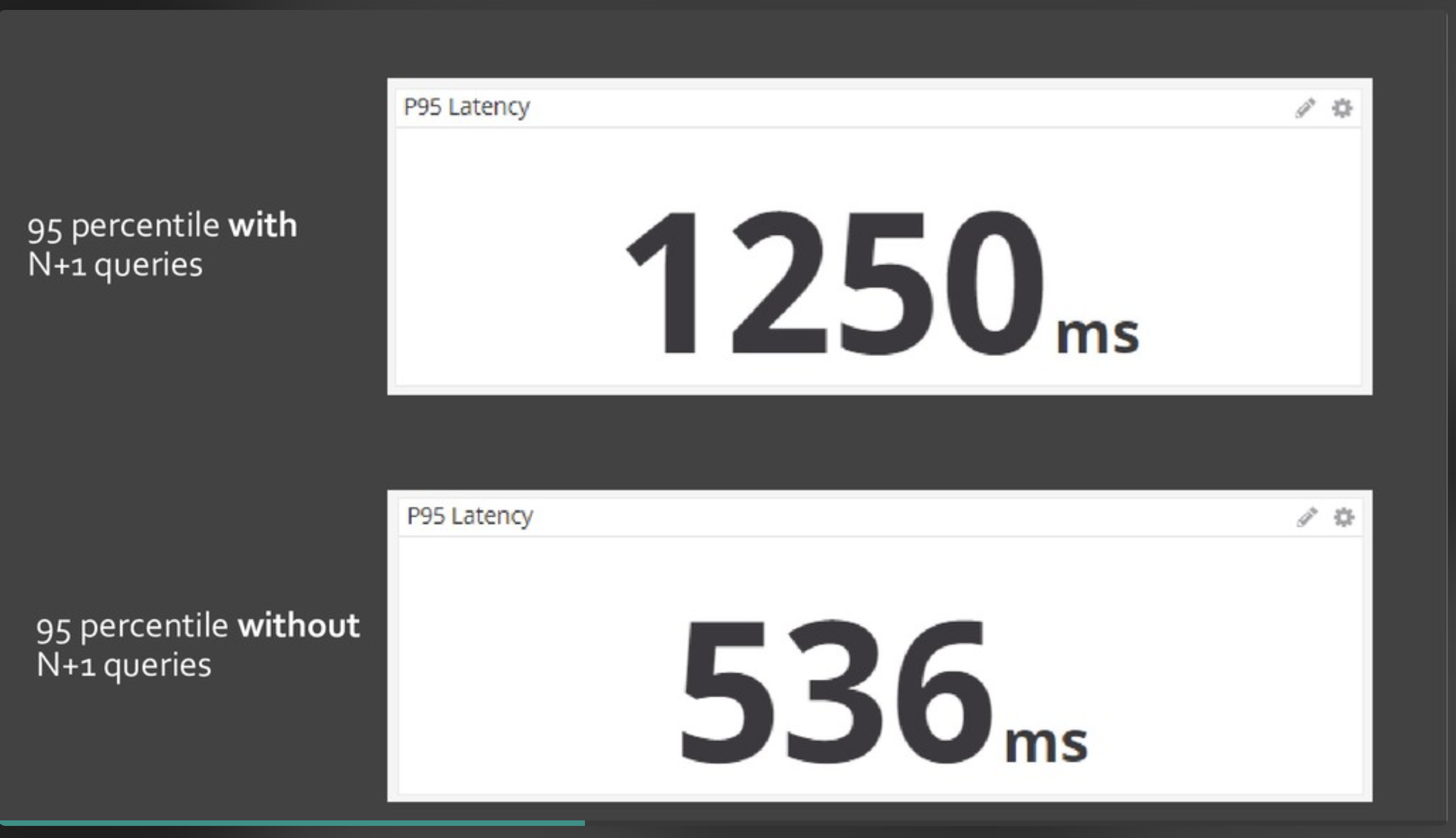

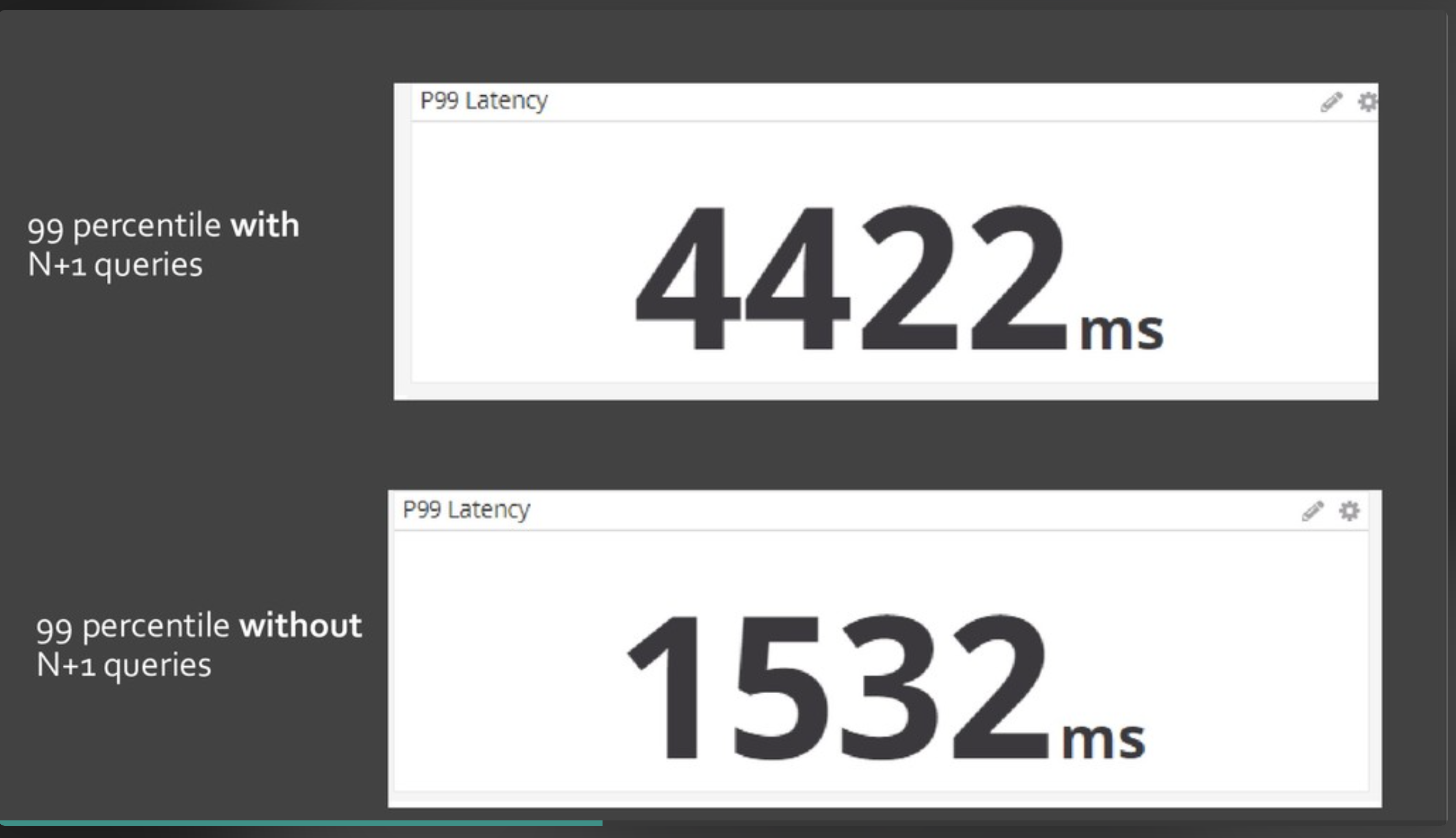

I also have some sample P95/P99 times that were measured an hour prior and an hour after the change was applied

To be honest, I cannot think of a reason why we wouldn't want to turn it on by default, though having it off by default is the safer option. I've successfully rolled this strategy out at 2 large organizations and the performance improvements from each were very noticeable and I haven't seen any lingering issues with this tactic

Excited to see more movement on this and some real world metrics ❤️

I would still love a consideration of the load tree version I offered up if this is being considered for 7.1.0 as it allows us to extend the N+1 loading to tools like Prelude and Twirp. I see @eileencodes just self assigned this she should be very familiar with these tools and how we use them at GitHub.

As requested I split up my initial pr into 3 https://github.com/rails/rails/pull/45161 - The Data structure https://github.com/rails/rails/pull/45413 - Preloading https://github.com/rails/rails/pull/45565 - New active record debugging tools.

These work in a fairly similar way to this pr but offer points for extension.

Here is the original PR Eileen asked me to spilt up https://github.com/rails/rails/pull/45071

For the folks not familiar with twirp or prelude. I am proposing an alternative implementation that makes the data structure which allows for preloading to occur to be a public api open for extension. This pr will keep the loading data structure private.

Also for consideration this implementation does not allow for certain queries to opt out which was a concern raised to me.