reduce the overhead incurred in FetchTensorData

When training model with multiple workers, two ExecuteComputation are not completely pipelined. We had an issue listed here: https://github.com/pytorch/xla/issues/3434 . This issue talks about two methods- RunPostOrder and FetchTensorData. Most part of RunPostOrder method was overlapped with previous execution with the help of this PR: https://github.com/pytorch/xla/pull/3457 . However the FetchTensorData method still remained as a bottleneck. The reason for this was: FetchTensorData runs after previous execution is complete and it runs before the next execution. In this method, it does two things: Reset the IR value and create xla_data. From our experiments resetting these IR values and creating xla_data for the tensors in the graph can be expensive as the graph grows in size. In such cases, it becomes a bottleneck. To avoid such scenarios, this PR creates a copy of the IR values and xla_data for all the tensors when the previous execution is on. It then uses that copy of ir_values for creating a graph for compilation for the next execution.

Out of curiosity, how much speed up does this pr bring.

This pr makes already pretty complicated lazy execution code more confusing, I want to understand how much speed up(and in what circustance, always, small model, large model etc) are we talking about here.

This PR brings 5-6% speedup on BERT large. This should give a significant boost when the execution is comparable to model tracing and other overheads.

Thanks, I will try to review it today. If you have the profile of before and after that would be super helpful.

Thanks, I will try to review it today. If you have the profile of before and after that would be super helpful.

I didn't get a chance to get it on GPU.. Will try to get it this week!

I think I understand the intention of this pr.

FetchData is called after the lock, because it will Remove all Tensor's IR Value and then replace it with a placeholder. These placeholder will be updated after the device execution.

The problem here is that Creating PlaceHolder can be expensive when graph size is large, what this pr does is pre-create those XLAData so the expensive operation can be overlapped.

@aws-rhsoln Unless you have a strong preference I will leave this pr out of the 1.13 release since that will be cut tmr. I will be out for a week so I won't be able to review it next week.

@aws-rhsoln Unless you have a strong preference I will leave this pr out of the 1.13 release since that will be cut tmr. I will be out for a week so I won't be able to review it next week.

Yeah should be fine, we can put this in the next release.

This pr makes already pretty complicated lazy execution code more confusing, I want to understand how much speed up(and in what circustance, always, small model, large model etc) are we talking about here.

This PR brings 5-6% speedup on BERT large. This should give a significant boost when the execution is comparable to model tracing and other overheads.

I tested this change using BERT large on GPU device. Seeing a 3% improvement in throughput.

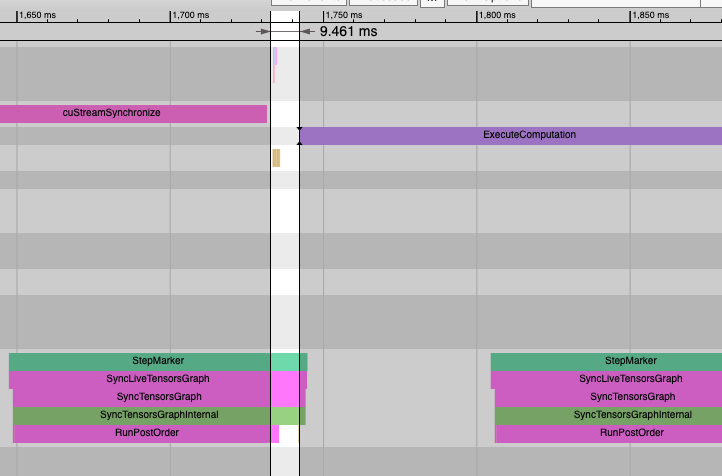

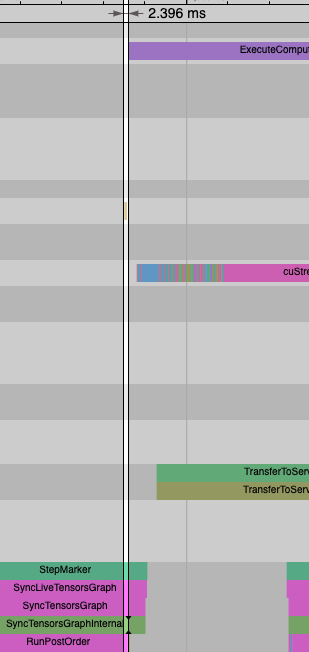

Previously the gap between two executeComputations was about 9ms. This has now reduced to roughly 2.5ms

This mostly lgtm, @alanwaketan can you take another pass? This pr will help us to shrink the gap between executions but it will change a bunch of API. What's the implication to the LTC migration we have?

Changing method signature will definitely affect the LTC migration plan. Wonder if this change can be applied to the upstream LTC as well? @aws-rhsoln

@alanwaketan yea, I think this change should also be upstreamed to LTC.

@alanwaketan yea, I think this change should also be upstreamed to LTC.

Can you point me to the LTC code base, I can take a look

Here we are: https://github.com/pytorch/pytorch/blob/master/torch/csrc/lazy/core/lazy_graph_executor.h. It should be pretty similar.

@alanwaketan yea, I think this change should also be upstreamed to LTC.

Can you point me to the LTC code base, I can take a look

Here we are: https://github.com/pytorch/pytorch/blob/master/torch/csrc/lazy/core/lazy_graph_executor.h. It should be pretty similar.

Oh cool! Taking a look

@alanwaketan yea, I think this change should also be upstreamed to LTC.

Can you point me to the LTC code base, I can take a look

Here we are: https://github.com/pytorch/pytorch/blob/master/torch/csrc/lazy/core/lazy_graph_executor.h. It should be pretty similar.

Oh cool! Taking a look

Okay looks like it is very similar to the one we have here. One question: Do we need to first merge LTC changes and then come here and merge this one? Or both are decoupled?

I guess we can see if the same technique can be applied to the upstream first, i.e., having a pull request first. If the upstream PR works, it shouldn't matter which pull request get merged first. We can review upstream LTC PRs too.

Here is the PR for lazy tensor core: https://github.com/pytorch/pytorch/pull/87119/files @alanwaketan

If this PR looks good, can we merge it? @JackCaoG

@alanwaketan does the upstream one lgty? If upstream pr align the api with this one I can merge this pr to unblock aws team.

@alanwaketan does the upstream one lgty? If upstream pr align the api with this one I can merge this pr to unblock aws team.

Reviewing it.

There were still some unresolved comments on upsteram, @aws-rhsoln can you resolve those. Let me take last pass of this pr then I will merge it.

Thanks @aws-rhsoln !

Thanks for the PR. @JackCaoG did we do any performance analysis on Google side to verify perf gains here? CC @chandrasekhard2

no, I expect the gain is similar. This is not really device dependent.