setupModelDependencies deadlock

Context

- torchserve version: 0.5.3

- torch version: 1.10

- torchvision version [if any]: None

- torchtext version [if any]: None

- torchaudio version [if any]: None

- java version: 11

- Operating System and version: Windows server 2019

Your Environment

- Installed using source? [yes/no]: Yes

- Are you planning to deploy it using docker container? [yes/no]: Yes

- Is it a CPU or GPU environment?: Only CPU

- Using a default/custom handler? [If possible upload/share custom handler/model]: custom handler

- What kind of model is it e.g. vision, text, audio?: NER, text

- Are you planning to use local models from model-store or public url being used e.g. from S3 bucket etc.? No [If public url then provide link.]:

- Provide config.properties, logs [ts.log] and parameters used for model registration/update APIs:

- Link to your project [if any]: Private

Expected Behavior

The model registers successfully, with all packages specified in requirements.txt installed.

Current Behavior

After a few minutes, the Python process (pip install) enters a deadlock. The resource manager shows CPU utilization at 0%. I tried several times, and it always hangs on the same line. There is no error in the logs. The same model works fine on Linux.

Possible Solution

The solution consisted of making a new Runnable class that reads the input and error streams of the process. The Java documentation for the Process class explains why this is needed:

"By default, the created subprocess does not have its own terminal or console. All its standard I/O (i.e. stdin, stdout, stderr) operations will be redirected to the parent process, where they can be accessed via the streams obtained using the methods getOutputStream(), getInputStream(), and getErrorStream(). The parent process uses these streams to feed input to and get output from the subprocess. Because some native platforms only provide limited buffer size for standard input and output streams, failure to promptly write the input stream or read the output stream of the subprocess may cause the subprocess to block, or even deadlock."

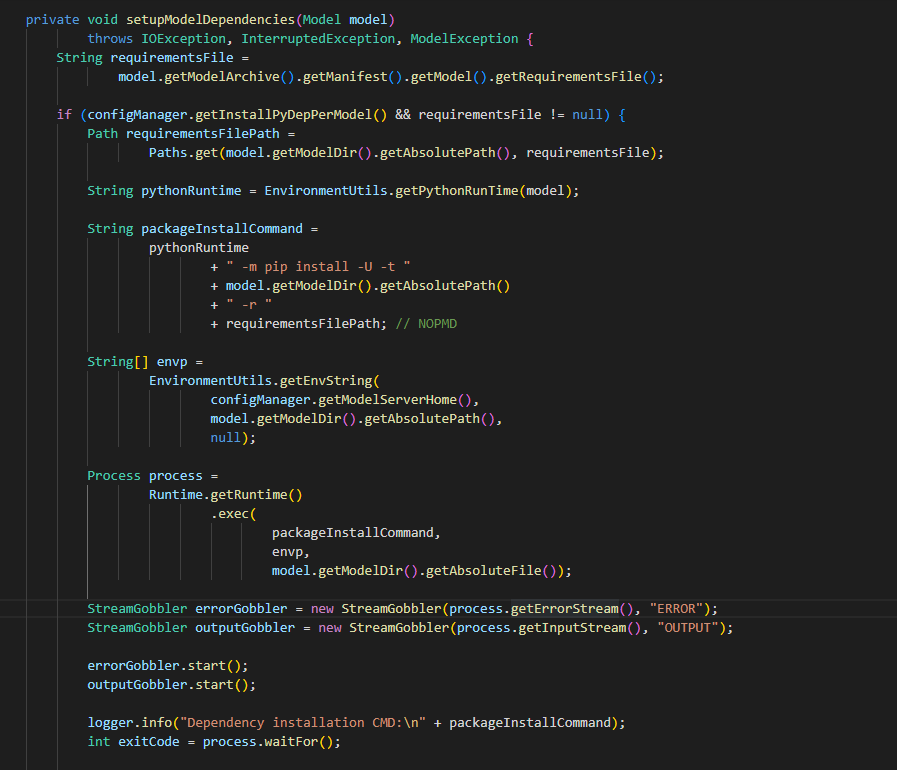

Change setupModelDependencies in the ModelManager class: after creating the process and before calling process.waitFor(), consume the process's output and error streams (getInputStream() and getErrorStream()), either by logging them or by just reading them, so that the pipe buffers never fill up.
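A minimal sketch of the "read before waitFor()" idea, not the actual TorchServe code. It assumes it is acceptable to merge stderr into stdout with ProcessBuilder.redirectErrorStream(true), so one loop on the calling thread can drain everything. The class name DrainBeforeWaitFor and the method runDraining are hypothetical, and `java -version` stands in for the real pip install command.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class DrainBeforeWaitFor {

    /**
     * Starts the command, reads its merged stdout/stderr until EOF,
     * and only then waits for the exit code.
     */
    static int runDraining(List<String> command, List<String> outputLines)
            throws IOException, InterruptedException {
        ProcessBuilder builder = new ProcessBuilder(command);
        builder.redirectErrorStream(true); // stderr is written to the same pipe as stdout
        Process process = builder.start();

        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(process.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            // Reading continuously keeps the OS pipe buffer from filling up.
            while ((line = reader.readLine()) != null) {
                outputLines.add(line); // a real implementation might log the line instead
            }
        }
        return process.waitFor();
    }

    public static void main(String[] args) throws Exception {
        // Stand-in command (assumption): the JVM's own launcher instead of pip.
        String javaBin = System.getProperty("java.home") + "/bin/java";
        List<String> lines = new ArrayList<>();
        int exitCode = runDraining(Arrays.asList(javaBin, "-version"), lines);
        lines.forEach(System.out::println);
        System.out.println("exit code: " + exitCode);
    }
}
```

Merging the two streams avoids extra threads, at the cost of no longer being able to tell stdout and stderr apart in the log.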

Steps to Reproduce

- Try to register a model that has a large number of dependencies on Windows Server 2019. For example, add the package flair to requirements.txt.

Failure Logs [if any]

Deadlock

@jpunis could you provide model mar file to help us reproduce this issue?

@lxning Unfortunately I can't. The project is private. You can try to train a simple model using flair (link: https://github.com/flairNLP/flair/blob/master/resources/docs/TUTORIAL_7_TRAINING_A_MODEL.md), then export it using the model archiver with a requirements file containing flair == 0.9.

As of now, we don't really support handler files with multiprocessing behavior. Our recommended way of achieving parallelism is to either use multiple torchserve workers or increase the batch size.

It's not about multiprocessing or achieving parallelism. It's about reading the output stream. If you don't consume the output and the buffer reaches its maximum size, the processes deadlock.

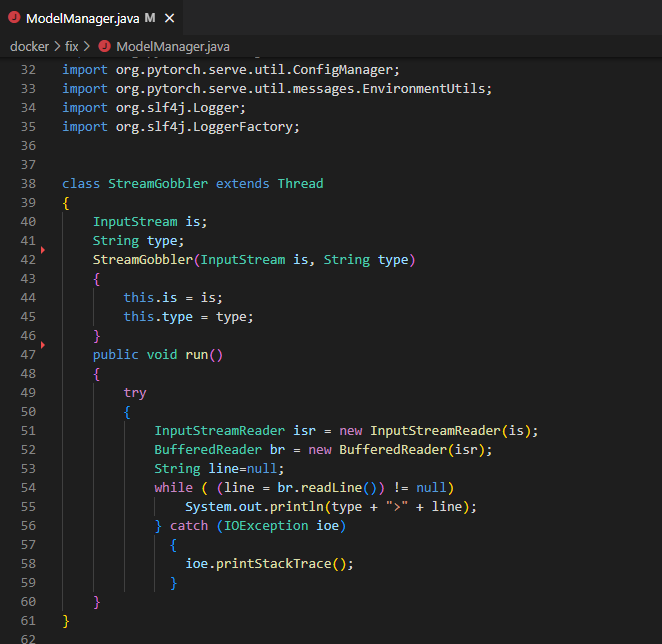

I created a simple class that extends Thread so I can read the output.

In setupModelDependencies I create two objects, one for reading the ERR stream and one for reading the OUT stream.
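The approach described above might look roughly like the following sketch. It is not the code from the screenshot or from TorchServe. The names StreamReaderSketch, StreamReaderThread, and runWithReaders are hypothetical, and `java -version` is used as a stand-in for the pip install command.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;

class StreamReaderSketch {

    /** Hypothetical helper: a thread that reads one stream line by line until EOF. */
    static class StreamReaderThread extends Thread {
        private final InputStream stream;
        private final Consumer<String> sink;

        StreamReaderThread(InputStream stream, Consumer<String> sink) {
            this.stream = stream;
            this.sink = sink;
        }

        @Override
        public void run() {
            try (BufferedReader reader = new BufferedReader(
                    new InputStreamReader(stream, StandardCharsets.UTF_8))) {
                String line;
                while ((line = reader.readLine()) != null) {
                    sink.accept(line); // log or discard; the point is to keep reading
                }
            } catch (IOException e) {
                sink.accept("failed to read process stream: " + e.getMessage());
            }
        }
    }

    /** Starts the command with one reader per stream, then waits for it to exit. */
    static int runWithReaders(List<String> command,
                              Consumer<String> onOut,
                              Consumer<String> onErr)
            throws IOException, InterruptedException {
        Process process = new ProcessBuilder(command).start();
        Thread outReader = new StreamReaderThread(process.getInputStream(), onOut);
        Thread errReader = new StreamReaderThread(process.getErrorStream(), onErr);
        outReader.start();
        errReader.start();

        int exitCode = process.waitFor();
        outReader.join(); // let the readers finish consuming any remaining output
        errReader.join();
        return exitCode;
    }

    public static void main(String[] args) throws Exception {
        // Stand-in command (assumption): the JVM's own launcher instead of pip.
        String javaBin = System.getProperty("java.home") + "/bin/java";
        int exitCode = runWithReaders(
                Arrays.asList(javaBin, "-version"),
                line -> System.out.println("[OUT] " + line),
                line -> System.out.println("[ERR] " + line));
        System.out.println("exit code: " + exitCode);
    }
}
```

Using one thread per stream keeps OUT and ERR separate, and neither pipe can fill up while the other is being read.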
